KotlinDL 0.3 Is Out With ONNX Integration, Object Detection API, 20+ New Models in ModelHub, and Many New Layers

Introducing version 0.3 of our deep learning library, KotlinDL.

KotlinDL 0.3 is now available on Maven Central with a variety of new features. This release introduces many new models in ModelHub (including the first Object Detection and Face Alignment models), the ability to fine-tune Image Recognition models exported to the ONNX format from Keras and PyTorch, an experimental high-level Kotlin API for image recognition, many new layers contributed by community members, and many other changes.

In this post, we’ll walk you through the changes to the Kotlin Deep Learning library in the 0.3 release:

- ONNX integration

- Fine-tuning of ONNX models

- ModelHub: support for the DenseNet, Inception, and NasNet model families

- Object detection with the SSD model

- Experimental high-level API for Image Recognition

- Sound classification with the SoundNet architecture

- 23 new layers, 6 new activation functions, and 2 new initializers

- How to add KotlinDL to your project

- Learn more and share your feedback

ONNX integration

Over the past year, library users have been asking us to add support for working with models saved in the ONNX format.

Open Neural Network Exchange (ONNX) is an open-source format for AI models. It defines an extensible computation graph model and definitions of built-in operators and standard data types. It works well with both of today’s most popular frameworks, TensorFlow and PyTorch.

We use the ONNX Runtime Java API to parse and execute models saved in the `.onnx` file format. You can read more about this API in the project’s documentation.

KotlinDL has a separate `onnx` module that provides the ONNX integration support. To use it in your project, add it as a separate dependency.

There are two ways to run predictions on an ONNX model. The first is to take one of our models from ModelHub, such as LeNet-5, and load it from ONNXModelHub by its model identifier.

The second is to load a model file that is already in the ONNX format: instantiate OnnxInferenceModel and run the predictions.

If the model has a very complex output, for example several tensors, as with YOLOv4 or SSD (which can be loaded from ModelHub, too), you may want to call the predictRaw method to get access to all of the output and parse it manually.
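To give a feel for what parsing raw output manually can involve, here is a minimal plain-Kotlin sketch. It assumes, purely for illustration, that the raw output arrives as a map from tensor names to flat float arrays and that an SSD-like model emits three parallel tensors named `bboxes`, `labels`, and `scores`. The `Detection` class, the `parseDetections` function, the map type, and the tensor names are all hypothetical; the actual structure returned by predictRaw may differ.

```kotlin
// Hypothetical container for one detection; not a KotlinDL class.
data class Detection(val label: Int, val score: Float, val box: List<Float>)

// Assumed layout of the raw output (names and types are assumptions):
//   "bboxes": flat array with 4 values [xMin, yMin, xMax, yMax] per detection
//   "labels": one class index per detection
//   "scores": one confidence value per detection
fun parseDetections(
    raw: Map<String, FloatArray>,
    minScore: Float = 0.5f
): List<Detection> {
    val boxes = raw.getValue("bboxes")
    val labels = raw.getValue("labels")
    val scores = raw.getValue("scores")
    require(boxes.size == 4 * scores.size && labels.size == scores.size) {
        "Output tensors describe different numbers of detections"
    }
    return scores.indices
        .filter { scores[it] >= minScore }          // drop low-confidence detections
        .map { i ->
            Detection(
                label = labels[i].toInt(),
                score = scores[i],
                box = boxes.slice(4 * i until 4 * i + 4)
            )
        }
        .sortedByDescending { it.score }            // most confident first
}
```

Calling `parseDetections(rawOutput, minScore = 0.5f)` on such a map would return only the confident detections, most confident first.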

Finding an appropriate model in ONNXModelHub is easy: just start your search from the top-level object ONNXModels and go deeper to CV or ObjectDetection. Use this chain of inner objects like a unique model identifier to obtain the model itself or its preprocessing method. For example, the SOTA model from 2020 for the Image Recognition task, called EfficientNet, can be found by following ONNXModels.CV.EfficientNet4Lite.

Fine-tuning of ONNX models

Of course, running predictions on ready-made models is good, but what about fine-tuning them a little for our tasks?

Unfortunately, the ONNX Java API does not support training mode for models, but we do not need to train the entire model as a whole to perform Transfer Learning tasks.

The classical approach to the Transfer Learning task is to freeze all layers, except for the last few, and then train the top few layers (the fully connected layers at the top of the network) on a new piece of data, often changing the number of model outputs.

These top layers can be viewed as a small neural network, whose input is the output of a model consisting of frozen layers. These frozen layers can be considered as preprocessing of a small top model.
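The idea can be illustrated without any deep learning library. The following toy sketch in plain Kotlin uses a fixed function as a stand-in for the frozen layers and trains only a tiny logistic-regression "top model" on its output. All names here (`frozenFeatures`, `TopModel`, `fineTune`, `classify`) are hypothetical and are not part of KotlinDL.

```kotlin
import kotlin.math.exp

// Stand-in for the frozen layers: a fixed function with no trainable parameters.
fun frozenFeatures(x: DoubleArray): DoubleArray =
    doubleArrayOf(x.average(), x.maxOrNull() ?: 0.0)

// The small trainable "top model": logistic regression on the frozen features.
class TopModel(featureCount: Int) {
    private val weights = DoubleArray(featureCount)
    private var bias = 0.0

    fun probability(features: DoubleArray): Double {
        var z = bias
        for (i in features.indices) z += weights[i] * features[i]
        return 1.0 / (1.0 + exp(-z))
    }

    // Full-batch gradient descent on the binary cross-entropy loss.
    fun fit(
        features: List<DoubleArray>,
        labels: IntArray,
        epochs: Int = 2000,
        learningRate: Double = 0.5
    ) {
        repeat(epochs) {
            val gradW = DoubleArray(weights.size)
            var gradB = 0.0
            for ((f, y) in features.zip(labels.toList())) {
                val error = probability(f) - y
                for (i in f.indices) gradW[i] += error * f[i]
                gradB += error
            }
            for (i in weights.indices) weights[i] -= learningRate * gradW[i] / features.size
            bias -= learningRate * gradB / features.size
        }
    }
}

// Only the top model is trained; the frozen part just transforms the inputs.
fun fineTune(inputs: List<DoubleArray>, labels: IntArray): TopModel {
    val features = inputs.map(::frozenFeatures)
    return TopModel(featureCount = 2).also { it.fit(features, labels) }
}

fun classify(model: TopModel, input: DoubleArray): Int =
    if (model.probability(frozenFeatures(input)) >= 0.5) 1 else 0
```

Because the frozen part never changes, its output could even be computed once and cached, which is one reason this style of transfer learning is cheap.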

We have implemented this approach in our library: the ONNX model serves as a preprocessing stage, and the top model is a small KotlinDL neural network.

Suppose you have a huge model in Keras or PyTorch that you want to fine-tune in KotlinDL: cut off the last layers from it, export to the ONNX format, load into KotlinDL as an additional preprocessing layer via ONNXModelPreprocessor, describe the missing layers using the KotlinDL API, and train them.

In our example, we load the ResNet50 model from ONNXModelHub and fine-tune it to classify cats and dogs using the embedded Dogs-vs-Cats dataset.

The top model (`topModel` in the example) is a very simple neural network that trains quickly because it has few parameters.

The complete example can be found here.

NOTE: Since there is no API for cutting layers and weights from a model saved in the ONNX format, you need to perform these operations yourself before exporting to ONNX. We plan to add many models from PyTorch and Keras, prepared this way, to ModelHub in the 0.4 release.

ModelHub: support for the DenseNet, Inception, and NasNet model families

In the 0.2 release, KotlinDL added storage of models, which could be loaded from JetBrains S3 storage and cached on disk. We first called it ModelZoo and have since renamed it to ModelHub.

ModelHub contains a collection of Deep Learning models that are pre-trained on large datasets like ImageNet or COCO.

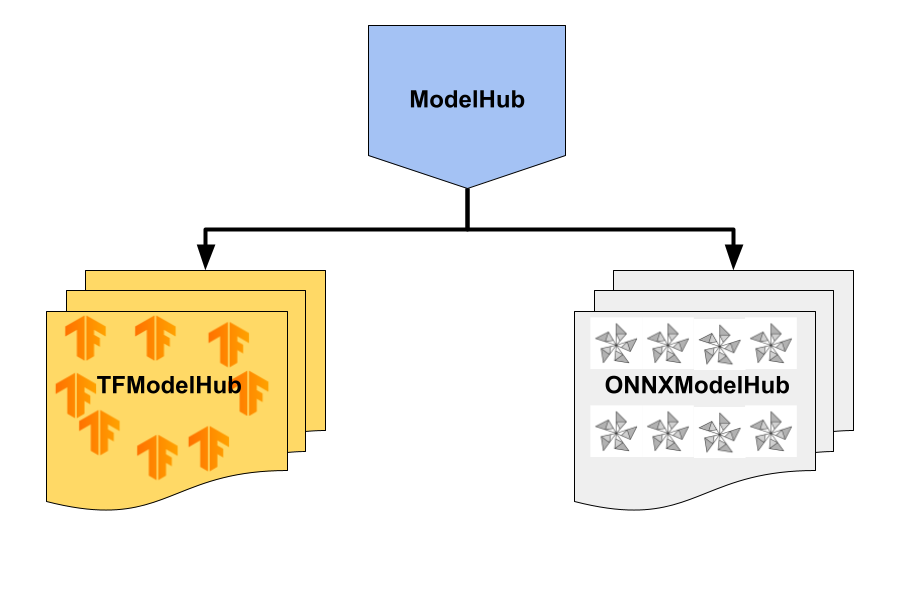

There are two ModelHubs currently: the basic TFModelHub, available in the `api` module, and an additional ONNXModelHub, available in the `onnx` module.

TFModelHub currently supports the following models:

- VGG16

- VGG19

- ResNet18

- ResNet34

- ResNet50

- ResNet101

- ResNet152

- ResNet50v2

- ResNet101v2

- ResNet152v2

- MobileNet

- MobileNetv2

- Inception

- Xception

- DenseNet121

- DenseNet169

- DenseNet201

- NASNetMobile

- NASNetLarge

ONNXModelHub currently supports the following models:

- CV

  - Lenet

  - ResNet18

  - ResNet34

  - ResNet50

  - ResNet101

  - ResNet152

  - ResNet50v2

  - ResNet101v2

  - ResNet152v2

- ObjectDetection

  - SSD

- FaceAlignment

  - Fan2d106

All models in TFModelHub include a special loader of model configs and model weights, as well as the special data preprocessing function that was applied when the models were trained on the ImageNet dataset.

To use one of these models, such as ResNet50, for prediction, load both its configuration and its weights from ModelHub. Once you have the model and the weights, you can use them in KotlinDL.

NOTE: Don’t forget to apply model-specific preprocessing to new data. All the preprocessing functions are included in ModelHub and can be called via the preprocessInput function.
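To show why preprocessing is model-specific, here is a plain-Kotlin sketch of one well-known scheme: the Keras "caffe"-style preprocessing, which reorders RGB to BGR and subtracts the ImageNet channel means without scaling. Whether preprocessInput performs exactly these steps for a given model is an assumption; `caffeStylePreprocess` and `IMAGENET_BGR_MEANS` are hypothetical names used only for this illustration.

```kotlin
// ImageNet per-channel means in BGR order, as used by Keras "caffe"-mode preprocessing.
val IMAGENET_BGR_MEANS = floatArrayOf(103.939f, 116.779f, 123.68f)

// Input: interleaved RGB pixels with values in 0..255.
// Output: interleaved BGR pixels with the channel means subtracted.
fun caffeStylePreprocess(rgb: FloatArray): FloatArray {
    require(rgb.size % 3 == 0) { "Expected interleaved RGB triples" }
    val out = FloatArray(rgb.size)
    for (p in rgb.indices step 3) {
        out[p] = rgb[p + 2] - IMAGENET_BGR_MEANS[0]      // blue
        out[p + 1] = rgb[p + 1] - IMAGENET_BGR_MEANS[1]  // green
        out[p + 2] = rgb[p] - IMAGENET_BGR_MEANS[2]      // red
    }
    return out
}
```

A model trained on inputs prepared this way will generally give poor predictions on raw 0..255 RGB values, which is why the matching preprocessing function should always be applied.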

A complete example of how to use ResNet50 for prediction and transfer learning with additional training on a custom dataset can be found in this tutorial.

NOTE: When working with ONNX models, you do not have to load the weights separately (see the ONNX integration section above).

Object detection with the SSD model

Until v0.3, our ModelHub contained only models for the Image Recognition problem. Starting with this release, we are gradually expanding the library’s capabilities for working with images. We’d like to introduce the Single Shot MultiBox Detector (SSD) model, which solves the Object Detection problem.

Object detection is the task of detecting instances of objects of a certain class within an image.

The SSD model is trained on the COCO dataset, which consists of 328,000 images, each with bounding boxes and per-instance segmentation masks in 80 object categories. The model can be used in real-time prediction to detect objects.

We designed an API for Object Detection, which hides the details of image post- and preprocessing.

The result of the object detection is drawn on a Swing Panel; the image is preprocessed for SSD input.

The famous YOLOv4 model is also available in ONNXModelHub. However, we haven’t added the postprocessing of YOLOv4 output because some operations are missing in the Multik library (the Kotlin analog of NumPy). We’re looking for contributions from the community, so please don’t hesitate to join the effort!

NOTE: Of course, you can use the standard API to load the model and call the predictRaw method to handle the result manually, but we suggest avoiding these difficulties.

Experimental high-level API for Image Recognition

With the ObjectDetection task, we offered a simplified API for predictions. Likewise, with the Image Recognition task, we can simplify the interaction with models loaded from ModelHub by hiding the image preprocessing, compilation, and model initialization from the user.

To illustrate how this works, let’s load and store on disk a pre-trained model of the particular type ImageRecognitionModel. Models of this type are not capable of additional training – they can only make predictions. On the other hand, they are extremely easy to work with.

The syntax for working with pre-trained models uses square brackets, which keeps the code short and readable.
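In Kotlin, bracket syntax such as `hub[modelType]` is normally backed by an `operator fun get`. The sketch below shows the mechanism with hypothetical classes (`ToyModelHub`, `ToyModel`, and `ToyModelType` are not KotlinDL names), assuming the hub is the object being indexed:

```kotlin
// Hypothetical identifiers and classes for illustration; not the KotlinDL API.
enum class ToyModelType { ResNet50, MobileNet }

class ToyModel(val type: ToyModelType) {
    // Pretends to classify an image file and return a human-readable label.
    fun predictLabel(imagePath: String): String =
        "label predicted by $type for $imagePath"
}

class ToyModelHub {
    private val cache = mutableMapOf<ToyModelType, ToyModel>()

    // `operator fun get` is what enables the hub[type] bracket syntax.
    // Models are created on first access and reused afterwards.
    operator fun get(type: ToyModelType): ToyModel =
        cache.getOrPut(type) { ToyModel(type) }
}
```

With these definitions, `ToyModelHub()[ToyModelType.ResNet50].predictLabel("cat.jpg")` reads as a single expression, which is the kind of brevity the bracket syntax is meant to provide.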

ImageRecognitionModel has methods that immediately return human-readable labels and accept image files as input.

This is an experimental API for hardcore backend engineers, for whom the model is a black box with an input and an output. We’d love to hear about your experience with this approach and your thoughts about it.

Sound classification with the SoundNet architecture

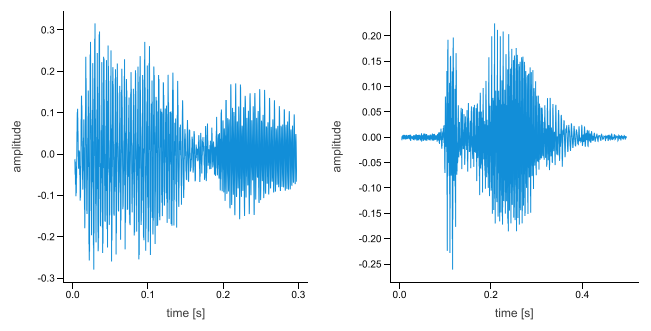

The KotlinDL library is taking its first steps in the audio domain. This release adds a few layers required for building a SoundNet-like model, such as Conv1D, MaxPooling1D, Cropping1D, UpSampling1D, and other layers with the “1D” suffix.

Let’s build a toy neural network inspired by the SoundNet model’s architecture:

This is a CNN that uses only 1D parts for convolutions and max-pooling of the input sound data. This network was able to achieve approximately 55% accuracy on test data from FSDD after ten epochs and approximately 85% after 100 epochs.

SoundBlock is a simple composition of two Conv1D layers and one MaxPool1D layer.
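To make the data flow through such a block concrete, here is a plain-Kotlin sketch of the underlying arithmetic for a single-channel signal: two "valid" 1D convolutions followed by non-overlapping max pooling. It omits activations, biases, padding, and multiple filters, and the function names (`conv1d`, `maxPool1d`, `toySoundBlock`) are hypothetical, so it should be read as an illustration of the operations rather than as the layer implementation.

```kotlin
// "Valid" 1D convolution (cross-correlation, as in most DL frameworks),
// stride 1, single input channel, single filter.
fun conv1d(input: DoubleArray, kernel: DoubleArray): DoubleArray {
    require(kernel.isNotEmpty() && kernel.size <= input.size)
    return DoubleArray(input.size - kernel.size + 1) { start ->
        kernel.indices.sumOf { k -> input[start + k] * kernel[k] }
    }
}

// Non-overlapping max pooling; trailing samples that do not fill a window are dropped.
fun maxPool1d(input: DoubleArray, poolSize: Int): DoubleArray {
    require(poolSize > 0)
    return DoubleArray(input.size / poolSize) { w ->
        var best = input[w * poolSize]
        for (i in 1 until poolSize) best = maxOf(best, input[w * poolSize + i])
        best
    }
}

// Two convolutions followed by one max-pooling step, mirroring the block's layer order.
fun toySoundBlock(
    signal: DoubleArray,
    k1: DoubleArray,
    k2: DoubleArray,
    poolSize: Int
): DoubleArray = maxPool1d(conv1d(conv1d(signal, k1), k2), poolSize)
```

Each valid convolution shortens the signal by `kernel.size - 1` samples, and pooling divides the length by `poolSize`, so stacking several such blocks quickly reduces a long waveform to a compact representation.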

When the model is ready, we can load the Free Spoken Digits Dataset (FSDD) and train the model. The FSDD dataset is a simple audio/speech dataset consisting of recordings of spoken digits in .wav files at 8 kHz.

The trained model can recognize the digit spoken in a sound recorded as a .wav file. Feel free to train your pronunciation with our toy SoundNet model!

The complete code of the example can be found here.

23 new layers, 6 new activation functions, and 2 new initializers

Many of the contributors to this release have added layers to KotlinDL that perform non-trivial logic. With these added layers, you can start working with neural networks that process not only photos but also sound, video, and 3D images:

- Softmax activation layer (by D. Lowl)

- LeakyReLU activation layer (by Masoud Kazemi)

- PReLU activation layer (by Masoud Kazemi)

- ELU activation layer (by Maciej Procyk)

- ThresholdedReLU activation layer (by Masoud Kazemi)

- Conv1D layer (by Maciej Procyk)

- MaxPooling1D layer (by Masoud Kazemi)

- AveragePooling1D layer (by Masoud Kazemi)

- GlobalMaxPooling1D layer (by Masoud Kazemi)

- GlobalAveragePooling1D layer (by Ansh Tyagi)

- Conv3D layer (by Maciej Procyk)

- MaxPooling3D layer (by Ansh Tyagi)

- AveragePooling3D layer (by Masoud Kazemi)

- GlobalAveragePooling3D layer (by Ansh Tyagi)

- GlobalMaxPool2D layer (by Masoud Kazemi)

- GlobalMaxPool3D layer (by Masoud Kazemi)

- Cropping1D and Cropping3D layers (by Masoud Kazemi)

- Permute layer (by Ansh Tyagi)

- RepeatVector layer (by Stan van der Bend)

- UpSampling1D, UpSampling2D, and UpSampling3D layers (by Masoud Kazemi)

Two new initializers:

- Identity initializer (by Hauke Brammer)

- Orthogonal initializer (by Ansh Tyagi)

And six new activation functions:

- Gelu activation function (by Ansh Tyagi)

- HardShrink activation function (by Ansh Tyagi)

- LiSHT activation function (by Veniamin Viflyantsev)

- Mish activation function (by Xa9aX ツ)

- Snake activation function (by cagriyildirimR)

- TanhShrink activation function (by Femi Alaka)

These activation functions are not available in the TensorFlow core package, but we decided to add them, seeing how they’ve been widely used in recent papers.
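For reference, the six functions have short closed forms. The scalar versions below are a plain-Kotlin sketch of their commonly cited definitions, not the library's tensor implementations. A few choices are assumptions: GELU uses the widely used tanh approximation, HardShrink uses a symmetric threshold of 0.5, and Snake uses a frequency of 1 by default.

```kotlin
import kotlin.math.PI
import kotlin.math.abs
import kotlin.math.exp
import kotlin.math.ln
import kotlin.math.pow
import kotlin.math.sin
import kotlin.math.sqrt
import kotlin.math.tanh

// GELU, tanh approximation: 0.5 * x * (1 + tanh(sqrt(2/pi) * (x + 0.044715 * x^3)))
fun gelu(x: Double): Double =
    0.5 * x * (1.0 + tanh(sqrt(2.0 / PI) * (x + 0.044715 * x.pow(3))))

// HardShrink: passes x through unchanged outside [-threshold, threshold], else 0.
fun hardShrink(x: Double, threshold: Double = 0.5): Double =
    if (abs(x) > threshold) x else 0.0

// LiSHT: x * tanh(x), which is non-negative for every x.
fun lisht(x: Double): Double = x * tanh(x)

// Mish: x * tanh(softplus(x)); this naive softplus overflows for very large x.
fun mish(x: Double): Double = x * tanh(ln(1.0 + exp(x)))

// Snake: x + sin^2(frequency * x) / frequency.
fun snake(x: Double, frequency: Double = 1.0): Double =
    x + sin(frequency * x).pow(2) / frequency

// TanhShrink: x - tanh(x).
fun tanhShrink(x: Double): Double = x - tanh(x)
```

All six map 0 to 0, which makes a quick sanity check easy when porting them to another framework.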

In the next release, we’re going to achieve layer parity with the current set of layers in Keras and, perhaps, go further by adding several popular layers from the SOTA implementations of recent models that are not yet included in the main Keras distribution.

We would be delighted to look at your pull requests if you would like to contribute a layer, activation function, callback, or initializer from a recent paper!

How to add KotlinDL to your project

To use the full power of KotlinDL (including the `onnx` and `visualization` modules) in your project, add the following dependencies to your build.gradle file:
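A sketch of what that dependency block could look like, assuming the artifacts are published under the `org.jetbrains.kotlinx` group with version `0.3.0` (please verify the exact coordinates on Maven Central). The parenthesized form shown here should be accepted by both Groovy and Kotlin DSL build scripts:

```kotlin
repositories {
    mavenCentral()
}

dependencies {
    // Core API module; coordinates are assumed, check Maven Central.
    implementation("org.jetbrains.kotlinx:kotlin-deeplearning-api:0.3.0")
    // Optional modules for ONNX support and visualization.
    implementation("org.jetbrains.kotlinx:kotlin-deeplearning-onnx:0.3.0")
    implementation("org.jetbrains.kotlinx:kotlin-deeplearning-visualization:0.3.0")
}
```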

If you don’t need ONNX and visualization, a single dependency on the `api` module is enough.

You can also take advantage of KotlinDL’s functionality in any existing Java project, even if you don’t have any other Kotlin code in it yet. Here is an example of the LeNet-5 model entirely written in Java.

Learn more and share your feedback

We hope you enjoyed this brief overview of the new features in KotlinDL 0.3! For more information, including the up-to-date Readme file, visit the project’s home on GitHub. Be sure to check out the KotlinDL guide, which contains detailed information about the library’s basic and advanced features and covers many of the topics mentioned in this blog post in more detail.

If you have previously used KotlinDL, use the changelog to find out what has changed and how to upgrade your projects to the stable release.

We’d be very thankful if you would report any bugs you find to our issue tracker. We’ll try to fix all the critical issues in the 0.3.1 release.

You are also welcome to join the #kotlindl channel in Kotlin Slack (get an invite here). In this channel, you can ask questions, participate in discussions, and get notifications about the new preview releases and models in ModelHub.

Let’s Kotlin!
