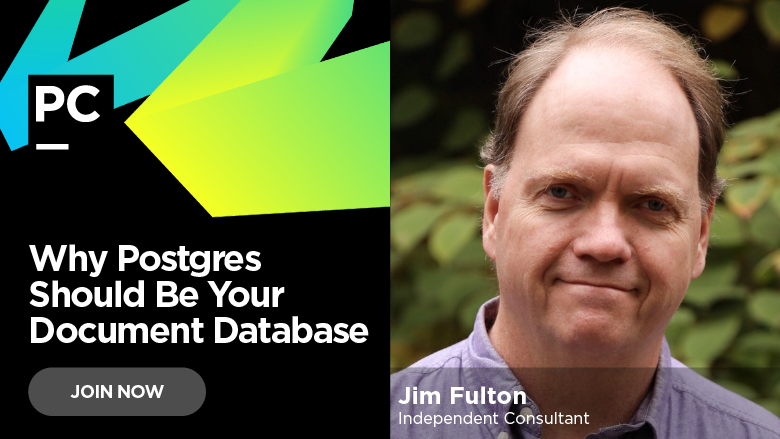

Interview with Jim Fulton for “Why Postgres Should Be Your Document Database” Webinar

Jim Fulton, my friend and former co-worker, was one of the original leaders in the Python community. Over the years he created important Python software (cPickle), Python’s original “killer app” (Zope, as stated in magazine articles), and a leading build system (buildout).

He’s now turning his two decades of experience with Python databases to PostgreSQL and its JSONB column type for high-performance document applications, and we have for a PyCharm webinar on March 20. Jim is an independent consultant for Python and database projects and took some time out for an interview.

Quick summary…what’s the webinar about, who is it for, and why should they care?

The webinar is about using PostgreSQL as a document database and tradeoffs of using PostgreSQL versus non-transactional databases. We’ll look at examples using PyCharm to develop database-intensive web applications in Python.

Let’s rewind to two points in the past. It’s 1994, you and I meet at the first Python “conference” at NIST. What were you doing and what attracted you to Python?

I was supporting data-analysis activities at the USGS. We were using a data manipulation tool named Rand RDB. It was based on the idea of creating a 4GL by using Unix shell pipelines to string together data manipulation components. Data transformations were expressed using Perl expressions. Perl was predominantly a text-manipulation language at the time (Perl 4) and made it easy to introduce computational mistakes with numeric data.

I decided I wanted to replace the data transformation tool with a scripting language that was more careful with numbers. I asked on the Perl mailing list if Perl might grow OO features to allow me more control over how computations were performed. I was assured that Perl would never support OO. :) This was in 1994.

I went shopping for an OO scripting language and found Python. The OO features and the clean indentation-based syntax was very appealing to me. Python had a much more robust type system that already behaved the way I needed it too. It had a short tutorial that allowed me to have a working knowledge of the language in a couple of hours. (The language was much smaller then.) I started using it for other projects and found I could get to working software much quicker than with Perl, which itself was a major improvement over other languages I was using, like Fortran and Ada.

Next…it’s 1998 and the software soon to be known as Zope is being open sourced. What was Zope and why did it matter?

Zope was an application platform that people could use to build web applications completely through the web. At the time, the tools for building web applications were very primitive. There weren’t established Python web frameworks and non-python alternatives were typically very low-level and difficult to use. With the through-the web model, people could install the application and get started building their applications very quickly. Not only was there no edit-compile cycle, there was no edit-process-restart cycle. Changes were visible right away.

It provided an “object file system”. Content was arranged in a hierarchy. People could assemble applications using pre-built components such as folders, files, templates, SQL “methods” and Python methods. Zope’s URLs reflected the hierarchy. Competing systems typically hid hierarchies behind big fancy numbers (http://example.com/foo/0,1245,,00.html).

Zope also implemented “environmental acquisition”, allowing objects lower in the hierarchy to access data from objects higher in the hierarchy. This was a form of delegation that was similar to the way JavaScript objects get data from their prototypes. Acquisition supported component reuse and hierarchical security.

Although I didn’t realize it at the time, Zope’s through-the-web development experience with real-time changes backed by an object-oriented database was a lot like traditional Smalltalk environments with persistent memory images. I’m a big fan of Smalltalk, and was very happy to learn that Adele Goldberg, one of the creators of Smalltalk used Zope for a time.

Zope contained an object database, written in Python. Why, what was interesting about it, and was it the right decision?

The object-database, ZODB, both suggested and enabled Zope’s hierarchical through-the-web development model. It made data management as simple as saving attributes or items on Python objects. ZODB has a lot of interesting features beyond being a transactional ACID object-oriented database, including a pluggable layered storage architecture, multi-level caching, conflict resolution, transactional undo, and time travel.

Building the ZODB was the right decision, because Zope wouldn’t have existed without it.

PostgreSQL has rich JSON support. What should developers think about it?

It’s an opportunity to get some of the benefits of a document-oriented database:

- Flexible schema

- Composite objects avoiding joins

- Ease of getting started

…while keeping the benefits of Postgres:

- ACID/transactions

- The ability to easily combine variable and hierarchical data with a relational schema.

Another way to think of this is, if you have chunks of data that are always used together and those data aren’t easily described by flat records, then it may make sense to leverage JSON data types, because you can avoid joins and ORMs.

The JSON support is well-integrated with the rest of PostgreSQL. You can do relational joins between JSON columns and other columns, as well as self-joins to query related values.

BTW, when we talk about JSON in PostgreSQL, we really mean the JSONB type, not the JSON type. While there are theoretical uses for the JSON type, it’s mostly a decoy, which many newcomers fall for. :)

How has this impacted some of the design you do for customer work?

It’s allowed me to pursue flexible OO designs while still taking advantage of PostgreSQL’s strengths.

First of all, there are some standard flexible indexes for JSONB columns that support a wide variety of common queries. For example, suppose I have documents that describe tasks like:

{

"submitter": "JimF",

"title": "Make order processing asynchronous",

"Points": 7,

"tags": ["backend", "enhancement"]

}

I can search for tasks by tag:

select from tasks where data @> '{"tags": ["backend"]}'

And the search will be supported by the generic index, as will search by submitter:

select from tasks where data @> '{"submitter": "JimF"}'

The generic index only does exact matches, but we can also use expression indexes to create BTrees indexes:

create index on tasks (((data ->>’points’)::int))

Which supports queries like:

select from tasks where (data->>’points’)::int < 9

Or we can create a fulltext index on title:

create index on tasks using gin (((data->>'title')::tsvector))

Supporting searches like:

select * from t where (v->>'title')::tsvector @@ 'asynchronous'

Some things that are important to note:

- The queries above are possible without indexes

- The last two examples indexed expressions

The ability to index expressions provides a lot of power, especially when combined with stored procedures. What’s happening with an expression index is that the expression is evaluated at index time, not search time. (It’s a little more complicated than that…expressions may be evaluated at search time depending on memory and result sizes.)

The projects I’ve worked on often involve heterogeneous data where the way data are accessed might depend on content type. I can index a text-extraction function that extracts text in a content-type specific way and then do text searches that span different content types. Function calls can be indexed like any other expression.

You’re a big fan of transactions. Can you give a sneak peek of what you’ll cover in the webinar about transactions?

Well, I’m a big fan of correct software and transactions make correctness a lot easier because:

- Transactions are atomic. If there’s an error after data have been saved, the database will rollback the changes. Without atomicity, the programmer would have to make updates that canceled previous change, which might fail.

- Transactions provide consistency not just within, but between different database records.

- Transactions are isolated. There can be multiple hosts, processes and threads operating on the data and transactions prevent them from interfering. Transactions provide an alternative to other concurrency-control techniques that are notorious for producing errors.

I’ll talk about why transactions are important and the tradeoffs one should consider when considering non-transactional databases. I’ll touch on ways that web frameworks support transaction management and challenges in dealing with inherently non-transactional systems like email.

Your talk is about PostgreSQL as a document database. But you actually propose a further step into objects. Can you explain?

Managing data as JSON documents provides a lot of flexibility and frees developers from performing complex joins just to assemble objects. ORMs can help with this of course, but with a lot of additional complexity. In fact, with an ORM, the joins are still there, they’re just expressed a different way.

But documents are fairly dumb. Imagine if Python didn’t have classes and all you had were dictionaries, lists, strings, numbers, booleans and None.

Recently I created Newt DB to combine the ease of use of ZODB with the power of PostgreSQL. Newt DB provides a full object-oriented database on top of Postgres. It saves data using 2 representations, python pickles and JSON documents. The pickle format is far more expressive than JSON and so supports the full range of Python types, including application-defined classes. The JSON representation isn’t full fidelity, but is optimized to facilitate search, display and reporting in PostgreSQL and other, non-Python clients.

Modeling objects as Python + JSON avoids joins to assemble objects. Cross-object references avoid joins in Python. (Technically, these are self-joins, but they’re automated transparently.) As objects are accessed, they’re loaded and cached transparently.

Subscribe to PyCharm Blog updates