Scala Plugin

Scala Plugin for IntelliJ IDEA and Android Studio

IntelliJ Scala Plugin 2025.1 Is Out!

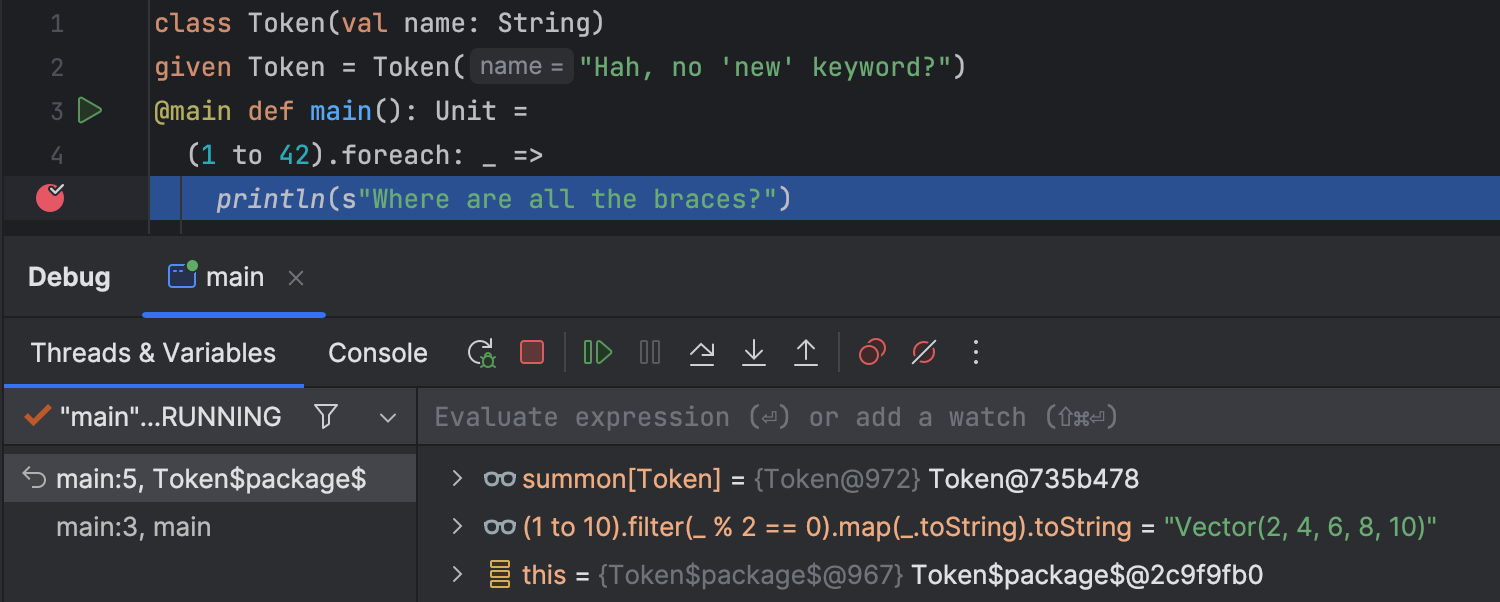

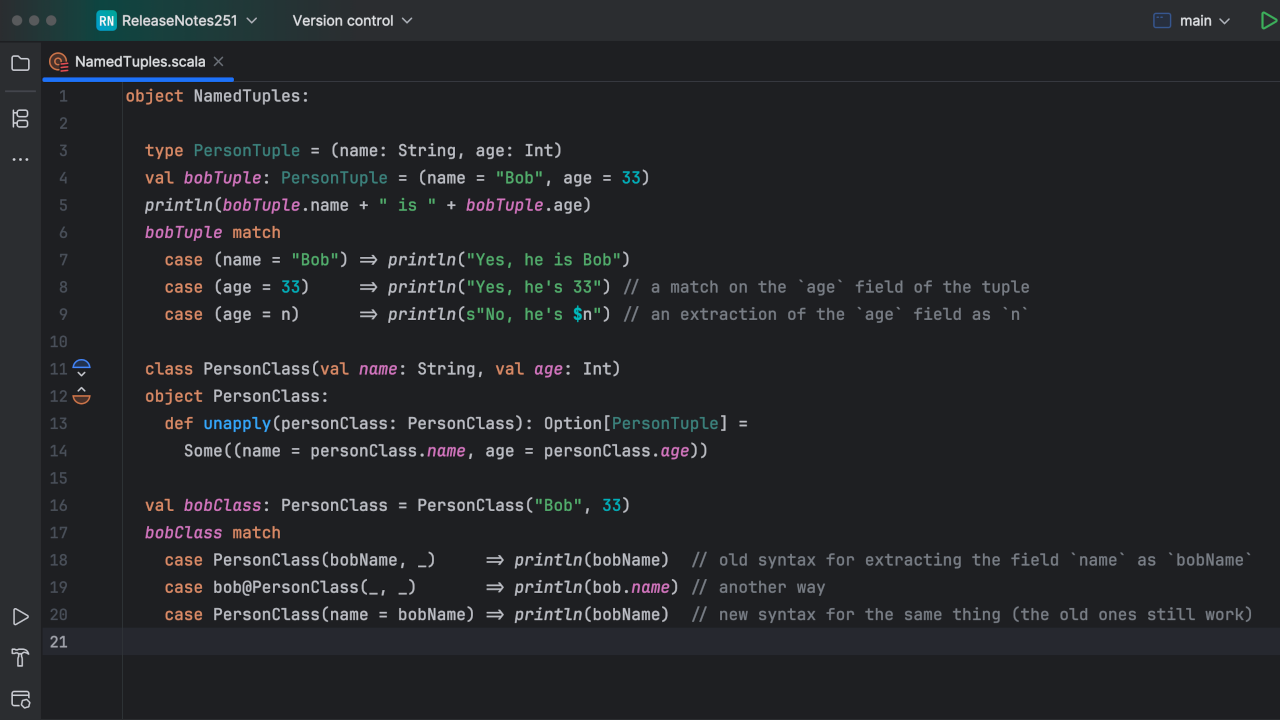

Scala Plugin 2025.1 is out! The update brings:

- Support for the new syntax of context bounds and givens
- Improved handling of named tuples
- A new action: "Generate sbt managed sources"
- X-Ray hints for apply methods and all parameter names
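For readers who have not yet seen the new syntax of context bounds and givens, here is a minimal sketch of what such code can look like. It assumes Scala 3.6 or later, where this syntax is available by default. The type classes `Ord` and `Show` and the helpers `largest` and `describeLargest` are hypothetical names invented for illustration; they are not part of the plugin or the standard library.

```scala
// Assumption: Scala 3.6+. All names below are illustrative only.
trait Ord[A]:
  def compare(x: A, y: A): Int

trait Show[A]:
  def show(a: A): String

// New given syntax: the implemented type, then a colon and the body
// (previously written as `given Ord[Int] with`).
given Ord[Int]:
  def compare(x: Int, y: Int): Int = java.lang.Integer.compare(x, y)

given Show[Int]:
  def show(a: Int): String = s"#$a"

// Conditional given: type parameters and context bounds come first,
// followed by `=>` and the type being implemented.
given [A: Ord] => Ord[Option[A]]:
  def compare(x: Option[A], y: Option[A]): Int = (x, y) match
    case (Some(a), Some(b)) => summon[Ord[A]].compare(a, b)
    case (None, None)       => 0
    case (None, _)          => -1
    case (_, None)          => 1

// Named context bound: `as ord` gives the evidence a usable name.
def largest[A: Ord as ord](x: A, y: A): A =
  if ord.compare(x, y) >= 0 then x else y

// Aggregate context bound: several bounds grouped in braces.
def describeLargest[A: {Ord, Show}](x: A, y: A): String =
  summon[Show[A]].show(largest(x, y))
```

Calling `largest(3, 7)` resolves the `Ord[Int]` given, while `largest(Option(1), Option.empty[Int])` goes through the conditional given for `Option`.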