JetBrains AI Assistant Integrates Google Gemini and Local LLMs

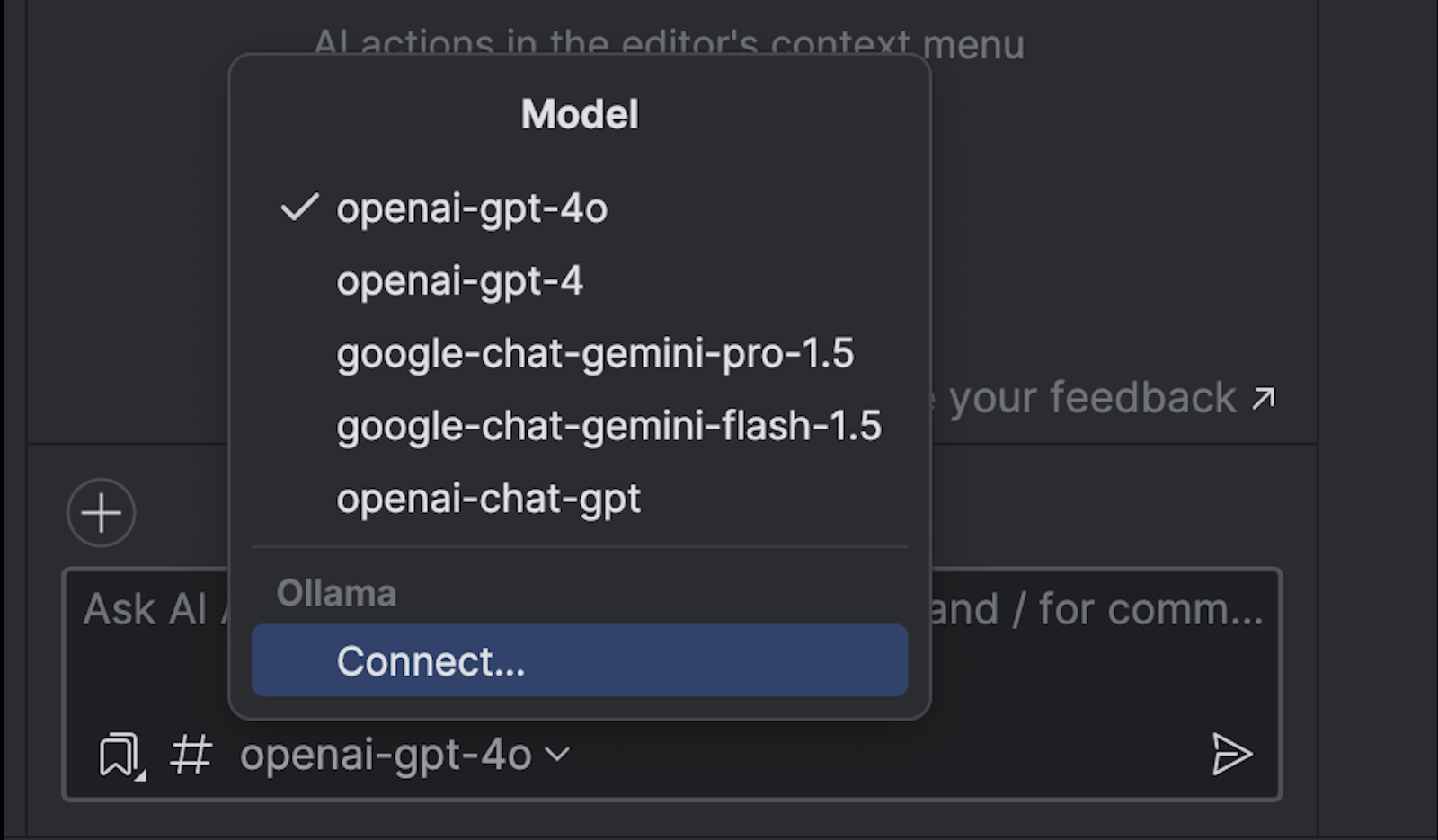

We’ve now added Gemini 1.5 Pro and Gemini 1.5 Flash to the lineup of LLMs used by JetBrains AI Assistant. They are available alongside OpenAI models and local models.

What’s special about Google models?

Gemini 1.5 Pro and Gemini 1.5 Flash on Google Cloud’s Vertex AI deliver advanced reasoning and impressive performance, unlocking several new use cases. Gemini 1.5 Flash is especially useful when low latency and cost efficiency at high volume are paramount.

How to try Google models

Starting from the 2024.3 version of JetBrains AI Assistant, you can pick your preferred LLM right in the AI chat. This expanded selection allows you to customize the AI chat’s responses to your specific workflow, offering a more adaptable and personalized experience.

Local model support via Ollama

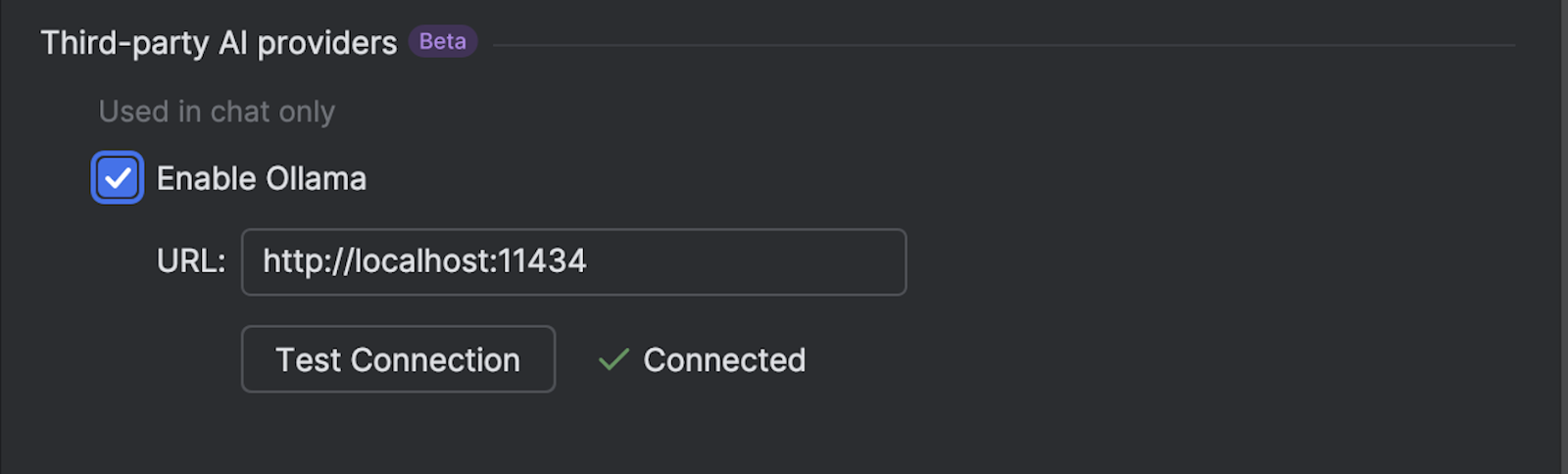

In addition to cloud-based models, you can now connect the AI chat to local models available through Ollama. This is particularly useful if you need more control over your AI models, and it offers enhanced privacy, flexibility, and the ability to run models on local hardware.

To add an Ollama model to the chat, enable Ollama support in AI Assistant’s settings and configure the connection to your Ollama instance.
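Before configuring the connection, it can help to confirm that your Ollama instance is reachable and see which models it has available. The following is a minimal sketch that is independent of AI Assistant: it assumes Ollama is listening on its default address, `http://localhost:11434`, and queries Ollama's `/api/tags` endpoint, which lists locally pulled models. The helper names `parse_model_names` and `list_ollama_models` are hypothetical and chosen only for illustration.

```python
import json
from urllib.request import urlopen

# Assumption: Ollama's default local address. Change it if your instance runs elsewhere.
DEFAULT_OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: dict) -> list[str]:
    """Extract model names from the JSON body returned by Ollama's /api/tags."""
    return [m["name"] for m in payload.get("models", []) if "name" in m]


def list_ollama_models(base_url: str = DEFAULT_OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask a running Ollama instance which models are available locally."""
    with urlopen(f"{base_url.rstrip('/')}/api/tags", timeout=timeout) as response:
        return parse_model_names(json.load(response))


if __name__ == "__main__":
    try:
        names = list_ollama_models()
    except OSError as err:  # connection refused, timeout, DNS failure, etc.
        print(f"Could not reach Ollama: {err}")
    else:
        print("Available models:", ", ".join(names) or "(none pulled yet)")
```

If the script reports a connection error, the instance is probably not running or is listening on a different address than the one you plan to enter in the settings.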

Explore these new models, and let us know what you think!
