JetBrains AI


The Future of AI in Software Development

AI is no longer a distant idea. It’s already here and changing how we build software. As it advances, new questions emerge about its impact.

How deeply will AI be woven into software development? What new opportunities will emerge for companies building AI-powered tools? Perhaps most importantly, how will developers and AI collaborate over the next three to five years?

The AI 2027 outlook highlights the need for practical use, domain-specific design, and a focus on real results over hype.

In this article, we’ll offer insights into how AI is transforming the development landscape today and its potential impact on software development over the coming decade. Here’s an in-depth look at the challenges and opportunities that lie ahead for developers and organizations.

Two possible futures

We’ll explore two potential timelines for the future: one where AGI fundamentally reshapes software development, and another where AI merely enhances our current practices.

Here’s a quick recap of the trends we’ll have to stay on top of in order to know which future we’re headed toward:

First off is the rise of Artificial General Intelligence (AGI), where machines gain human-like cognitive abilities. OpenAI’s CEO, Sam Altman, has expressed confidence about AGI being right around the corner. However, experts like Meta’s Yann LeCun are more cautious, arguing that current systems remain far from that goal.

Meanwhile, the frontier keeps moving: at Google I/O 2025, Google introduced Gemini 2.5 Pro with “Deep Think” reasoning, plus new tools like AI Mode for Search and Flow, an AI filmmaking tool built on its Veo video models.

The second trend is AI enhancing software development through advanced tools that assist developers with coding and problem-solving. Platforms like GitHub Copilot or JetBrains AI integrate models from multiple AI providers, allowing developers to choose the most effective AI assistance for their specific tasks.

Tools such as JetBrains Junie, Cursor Composer, Codex, or Windsurf Flows can automate tasks across an entire codebase at a pace that was unimaginable only a few years ago. This boosts productivity and democratizes coding.

As AI continues to evolve, the software development landscape stands at a crossroads between the pursuit of AGI and the augmentation of human capabilities through AI-powered tools.

Scenario 1: A full-blown AI future

As technology gets closer to the level of AGI, we’re beginning to see AI agents that don’t just assist, but actively write code. These systems can handle complex engineering tasks, with capabilities approaching those of senior-level developers. Early examples include Devin, which can plan and execute full development workflows, and Honeycomb, which integrates autonomous agents into real-world software pipelines. Progress is often measured with benchmarks like SWE-bench, which tests whether a system can resolve real GitHub issues drawn from popular open-source Python repositories. Taken together, these efforts mark the emergence of autonomous AI agents that we can call “AI developers”.
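To make “resolving an issue” concrete: SWE-bench applies a model’s patch to the repository and runs two groups of tests, those the fix is supposed to make pass (FAIL_TO_PASS) and those that passed before and must keep passing (PASS_TO_PASS). The sketch below is a simplified illustration of that scoring rule, not the official evaluation harness; the `TaskResult` type, the helper names, and the instance IDs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    """Test outcomes for one benchmark task after a model's patch is applied (hypothetical type)."""
    instance_id: str
    fail_to_pass: dict[str, bool]  # tests the fix is supposed to make pass
    pass_to_pass: dict[str, bool]  # tests that passed before and must still pass


def is_resolved(result: TaskResult) -> bool:
    # Resolved only if every target test now passes and nothing regressed.
    return all(result.fail_to_pass.values()) and all(result.pass_to_pass.values())


def resolve_rate(results: list[TaskResult]) -> float:
    """Fraction of tasks resolved; 0.0 for an empty list."""
    if not results:
        return 0.0
    return sum(is_resolved(r) for r in results) / len(results)


results = [
    TaskResult("example__repo-1", {"test_fix": True}, {"test_existing": True}),
    TaskResult("example__repo-2", {"test_fix": True}, {"test_existing": False}),  # regression
]
print(resolve_rate(results))  # 0.5
```

The second task shows why the regression check matters: a patch that fixes the target test but breaks an existing one does not count as resolved.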

We can even imagine AI developers eventually working together in teams, much as humans do today, each with its own specialty (development, testing, project coordination, etc.). This idea is gaining traction in research. Examples include AgentCoder, which splits code generation across specialized programmer, test-designer, and test-executor agents, and RepoAgent, which applies LLM agents to generating and maintaining documentation across real repositories.

AI developers need a home, too

AI developers will demand their own workspace ecosystem. This isn’t just a theoretical concept – it’s an immediate challenge facing organizations integrating AI into their development workflows.

This evolution will unfold naturally over the next few years, from enhanced ML capabilities in enterprise environments to fully customizable AI developer solutions. For some AI developers, this “home” may exist within centralized cloud-based platforms.

For others, local inference offers an alternative path. Tools like Ollama already allow powerful models to run directly on consumer hardware. With quantization, even models with 30B or more parameters can run locally on high-end GPUs, opening the door to secure, offline-first AI development environments. Organizations that recognize and adapt to this shift early will gain a meaningful edge in the AI-driven development landscape.
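Why quantization matters for local inference comes down to simple arithmetic: the weights alone take roughly parameters × bits per weight ÷ 8 bytes. A minimal sketch, assuming decimal gigabytes and ignoring the KV cache, activations, and runtime overhead. Real 4-bit formats, such as the GGUF quantizations Ollama commonly uses, also store scaling data, so actual model files come out somewhat larger than this estimate.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes (1 GB = 1e9 bytes).

    Ignores the KV cache, activations, and runtime overhead, which add more on top.
    """
    bytes_total = num_params * bits_per_weight / 8
    return bytes_total / 1e9


params = 30e9  # a 30B-parameter model
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit: {weight_memory_gb(params, bits):.0f} GB")
# 16-bit: 60 GB
#  8-bit: 30 GB
#  4-bit: 15 GB
```

At 16 bits, a 30B model needs about 60 GB just for its weights, well beyond a 24 GB consumer card such as the RTX 4090; at 4 bits it drops to about 15 GB, leaving headroom for the context cache.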

AI teams need tools

As teams of AI developers (let’s call them “AI teams”) become more advanced, they’ll need specialized tools to manage every part of their workflow, from coding and testing to handling documentation and requirements. The AutoCodeRover team was among the first to show how advanced tools make it far easier for agents to solve problems. Since then, we’ve seen an uptick in environments and platforms for AI developers: hide, OpenHands, and others. AI teams rely on robust, integrated platforms, just like humans do.

The ultimate breakthrough would be a platform that gives AI models direct, seamless access to all the essential tools in one centralized space. This setup would allow AI developers to operate as smoothly as human developers within a single interface by eliminating the need for complex integrations.

Early efforts in this direction include OpenAI’s Computer-Using Agent (CUA), which enables models to interact with existing software on a desktop, and Anthropic’s computer use capability, which lets Claude operate a computer by reading screenshots and controlling the mouse and keyboard.

Both approaches reflect a shared goal: equipping AI developers with the same types of digital toolkits that humans use.
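One way to picture such a centralized platform is a single registry where tools are described and invoked by name, so an agent never needs bespoke glue code for each one. The sketch below is a hypothetical design: `ToolRegistry` and the stubbed `search_code` tool are illustrative names, not part of CUA, computer use, or any other product’s API.

```python
from typing import Callable


class ToolRegistry:
    """Hypothetical single access point where an agent discovers and calls tools by name."""

    def __init__(self) -> None:
        self._tools: dict[str, tuple[str, Callable[..., str]]] = {}

    def register(self, name: str, description: str, fn: Callable[..., str]) -> None:
        self._tools[name] = (description, fn)

    def describe(self) -> dict[str, str]:
        # The catalog an agent would read when deciding which tool to use.
        return {name: desc for name, (desc, _) in self._tools.items()}

    def call(self, name: str, **kwargs: str) -> str:
        # Unknown tools return an error message the agent can react to, instead of crashing.
        if name not in self._tools:
            return f"error: unknown tool '{name}'"
        _, fn = self._tools[name]
        return fn(**kwargs)


def search_code(query: str) -> str:
    # Stub tool: a real one would query an index of the repository.
    files = {"app.py": "def main():\n    print('hi')\n"}
    hits = [
        f"{path}:{lineno}"
        for path, text in files.items()
        for lineno, line in enumerate(text.splitlines(), start=1)
        if query in line
    ]
    return "\n".join(hits) or "no matches"


registry = ToolRegistry()
registry.register("search_code", "Search the repository for a string", search_code)
print(registry.describe())
print(registry.call("search_code", query="print"))  # app.py:2
```

Returning errors as plain text rather than raising keeps the agent in the loop: it can read the message and pick a different tool.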

Humans need next-generation IDEs to collaborate with AI teams

Even with AI teams doing the heavy lifting, we’ll still need humans to guide, refine, and approve the final product. Next-generation IDEs will be essential for this, bridging the gap between human insight and AI productivity.

In fact, there’s an entire new field of study emerging, known as Human-AI eXperience (HAX). This field is all about finding efficient ways for human developers to interact with their AI tools. Soon enough, we’ll be faced with a flood of AI-generated code, and we’ll need IDEs that can understand and verify this code for us, while we humans stay focused on the big picture (setting requirements, visualizing project progress, etc.).

At JetBrains, we’re exploring more effective ways for humans to collaborate with AI systems. We’re staying on top of broader developments in this space, and view tools like Claude Code, Warp AI, Gemini CLI, Google Jules, Cursor, Codeium, and DevGPT as strongly indicative of how the landscape is shifting.

AI teams need a marketplace

As AI developers produce more code, they’ll need a dedicated marketplace – a central hub for storing, finding, and reusing AI-generated code (like GitHub for AI agents).

Existing platforms aren’t built for this use case. They assume human intent, manual review, and slow feedback cycles. An AI-first marketplace would need different foundations, like built-in sandboxing to safely test unknown contributions, automated verification pipelines to check compatibility and quality, and metadata designed to help AI developers understand, rate, and select what they need.

Without these features, reuse becomes risky and inefficient. With them, AI teams can move faster, collaborate safely, and continuously improve shared assets.
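The three foundations named above (sandboxed testing, automated verification, and machine-readable metadata) can be combined into a simple gate on what an agent is allowed to reuse. This is a hypothetical sketch: the `Listing` fields, the license allow-list, and the rating threshold are assumptions chosen for illustration, not features of any existing marketplace.

```python
from dataclasses import dataclass, field


@dataclass
class Listing:
    """Hypothetical metadata an AI-first marketplace might attach to a shared component."""
    name: str
    license: str
    sandbox_tests_passed: bool  # outcome of running the component in an isolated sandbox
    compatible_with: set[str] = field(default_factory=set)  # runtimes it was verified against
    rating: float = 0.0  # aggregate score from agents that reused it, 0 to 5


def select(listings: list[Listing], runtime: str, allowed_licenses: set[str],
           min_rating: float = 3.0) -> list[Listing]:
    """Return reusable listings, best-rated first; anything unverified is never offered."""
    candidates = [
        item for item in listings
        if item.sandbox_tests_passed
        and runtime in item.compatible_with
        and item.license in allowed_licenses
        and item.rating >= min_rating
    ]
    return sorted(candidates, key=lambda item: item.rating, reverse=True)


catalog = [
    Listing("csv-parser", "MIT", True, {"python3.12"}, 4.6),
    Listing("fast-csv", "MIT", False, {"python3.12"}, 4.9),     # failed its sandbox run
    Listing("csv-lite", "GPL-3.0", True, {"python3.12"}, 4.2),  # license not allowed here
]
print([item.name for item in select(catalog, "python3.12", {"MIT", "Apache-2.0"})])
# ['csv-parser']
```

Note that the highest-rated listing is rejected: in this design, verification acts as a hard filter before popularity is considered at all.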

AI will face regulations

The AI learning curve isn’t specific to developers. Lawyers and policymakers will also have to learn the ropes.

The first problem is safety. AI is not immune to making mistakes, and those mistakes can cause real-world damage. Studies have shown that trustworthy AI-generated code may still be a long way off. For the foreseeable future, we should treat AI-generated code with the same scrutiny that we apply to, say, self-driving cars, which means formal audits and certifications are a must.

Secondly, the prevalence of AI may raise new questions about intellectual property and how it is defined. For example, when we use publicly available code to train models, are we infringing on the rights of the original authors? Recent lawsuits, such as those involving GitHub Copilot and OpenAI, force us to ask this question. Attribution and licensing will only become more complex as AI generates code at scale.

This creates a major opportunity for companies that develop tools to inspect and certify AI-generated code. Tools that can identify AI-created code, flag potential risks, and approve it will protect users and build trust.

Software may look different from the inside

AI developers could end up training themselves, learning and evolving their coding patterns through countless iterations of trial and error. Early work in this direction appeared in 2022, including projects like CodeRL, which applied reinforcement learning to optimize code generation based on execution feedback. While the code might still be written in familiar languages like Java or Kotlin, its structure could be optimized entirely for machine efficiency, not human readability.
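Execution feedback of the kind CodeRL used can be boiled down to a reward function: run the generated program, and give it a lower score the earlier it fails. The graded levels below follow that general idea (a compile error is worse than a runtime error, which is worse than a failed test); treat the exact values as illustrative. `execution_reward` is a hypothetical helper, and calling `exec` on untrusted model output is only acceptable inside a sandbox.

```python
def execution_reward(program: str, tests: list[tuple[str, object]]) -> float:
    """Score generated code by executing it (hypothetical helper; reward values illustrative).

    `tests` holds (expression, expected_value) pairs evaluated after the program runs.
    WARNING: exec() runs arbitrary code; only do this inside a sandbox.
    """
    try:
        code = compile(program, "<candidate>", "exec")
    except SyntaxError:
        return -1.0  # does not even compile
    namespace: dict = {}
    try:
        exec(code, namespace)
        passed = [eval(expr, namespace) == expected for expr, expected in tests]
    except Exception:
        return -0.6  # compiles, but crashes at runtime
    if not all(passed):
        return -0.3  # runs, but fails at least one test
    return 1.0  # passes every test


tests = [("add(2, 3)", 5), ("add(-1, 1)", 0)]
print(execution_reward("def add(a, b): return a + b", tests))  # 1.0
print(execution_reward("def add(a, b): return a - b", tests))  # -0.3
print(execution_reward("def add(a, b): return a + c", tests))  # -0.6
print(execution_reward("def add(a, b) return a + b", tests))   # -1.0
```

In a reinforcement learning loop, a score like this replaces a human label: the model is nudged toward programs that earn higher rewards, without anyone reading the code.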

An even more frightening thought is that we could end up with new programming languages that don’t follow any of the patterns we humans are familiar with, because they were designed entirely for AI developers. This isn’t science fiction – it’s the next frontier in software development, where AI might write code that is essentially unrecognizable to us.

As Erik Meijer outlines in his ACM article, we may soon need to accept a world where code serves machines first, with humans relying on meta-tools to inspect and tweak the output. The result? Faster, more efficient business systems, even if we need new abstractions to understand what’s going on under the hood.

Software may look the same, but with AI developers choosing their favorites

In the AI era, a language’s success might depend more on its available codebase than its elegant syntax. It’s simple math – the more code examples available, the better AI can understand that language and generate code in it.

This creates a unique opportunity to position programming languages for AI adoption. The goal isn’t to replace programming languages, but to develop them for this new collaborative future.

Languages like Rust, with strong memory safety guarantees, or Dafny, which supports formal verification, offer valuable properties in contexts where reliability matters. Others, like Julia, scale well for numerical and data-heavy tasks, making them attractive for AI workflows. The race to become AI’s preferred programming language is just beginning.

The legacy code challenge

Let’s talk about the elephant in the room: legacy code. While everyone’s excited about AI generating new applications, there’s a trillion-dollar reality we can’t ignore. We have a massive amount of code powering our world, and it’s not going anywhere.

The real opportunity here lies in creating smart maintenance solutions that can handle enterprise-scale challenges. Some examples are tools that can help modernize legacy codebases, automatically suggest library updates, and provide deep insights across millions of lines of code.

Scenario 2: AI as an enhancer

In this more conservative vision of the future, AI doesn’t replace developers, but supercharges their work and transforms the toolkit they rely on. Developers remain firmly in control, while AI helps their productivity skyrocket.

Advancement in AI support beyond coding

So far, AI automation has focused primarily on accelerating coding itself. The next generation of AI tools will likely handle a broader range of tasks: debugging, profiling, performance optimization, and configuring development environments are all likely to become partially or fully automated.

However, even as these tasks are automated, human developers will still be critical in reviewing and verifying the AI’s work. The challenge will be creating tools that assist in these tasks and build trust, enabling developers to quickly confirm that the AI’s actions are correct and helpful.

AI developers as part of human teams

Software teams are already moving towards including AI as active contributors. We can see early signs in tools like GitHub bots that open pull requests to fix known vulnerabilities with little human input. Before long, AI agents could take on everyday tasks like resolving issues, updating documentation, and tidying up codebases.

This shift lets developers spend more time on complex, creative work while AI handles the routine upkeep.

IDEs adapted to work with AI developers

This shift is already underway. As AI joins development teams, human developers will need tools to manage these new digital teammates. That means assigning tasks, tracking progress, and reviewing output, all within the IDE.

New, user-friendly interfaces will help developers see what each agent is working on, check task lists, and respond to questions. As AI performs more updates and maintenance, these manager-style tools will become essential. Like any teammate, AI may also reach out for help when needed.

AI assistants beyond development

What if everyone involved in the development process (product managers, QA engineers, DevOps teams, and more) had their own AI helper? We seem to be headed in this direction already, with startups popping up to offer AI-powered assistance to roles outside traditional software engineering.

The end goal? Less time spent on routine tasks and more time for people to focus on strategic work, reviewing results, and making key decisions.

There’s a boatload of untapped potential for increased productivity here. Now is the time to start exploring what each professional needs from their AI assistant and get a few prototypes up and running.

AI assistants as technical leads

It won’t be long before AI assistants can act as “tech leads”, ready to answer any question you have about your project. LLMs can already handle general questions and give insights on specific sections of code, but for now, only within the limited context supplied in the prompt.

As these models evolve, they’ll be able to draw from the entire codebase, the project’s development history, issues from the tracker, team chats, and all documentation.

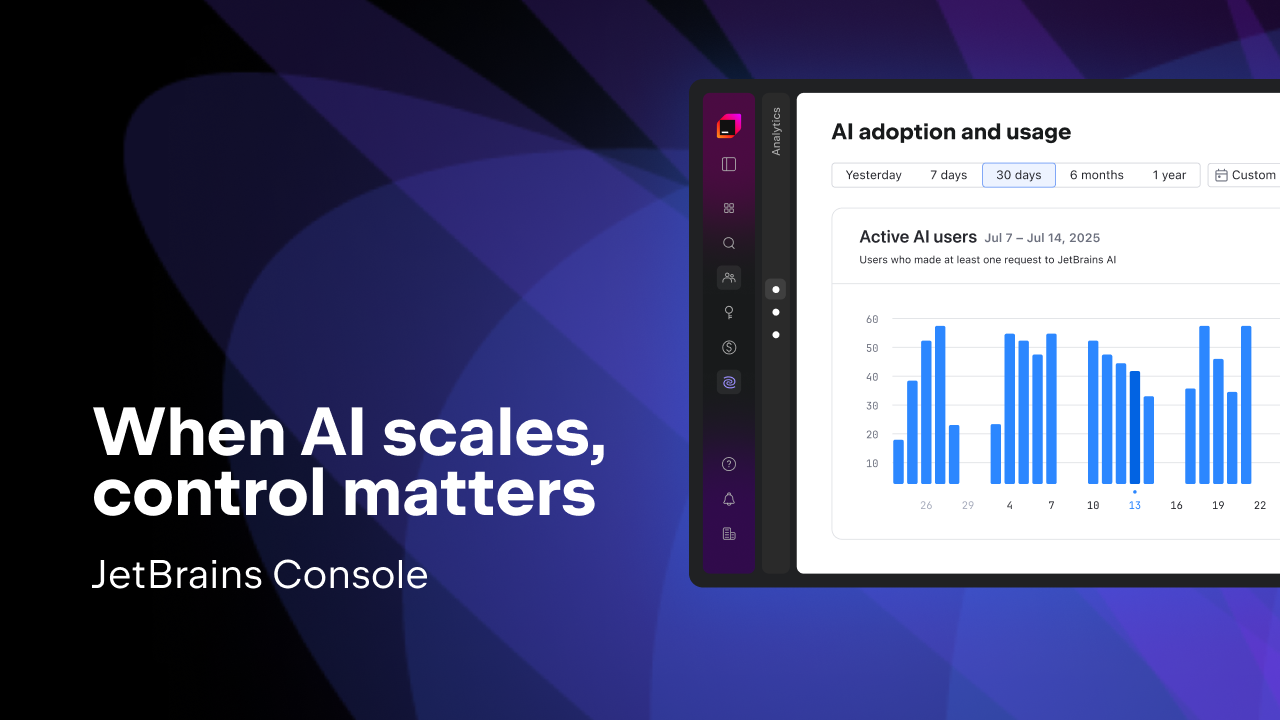
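Drawing on the whole project starts with retrieval: given a question, pick the most relevant artifacts from code, issues, chats, and docs, and pass only those to the model. The sketch below uses crude keyword overlap as a stand-in for the embedding-based or structure-aware search a real assistant would more likely use; `rank_sources` and the source IDs are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase words, keeping identifiers like apply_discount intact.
    return re.findall(r"[a-z0-9_]+", text.lower())


def rank_sources(question: str, sources: dict[str, str], top_k: int = 2) -> list[str]:
    """Return up to top_k source IDs that share the most terms with the question (hypothetical helper)."""
    q_terms = set(tokenize(question))
    scores = {}
    for source_id, text in sources.items():
        counts = Counter(tokenize(text))
        scores[source_id] = sum(counts[term] for term in q_terms)
    ranked = sorted(scores, key=lambda sid: scores[sid], reverse=True)
    return [sid for sid in ranked[:top_k] if scores[sid] > 0]


sources = {
    "code:billing/invoice.py": "def apply_discount(invoice, rate): ...",
    "issue:1234": "Discount rate rounding is wrong for large invoices",
    "chat:team-channel": "Lunch plans for Friday?",
    "docs:billing.md": "Invoices are generated nightly.",
}
print(rank_sources("Why is the discount rate rounding wrong?", sources))
# ['issue:1234', 'code:billing/invoice.py']
```

Even this toy version shows the value of mixing sources: the issue tracker explains the symptom, while the code points to where the fix belongs.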

AI needs control

As AI generates more and more code, we will need ways to keep track of it, verify its quality, and ensure it works as expected. Simply reviewing AI-generated code will not be enough to guarantee its reliability. One likely development is the introduction of Git-level tagging to mark code as AI-generated, making it easier to trace and manage throughout the lifecycle.
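Git already has a natural place for this kind of tag: commit trailers, the `Key: value` lines at the end of a commit message (the same mechanism behind `Signed-off-by`). A minimal sketch, assuming a team convention with a hypothetical `AI-Assisted-By` trailer; this key is not a Git or industry standard, and the parser is simplified compared with `git interpret-trailers`.

```python
AI_TRAILER = "ai-assisted-by"  # hypothetical trailer key, not a Git or industry standard


def parse_trailers(message: str) -> dict[str, str]:
    """Read 'Key: value' trailers from the last paragraph of a commit message.

    Simplified: `git interpret-trailers` also handles continuation lines,
    custom separators, and mixed trailer blocks.
    """
    paragraphs = [p for p in message.strip().split("\n\n") if p.strip()]
    if len(paragraphs) < 2:
        return {}  # a subject line alone has no trailer block
    trailers = {}
    for line in paragraphs[-1].splitlines():
        key, sep, value = line.partition(":")
        if not sep or not key.strip() or " " in key.strip():
            return {}  # last paragraph is ordinary prose, not trailers
        trailers[key.strip().lower()] = value.strip()
    return trailers


def is_ai_generated(message: str) -> bool:
    return AI_TRAILER in parse_trailers(message)


msg = """Fix rounding in invoice totals

Round half-even to match the accounting rules.

AI-Assisted-By: example-agent
Signed-off-by: Jane Doe <jane@example.com>"""
print(is_ai_generated(msg))         # True
print(is_ai_generated("Fix typo"))  # False
```

A trailer like this can be added at commit time with `git commit --trailer "AI-Assisted-By: <agent>"` (Git 2.32 or later), so tagging can happen automatically whenever an agent creates the commit.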

This is only part of the picture. We will also need tools designed specifically for testing and auditing code written by machines. Platforms like SonarQube are already evolving to support more automated quality checks, and efforts to build policy-aware validation frameworks are gaining traction.

Looking ahead, companies working in this space may help shape how we standardize, track, and certify AI-generated code. Broader regulatory initiatives are also emerging, such as the EU AI Act and guidance from the US National Institute of Standards and Technology (NIST), both of which emphasize transparency, accountability, and traceability as essential principles for responsible AI use in software development.

AI-powered education

As AI reshapes development, our approach to teaching programming should keep pace. Imagine new devs learning not just to code, but to code in an AI-enhanced environment that mirrors real-world development. By integrating AI into programming education, we can help beginners adopt tools faster and more naturally, setting them up for success with the technologies they’ll use.

Wrapping up: An AI-powered future is inevitable

We’ve looked at current AI trends shaping software development and explored two vastly different AI-driven scenarios. Regardless of what the future holds, one thing is clear: AI will evolve from a supportive assistant into a proactive player in coding, testing, and analysis. This shift will create new roles for developers as guides and reviewers, collaborating closely with AI agents.

As AI automates more routine work and gains richer project context, we’ll need fresh interfaces, reliable verification tools, and adaptable workflows. Preparing for this future means advancing machine learning, experimenting with interfaces, and deepening AI integration. Thanks for joining us on this exploration!
