Qodana

The code quality platform for teams

The Dark Side of AI at Work: Why “Shadow AI” Could Cost You


The rise of artificial intelligence has brought incredible opportunities for productivity and innovation. But with these benefits comes a growing threat: shadow AI. Just as “shadow IT” describes unapproved apps and services in the workplace, shadow AI describes employees using unauthorized AI tools, often without realizing the potential harm.

While it may start as an innocent attempt to speed up coding or generate documentation, shadow AI creates significant vulnerability and compliance risks for businesses.

“Today, organizations are grappling with an emerging artificial intelligence trend where employees use consumer-grade AI in business situations,” says a recent post from Google Cloud Platform. But what does this trend actually mean for modern businesses?

Why shadow AI can be dangerous

Employees may use these tools with good intentions, such as speeding up tasks, generating code, or drafting content. Without oversight, however, this practice exposes organizations to serious consequences.

From data leaks to compliance breaches and security vulnerabilities, shadow AI introduces risks that can undermine trust, damage reputations, and even result in financial penalties.

1. Data leakage

When employees paste source code, credentials, or sensitive business data into public AI assistants, they may unknowingly share confidential information with third-party providers, creating a risk of intellectual property loss and data breaches. In fact, real-world data leaks have affected businesses ranging from T-Mobile to TaskRabbit.
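One lightweight safeguard is to scan text for obvious secrets before it leaves the organization. The Python sketch below redacts a few common secret formats; the function name `redact_secrets` and the regular expressions are hypothetical and purely illustrative. Real secret scanners use far larger, carefully tuned rule sets, and this is not a Qodana feature.

```python
import re

# Hypothetical, illustrative patterns for a few common secret formats.
# Real secret scanners use much larger, carefully tuned rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "password_assignment": re.compile(r"(?i)\b\w*(?:password|passwd|secret)\w*\s*[:=]\s*\S+"),
}


def redact_secrets(text: str) -> tuple[str, list[str]]:
    """Replace likely secrets with placeholders and list which kinds were found."""
    found = []
    for name, pattern in SECRET_PATTERNS.items():
        if pattern.search(text):
            found.append(name)
            text = pattern.sub(f"[REDACTED:{name}]", text)
    return text, found


if __name__ == "__main__":
    snippet = 'db_password = "hunter2"\naws_key = "AKIAABCDEFGHIJKLMNOP"'
    cleaned, kinds = redact_secrets(snippet)
    print(kinds)  # ['aws_access_key_id', 'password_assignment']
    print(cleaned)
```

A regex pass like this only catches well-known formats, so it complements, rather than replaces, a policy on which AI tools are approved.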

2. Security vulnerabilities

AI-generated code can look clean on the surface but often introduces hidden bugs, outdated or vulnerable dependencies, or exploitable patterns. Without strict review, organizations may deploy insecure code into production environments.
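To illustrate how tidy-looking code can still be exploitable, the Python snippet below (our own example, not output from any particular AI tool) contrasts a query built by string interpolation, which is open to SQL injection, with a parameterized version. The `users` table and both function names are hypothetical.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str):
    # Looks clean, but interpolating input into SQL allows injection.
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str):
    # Parameterized query: the driver passes username as data, never as SQL.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (username,)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    payload = "nobody' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # [(1, 'alice'), (2, 'bob')]: every row leaks
    print(find_user_safe(conn, payload))    # []: the payload is treated as a literal name
```

Both functions read almost identically, which is exactly why a reviewer skimming AI output can miss the difference.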

3. Compliance violations

Organizations subject to GDPR, HIPAA, or other data protection regulations face severe consequences if employees use unapproved AI services. Even a single compliance slip can lead to fines, lawsuits, or reputational damage.

4. Lack of auditability

Shadow AI creates blind spots. If employees use unauthorized AI tools, companies have no visibility into what code was generated, where it came from, or whether it complies with licensing and security requirements.

Real-world example: Samsung’s Source Code Leak via ChatGPT

In 2023, Samsung engineers used ChatGPT to help debug code, pasting proprietary source code into the tool. Because OpenAI could use ChatGPT inputs to train its models, the engineers inadvertently exposed sensitive intellectual property, prompting the company to ban ChatGPT internally. Forbes has more details on this story.

How Qodana reduces shadow AI risks

Qodana acts as a compliance and security safeguard, ensuring that AI-driven development stays safe and controlled.

Compliance assurance

Qodana checks code for license violations and compliance gaps, reducing the risk that AI tools introduce unapproved dependencies or legally problematic snippets. Qodana supports checks based on standard dependency and risk frameworks such as OWASP, and it is SOC 2-certified.
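Conceptually, a license check compares each dependency’s declared license against an approved list. The Python sketch below shows that idea in its simplest form. The `Dependency` class, the allowlist contents, the package names, and `license_violations` are all hypothetical; this does not reflect how Qodana’s license audit is implemented or configured.

```python
from dataclasses import dataclass

# Hypothetical policy: SPDX license identifiers the organization has approved.
ALLOWED_LICENSES = {"MIT", "Apache-2.0", "BSD-3-Clause"}


@dataclass(frozen=True)
class Dependency:
    name: str
    version: str
    license: str  # SPDX identifier, e.g. "MIT"


def license_violations(deps: list[Dependency]) -> list[Dependency]:
    """Return the dependencies whose license is not on the allowlist."""
    return [d for d in deps if d.license not in ALLOWED_LICENSES]


if __name__ == "__main__":
    deps = [
        Dependency("example-http-lib", "2.1.0", "Apache-2.0"),
        Dependency("example-copyleft-lib", "1.0.0", "GPL-3.0-only"),
    ]
    for d in license_violations(deps):
        print(f"{d.name} {d.version}: license {d.license} is not approved")
```

An allowlist is the stricter design choice: any license nobody has reviewed is flagged by default, which suits code pulled in by AI suggestions with unknown provenance.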

Security-focused scanning

By detecting vulnerabilities, misconfigurations, and insecure coding practices, Qodana ensures that AI-generated code is held to the same rigorous security standards as human-written code. Qodana is also supported by Mend.io, which helps us deliver better security checks and deeper analysis.
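Static analyzers find problems by inspecting code structure rather than running it. The toy Python checker below walks a module’s syntax tree with the standard `ast` module and flags two well-known risky patterns: `eval`/`exec` calls and `subprocess` calls with `shell=True`. It is a hypothetical illustration of the technique, far simpler than Qodana’s inspections; the name `find_risky_calls` is our own, and it only recognizes calls written literally as `subprocess.<name>(...)`.

```python
import ast

# Builtins whose use on untrusted input is a classic code-injection risk.
RISKY_BUILTINS = {"eval", "exec"}


def find_risky_calls(source: str) -> list[tuple[int, str]]:
    """Return (line, message) pairs for risky calls found in Python source."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        # Bare calls such as eval(...) or exec(...).
        if isinstance(func, ast.Name) and func.id in RISKY_BUILTINS:
            findings.append((node.lineno, f"call to {func.id}()"))
        # Calls such as subprocess.run(..., shell=True).
        is_subprocess_call = (
            isinstance(func, ast.Attribute)
            and isinstance(func.value, ast.Name)
            and func.value.id == "subprocess"
        )
        if is_subprocess_call:
            for kw in node.keywords:
                if kw.arg == "shell" and isinstance(kw.value, ast.Constant) and kw.value.value is True:
                    findings.append((node.lineno, f"subprocess.{func.attr}() with shell=True"))
    # ast.walk is breadth-first, so sort to report findings in source order.
    return sorted(findings)


if __name__ == "__main__":
    sample = (
        "import subprocess\n"
        "result = eval(user_input)\n"
        "subprocess.run(cmd, shell=True)\n"
    )
    for line, message in find_risky_calls(sample):
        print(f"line {line}: {message}")
```

Because the code is parsed rather than executed, a check like this works the same way whether a human or an AI assistant wrote the file.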

Policy enforcement across teams

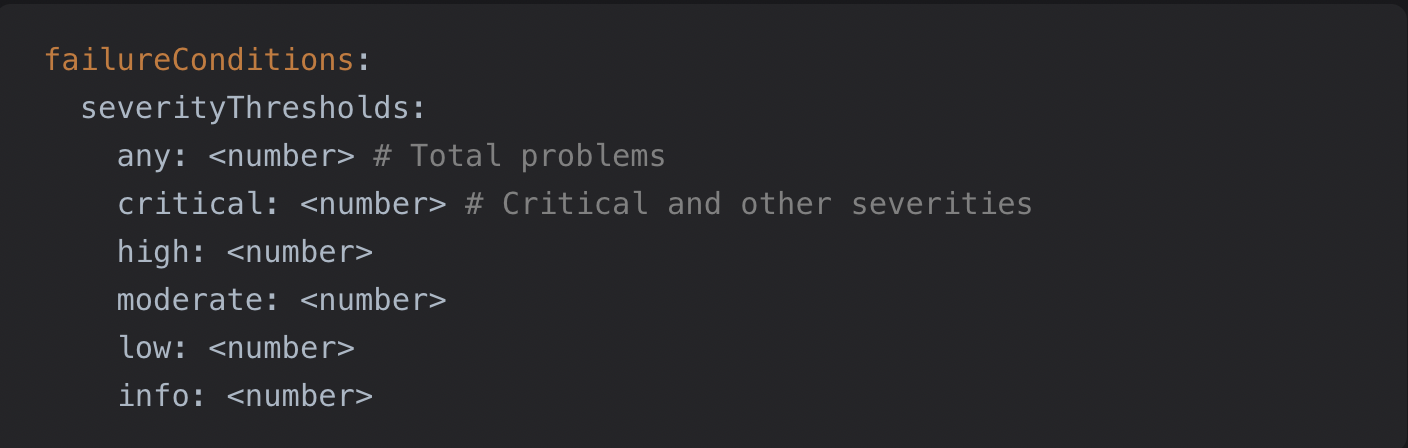

With customizable rules, organizations can enforce coding standards that keep unsafe or unverifiable AI output out of production. This benefit is often overshadowed by compliance concerns, but it can be just as important.

These rules can range from enforcing linting and formatting conventions (e.g., ESLint, Prettier, Pylint) to requiring a minimum unit-test coverage percentage before merging, or blocking pull requests when static analysis finds unresolved high-severity issues (quality gates).
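The quality-gate idea can be sketched in a few lines of Python: given a summary of a CI run, decide whether the merge should be blocked. The `AnalysisSummary` fields, the `quality_gate` function, and the 80% default threshold are hypothetical; this is an illustration of the concept, not Qodana’s implementation.

```python
from dataclasses import dataclass


@dataclass
class AnalysisSummary:
    # Hypothetical summary of one CI run; field names are illustrative.
    coverage_percent: float
    high_severity_issues: int


def quality_gate(summary: AnalysisSummary, min_coverage: float = 80.0) -> list[str]:
    """Return the reasons a merge should be blocked; an empty list means it passes."""
    failures = []
    if summary.coverage_percent < min_coverage:
        failures.append(
            f"coverage {summary.coverage_percent:.1f}% is below the {min_coverage:.1f}% minimum"
        )
    if summary.high_severity_issues > 0:
        failures.append(f"{summary.high_severity_issues} unresolved high-severity issue(s)")
    return failures


if __name__ == "__main__":
    for reason in quality_gate(AnalysisSummary(coverage_percent=72.5, high_severity_issues=1)):
        print(f"Quality gate failed: {reason}")
```

In a CI pipeline, a non-empty result would typically translate into a non-zero exit status, which is what marks the job, and therefore the pull request, as failed.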

Traceability and transparency

Qodana provides detailed reports that improve auditability, helping organizations demonstrate compliance and monitor whether unauthorized AI usage is affecting their operations.
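Machine-readable reports are what make this kind of auditing practical. Assuming findings are exported as a SARIF 2.1.0 log (a common interchange format for static analysis tools, which Qodana can produce), the Python sketch below tallies results by severity level and by rule. The function name `summarize_sarif` is hypothetical and the rule IDs in the example are made up.

```python
import json
from collections import Counter


def summarize_sarif(sarif_text: str) -> dict[str, Counter]:
    """Count results by severity level and by rule ID across all runs in a SARIF log."""
    log = json.loads(sarif_text)
    by_level: Counter = Counter()
    by_rule: Counter = Counter()
    for run in log.get("runs", []):
        for result in run.get("results", []):
            # Simplification: ignores rule-level default configuration and
            # falls back to SARIF's default level of "warning".
            by_level[result.get("level", "warning")] += 1
            by_rule[result.get("ruleId", "<unknown>")] += 1
    return {"by_level": by_level, "by_rule": by_rule}


if __name__ == "__main__":
    example = json.dumps({
        "version": "2.1.0",
        "runs": [{
            "tool": {"driver": {"name": "example-analyzer"}},
            "results": [
                {"ruleId": "SqlInjection", "level": "error", "message": {"text": "..."}},
                {"ruleId": "UnusedImport", "message": {"text": "..."}},
            ],
        }],
    })
    summary = summarize_sarif(example)
    print(dict(summary["by_level"]))  # {'error': 1, 'warning': 1}
```

Tracking these counts over time, per project, is one way to spot a sudden influx of issues that might point to unreviewed, AI-generated code.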

Teams can use the Insights Dashboard to check on the health of projects across the board and note patterns that could alert them to security risks and other challenges.

Building trustworthy AI adoption

Shadow AI may feel like a shortcut, but it introduces unnecessary risks that can compromise security and compliance at scale. With Qodana, companies can embrace AI responsibly, giving employees access to powerful tools while ensuring code remains secure, compliant, and traceable.

Qodana doesn’t just detect vulnerabilities; it helps organizations build confidence in adopting AI the right way.
