JetBrains AI

Koog × A2A: Building Connected AI Agents in Kotlin

If you’ve ever tried building a system of multiple AI agents, you’ve probably run into this problem. It starts simple enough: You’ve got one agent writing blog posts, another proofreading them, and maybe a third suggesting or generating images. Individually, they’re effective. But getting them to work together? That’s where things start falling apart.

Each agent speaks its own “language”: One uses a different API interface, another has its own message format, and they all might come with specific authentication requirements. Making them communicate means writing custom integration code for every single connection. Instead of focusing on making your agents smarter, faster, or more useful, you’re stuck building bridges between them.

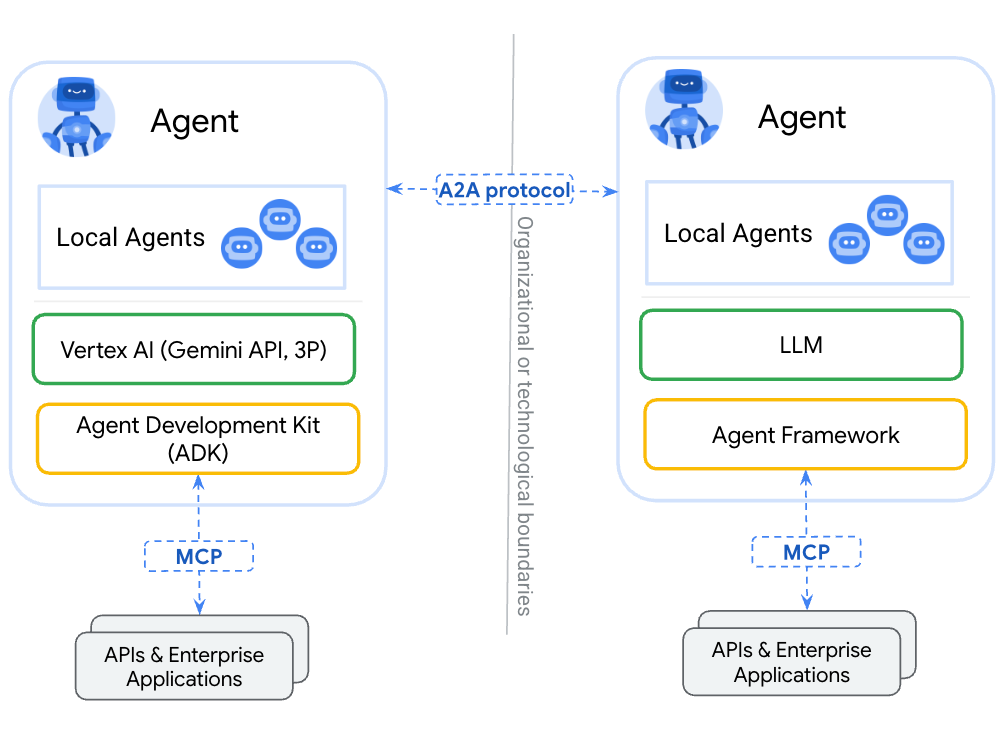

What A2A does: The cross-agent communication layer

This is where the Agent2Agent (A2A) Protocol takes over.

With A2A, your agents can communicate directly through a standardized protocol, working as a universal translator for your AI ecosystem. Your blog-writing agent seamlessly passes content to your proofreader, which triggers your image generator, while the proofreader loops back with corrections and the image generator requests style clarifications. All orchestrated through a single, unified communication layer.

Instead of managing dozens of point-to-point connections, A2A provides:

- Plug-and-play connectivity: Agents automatically discover and connect with each other.

- Standardized messaging: Unified format, clear protocol, and zero translation headaches.

- Built-in orchestration: Define workflows once, and let A2A handle the coordination.

- Scalability without complexity: Add or reuse agents without rewriting existing connections.

What is the result? You spend time improving your agents’ capabilities, not debugging their conversations. And the best part – you can implement your agents using any language or framework you like. For JVM users, Koog is a top choice, and as of version 0.5.0, it integrates seamlessly with the A2A ecosystem.

What Koog does: The internal orchestration engine

Koog is a Kotlin-based framework for building AI agents targeting JVM, Android, iOS, WebAssembly, and in-browser applications. It excels at:

- Complex workflow management: Design graph-based strategies with support for loops, branches, fallbacks, and parallel branch execution.

- Ready-to-use components: Benefit from its built-in nodes for calling LLMs and external tools, summarizing message history, and executing entire strategies.

- Tool orchestration: Turn any function in your code into a tool your AI agent can use, either sequentially or in parallel.

- Native MCP integration: Connect seamlessly to any MCP server using the Kotlin MCP SDK.

- Memory and storage support: Built-in support for agent memory and RAG (retrieval-augmented generation) workflows with efficient context management.

- Fault tolerance: Built-in retries, checkpointing, recovery mechanisms, and state persistence to ensure reliable execution.

- Observability: Full agent event handling, logging, and support for OpenTelemetry with built-in integrations with Langfuse and W&B Weave.

In short, Koog is great for building reliable AI agents.

Why pair Koog with A2A

Koog and A2A cover different layers of the AI agent stack. When used together, they complement each other and fill the gaps.

Koog already handles the hardest parts of AI orchestration needed for real-world enterprise use.

A2A adds the missing piece: It enables your Koog agents to communicate with any other A2A-compatible agents in your ecosystem. Instead of building custom integrations for each external service, your Koog AI workflows can automatically discover and use other agents.

The result is a perfect match: Koog’s advanced workflows become A2A tasks that any agent can request, while your Koog agents tap into the full power of the A2A ecosystem. And since Koog runs on backend, on-device, and in-browser environments, you can deliver inter-connected AI more broadly and effectively than ever before.

How is this possible? Let’s see!

A2A protocol

The A2A protocol defines the essential building blocks for agent-to-agent communication:

- Agent discovery through standardized agent cards (JSON documents that describe capabilities).

- Message formats for requests and responses with consistent schemas.

- Task lifecycle management with clear states: submitted → working → completed/failed.

- Transport layers such as JSON-RPC, gRPC, and REST.

- Security schemes using standard OAuth2, API keys, and JWT tokens.

- Error handling with standardized error codes.
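
To make the transport bullet more concrete, here is a rough sketch of what a hand-built JSON-RPC 2.0 envelope for sending a message might look like. The method name message/send and the field names (role, messageId, parts, kind) reflect the A2A specification as we understand it and should be treated as assumptions. jsonEscape and messageSendRequest are hypothetical helpers written for this illustration, not Koog APIs; in practice Koog's client builds and serializes the request for you.

```kotlin
// Minimal JSON string escaping for this sketch (quotes, backslashes, common control characters).
fun jsonEscape(s: String): String = buildString {
    for (c in s) {
        when (c) {
            '"' -> append("\\\"")
            '\\' -> append("\\\\")
            '\n' -> append("\\n")
            '\r' -> append("\\r")
            '\t' -> append("\\t")
            else -> append(c)
        }
    }
}

// Hypothetical helper: builds a JSON-RPC 2.0 request carrying one text part.
// Method and field names are assumptions based on the A2A specification.
fun messageSendRequest(id: Int, messageId: String, text: String): String =
    """{"jsonrpc":"2.0","id":$id,"method":"message/send","params":{"message":{"role":"user","messageId":"${jsonEscape(messageId)}","parts":[{"kind":"text","text":"${jsonEscape(text)}"}]}}}"""
```

Calling messageSendRequest(1, "m-1", "Write a post about AI trends") yields a single-line JSON string that could be posted to an agent's JSON-RPC endpoint.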

Agent cards: Digital business cards

Every agent in the A2A ecosystem publishes its capabilities through an “agent card”: a standardized JSON file hosted at a known URL on the agent’s domain, e.g. /.well-known/agent-card.json. The agent card acts as a digital business card, allowing other agents to discover the services it provides.

An agent card typically contains:

- Basic information: Such as agent name, description, and version.

- Skills: What the agent can do (e.g. draft documents, proofread text, analyze data, and generate images).

- Endpoints: How to reach the agent.

- Other optional information: Enabled capabilities, authentication, and more.

This discovery mechanism eliminates the need for manual integration work. When an agent needs a specific skill, it simply checks the relevant agent card to understand how to interact with that service.

In Koog, agent cards are defined using Kotlin data classes:

    val agentCard = AgentCard(
        name = "Blog Writer",
        description = "AI agent that creates high-quality blog posts and articles",
        url = "https://api.blog-writer.com/a2a/v1",
        version = "1.0.0",
        capabilities = AgentCapabilities(streaming = true),
        defaultInputModes = listOf("text/plain"),
        defaultOutputModes = listOf("text/markdown"),
        skills = listOf(
            AgentSkill(
                id = "write-post",
                name = "Blog Post Writing",
                description = "Generate engaging blog posts on any topic",
                tags = listOf("writing", "content", "blog"),
                examples = listOf("Write a post about AI trends")
            )
        )
    )
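
To illustrate the discovery step, the following sketch builds the card location from an agent's base URL and picks agents by skill tag. SkillInfo, CardInfo, agentCardUri, and findAgentsByTag are hypothetical, simplified stand-ins written for this example; they are not Koog's AgentCard classes or resolver.

```kotlin
import java.net.URI

// Hypothetical, simplified stand-ins for agent card data (not Koog's classes).
data class SkillInfo(val id: String, val tags: List<String>)
data class CardInfo(val name: String, val url: String, val skills: List<SkillInfo>)

// Build the card location from an agent's base URL and the well-known path.
fun agentCardUri(baseUrl: String, path: String = "/.well-known/agent-card.json"): URI =
    URI(baseUrl.trimEnd('/') + path)

// Return the cards that advertise at least one skill carrying the given tag.
fun findAgentsByTag(cards: List<CardInfo>, tag: String): List<CardInfo> =
    cards.filter { card -> card.skills.any { skill -> tag in skill.tags } }
```

A caller would fetch and parse the JSON at agentCardUri(...) for each known agent, then use something like findAgentsByTag(cards, "blog") to choose which agent to contact.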

Universal messaging: One simple pattern

A2A uses a single, standardized message format for all inter-agent communication. This simplicity is powerful – instead of learning dozens of different APIs, agents only need to understand one communication pattern.

Every interaction follows the same flow:

- Send a message with the task request and parameters.

- Receive either immediate results or a task for tracking.

- Get updates via real-time channels for longer operations.

This universal approach means adding new agent capabilities doesn’t require changing communication protocols. Whether you’re asking an agent to summarize text or generate a complex report, the message structure remains consistent.

In Koog, creating and sending a message is straightforward with the provided message and request classes:

    val message = Message(
        role = Role.User,
        parts = listOf(
            TextPart("Write a blog post about the future of AI agents")
        ),
        contextId = "blog-project-456"
    )

    val request = Request(
        data = MessageSendParams(
            message = message,
            configuration = MessageConfiguration(
                blocking = false, // Get first response
                historyLength = 5 // Include context
            )
        )
    )

    val response = client.sendMessage(request)

The message format supports rich content through different Part types: TextPart for plain text content, FilePart for file attachments, and DataPart for structured JSON data.

This unified structure means your Koog agents can seamlessly communicate with any A2A-compatible agent, whether it’s for text processing, file analysis, or complex data transformations.
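
As a rough illustration of how a receiver might handle mixed content, the sketch below models the three part kinds with a sealed interface and an exhaustive when. The SketchPart types and the describe function are hypothetical and deliberately simplified; Koog ships its own TextPart, FilePart, and DataPart classes with different fields.

```kotlin
// Hypothetical, simplified part model for illustration only.
sealed interface SketchPart
data class SketchTextPart(val text: String) : SketchPart
data class SketchFilePart(val name: String, val mimeType: String) : SketchPart
data class SketchDataPart(val data: Map<String, Any?>) : SketchPart

// Render one short line per part; the compiler checks that every part type is covered.
fun describe(part: SketchPart): String = when (part) {
    is SketchTextPart -> "text: ${part.text}"
    is SketchFilePart -> "file: ${part.name} (${part.mimeType})"
    is SketchDataPart -> "data: keys=${part.data.keys.sorted()}"
}
```

Because the interface is sealed, adding a new part type later turns every unhandled when into a compile error, which is one reason this shape suits a fixed protocol vocabulary.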

Task lifecycle: Smart workflows

A2A intelligently manages different types of work based on complexity and duration:

Immediate messages: Simple operations like text formatting or quick calculations return results directly in the agent’s response. No waiting, no tracking needed.

Long-running tasks: Complex operations like document analysis or multi-step workflows are scheduled and return a task. The requesting agent can then monitor progress and retrieve the task results once ready.

Real-time updates: For time-consuming operations, Server-Sent Events (SSE) provide live progress updates. This keeps agents informed without requiring constant polling.

In Koog, an agent executor reports a task's state by sending task events:

    class BlogWriterExecutor : AgentExecutor {
        override suspend fun execute(
            context: RequestContext<MessageSendParams>,
            eventProcessor: SessionEventProcessor
        ) {
            // Announce a new task in the Submitted state
            val task = Task(
                contextId = context.contextId,
                status = TaskStatus(
                    state = TaskState.Submitted,
                    message = Message(
                        role = Role.Agent,
                        parts = listOf(TextPart("Blog writing request received")),
                        contextId = context.contextId,
                        taskId = context.taskId,
                    )
                )
            )

            eventProcessor.sendTaskEvent(task)
            ...
        }
    }
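
The lifecycle described above can be pictured as a small state machine. The sketch below is a simplified model that uses only the four states named in this article; the real protocol defines additional states, and the exact set of allowed transitions here (for example, submitted to failed) is an assumption. SketchTaskState, allowedTransitions, canTransition, and isTerminal are hypothetical names, not Koog APIs.

```kotlin
// Simplified lifecycle: only the states named in this article.
enum class SketchTaskState { SUBMITTED, WORKING, COMPLETED, FAILED }

// Assumed forward transitions; terminal states have none.
val allowedTransitions: Map<SketchTaskState, Set<SketchTaskState>> = mapOf(
    SketchTaskState.SUBMITTED to setOf(SketchTaskState.WORKING, SketchTaskState.FAILED),
    SketchTaskState.WORKING to setOf(SketchTaskState.COMPLETED, SketchTaskState.FAILED),
    SketchTaskState.COMPLETED to emptySet(),
    SketchTaskState.FAILED to emptySet(),
)

// True when moving from one state to another is permitted by the table above.
fun canTransition(from: SketchTaskState, to: SketchTaskState): Boolean =
    to in allowedTransitions.getValue(from)

// A state is terminal when no further transition is allowed.
fun isTerminal(state: SketchTaskState): Boolean =
    allowedTransitions.getValue(state).isEmpty()
```

A requesting agent that polls or listens for updates can stop tracking a task as soon as isTerminal returns true for its reported state.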

Built-in security: Industry standards only

A2A doesn’t reinvent security. Instead, it relies on proven, widely-adopted standards like OAuth2, API keys, and standard HTTPS.

This approach means developers don’t need to learn new authentication schemes. If you understand modern web API security, you already understand A2A security. The system inherits all the tooling, best practices, and security audits that come with these established standards.

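An agent card declares the schemes it accepts in its securitySchemes field, for example OpenID Connect:

    "securitySchemes": {
      "google": {
        "openIdConnectUrl": "https://accounts.google.com/.well-known/openid-configuration",
        "type": "openIdConnect"
      }
    }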

On the server side, you can add authentication and authorization by extending A2AServer and checking each request before delegating to the parent implementation:

    class AuthorizedA2AServer(
        agentExecutor: AgentExecutor,
        agentCard: AgentCard,
        agentCardExtended: AgentCard? = null,
        taskStorage: TaskStorage = InMemoryTaskStorage(),
        messageStorage: MessageStorage = InMemoryMessageStorage(),
        private val authService: AuthService, // Service responsible for authentication
    ) : A2AServer(
        agentExecutor = agentExecutor,
        agentCard = agentCard,
        agentCardExtended = agentCardExtended,
        taskStorage = taskStorage,
        messageStorage = messageStorage,
    ) {
        private suspend fun authenticateAndAuthorize(
            ctx: ServerCallContext,
            requiredPermission: String
        ): AuthenticatedUser {
            val token = ctx.headers["Authorization"]?.firstOrNull()
                ?: throw A2AInvalidParamsException("Missing Authorization token")

            val user = authService.authenticate(token)
                ?: throw A2AInvalidParamsException("Invalid Authorization token")

            if (requiredPermission !in user.permissions) {
                throw A2AUnsupportedOperationException("Insufficient permissions")
            }

            return user
        }

        override suspend fun onSendMessage(
            request: Request<MessageSendParams>,
            ctx: ServerCallContext
        ): Response<CommunicationEvent> {
            val user = authenticateAndAuthorize(ctx, requiredPermission = "send_message")

            // Pass user data to the agent executor via context state
            val enrichedCtx = ctx.copy(
                state = ctx.state + (AuthStateKeys.USER to user)
            )

            // Delegate to parent implementation with enriched context
            return super.onSendMessage(request, enrichedCtx)
        }

        // the rest of wrapped A2A methods
        // ...
    }
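
If your authentication service expects a bare token rather than the full header value, a small parsing step can sit in front of it. The sketch below assumes the common "Bearer <token>" convention for the Authorization header; extractBearerToken is a hypothetical helper for illustration and is not part of Koog or the example server.

```kotlin
// Extract the token from an "Authorization: Bearer <token>" header value.
// Returns null when the header is missing, uses another scheme, or carries no token.
fun extractBearerToken(headerValue: String?): String? {
    if (headerValue == null) return null
    // Split into scheme and credentials on the first run of whitespace.
    val parts = headerValue.trim().split(Regex("\\s+"), limit = 2)
    if (parts.size != 2 || !parts[0].equals("Bearer", ignoreCase = true)) return null
    return parts[1].takeIf { it.isNotEmpty() }
}
```

Returning null instead of throwing keeps the helper reusable: the caller decides which protocol error to raise for a missing or malformed header.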

How to integrate Koog agents with A2A

The Koog framework comes with both the A2A client and server built right in. This means your Koog agents can seamlessly talk to other A2A-enabled agents while also making themselves discoverable to the outside world. Here’s a simple example demonstrating how you can implement this.

How to wrap Koog agents into A2A servers

First, define a strategy for the agent. Koog provides convenient converters (toKoogMessage, toA2AMessage) to transform between Koog and A2A message formats, eliminating the need for manual serialization. Specialized nodes such as nodeA2ARespondMessage handle the message exchange process, making communication workflows straightforward to implement:

    fun blogpostWritingStrategy() = strategy<MessageSendParams, A2AMessage>("blogpost-writer-strategy") {
        val blogpostRequest by node<MessageSendParams, A2AMessage> { input ->
            val userMessage = input.toKoogMessage().content

            llm.writeSession {
                user {
                    +"Write a blogpost based on the user request"
                    +xml {
                        tag("user_request") {
                            +userMessage
                        }
                    }
                }

                requestLLM().toA2AMessage()
            }
        }

        val sendMessage by nodeA2ARespondMessage()

        nodeStart then blogpostRequest then sendMessage then nodeFinish
    }

Second, define the agent itself. Once you install the A2AServer feature, your agent becomes discoverable and accessible to others in the ecosystem, enabling the creation of sophisticated networks where specialized agents collaborate seamlessly.

    fun createBlogpostWritingAgent(
        requestContext: RequestContext<MessageSendParams>,
        eventProcessor: SessionEventProcessor
    ): AIAgent<MessageSendParams, A2AMessage> {
        // Get existing messages for the current conversation context
        val messageHistory = requestContext.messageStorage.getAll().map { it.toKoogMessage() }

        val agentConfig = AIAgentConfig(
            prompt = prompt("blogpost") {
                system("You are a blogpost writing agent")
                messages(messageHistory)
            },
            model = GoogleModels.Gemini2_5Flash,
            maxAgentIterations = 5
        )

        // Type parameters match the function's declared return type
        return AIAgent<MessageSendParams, A2AMessage>(
            promptExecutor = MultiLLMPromptExecutor(
                LLMProvider.Google to GoogleLLMClient(System.getenv("GOOGLE_API_KEY")),
            ),
            strategy = blogpostWritingStrategy(),
            agentConfig = agentConfig
        ) {
            install(A2AAgentServer) {
                this.context = requestContext
                this.eventProcessor = eventProcessor
            }

            handleEvents {
                onAgentFinished { ctx ->
                    // Update current conversation context with response from the agent
                    val resultMessage = ctx.result as A2AMessage
                    requestContext.messageStorage.save(resultMessage)
                }
            }
        }
    }

Third, wrap the agent in an executor and then define a server.

    class BlogpostAgentExecutor : AgentExecutor {
        override suspend fun execute(
            context: RequestContext<MessageSendParams>,
            eventProcessor: SessionEventProcessor
        ) {
            // The agent's declared input type is MessageSendParams, so pass the whole params object
            createBlogpostWritingAgent(context, eventProcessor)
                .run(context.params)
        }
    }

    val a2aServer = A2AServer(
        agentExecutor = BlogpostAgentExecutor(),
        agentCard = agentCard,
    )

The final step is to define a server transport and run the server.

    val transport = HttpJSONRPCServerTransport(
        requestHandler = a2aServer
    )

    transport.start(
        engineFactory = Netty,
        port = 8080,
        path = "/a2a",
        wait = true,
        agentCard = agentCard,
        agentCardPath = A2AConsts.AGENT_CARD_WELL_KNOWN_PATH
    )

Now your agent is ready to handle requests!

How to call other A2A-enabled agents from a Koog agent

First, you need to configure an A2A client and connect it to fetch an Agent Card.

    val agentUrl = "https://example.com"

    val cardResolver = UrlAgentCardResolver(
        baseUrl = agentUrl,
        path = A2AConsts.AGENT_CARD_WELL_KNOWN_PATH,
    )

    val transport = HttpJSONRPCClientTransport(
        url = agentUrl,
    )

    val a2aClient = A2AClient(
        transport = transport,
        agentCardResolver = cardResolver
    )

    // Initialize client and fetch the card
    a2aClient.connect()

Then you can use nodeA2AClientSendMessage or nodeA2AClientSendMessageStreaming in your strategy to call these clients and receive a message or task response.

    val agentId = "agent_id"

    val agent = AIAgent<String, String>(
        promptExecutor = MultiLLMPromptExecutor(
            LLMProvider.Google to GoogleLLMClient(System.getenv("GOOGLE_API_KEY")),
        ),
        strategy = strategy<String, String>("a2a") {
            // Wrap the plain-text input into an A2A request for the target agent
            val nodePrepareRequest by node<String, A2AClientRequest<MessageSendParams>> { input ->
                A2AClientRequest(
                    agentId = agentId,
                    callContext = ClientCallContext.Default,
                    params = MessageSendParams(
                        message = A2AMessage(
                            messageId = Uuid.random().toString(),
                            role = Role.User,
                            parts = listOf(
                                TextPart(input)
                            )
                        )
                    )
                )
            }

            val nodeA2A by nodeA2AClientSendMessage(agentId)

            val nodeProcessResponse by node<CommunicationEvent, String> { event ->
                // Collect the text parts from either a direct message or a task's artifacts
                when (event) {
                    is A2AMessage -> event.parts
                        .filterIsInstance<TextPart>()
                        .joinToString(separator = "\n") { it.text }

                    is Task -> event.artifacts
                        .orEmpty()
                        .flatMap { it.parts }
                        .filterIsInstance<TextPart>()
                        .joinToString(separator = "\n") { it.text }
                }
            }

            nodeStart then nodePrepareRequest then nodeA2A then nodeProcessResponse then nodeFinish
        },
        agentConfig = agentConfig // an AIAgentConfig, defined as in the server example
    ) {
        install(A2AAgentClient) {
            // Register the client configured in the previous step
            this.a2aClients = mapOf(agentId to a2aClient)
        }
    }

    agent.run("Write blog post about A2A and Koog integration")

Next steps

To dive deeper into Koog and A2A, check out these useful materials:
