JetBrains AI

Supercharge your tools with AI-powered features inside many JetBrains products

JetBrains AI Assistant 2024.3: Refine Your AI Experience With Model Selection, Enhanced Code Completion, and More

JetBrains AI Assistant 2024.3 is here! A highlight of this release is the flexibility to choose your preferred chat model. Choose from Google Gemini, OpenAI, or local models to tailor chat interactions to your needs.

This update also brings improved code completion across major programming languages, better context management, and the ability to enter AI prompts inline, directly in the editor.

More control over your chat experience: Choose between Gemini, OpenAI, and local models

You can now select your preferred AI chat model, choosing from cloud model providers like Google Gemini and OpenAI, or connecting to local models. This lets you pick the model whose responses best suit your specific workflow.

Google’s Gemini models now available

The lineup of LLMs used by JetBrains AI now includes Gemini 1.5 Pro 002 and Gemini 1.5 Flash 002. These models are designed for advanced reasoning and optimized performance across a wide range of tasks. The Pro version excels in complex applications, while Flash is tailored for high-volume, low-latency scenarios. AI Assistant users can now use Gemini models alongside our in-house Mellum model and OpenAI's models.

Local model support via Ollama

In addition to cloud-based models, you can now connect the AI chat to local models available through Ollama. This is especially useful if you need more control over the models you use, want enhanced privacy, or prefer to run models on your own hardware.

To add an Ollama model to the chat, enable Ollama support in AI Assistant's settings and configure the connection to your Ollama instance.

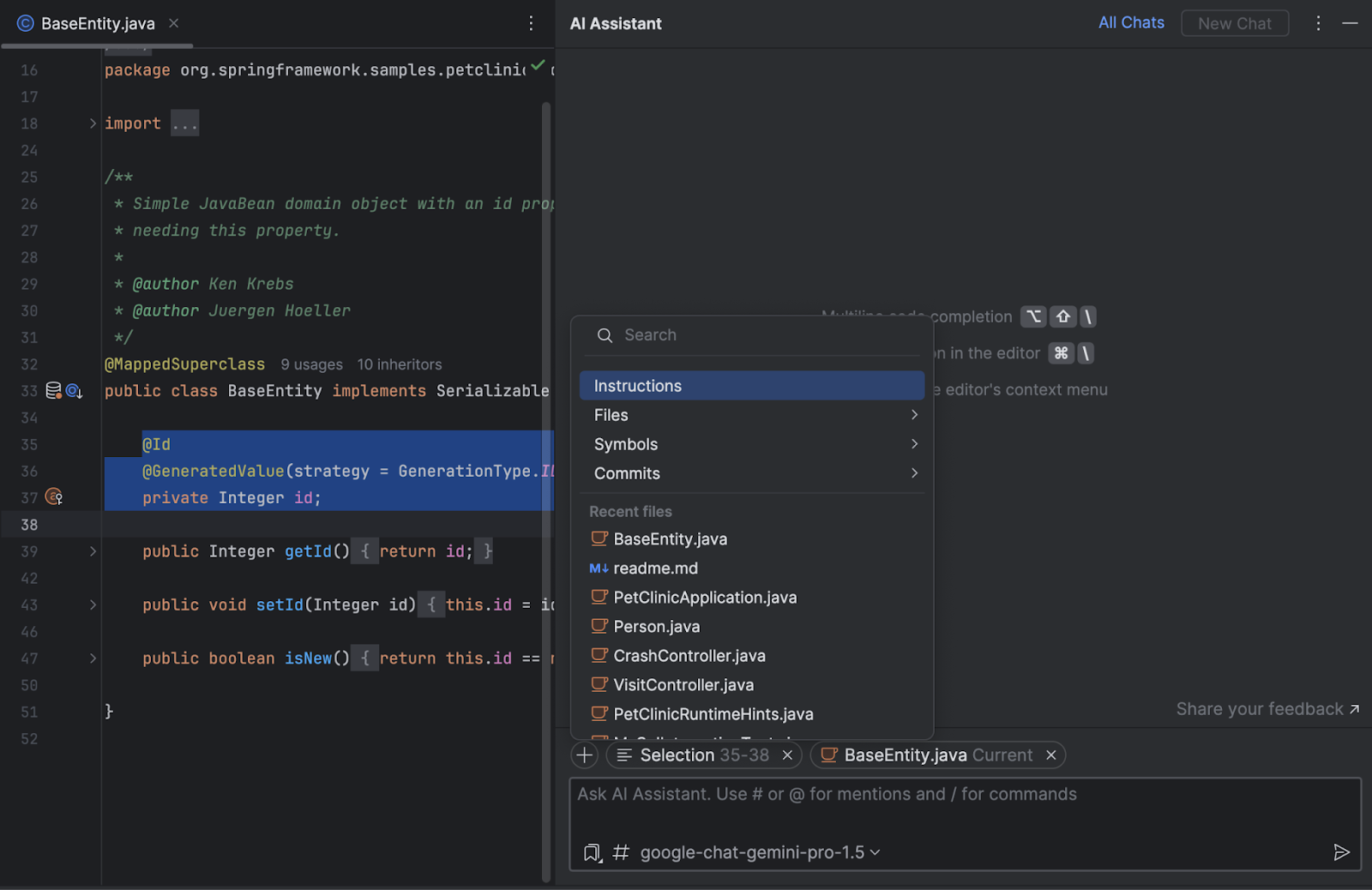
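Before configuring the connection, it can help to confirm that your Ollama instance is running and already has models pulled. The following is a minimal Python sketch, assuming Ollama's default address `http://localhost:11434` and its `/api/tags` endpoint, which lists locally available models. The helper names `parse_model_names` and `list_local_models` are hypothetical, and the script is independent of AI Assistant: it does not read or change any IDE settings.

```python
import json
import urllib.request

# Ollama's default local address (assumption: adjust if your instance runs elsewhere).
DEFAULT_OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: str) -> list[str]:
    """Extract model names from the JSON body returned by Ollama's /api/tags."""
    data = json.loads(payload)
    return [model["name"] for model in data.get("models", [])]


def list_local_models(base_url: str = DEFAULT_OLLAMA_URL, timeout: float = 2.0) -> list[str]:
    """Return the names of models pulled into the Ollama instance at base_url.

    Raises OSError (urllib.error.URLError is a subclass) if the instance is unreachable.
    """
    url = base_url.rstrip("/") + "/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return parse_model_names(resp.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        names = list_local_models()
    except OSError as exc:
        # Connection refused, timeouts, and similar failures all end up here.
        print(f"Ollama is not reachable at {DEFAULT_OLLAMA_URL}: {exc}")
    else:
        print("Models available to connect:", ", ".join(names) or "(none pulled yet)")
```

If the script lists no models, pulling one with Ollama first gives the chat something to connect to; if it cannot reach the instance at all, the address you enter in AI Assistant's settings is likely to fail too.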

Improved context management

In this update, we’ve made context handling in AI Assistant more transparent and intuitive. A revamped UI lets you view and manage every element included as context, providing full visibility and control. The open file and any selected code within it are now automatically added to the context, and you can easily add or remove files as needed, customizing the context to fit your workflow. Additionally, you can attach project-wide instructions to guide AI Assistant’s responses throughout your codebase.

Cloud code completion with broader language support

JetBrains has released its own large language model (LLM), Mellum, specifically designed to enhance cloud-based code completion for developers. Specialized for coding tasks, Mellum adds support for several new languages, including JavaScript, TypeScript, HTML, C#, C, C++, Go, PHP, Scala, and Ruby. The code completion experience is now unified across JetBrains IDEs, offering syntax highlighting for suggested code, the flexibility to accept suggestions token by token or line by line, and reduced latency overall.

Local code completion enhancements: Multi-line support for Python and contextual improvements

Local code completion has been significantly improved and now offers multi-line suggestions for Python. Optimizations have also been made for other programming languages. For Kotlin, retrieval-augmented generation (RAG) enables the model to pull information from multiple project files, producing more relevant suggestions. JavaScript, TypeScript, and CSS benefit from enhancements to their existing RAG functionality. Finally, local code completion is now available for HTML.

Thanks to these improvements, suggestions appear faster across all languages, making for a smoother coding experience. Best of all, local code completion is included free of charge in your IDE, so you can start using it right away.

Streamlined in-editor experience with inline AI prompts

The new inline AI prompt feature in AI Assistant lets you enter prompts directly in the editor. Just start typing your request in natural language, and AI Assistant will recognize it and generate a suggestion. Inline AI prompts are context-aware, automatically including related files and symbols for more accurate code generation. This feature supports Java, Kotlin, Scala, Groovy, JavaScript, TypeScript, Python, JSON, YAML, PHP, Ruby, and Go files, and is available to all AI Assistant users.

We've also made applied changes easier to spot: a purple mark now appears in the gutter next to lines changed by AI Assistant, so you can see at a glance what has been updated.

Make multiple updates across a file easily

AI Assistant now offers file-wide code generation, which can modify several sections of a file in one pass, including adding necessary imports, updating references, and defining missing declarations. Currently available for Java and Kotlin, it is triggered by the Generate Code action when nothing is selected in the editor.

Get instant answers about IDE features and settings in AI Chat

Say goodbye to searching through settings or documentation! With the new /docs command, you can now access documentation-based answers directly in the AI chat. Simply ask AI Assistant about a feature, and it will provide interactive step-by-step guidance.

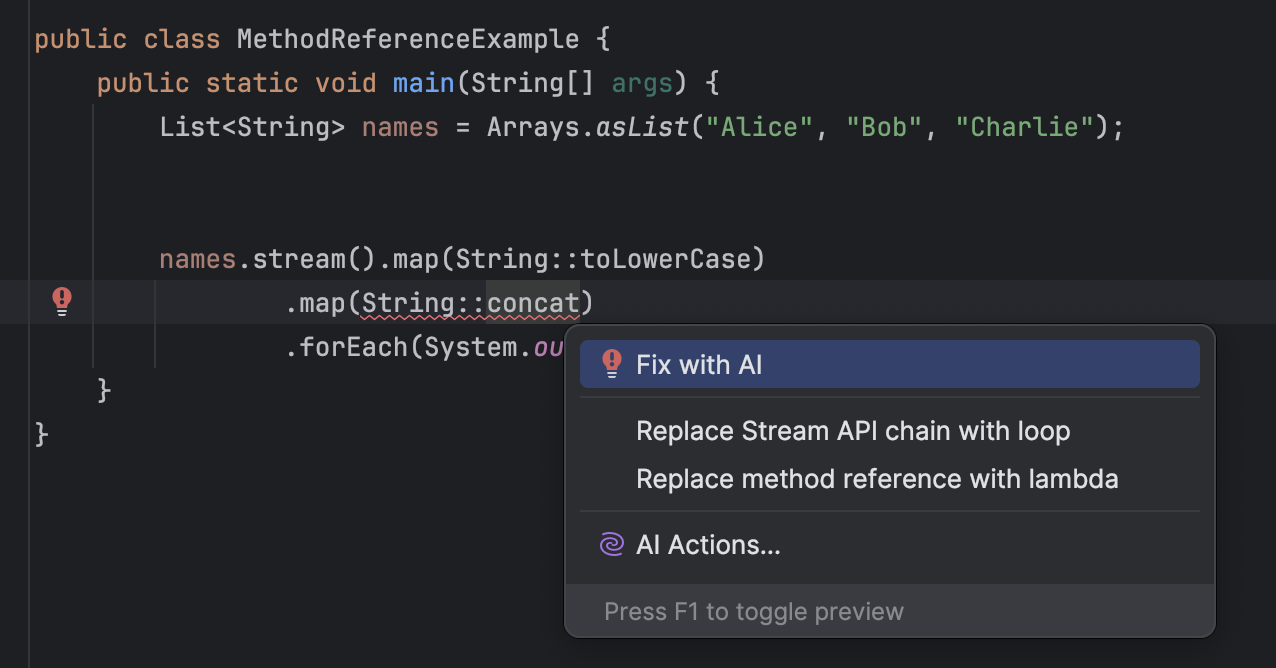

AI-powered quick-fix for faster error resolution

When a JetBrains IDE inspection flags a problem – whether it's a syntax error, missing import, or something else – it suggests a quick-fix directly within the editor. With the latest update, Fix with AI takes this a step further. It uses AI Assistant's awareness of your code context to suggest fixes that are more precise and better suited to your specific situation, so you can resolve coding problems faster and with less manual work.

Explore AI Assistant and share your feedback

Explore these updates and let AI Assistant streamline your development workflow even further. As always, we look forward to hearing your feedback. You can tell us about your experience via the Share your feedback link in the AI Assistant tool window, or submit feature requests and bug reports in YouTrack.

Happy developing!
