JetBrains AI


How Do LLMs Benefit Developer Productivity?

LLMs are everywhere! I am sure you have heard of large language models (LLMs) and may have seen demonstrations of what they can do. You may have even tried some of the popular ones yourself. Whatever your opinion of or experience with LLMs, you cannot deny that they are a popular topic these days, and that the influence of LLMs and other AI assistance keeps growing.

The breakthrough of LLMs is that they allow computers to analyze and understand human language. Before the popularization of LLMs, computers could only receive instructions rigidly, usually via preset commands or a fixed user interface. Sometimes, they also required you to develop certain skills to get the machine to work correctly. With LLMs, however, the user interface becomes more flexible. You can tell the machine to get the job done in the same way you would communicate with another human being. For example, you can now simply ask a smart home device to play some music, and many tasks that seemed impossible for computers have become possible. These tasks require the capability to understand natural language and construct a meaningful response, for example, to chat with users, generate text, or summarize information.

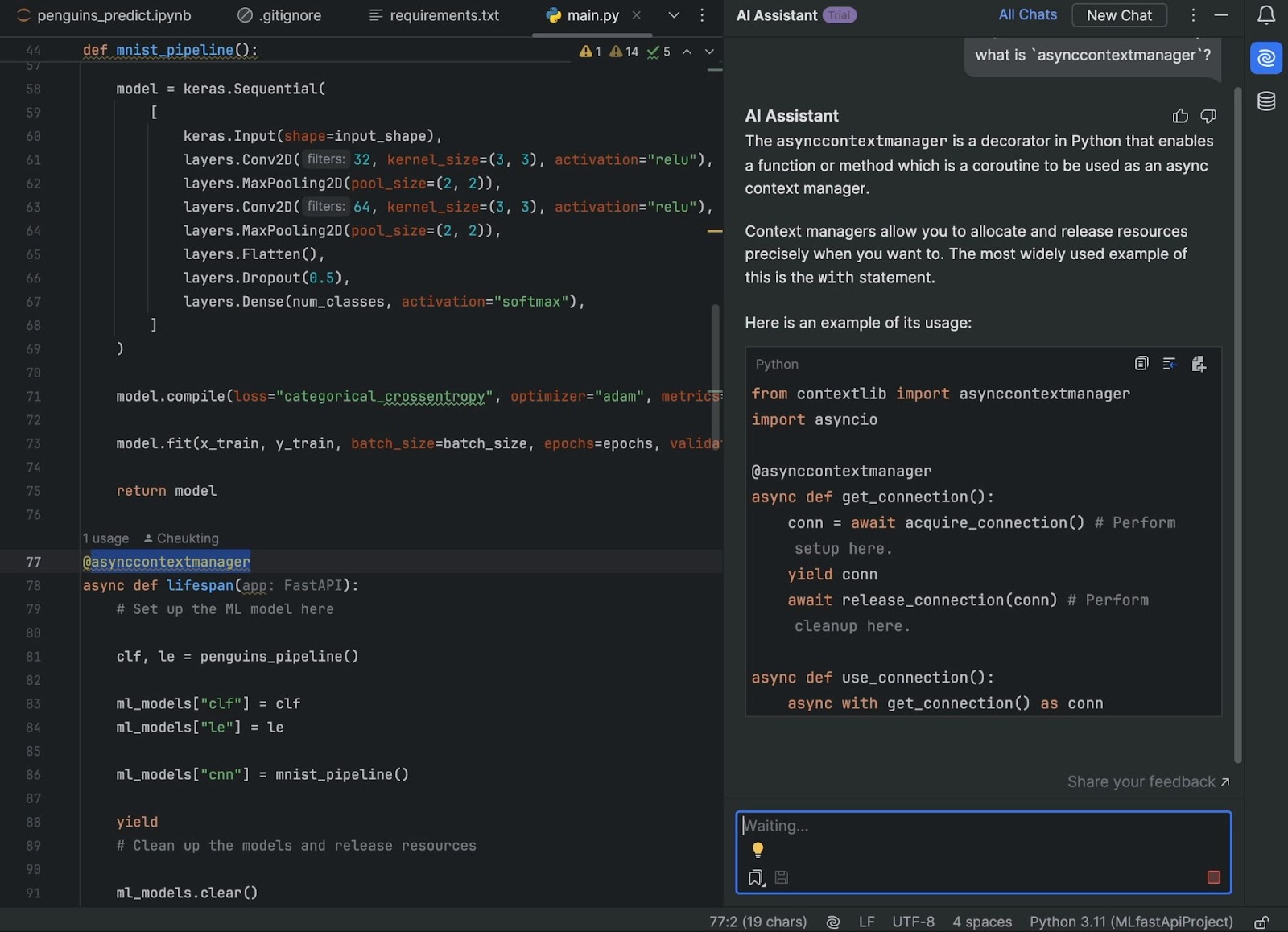

LLMs are not limited to natural language. Coding assistants are now available that can understand and analyze programming languages, allowing them to help developers write and understand code. One such tool that harnesses the power of LLMs and helps developers in their day-to-day work is JetBrains AI Assistant.

LLM basics – what are they?

Although LLMs seem very powerful, they are not magic. They are the fruit of AI researchers’ labor and are based on math and science. To understand how LLMs work, let’s go through some of the basics.

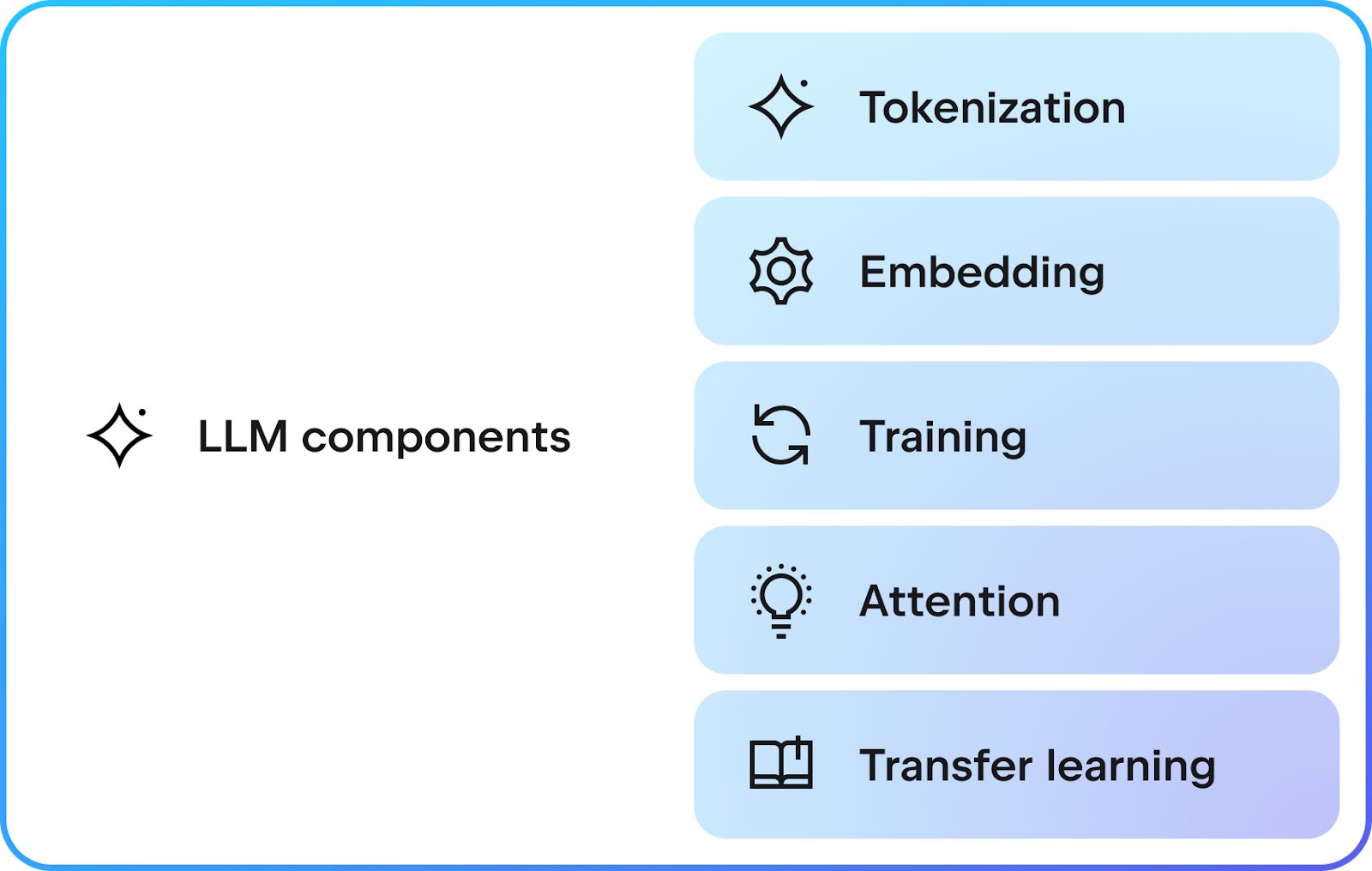

The foundation of LLMs is natural language processing (NLP). Since computers operate on numbers, we have to convert natural language into a mathematical format that a machine can process. Researchers in NLP analyze languages and convert sentences into tokens (tokenization), which are in turn converted into vectors (embedding). By providing many examples in the form of training data (training), the researchers make it possible for the computer to pick up natural language patterns and to weigh the context around each token (attention).
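To make these steps more concrete, here is a minimal sketch in plain Python. It assumes a tiny hand-written vocabulary and hand-picked two-dimensional embedding values, all of which are hypothetical. Real models learn their embeddings during training and use learned query, key, and value projections for attention, which this toy version leaves out.

```python
import math

# Toy vocabulary and hand-picked 2-dimensional embeddings (hypothetical values;
# a real model learns these vectors during training).
VOCAB = {"play": 0, "some": 1, "music": 2, "<unk>": 3}
EMBEDDINGS = [
    [1.0, 0.0],  # play
    [0.0, 1.0],  # some
    [1.0, 1.0],  # music
    [0.0, 0.0],  # <unk> (any word missing from the vocabulary)
]


def tokenize(sentence):
    """Tokenization: split on whitespace and map each word to an integer ID."""
    return [VOCAB.get(word, VOCAB["<unk>"]) for word in sentence.lower().split()]


def embed(token_ids):
    """Embedding: look up the vector that belongs to each token ID."""
    return [EMBEDDINGS[token_id] for token_id in token_ids]


def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def attention_weights(vectors, query_index):
    """Attention (simplified): scaled dot-product scores of one token
    against every token in the sentence, normalized with softmax."""
    query = vectors[query_index]
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in vectors]
    return softmax(scores)


token_ids = tokenize("Play some music")
vectors = embed(token_ids)
weights = attention_weights(vectors, query_index=2)

print(token_ids)  # [0, 1, 2]
print([round(weight, 3) for weight in weights])
```

The weights tell us how much the token "music" attends to each token in the sentence, including itself. In a real LLM, this computation runs over thousands of dimensions and many layers, but the basic idea of turning words into numbers and comparing them is the same.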

The amount of data required for training varies depending on the purpose of the model. If the scope of what the model is trying to achieve is narrow, the training set may not need to be as large as it would be for a general-purpose model. For example, a model designed for tasks involving medical terms and concepts will benefit from a smaller but more specific data set.

To train a general-purpose model that can handle various contexts, a larger neural network and training dataset are necessary. These heavier requirements may make training a general-purpose model seem hard. However, thanks to decades of information stored on the internet and advances in computer processing power, there are now complex LLMs that are trained with huge amounts of data and perform well in general tasks.

However, for specific tasks like understanding code, we need to reinforce these LLMs by providing code examples for transfer learning. Furthermore, since technology is evolving every day – code can be deprecated relatively quickly, for example – these models also need to be retrained with new knowledge and code examples to stay up to date.

All LLMs can hallucinate, which is when they produce results that seem linguistically coherent and grammatically correct but that don’t make sense or even contain bias. In the most extreme cases, the LLMs may provide results that are totally fabricated. Why does this happen? LLMs are based on mathematics: the neural network in the model, which is represented as a huge numeric matrix, computes a result in the form of numbers, which are then translated back into language that humans can understand. Most of the time, with a well-trained model, these results reflect what the model learned during training and provide reliable answers. However, sometimes, the complexity of the models introduces a significant amount of noise via the huge number of weights in the network, leading to hallucinations. The danger of LLM hallucinations is that misleading information is presented to users as fact. At this point, it is the user’s responsibility to double-check and to determine whether to trust what the LLM provided.
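The step of translating numbers back into language can be sketched as follows. This is a simplified illustration, not how any particular model is implemented: the three-word vocabulary and the raw scores are hypothetical, and real models pick from tens of thousands of tokens, often with some randomness.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(logits)
    exps = [math.exp(value - highest) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def decode(logits, vocabulary):
    """Pick the most probable word and report its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return vocabulary[best], probs[best]


# Hypothetical vocabulary and scores, for illustration only.
vocabulary = ["Paris", "Lyon", "Berlin"]

confident = decode([5.0, 1.0, 0.5], vocabulary)  # one score clearly dominates
uncertain = decode([1.1, 1.0, 0.9], vocabulary)  # scores are nearly tied

print(confident)
print(uncertain)
```

Both calls return the same word, yet in the second case the model is barely more sure of it than of the alternatives. The text that reaches the user reads equally fluent either way, which is one reason why a wrong answer can look just as convincing as a right one.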

JetBrains AI as a tool

Now that we understand what LLMs are, it should be clear that they can be great tools in our day-to-day development workflow. Here are a couple of ways that JetBrains AI Assistant can be used to help you in your work.

Use prompts to get refactoring suggestions

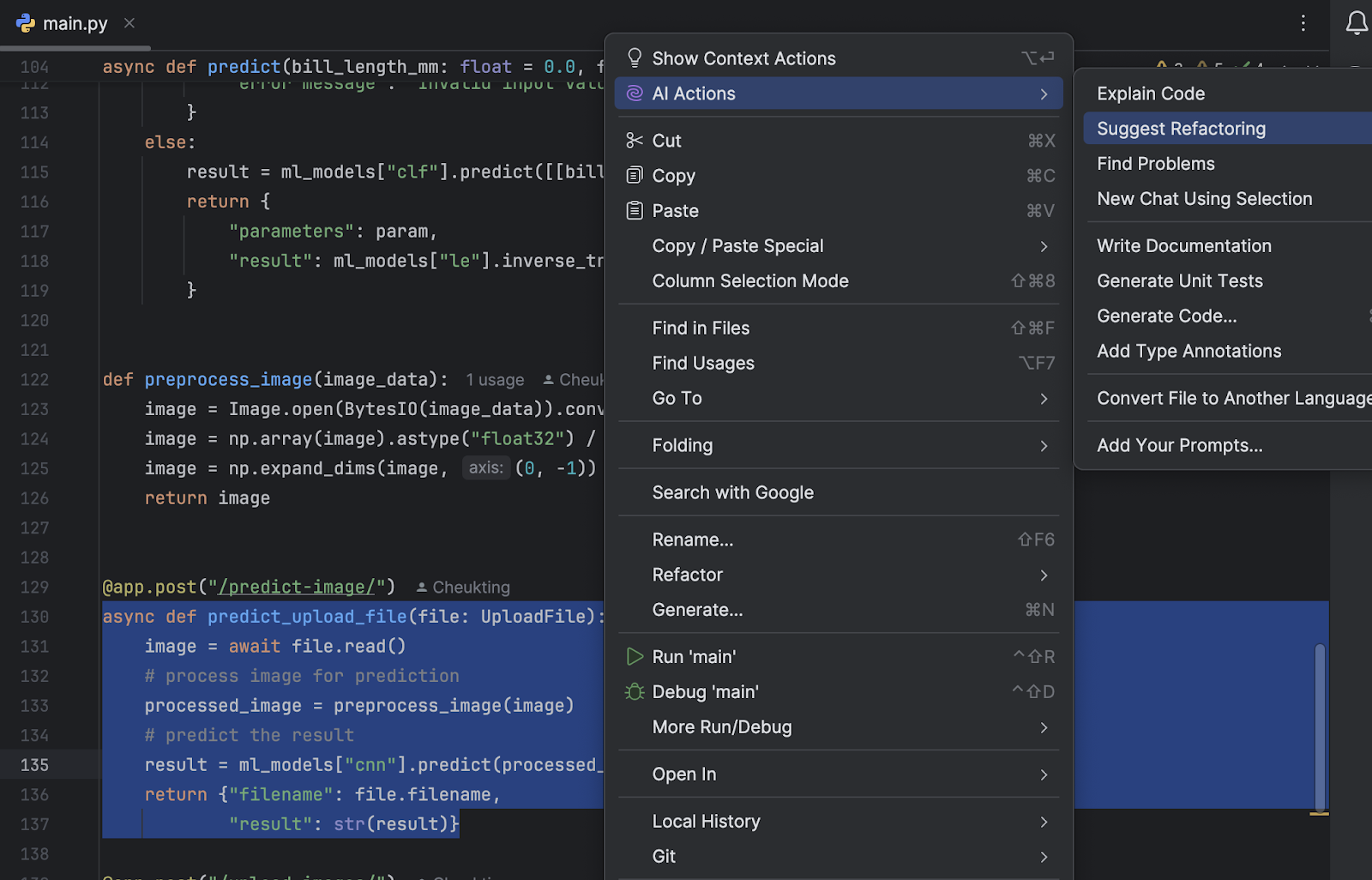

Sometimes, we need to refactor code when a new design comes in, or it becomes necessary to tidy up old code to improve readability as a project grows. This can be a challenging and time-consuming task, especially since it can introduce bugs. JetBrains AI Assistant streamlines this work for you, providing refactoring suggestions to increase the readability of your code.

Simply highlight the part of the code you want to refactor and use the keyboard shortcut Alt+Enter (Windows/Linux) or ⌥⏎ (macOS). AI Assistant will then suggest a refactoring for you.

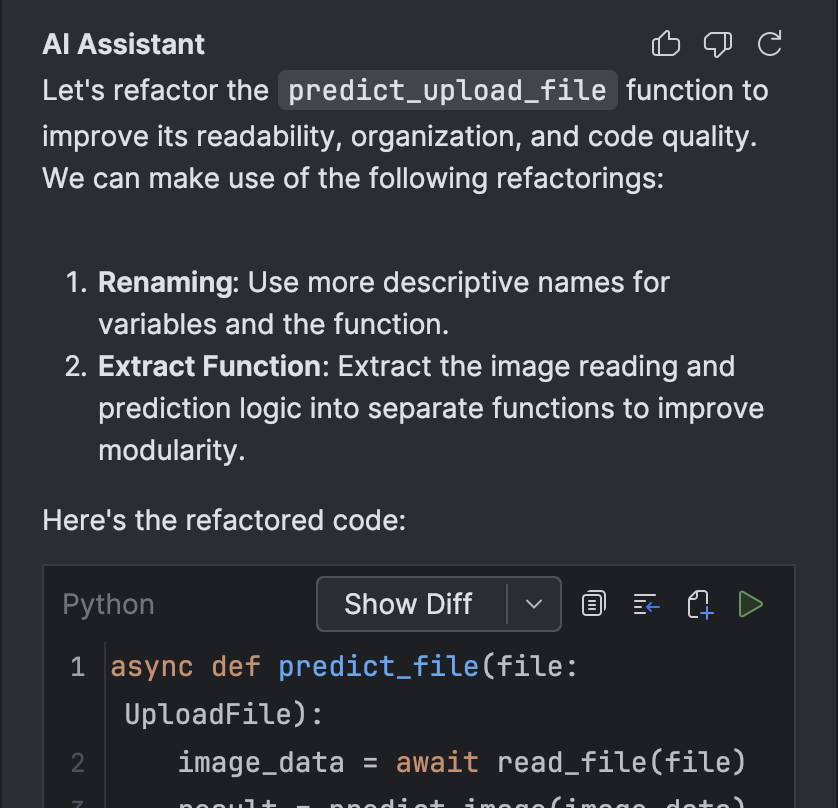

The prompt passed to AI Assistant will appear in a dedicated tool window, along with a step-by-step explanation of the suggestion. If you would like AI Assistant to create a new suggestion with another prompt, you can do so here.

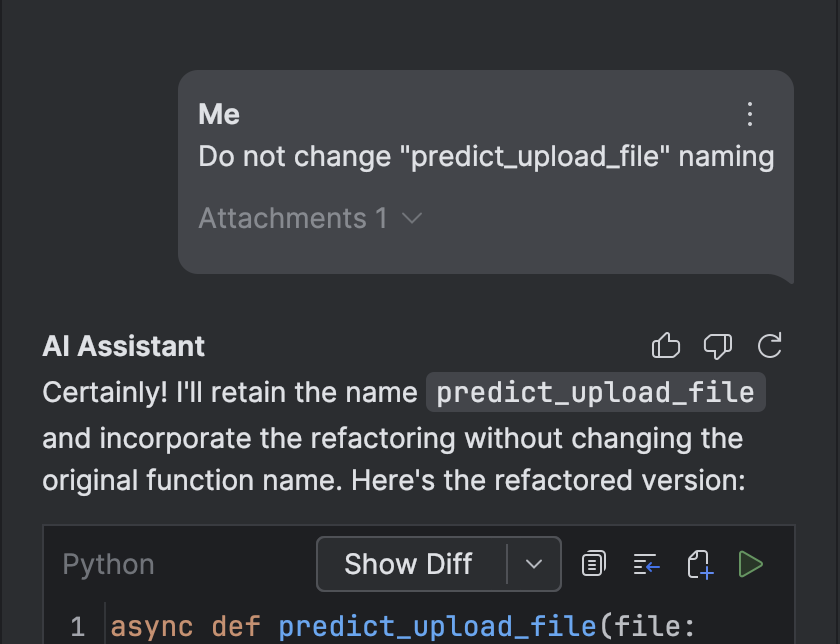

You can also fine-tune the suggestion if you are not happy with it. For example, here we want to keep the function name so that it stays consistent throughout the code file, so we simply say “Do not change ‘preduct_upload_file’ naming”.

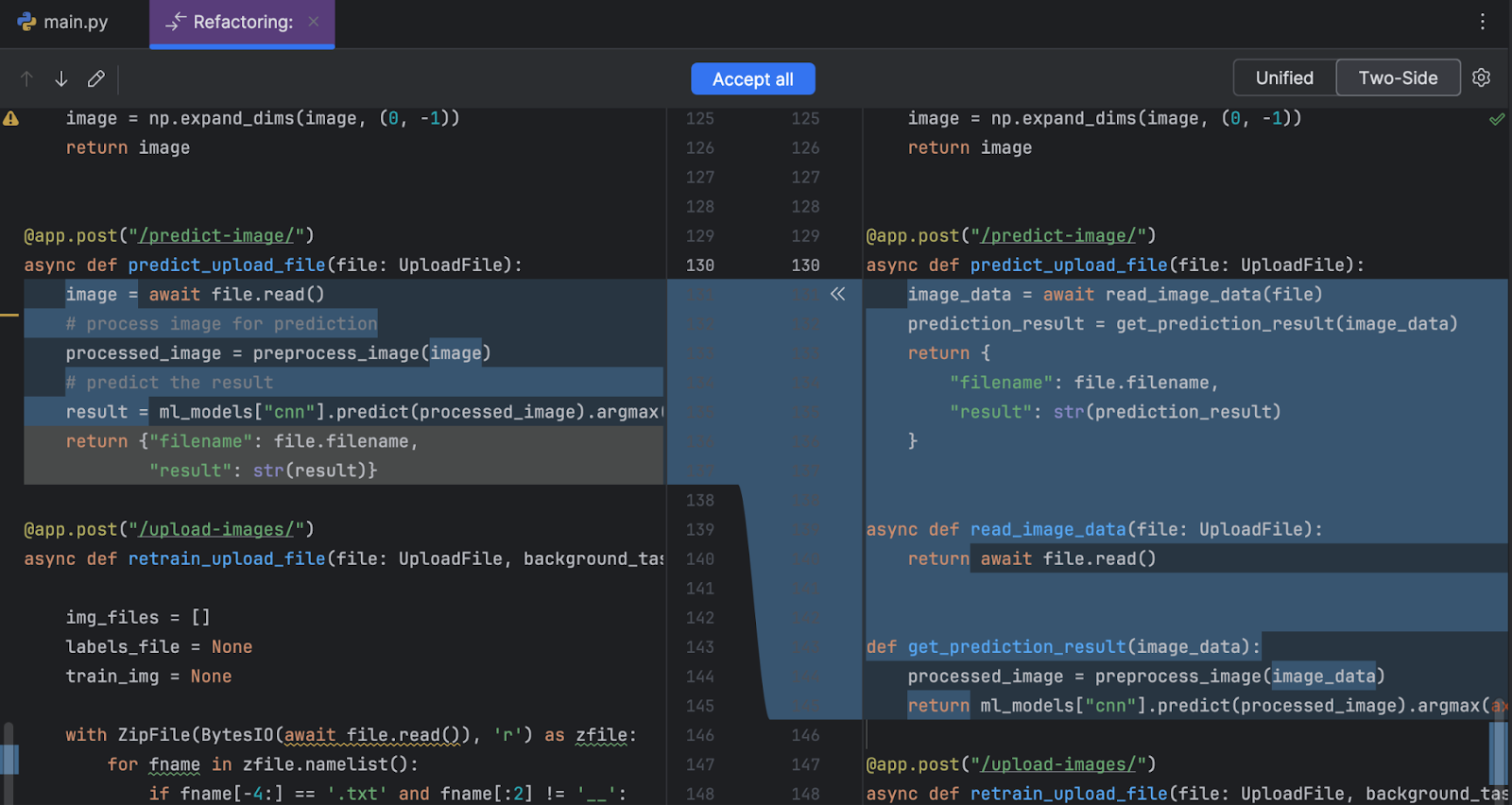

Once you are happy with the suggestion, you can use the Show Diff button to see the suggested refactorings next to the original code. This makes it easy to review all the suggestions at once and finalize the changes.

Generate tests with AI

Although important, writing tests may not be the most exciting task. Even with the help of tools like code coverage, sometimes tests can be poorly written and unable to capture potential bugs or points of failure. With the help of JetBrains AI, you can focus on reviewing the functionality of suggested unit tests rather than spending energy writing them.

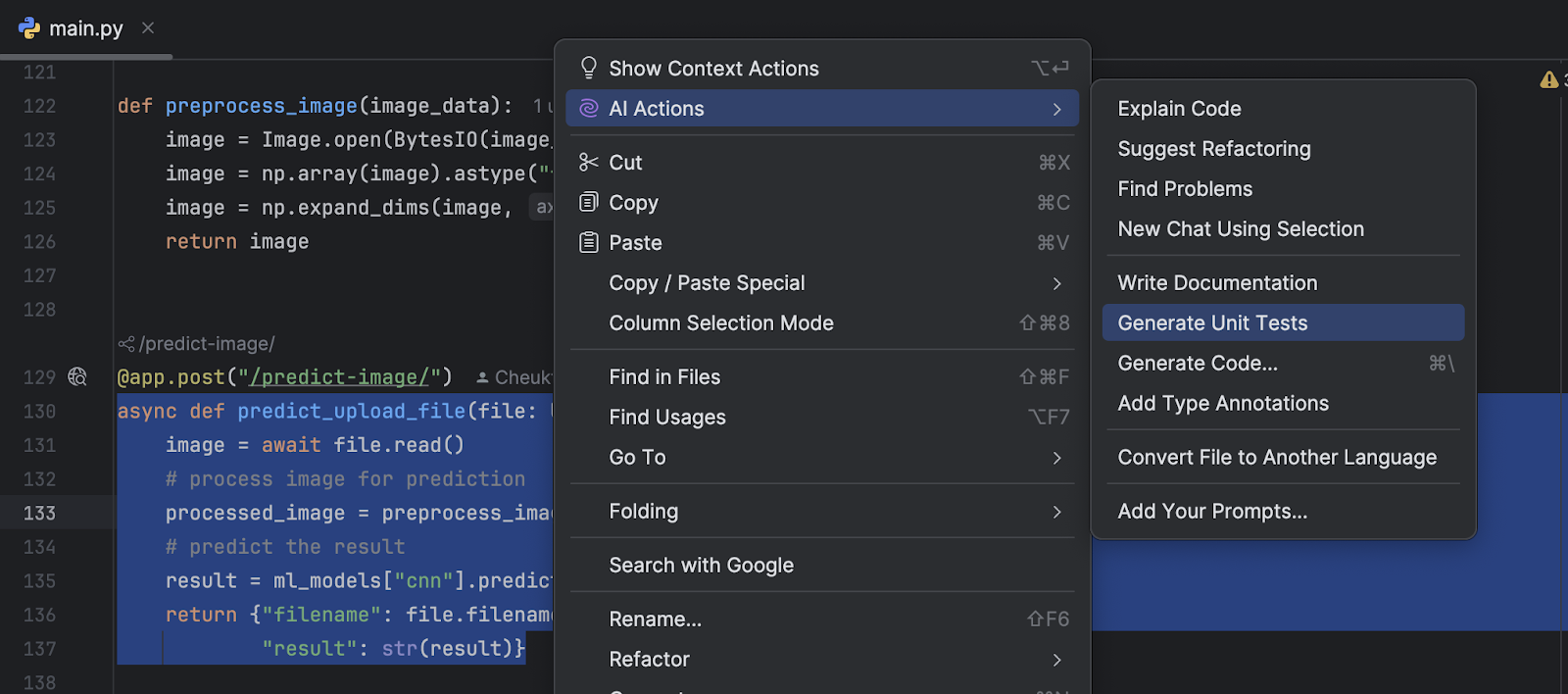

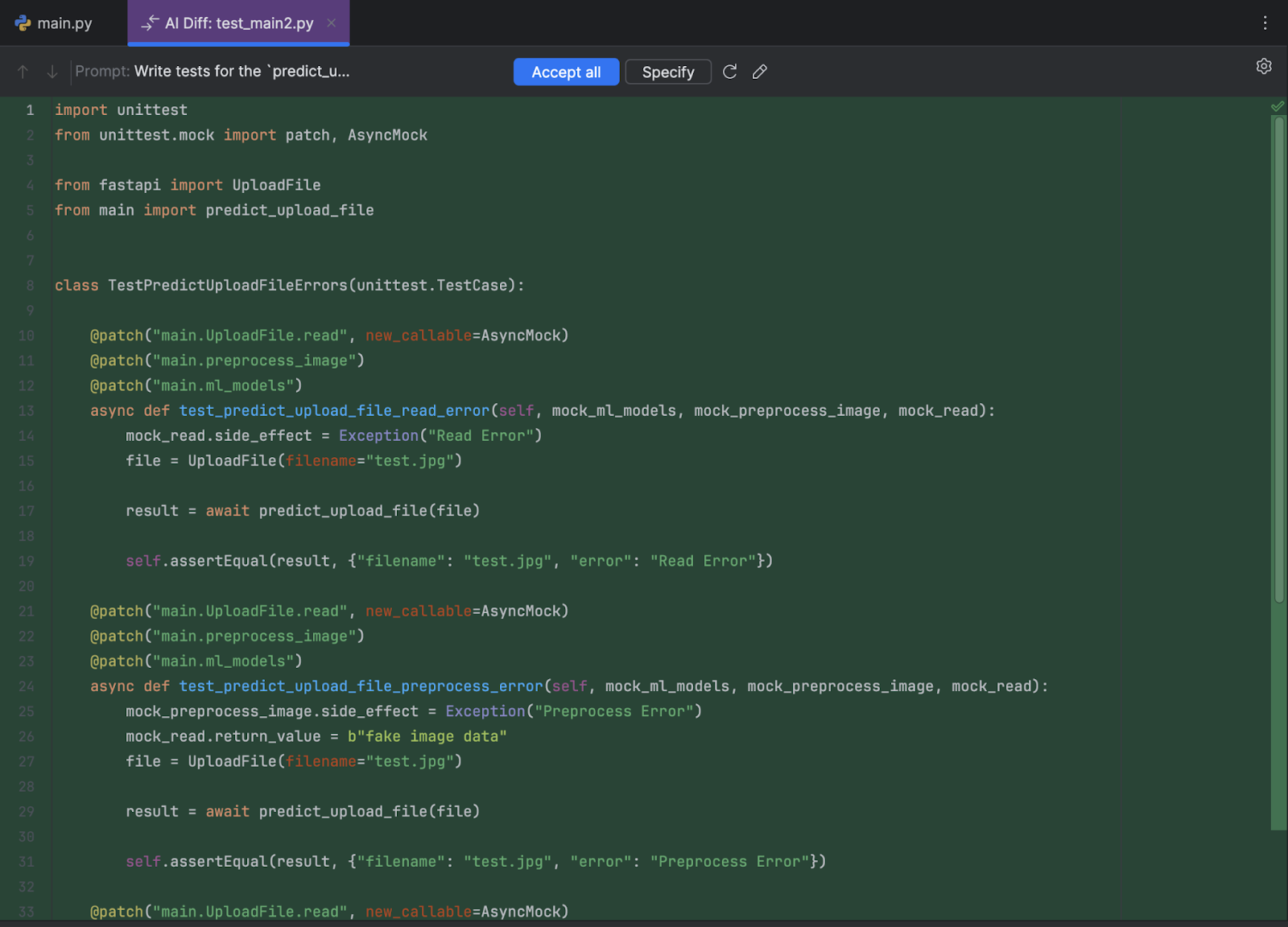

To do that, highlight the code (usually a function), right-click on it, and select Generate Unit Tests from the AI Actions menu.

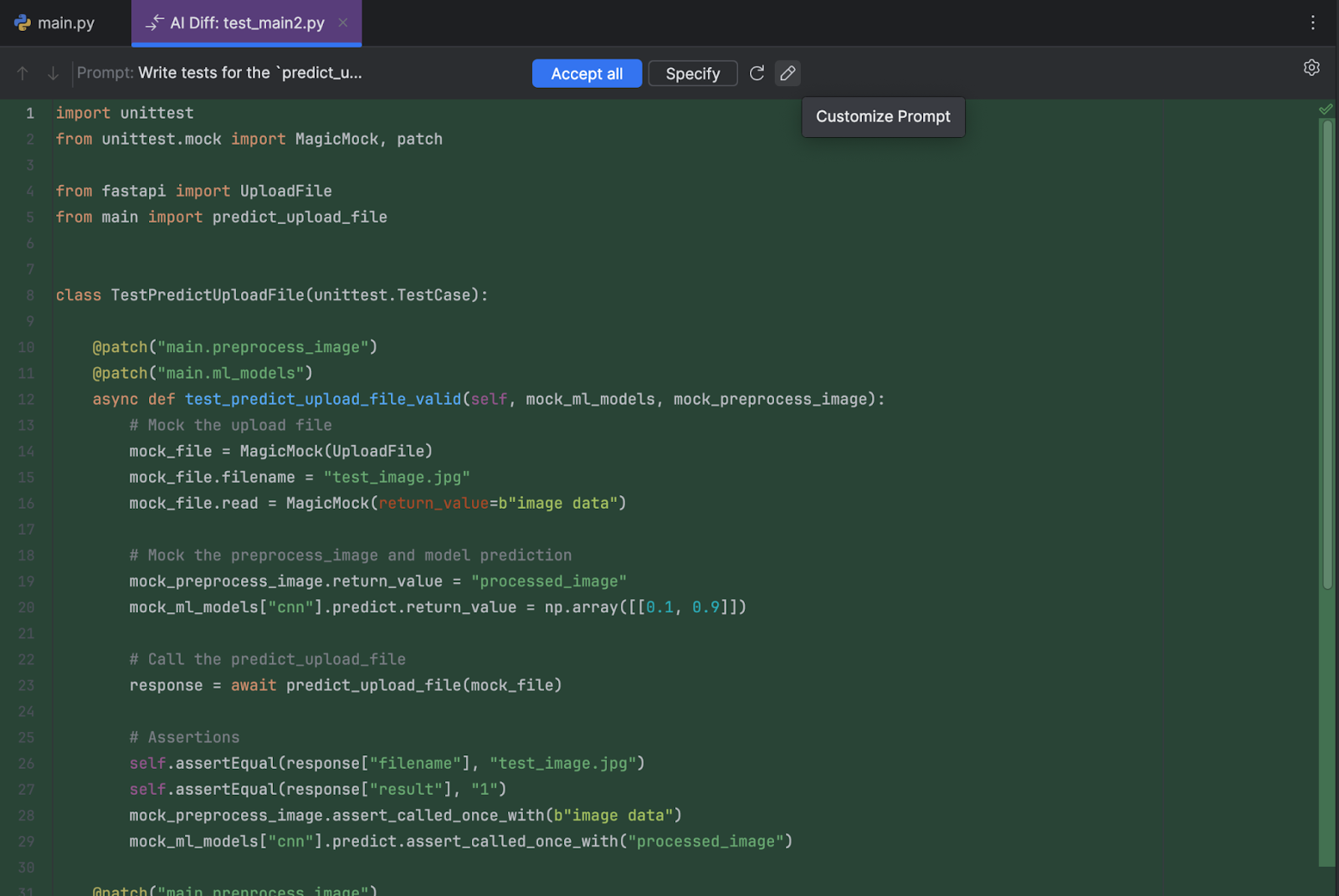

You will see the generated unit tests in a new test file, and they can be reviewed and changed at any time.

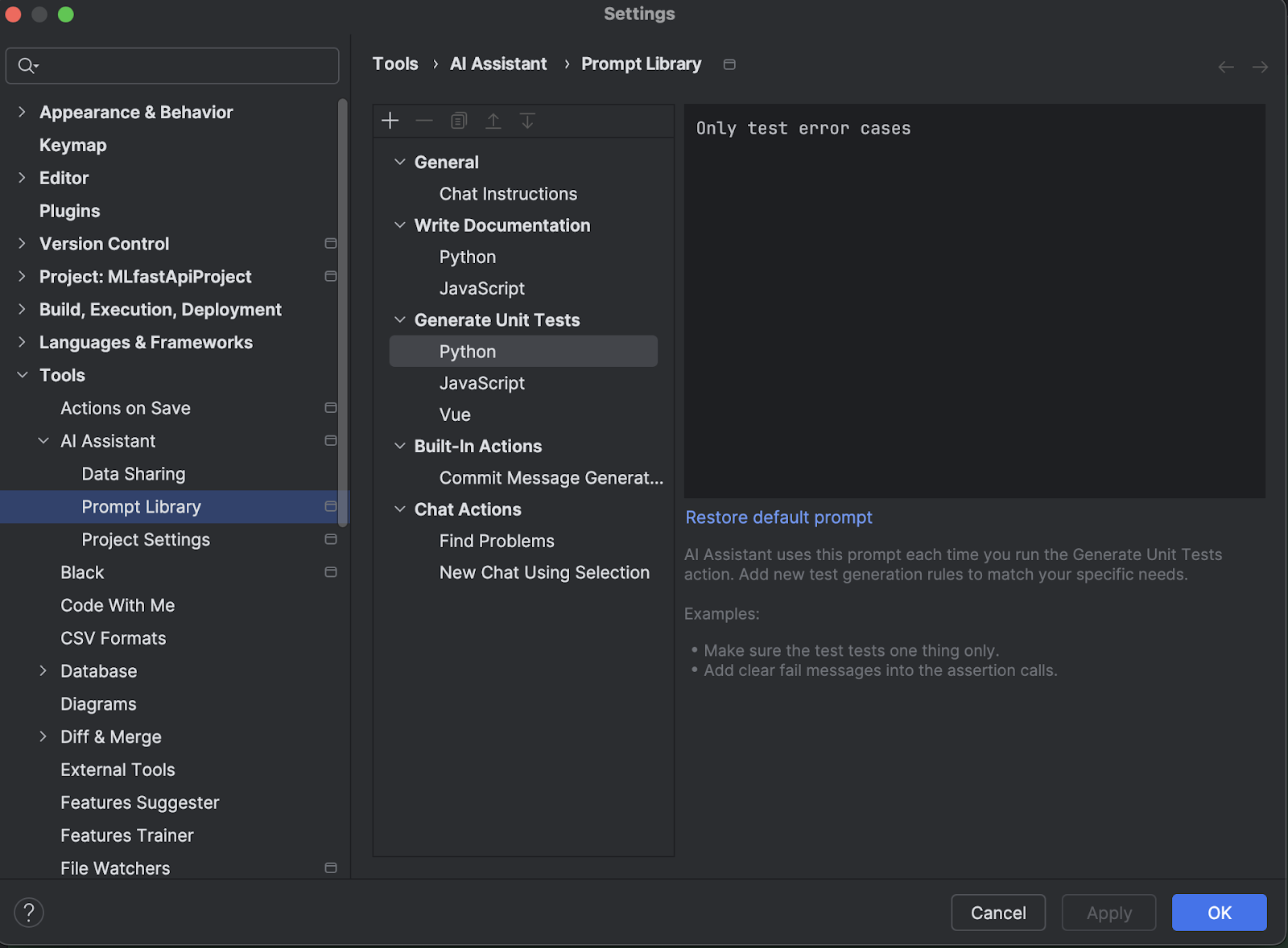

You can also customize the prompt from the Prompt Library in the AI Assistant settings. To access the settings, use the keyboard shortcut Ctrl+Alt+S (Windows/Linux) or ⌘, (macOS). For example, we could specify that we only want to test error cases. After we update the prompt, the generated tests cover only error cases.
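To give a sense of what error-case-only tests look like, here is a hand-written sketch. It is not actual AI Assistant output: the function `parse_port` and the test class are hypothetical and exist only to illustrate the kind of tests you would be reviewing.

```python
import unittest


def parse_port(value):
    """Hypothetical function under test: convert a string to a TCP port number."""
    port = int(value)  # raises ValueError for non-numeric input
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    return port


class ParsePortErrorTests(unittest.TestCase):
    """Only error cases are covered, mirroring the customized prompt."""

    def test_non_numeric_input_raises(self):
        with self.assertRaises(ValueError):
            parse_port("http")

    def test_port_zero_raises(self):
        with self.assertRaises(ValueError):
            parse_port("0")

    def test_port_above_range_raises(self):
        with self.assertRaises(ValueError):
            parse_port("70000")


# Run the suite programmatically so the result can be inspected.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ParsePortErrorTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

When reviewing tests like these, check that each one fails for the reason its name claims, and remember that the happy path still needs coverage from somewhere else.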

Once you are happy with the generated tests, click Accept all. You can manually change any of the code generated afterward as well.

Generate commit messages

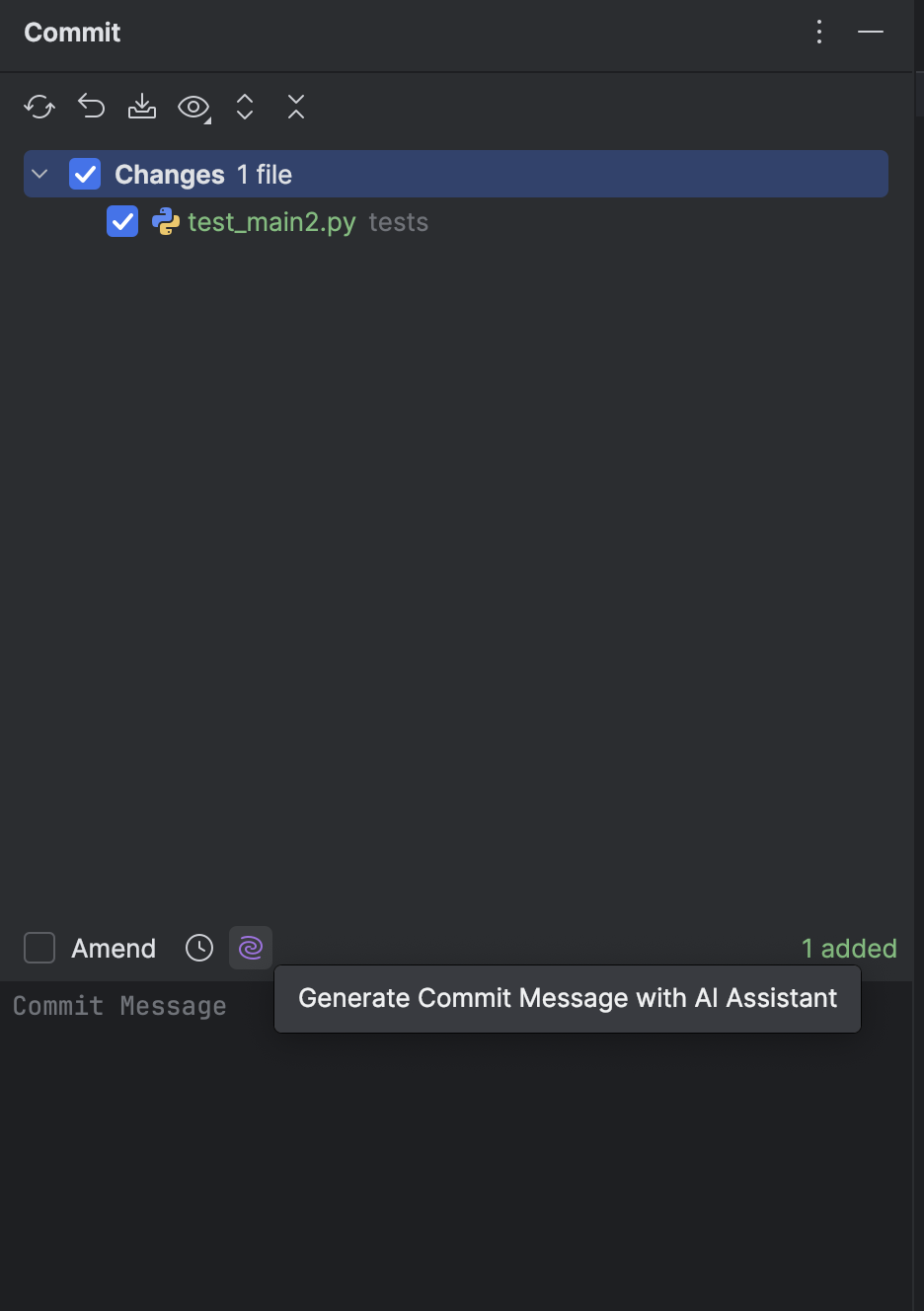

JetBrains AI Assistant can also make your life easier by automatically generating commit messages for you. Commit messages are important for documenting and communicating with the wider team regarding changes. It is important to make your commit messages consistent and communicate the changes effectively.

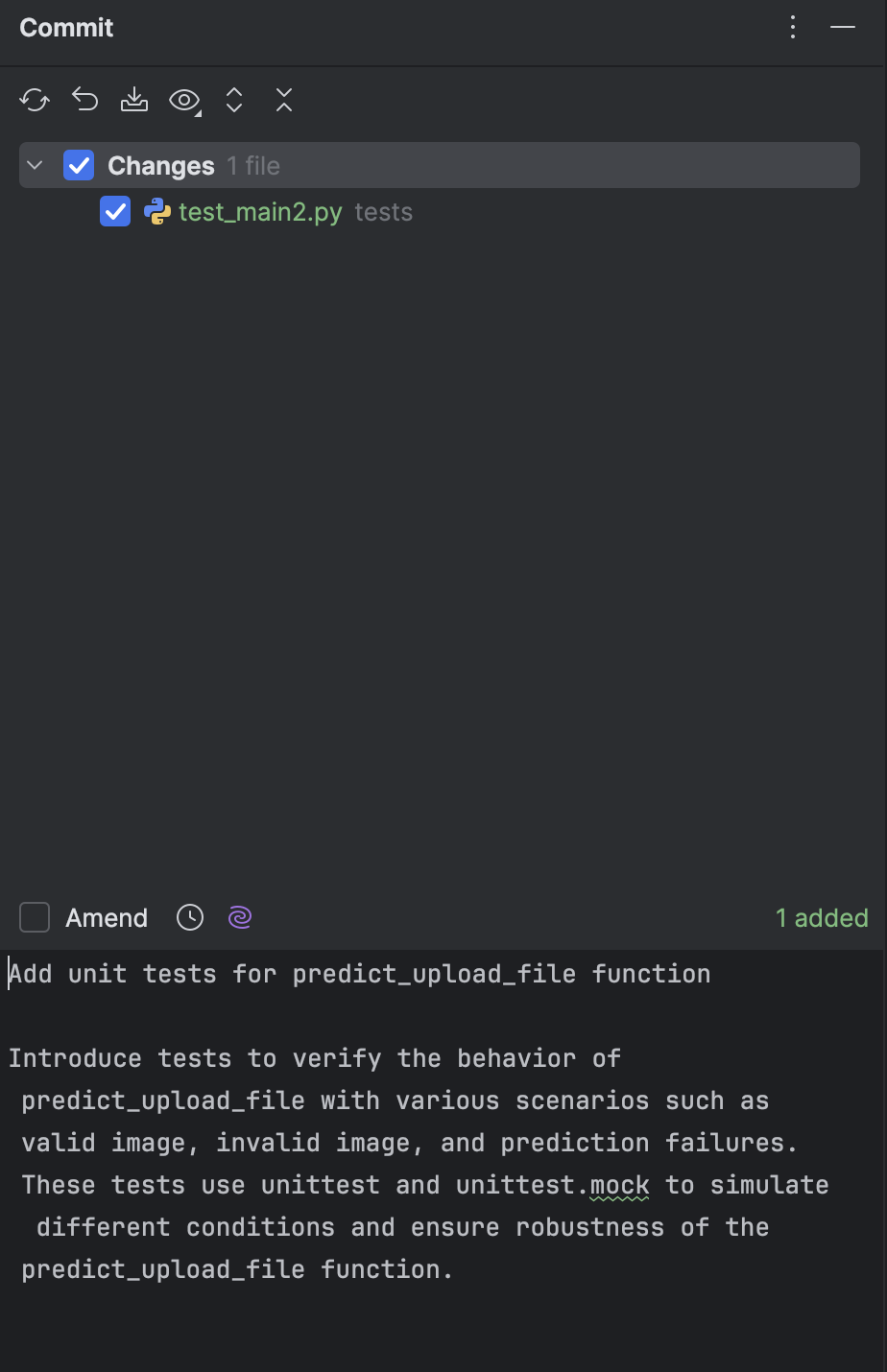

When committing your work, simply click on the icon to Generate Commit Message with AI Assistant and a commit message will be generated for you.

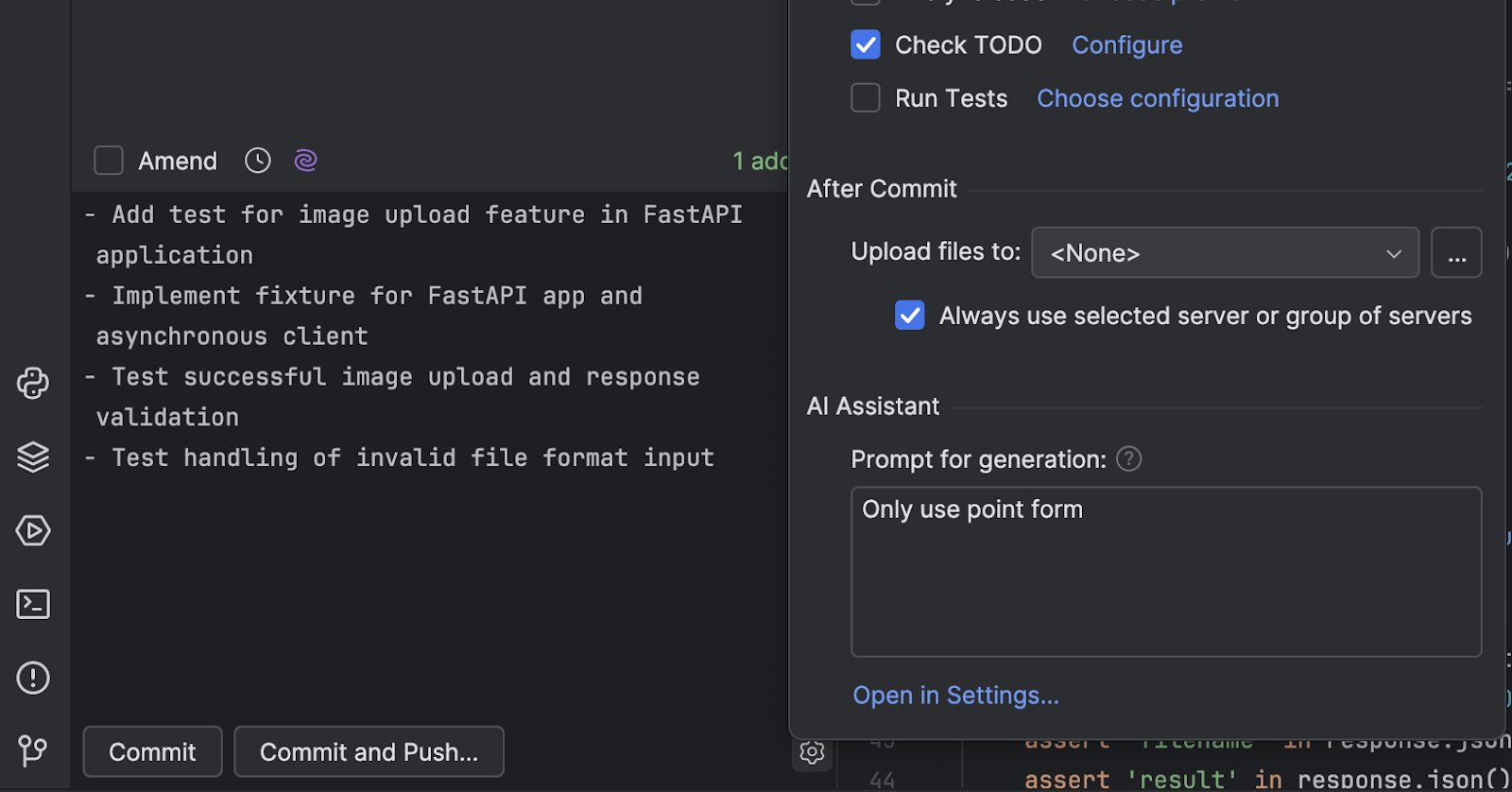

If you want to customize the style of the message, or even share the prompt you used with the team so the style stays consistent, you can click on the little cog icon at the bottom. At the bottom of the pop-up menu that appears, you will find the Prompt for generation box, where you can type in your own custom prompt to implement your desired style. In this case, we asked AI Assistant to “Only use point form”.

After changing the prompt, click the AI Assistant icon to regenerate the commit message. You can see that it then adapts to the new prompt instructions.

By asking JetBrains AI Assistant to take care of composing commit messages and applying specific rules for commit message generation, we standardize the style of the commit messages, ensuring consistency within the project.

Cautions about data privacy

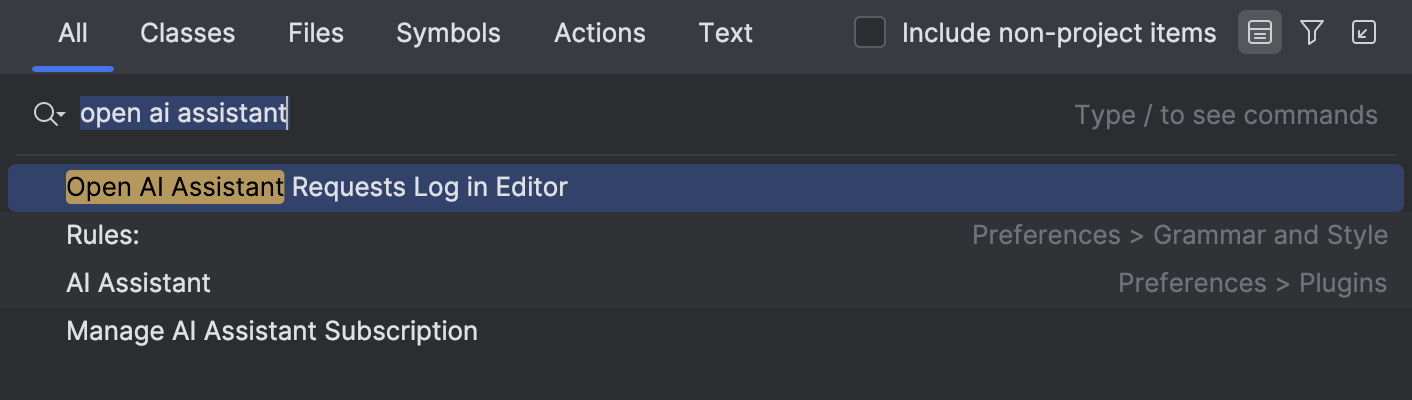

As you can imagine, the backbone of these LLMs and AI models is the training data. As acquiring huge amounts of data becomes more important for LLM researchers, data privacy becomes a greater concern. JetBrains AI never uses your data to train any ML models that generate code or text, and you have full control of your data. For details, you can read the Data Collection and Use Policy of JetBrains AI. You can review the data sent to external services at any time by searching for “Open AI Assistant Requests Log” in Search Everywhere.

You can also control how your data is shared in the Data Sharing options in Settings.

Conclusion

I hope this article has helped you understand how LLMs work and the benefits of using them in your work with JetBrains AI. The next time you are writing some unit tests, refactoring your code, or committing your work, perhaps you will give AI Assistant a try and see if it fits well into your workflow. It might even become an indispensable tool for you.

However, LLMs do not take away our responsibility. We must apply our knowledge and judgment and avoid being misled by occasional hallucinations.

Not only does JetBrains AI Assistant give you suggestions from LLMs, but it also empowers you to customize the prompts you give to them. This means you can truly use JetBrains AI Assistant as a tool that expands your creativity as a developer, making you and your team more productive.
