JetBrains AI


When Tool-Calling Becomes an Addiction: Debugging LLM Patterns in Koog

I was testing my agent built on Koog, JetBrains’ open-source framework for building AI agents in Kotlin. I fed it a task from SWE-bench Verified, a benchmark of real-world GitHub issues that tests whether AI can actually write code.

For the first 100 messages, everything looked promising. The agent methodically explored the codebase, identified bugs, wrote test cases, and attempted fixes. But as the conversation grew, it hit a fundamental limitation: the context window.

Every LLM has a maximum context size (the total amount of text it can process simultaneously). When your agent’s conversation history approaches this limit, you need to compress it somehow. Simply truncating old messages loses critical information, while generic summarization often misses essential details.
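To make the "approaching the limit" check concrete, here is a minimal sketch of how a compression trigger could look. It is not Koog's implementation: `ChatMessage`, `estimateTokens`, and `shouldCompress` are hypothetical names, and the four-characters-per-token ratio is only a common rule of thumb for English text. A real agent would use the model's own tokenizer.

```kotlin
// Hypothetical message type for illustration; not Koog's API.
data class ChatMessage(val role: String, val content: String)

// Rough heuristic (assumption): about 4 characters per token for English text.
// Real tokenizers differ by model, so treat the result as an estimate only.
fun estimateTokens(text: String): Int = (text.length + 3) / 4

// Sums the per-message estimates for the whole conversation history.
fun estimateHistoryTokens(history: List<ChatMessage>): Int =
    history.sumOf { estimateTokens(it.content) }

// Returns true once the estimated history size reaches a fraction of the
// context window, leaving headroom for the model's next response.
fun shouldCompress(
    history: List<ChatMessage>,
    contextWindowTokens: Int,
    threshold: Double = 0.8,
): Boolean = estimateHistoryTokens(history) >= contextWindowTokens * threshold
```

The threshold is deliberately below 1.0, because the compression request itself also has to fit into the window.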

Koog’s approach is more sophisticated. It extracts facts according to specific concepts you define, replacing the entire conversation history with these distilled insights:

    RetrieveFactsFromHistory(
        Concept(
            "project-structure",
            "What is the project structure of this project?",
            FactType.MULTIPLE
        ),
        Concept(
            "important-achievements",
            "What has been achieved during the execution of this current agent",
            FactType.MULTIPLE
        ),
        Concept(
            "pending-tasks-and-issues",
            "What are the immediate next steps planned or required? Are there any unresolved questions, issues, decisions to be made, or blockers encountered?",
            FactType.MULTIPLE
        )
        // ... more concepts
    )

When compression was triggered, the agent should have continued working with extracted facts like “The bug is in sympy/parsing/latex/_parse_latex.py” and “Tests are located in sympy/parsing/tests/”. Instead, it started the entire task from scratch, as if suffering from complete amnesia.

Something was broken in our compression logic, so it was time to debug.

The investigation

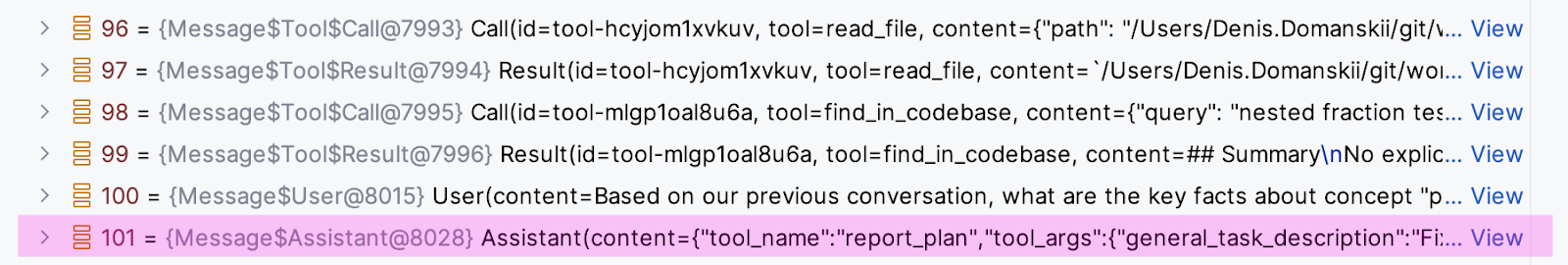

First, I looked at what we were sending to the LLM using our fact extraction logic. After ~100 chat messages of tool calls and results, I found the following:

User: Based on our previous conversation, what are the key facts about "project-structure" (What is the structure of this project?)

This was our initial implementation, and it was probably the most obvious approach – just ask the LLM to extract facts. Simple, right?

We should’ve gotten a summary, but instead we got this:

Assistant: tool_name=report_plan(...)

It was not a summary, but another tool call. Suddenly, the amnesia made sense. Instead of useful facts, the compressed history contained gibberish like tool_name=report_plan(...). No wonder the agent started over – it had no meaningful information about its previous work.

Ask for a summary, and the model responds with another tool call.

That raised a more disturbing question: Why was the LLM responding with tool calls when I explicitly asked for a summary?

My first thought was that maybe the available tools were confusing it. I tried sending an empty tool list to explicitly prevent any tool calls.

The response?

Assistant: Tool call: read_dir("./src")

It was calling tools that didn’t even exist anymore.

Things were quickly getting weird. Maybe the system prompt was the culprit? My original agent system prompt read, “You’re a developer. Solve the user’s task, write tests, etc.” Could it be that this was overriding the summarization request?

I tried making the summarization instruction the system prompt and demoting the original system message to a user message (as you can’t have two system messages).

But the tool calls just kept coming.

Then I stopped to take a proper look at the message pattern:

System: You are a developer, help the user complete their task...

User: Latex parsing of fractions yields wrong expression...

Tool call: read_dir(".")

Tool result: [directory contents]

Tool call: read_file("./README.md")

Tool result: [file contents]

Tool call: write_file("./test/reproduce.py", ...)

Tool result: File created successfully

Tool call: run_command("python -m pytest")

Tool result: Tests failed

[... 96 more tool calls and results ...]

User: Summarize the conversation above focusing on the following...

Then it hit me – it wasn’t ignoring my instructions. After 100 examples of the pattern Tool call → Tool result → Tool call → Tool result, the model had learned that this conversation had exactly one acceptable response format: more tool calls.

The pattern prison

Few-shot learning is one of our most powerful prompt engineering techniques. You show the model a few examples of input-output pairs, and it learns the pattern. We use it deliberately all the time.

But we’d created an implicit few-shot example without meaning to. Think about it from the model’s perspective. It’s seen 100 consecutive examples of:

- Receive a message

- Respond with a tool call

- Receive a tool result

- Respond with another tool call

By message 101, this pattern was so deeply reinforced that even explicit contrary instructions couldn’t break through. The model’s attention mechanism – the system determining what parts of the context to focus on – appeared to lock almost entirely onto the tool-calling pattern.
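One rough way to see how lopsided such a history is: measure the longest unbroken run of tool messages. The sketch below is purely diagnostic and rests on assumptions. `Kind` and `longestToolRun` are hypothetical names, not part of Koog, and no particular run length is known to be the point where a model stops following instructions.

```kotlin
// Hypothetical message kinds for illustration; not Koog's API.
enum class Kind { SYSTEM, USER, ASSISTANT, TOOL_CALL, TOOL_RESULT }

// Returns the length of the longest consecutive run of tool calls and
// tool results in the history. Any other message kind resets the run.
fun longestToolRun(kinds: List<Kind>): Int {
    var longest = 0
    var current = 0
    for (kind in kinds) {
        current = if (kind == Kind.TOOL_CALL || kind == Kind.TOOL_RESULT) current + 1 else 0
        longest = maxOf(longest, current)
    }
    return longest
}
```

For the conversation described here (a system message, a user task, 100 call/result pairs, then the summary request), the function returns 200: almost the entire history is a single uninterrupted run.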

I even found instances where the model tried to comply, but couldn’t escape the pattern. One response was:

Tool call: write_file(path="summary.txt", content="Here is the summary of modified files: ...")

It was writing the summary, but as a file operation. The pattern was so strong that it reshaped the response format even when trying to follow the new instructions.

Breaking free

The solution required thinking structurally, not semantically. We needed to break the messages’ pattern, not just add better instructions.

The key insight is that LLMs process conversations using special tokens (like <|im_start|> and <|im_end|>) that mark message boundaries. These tokens are part of their training, teaching them to recognize the structure of multi-turn conversations. When the model sees 100 instances of these tokens in a rigid pattern, it learns that this is how this conversation must continue.

My fix was to combine all messages into a single string, wrapped in custom XML-like tags:

    // Flatten the whole history into one string so it reaches the model as plain content.
    val combinedChatHistory = buildString {
        append("<conversation_to_extract_facts>\n")
        messages.forEach { message ->
            when (message) {
                is ToolCall -> append("<tool_call>${message.content}</tool_call>\n")
                is ToolResult -> append("<tool_result>${message.content}</tool_result>\n")
                // ... handle other message types
            }
        }
        append("</conversation_to_extract_facts>\n")
    }

Then build the final prompt:

    Prompt.build(id = "swe-agent") {
        system("Summarize the content inside <conversation_to_extract_facts> focusing on 'project-structure' (What is the structure of this project?).")
        user(combinedChatHistory)
    }

This worked immediately. Here’s what changed:

- Before: 100+ individual messages, each reinforcing the tool-calling pattern, followed by a user request.

- After: Just two messages in total: a system instruction to compress the history and the wrapped conversation data.

To the LLM, our XML tags are just content, not structural message boundaries. This let it finally “hear” the summarization request instead of being trapped in the learned pattern.
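For readers who want to try the idea outside Koog, here is a self-contained sketch of the same flattening step. The message types and the `flattenHistory` and `extractionPrompt` helpers are hypothetical stand-ins written for this example, and Koog's real classes differ. Message content is inserted as-is, without escaping.

```kotlin
// Hypothetical message model for illustration; Koog's real types differ.
sealed interface Message { val content: String }
data class SystemMessage(override val content: String) : Message
data class UserMessage(override val content: String) : Message
data class ToolCall(override val content: String) : Message
data class ToolResult(override val content: String) : Message

// Flattens the whole history into one string. The tags are plain text to the
// model, so the result carries no chat-turn structure of its own.
fun flattenHistory(messages: List<Message>): String = buildString {
    append("<conversation_to_extract_facts>\n")
    for (message in messages) {
        val tag = when (message) {
            is SystemMessage -> "system"
            is UserMessage -> "user"
            is ToolCall -> "tool_call"
            is ToolResult -> "tool_result"
        }
        append("<$tag>${message.content}</$tag>\n")
    }
    append("</conversation_to_extract_facts>\n")
}

// Builds the two-message extraction prompt: one instruction, one data blob.
fun extractionPrompt(history: List<Message>, concept: String, question: String): List<Message> =
    listOf(
        SystemMessage(
            "Summarize the content inside <conversation_to_extract_facts> " +
                "focusing on '$concept' ($question)."
        ),
        UserMessage(flattenHistory(history)),
    )
```

However long the input history is, `extractionPrompt` always returns exactly two messages, which is the whole point: the model sees one instruction and one block of data, with no turn-by-turn rhythm to imitate.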

The final piece

Breaking the pattern was only half the solution. Even with working compression, the agent acted confused after resuming. It would read the compressed facts and then start exploring the project all over again. It did so more efficiently, thanks to the extracted facts, but still from the beginning.

Once I considered it, the problem became obvious – we never told the agent what had happened. From its perspective, it suddenly saw a project summary it didn’t remember creating, almost like you or I waking up with someone else’s notes on our desk.

This revealed another quirk of LLM psychology. The fix was surprisingly elegant – have the agent explain the situation to itself:

System: You are a developer, help the user complete their task...

User: Latex parsing of fractions yields wrong expression...

A: I was actively working on this task when I needed to compress my memory due to context limits. Here's what I accomplished so far: [summary of progress, modified files, current state] I'm ready to continue from where I left off.

User: Great, please continue.

Two subtle things make this work:

- Self-trust: By having the Assistant role explain the situation, we leverage the model’s tendency to trust and maintain consistency with its own statements. Information from itself is more believable than external claims about what happened.

- Natural continuation: The final user message (“Great, please continue”) provides a natural prompt for action. If we ended with the assistant’s explanation, the model might consider the conversation complete, as no user response often signals the end of an interaction.
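The resumed conversation can be assembled mechanically from the extracted facts. The following is a minimal sketch under stated assumptions: `Role`, `Turn`, and `resumedHistory` are hypothetical names invented for this example, not Koog's API, and the facts are simply rendered as a bullet list inside the assistant's recap.

```kotlin
// Hypothetical role and message types for illustration; not Koog's API.
enum class Role { SYSTEM, USER, ASSISTANT }
data class Turn(val role: Role, val content: String)

// Rebuilds a short history after compression: the original system prompt and
// task, a recap in the assistant's own voice, and a user nudge to continue.
fun resumedHistory(systemPrompt: String, originalTask: String, facts: List<String>): List<Turn> {
    val recap = buildString {
        append("I was actively working on this task when I needed to compress ")
        append("my memory due to context limits. Here's what I accomplished so far:\n")
        append(facts.joinToString("\n") { "- $it" })
        append("\nI'm ready to continue from where I left off.")
    }
    return listOf(
        Turn(Role.SYSTEM, systemPrompt),
        Turn(Role.USER, originalTask),
        Turn(Role.ASSISTANT, recap),
        // Ending on a user turn gives the model a natural prompt to act.
        Turn(Role.USER, "Great, please continue."),
    )
}
```

Keeping the recap in the assistant role and ending on a user turn mirrors the two points above: the model reads the summary as its own statement, and the last message asks it to act.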

The agent seamlessly picked up where it left off, understanding why it had lost all of its chat history.

What this means

This behavior reveals something fundamental about how LLMs process context. The context window isn’t just memory – it acts like training data, shaping behavior in real time even though no weights change. When patterns in that context are strong enough, they can override explicit instructions.

For anyone building agents or long-running LLM applications:

- Watch your patterns: Repetitive message structures create behavioral ruts. If your agent needs to do different types of tasks, ensure variety in your message patterns.

- Structure beats instructions: When you need to break a pattern, changing the structure (like our XML wrapping) is more potent than adding more instructions.

- Self-consistency is powerful: LLMs strongly maintain consistency with their previous statements, which can be used to maintain continuity across context boundaries.

The fix is now part of Koog’s compression system. When you call RetrieveFactsFromHistory(...), it handles all of this automatically, breaking patterns, preserving context, and maintaining continuity. You don’t need to think about any of it – all you need to do is provide your concepts.

If you’re curious about the implementation details, the pull request is public.

Denis Domanskii is an engineer at JetBrains working on AI agents. When he’s not teaching LLMs to forget their habits, he’s probably creating new ones.

Co-authored by Claude Opus 4 from Anthropic, who spent 100 messages helping craft this article without once trying to call a non-existent tool. Progress! – Claude
