Interview with Fabien Campagne about DSLs, bioinformatics and his new MPS book

Fabien Campagne, a researcher in the field of bioinformatics, a long-term DSL practitioner, an experienced MPS user and also an author of a new MPS book, sat down with Václav Pech and discussed these interesting topics.

Q: Hello Fabien, it is my great pleasure to be able to ask you a few questions. You’ve been very active in the community around JetBrains MPS in the past and just recently you’ve published a book on MPS. I believe there’s a lot of exciting topics we can talk about. To begin with, would you be willing to tell our readers a bit about yourself?

I was born in France and have been living in New York City for about 15 years. I am a scientist working in the field of bioinformatics. If your readers are not familiar with bioinformatics, very briefly, it is a scientific discipline that helps study biology using computers. In the last ten years, bioinformatics has also started to provide methods that help address open medical questions, such as why some patients who suffer from a specific disease and are treated with a generally effective treatment do not respond to the treatment.

I was born in France and have been living in New York City for about 15 years. I am a scientist working in the field of bioinformatics. If your readers are not familiar with bioinformatics, very briefly, it is a scientific discipline that helps study biology using computers. In the last ten years, bioinformatics has also started to provide methods that help address open medical questions, such as why some patients who suffer from a specific disease and are treated with a generally effective treatment do not respond to the treatment.

I have become really interested in MPS because it offers a unique solution to some of the problems that we are facing in bioinformatics: we work with many computational abstractions and as a field have been lacking a set of methods to handle abstractions in a principled and seamless way.

For instance, we need abstractions to represent data measured from biological systems, but also to represent knowledge accumulated in biology and medicine, or to track analyses as they run on compute grids or clusters. MPS offers a way to define computational abstractions in formal languages and makes it possible to compose these languages seamlessly.

Programs expressed in MPS languages can be generated to code, so we can use succint notations while we think about what is important in the problem that we are trying to solve, and when we have formulated a solution can generate this solution to code to process the data. Language and abstraction composition is very important to us, because bioinformatics has been plagued with systems that do not well work together (e.g., developed in traditional programming languages that cannot be easily combined). This statement is probably a bit controversial, because some people are content with the tools that they use, these groups typically work with one traditional programming languages and a scripting language. Our laboratory is trying to push the enveloppe and see if there is a better way.

Q: What are you currently working on?

We always work on several projects in parallel. On the application side, one or our projects is to develop a blood test to diagnose chronic fatigue syndrome (CFS). We obtain blood from CFS patients and normal controls, separate the blood into three different cell types involved in immunity (B, T and NK cells for the curious) and measure the expression of about 20,000 genes in these cells. We use the data to train machine learning models that we hope will provide an objective diagnosis. Patients who suffer from CFS currently have a difficult time obtaining a diagnosis because there is no easy molecular diagnostic test. We are aiming to develop a simple blood test for this disease.

On the methodology side, we are woking on the next version of GobyWeb. GobyWeb is a system we have developed to analyze large datasets like those generated in the CFS project I mentionned. We published GobyWeb last year and we are now actively working on improved methods. The paper is open-access and available here. If your readers take a look, they will notice that the architecture of the system includes a domain specific language (DSL). We use a DSL to define plugins that can be used by bioinformaticians to extend GobyWeb with new analysis methods. Until GobyWeb version 2, we have used XML to implement the GobyWeb plugin DSL. We have started to reach the limits with XML because its severely limits the kind of syntax that we can implement and also does not make it easy to combine languages (all languages would have to be XML, which is not convenient for some types of abstractions, for instance we developed a language to evaluate boolean expressions that we use to write expressions that determine when some options are shown on a web-page or not, XML does not make it easy to write such expressions succintly, thus we had to create a mini language and write a parser for it).

Q: Clearly, your work is focused on Domain Specific Languages quite a lot. How did you get started with DSLs?

I guess I came to DSLs gradually. I developed a tool during my PhD thesis that created diagrams of protein sequences (this tool, called Viseur is now of historical interest only). I included a tiny language in this tool that end users could learn and use to paint parts of the diagram certain colors to show some information or hyperlink to some documents on the web. This was used quite a bit to make hyperlinked illustrations for web sites and PostScript outputs for publications. At the time, I wrote a lexer and parser with Lex and YACC (Yet Another Compiler Compiler) to support this application specific language.

During my post-doctoral training, I developed improved methods to render such diagrams and I used XML as a means to avoid developping a parser. I liked that the language could evolve quickly, because I could use code generated automatically for serialization and deserialization, but XML felt less natural to use that a well-crafted language.

Q: What tools and technologies for DSLs have you tried in the past?

Besides Lex and YACC, I continued to build tools that used XML over the years. With XML, I used Castor to help with XML data binding. I have also used Scala to build a DSL for boolean expressions in GobyWeb.

Q: How and when did you first meet JetBrains MPS?

I have been working with Java since 1996 and I am always looking for ways to improve my programming productivity. I tried one of the first releases of IntelliJ IDEA. It was so much better than any of the competing tools that I remember wondering how it had been designed. IntelliJ kept improving and I was visiting the Jetbrains web site every year or so and checking the other products out.

The very first public release of MPS was announced in 2006, and I was among the people who downloaded build #320. I took some notes on our Institutes’ wiki, which you can see here, here and here. At the time, my notes indicate I was interested in language oriented programming because I was running into limitations of software framework for our work. Developing a software framework in the syntax of a programming language does not make for the most intuitive notations. MPS seemed to offer a way around such problems.

From this first experience in 2006, I remember being confused by the user interface (it was very different from anything else I had worked with before) and I eventually reached a point when I could not find enough information to finish a tutorial. I decided that the project was not yet ready for the type of work that we do and moved on. I then kind of forgot about MPS for 7 years.

I stumbled upon MPS again in 2013 because I was looking for a good solution to generate Java code from a data persistence DSL. This is related to the work we have done to manage and compress high-throughput sequencing data (Campagne et al, PLOS One, 2013). I started experimenting with MPS again and this time was able to get much further. I decided to throughly evaluate MPS for the type of work that we do, and this open-access article is the result, which presents our experience over the summer 2013 (Simi and Campagne, PeerJ, 2014).

Although in 2013, the documentation was much better, and the tool more polished than it was when I first tried it in 2006, I felt MPS was still lacking in way of introductory and reference material. I started working on my book series (The MPS Language Workbench) to provide such material. While I wrote the book for others, interestingly, I find myself also using the book to refresh my memory about what method or concept to use to do certain things. I could spend time to figure out how to do such things again, but the book makes it much easier and much quicker.

Q: Now that you mentioned the new book you wrote on MPS – this certainly was a big endeavor. What was your motivation for going through the pain and prepare such a detailed guide for MPS users?

I had several motivations. The first was to have a reference manual that I could share with students so that they can learn MPS when they join the lab to work on our research projects. It is sometimes easier to write a book than to teach the same things to different people over and over again. Another motivation was to explain MPS to a larger community of programmers and software engineers, who might find it easier to learn MPS with a book than through experimentation. After all, I did initally try MPS in 2006, but stopped using it when I got stuck at some point. I am hoping my book will prevent this from happening and help grow a community of programmers who will enjoy the benefits of the platform.

I had several motivations. The first was to have a reference manual that I could share with students so that they can learn MPS when they join the lab to work on our research projects. It is sometimes easier to write a book than to teach the same things to different people over and over again. Another motivation was to explain MPS to a larger community of programmers and software engineers, who might find it easier to learn MPS with a book than through experimentation. After all, I did initally try MPS in 2006, but stopped using it when I got stuck at some point. I am hoping my book will prevent this from happening and help grow a community of programmers who will enjoy the benefits of the platform.

Q: What should people expect in the book and where can they get it?

The book offers a succession of reference chapters interleaved with chapters that present an application of the preceding chapter on a practical example. If you read the book from cover to cover, you will encounter a progressive description of MPS. If you go back to the book after you have read it, you can skip the example chapters and just get to a relevant section of one of the reference chapters. I included a detailed index to help get to the relevant reference section.

The eBook is on Google Play and Amazon for now. More distributors may be introduced in the future. I maintain links to the distribution channels at http://books.campagnelab.org.

Q: Do you have any further plans with the book?

Yes, certainly. The book is the first volume of a series. The first volume will be updated to match the changes introduced in MPS 3.1. The second volume is in preparation and will discuss some of the MPS aspects that software engineers can use to customize the MPS user interface (plugin solutions) and to interface with legacy systems (custom persistence).

Q: Could you tell us more about the MPS-based solutions that you created so far? How could our readers try them out?

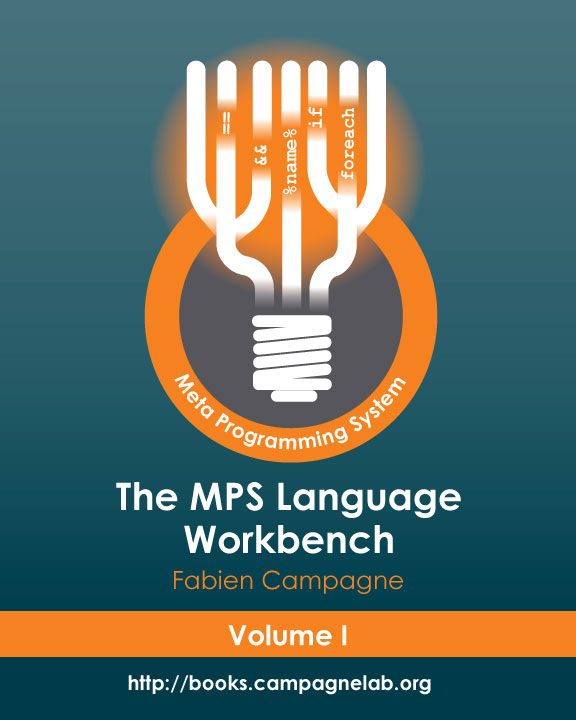

In our PeerJ article (Simi and Campagne, PeerJ, 2014), we present an evaluation of MPS where we constructed a replacement for the BASH language. We use BASH extensively when developing bioinformatics analysis pipelines, because it runs on a variety of platforms. We use it in GobyWeb to express the logic of analyses. BASH scripts are used by GobyWeb to call analysis programs in the right order and with the right data. While I was experimenting with MPS, I thought that it would be interesting to see if we could extend baseLanguage, which is very similar to Java, with language constructs that we can use to execute BASH command pipelines (e.g., lines=`cat file | wc -l `) . Our evaluation shows that this was possible by developing small languages that we could combine with baseLanguage. The result of this evaluation was packaged as a standalone IDE and is available at http://nyosh.campagnelab.org/.

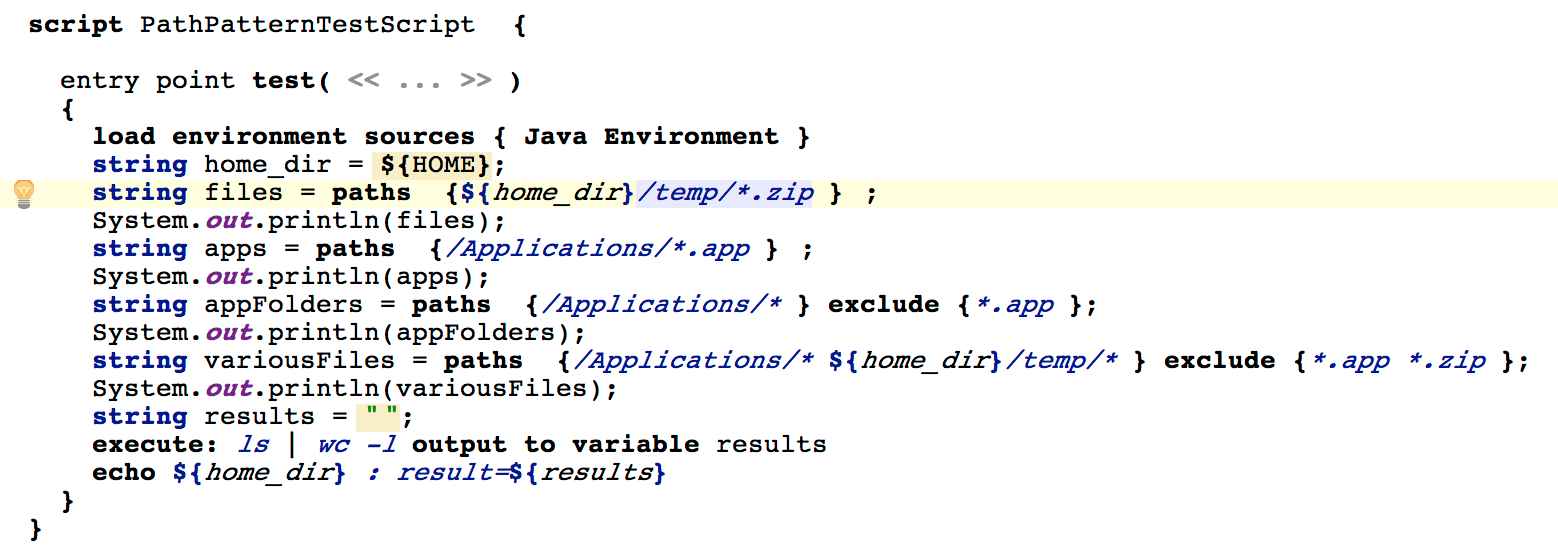

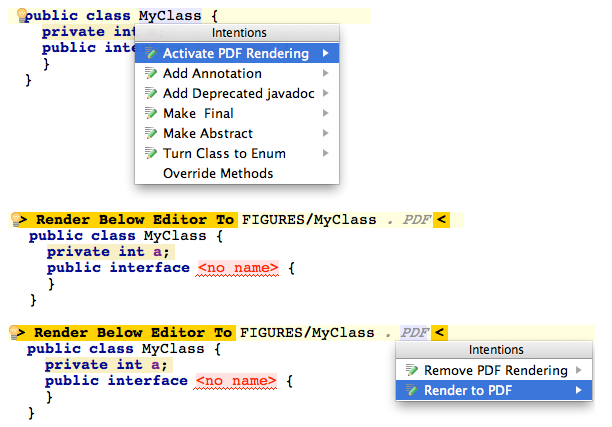

I have also developed a language to help create PDF figures for the books. I would create figures manually for the first volume by taking snapshots of the MPS workbench editors. While starting work on the second volume I decided I should spend a week end doing some infrastructure work. The Editor2PDF project is the result of this. The big advantage is that the figures will be vectorial and print at press resolution. You can find the language at https://github.com/CampagneLaboratory/Editor2PDF. See the tutorial there.

Q: Can you highlight the features of MPS that in your opinion were in particular useful when implementing your projects?

Q: Can you highlight the features of MPS that in your opinion were in particular useful when implementing your projects?

The ability to design custom syntax that is well suited for a problem comes first. Second is the ability to compose languages seamlessly. This makes it possible to reuse capabilities developed for other projects in a very straightforward and no-nonsense manner. Third was the ability to generate the languages to Java source code, compile it under the hood, and even deploy the Jar files to a development GobyWeb plugin repository. Our PeerJ article describes these and other features in more detail (Simi and Campagne, PeerJ, 2014).

Q: Looking at the MPS roadmap reveals that MPS will shortly add support for diagrams, followed by web-based editors, language versioning and improvements to the language definition languages. Where would you personally like to see MPS going in the future?

I am very much looking forward to trying the support for diagrams. Visualization is an integral part of the work that we do and I think we will be trying several ideas to see if the MPS diagram support can help. In fact, I am already planning to update the first volume of the eBook with a chapter dedicated to diagrams. Thanks to modern epublishing platforms pushing such updates can be done seamlessly.

Language versioning is also something that I think will be critical to help grow a community around MPS. I hope the plans for versionning includes some support for language repositories (perhaps leveraging open-source tools such as artifactory). I would love to have the ability to create a language, push it to a repository like we push software artifacts, and have others immediately able to get and use the new language in their work. Versioning will make sure that different releases of the languages can cohabit together and is, in my experience, absolutely necessary to avoid monolytic platforms where everything is included, and must evolve in one release jump together. As MPS grows, we need to be able to refer to specific versions of the languages that are offered on the platform. We may also need the ability to configure configure several language repositories.

Besides the features planned in your roadmap, I think what I would like to see is a simple way to import Java archives, that takes care of dependencies between these archives. MPS already let’s developers import jar files so that the classes in these jar files can be used transparently in MPS. This is great to interface with legacy code developed in the Java community in the last twenty years. However, I am sometimes running into problems when a JAR file depends on a few others. Common jar files such as apache-commons-io tend to be needed in several languages, but importing them several times can cause classloader problems, and at best is confusing when the same classes appear from multiple languages. I think MPS solved this in the past for the JDK by importing all of it into one solution, but think that we may need a more fine-grained solution moving forward when more people use MPS and they need to interface it to the large number of Java archives available today.

Q: Where do you typically turn to for information? Can you recommend any relevant books to people interested in DSLs?

I get most of the information from MPS itself. When a language is part of the MPS platform I try to locate examples of code that use the language. This is easy to do in MPS with the Find Usage feature. I work from the examples and experiment to understand the meaning of each option. Sometimes the project web documentation provides pointers, but I find it to be often out of date with the latest releases of MPS. When I really don’t know I check the forums and if I find nothing there, I contact the Jetbrains team.

Regarding books, I have a copy of Czarnecki’s Generative Programming book, published in 2000. At the time, this was a good introduction to the field. It is a bit outdated with respect to the description of the tools available, but it has a good description of the various techniques and their advantages and drawbacks. The more recent DSL Engineering book, by Markus Voelter and colleagues is a good resource if you are interested in how differerent tools compare with respect to the features they offer. The book compares MPS with Spoofax and XText.

Q: What do you typically do after you turn off your computer?

I try to play squash three or four times a week with friends. I also enjoy watching movies.

Thank you, Fabien, for sharing your insight.

Develop with pleasure!

-JetBrains MPS Team

Subscribe to MPS Blog updates