JetBrains Research

Research is crucial for progress and innovation, which is why at JetBrains we are passionate about both scientific and market research

Helping Students Get Unstuck: AI-Based Hints for Online Learning

In online learning environments, tasks can often stump students, which can be challenging to navigate since teachers can’t always be there to help. Our Education Research team develops innovative features for education tools and recently built a smart AI-based hints tool that provides personalized feedback for students who might need help solving tasks. This tool goes beyond the automated hints common in massive open online courses (MOOCs) and delivers tailored, effective guidance that helps students move forward.

In this post, we will:

- Explore the currently available options for automated assistance in MOOCs.

- Present our AI-based hint system, showing how it works and what’s technically interesting about it.

- Discuss how students evaluated the plugin and what’s up next in this research stream.

Learning in online courses

In its early days, distance learning was intended for people who were unable to attend classroom lectures, usually full-time workers, military personnel, and those in remote regions. The model has been around for centuries: people could take so-called correspondence courses by post, documented as early as the 1720s in Boston, Massachusetts, USA. Sir Isaac Pitman offered correspondence courses with feedback in the mid-1800s from Gloucestershire, UK, and universities were not far behind: students could obtain a degree through the University of London starting in 1858, regardless of whether they lived in London or not.

Let’s zoom ahead to 2000, when institutions like the University of Phoenix in the USA and The Open University in the UK had already been offering distance courses toward degrees for about 30 years. As technology advanced and the infrastructure evolved to provide affordable, fast internet access, online courses surged in popularity.

The importance of feedback

The first MOOC is often credited to Stephen Downes and George Siemens, who in 2008 made it possible for more than 2000 students online to attend their course in addition to the 25 students at the University of Manitoba campus in Canada. Since then, and especially during the pandemic, MOOCs have become more popular, with many available platforms. For example, there are platforms from universities like MIT and Harvard (their non-profit consortium is now edX and includes many more partners) and private companies like Udacity and Coursera. JetBrains offers its own project-based courses through JetBrains Academy, where learners can build tech skills using the same tools developers rely on every day.

The online, often asynchronous nature of such heavily attended courses already makes it hard to help students in a personalized way. We know that feedback is essential to the learning process, and that there are different types of feedback (see, for example, The Power of Feedback and this review specifically on automated feedback types). Courses with high enrollment, whether on campus or online, often include automated assessments. Sometimes these courses also include smaller tutoring sessions, where feedback may be more personalized. Students who receive both automated and personalized feedback are more likely to improve their learning than those who receive only automated feedback, as confirmed in, for example, this 2024 paper.

Recently, researchers have started developing automated ways to provide students with more personalized feedback, such as predefining rules or templates, using systems based on previous student submissions, or incorporating large language models (see this recent overview for more details). Here we focus on the landscape of a particular type of assistance for students learning programming online, next-step hint generation, and explore the approaches currently available.

Providing hints to students in online learning

Next-step hint generation is a powerful approach that provides students with targeted, incremental guidance to help them progress through their courses. When students are stuck, they are shown the specific, small step that will help them proceed. Once that step is completed, they can request another hint and receive the next step. For example, a student could be stuck on a programming task without being able to figure out why. To help, the hint could suggest something like the following:

“Add a for loop that iterates over the indices of the ‘secret’ string.”

Hints can be generated as text like in the example above, as (pseudo-)code, or as a combination of both. Research has shown that a single type of feedback is not enough, and that students learn better when hints come at several levels, such as text and code. Instead of only pointing out errors or giving full solutions, next-step hints offer adaptive suggestions, include text explanations, and nudge learners toward the next logical step. They break down complex tasks into manageable pieces and encourage independent problem-solving, making them an essential tool for personalized learning support.

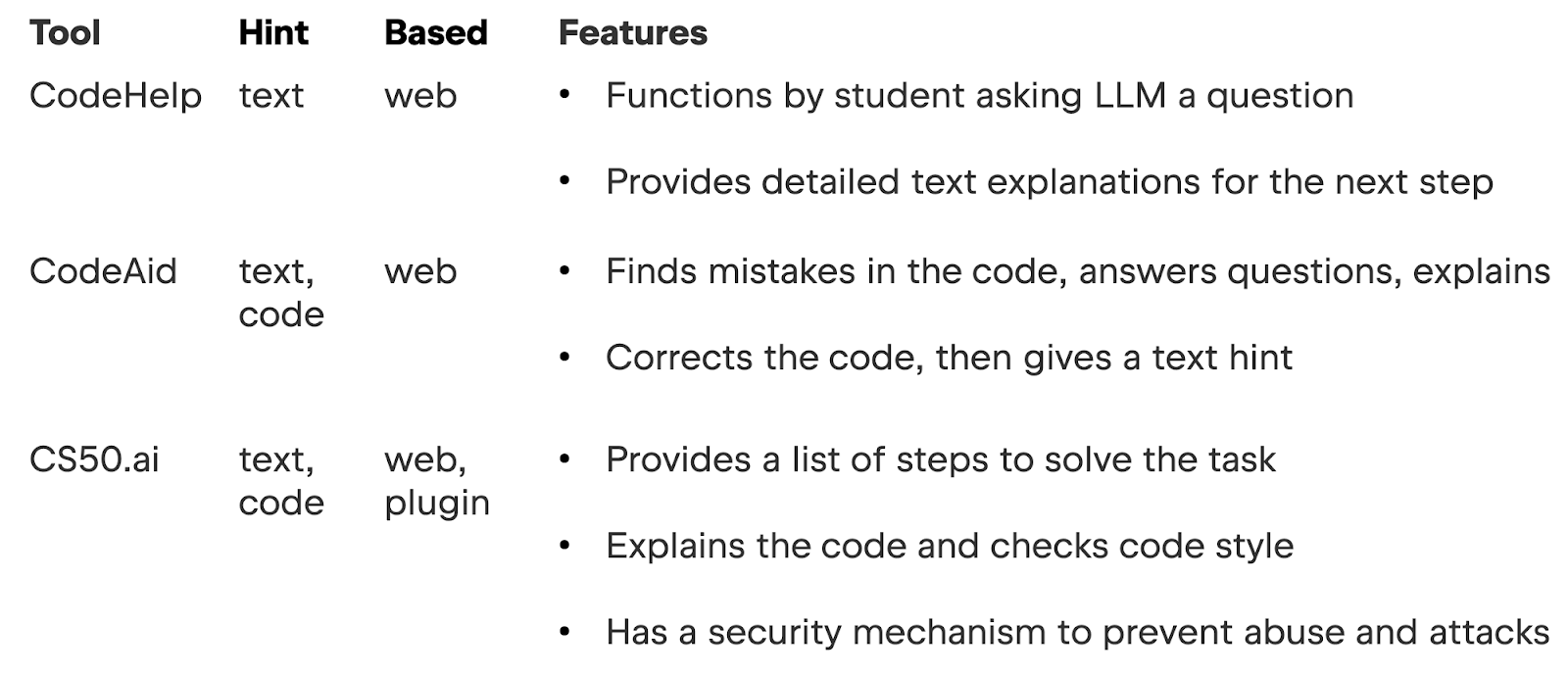

With the recent onset of large language models (LLMs), a few researchers have been exploring the possibilities of combining the open APIs of LLMs, such as gpt-3.5 and gpt-4, with personalized feedback generation. This type of approach comes with the benefit that researchers do not need a large amount of data on student submissions. So far there exist only a handful of these types of systems. Examples of a few tools can be found in the table below, including information about the hint type, where the tool can be used, and features; see this 2022 state-of-the-art review for more details.

The first two tools, CodeHelp and CodeAid, use gpt-3.5, while CS50.ai uses gpt-4. All tools in the table above only use the LLM directly; that is, there is no additional output processing. Without this processing, potential inaccuracies (such as hallucinations or ignored prompt instructions) can arise, thereby compromising the hint quality. The tools also require students to craft their own prompts, adding an extra challenge for students, especially beginners who are already known to struggle with seeking help.

To address such challenges, our research team developed a tool with the following features:

- Part of the course’s integrated development environment (IDE)

- Both text and code hints

- Processing of the LLM-generated code

AI-based hints for in-IDE learning

Our researchers from the Education Research lab recently developed an AI-based tool for the open-source JetBrains Academy plugin. This research is a part of the PhD work of the lab’s head, Anastasiia Birillo, completed under the supervision of Dr. Hieke Keuning in the Software Technology for Learning and Teaching Group at Utrecht University.

The JetBrains Academy plugin is an extension for JetBrains IDEs, created specifically to support in-IDE learning. The plugin adapts the IDE window by adding a course structure panel, course progress bar, task description panel with theory, explanations, and non-coding types of assignments, and a check button for running tests predefined by the course author. There are already over 50 courses compatible with this format, and any third-party educator can add their own compatible course.

The plugin provides different assignment types, and these tasks can be isolated or combined within a larger project. The types of tasks can be categorized as:

- Theoretical: concepts explained in a text or a video form with related quiz questions or coding tasks

- Practical: a quiz or a programming assignment

With the hint tool integrated into the plugin, students learn programming languages by coding while receiving personalized feedback, all within the IDE. If you are interested in what students and developers think about in-IDE learning, check out this recent paper where our researchers conducted interviews to learn about just that.

How our hint system works

Before getting into the technical features that set our AI-based hint tool apart from tools like the ones mentioned above, let’s look at how the tool works. Here are a few basic technical features:

- The system uses gpt-4o for all LLM interactions.

- The system generates both text and code hints.

- It was originally designed for Kotlin and this version has been validated (see below).

- A Python version was developed later and is already available in the JetBrains Academy plugin.

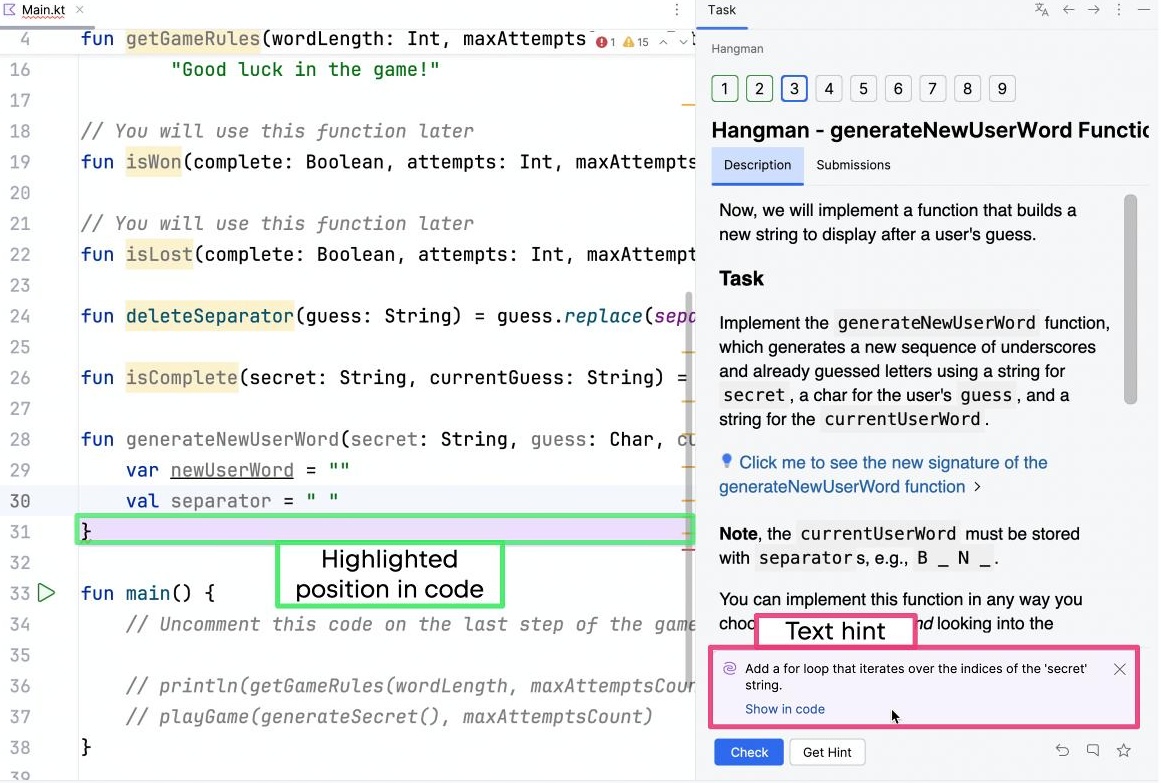

In the example image below, a student is creating a program to simulate the game Hangman, where a user can try to guess a word by inputting letters. The current task requires the student to implement a complex function, generateNewUserWord. In the example, the student has already defined newUserWord and separator, but they don’t know how to implement the part of the function concerning already guessed letters.

To get help with the task, the student can request a hint by clicking the blue check button at the bottom (below the text hint). Clicking this button runs test cases, checks whether the code compiles, and displays the following two elements:

- The text hint, on the bottom right side, marked with a pink rectangle

- The highlighted position in the code, marked with a green rectangle

The highlighting feature is an important part of the design because (i) the hint system should be complex enough to help students with tasks longer than one function, and (ii) when there is a long file and the student is stuck anyway, it might not be obvious where to apply the hint.

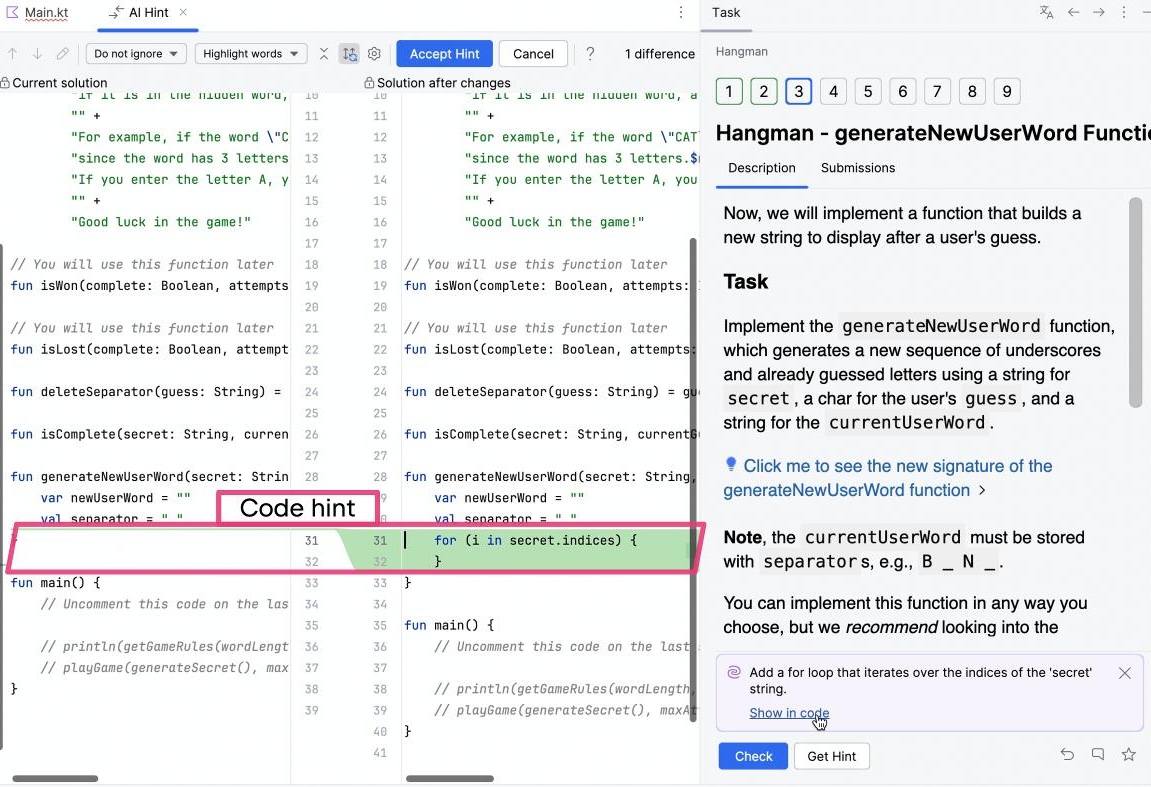

Within the textual hint window, there is also a show in code link. As suggested by its name, it provides the student access to the code hint, which appears as a window with highlighted differences between the student’s code and the solution code. This can be seen in the following screenshot, where the code hint is marked in pink.

On the lower right side of the above image, rows 31–32 are highlighted in green. In this case, these rows represent what is missing from the student’s code, namely, the for loop which should iterate over the indices of the secret string, as stated in the text hint.

From this code hint, the student can choose to accept the hint (the option is found at the top of the above image), in which case the suggested changes are automatically applied, or cancel the hint and return to their own solution to modify it manually. This means the code hint provides bottom-out help, that is, the exact code needed to progress to the next step. As you can see in the example above, the code hint does not provide the content of the for loop, so the student has a chance to continue on their own.
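To make the example more concrete, here is a minimal Kotlin sketch of what the student's function might look like after accepting the code hint. Only the names generateNewUserWord, newUserWord, separator, and secret come from the example above. The signature, the guess and currentUserWord parameters, and the loop body are assumptions made for illustration, not the course's reference solution.

```kotlin
// Hypothetical signature; the actual course task may differ.
fun generateNewUserWord(secret: String, guess: Char, currentUserWord: String): String {
    // Already defined by the student in the example above.
    var newUserWord = ""
    val separator = " "

    // Inserted by accepting the code hint: iterate over the indices of `secret`.
    for (i in secret.indices) {
        // Not part of the hint. One possible body, assumed for illustration:
        // reveal the guessed letter, otherwise keep the symbol shown so far.
        val shown = currentUserWord[i * (1 + separator.length)]
        newUserWord += if (secret[i] == guess) secret[i] else shown
        newUserWord += separator
    }
    return newUserWord.removeSuffix(separator)
}

fun main() {
    println(generateNewUserWord("BOOK", 'O', "_ _ _ _")) // prints "_ O O _"
}
```

The hint stops at the empty loop, so everything inside the loop in this sketch is work the student would still do on their own.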

Students may request a next-step hint at any time during the task, except when the task is already solved. The hints can help students proceed with the task or fix failing tests or compilation errors. The current version of the plugin places no limit on the number of hint requests; JetBrains Academy is a MOOC platform where students voluntarily participate in courses, so it is ultimately up to them how they want to learn.

Not only does the in-IDE course format provide students with a professional environment to build their coding skills, it is also a good environment for implementing a novel next-step hint system. For one, the programming assignments can be complex, and the IDE conveniently provides several crucial features, such as an API for static analysis and code quality analysis, the topic of the next subsection.

Code hint quality improvement in the IDE

One might think that because the text hint is shown to students first, the hint system must also generate the text hint before the code hint internally. However, according to this 2024 paper, as well as our own internal validation reported in §5 of our paper, a hint system delivers much better results when it generates the code hint before the text hint.

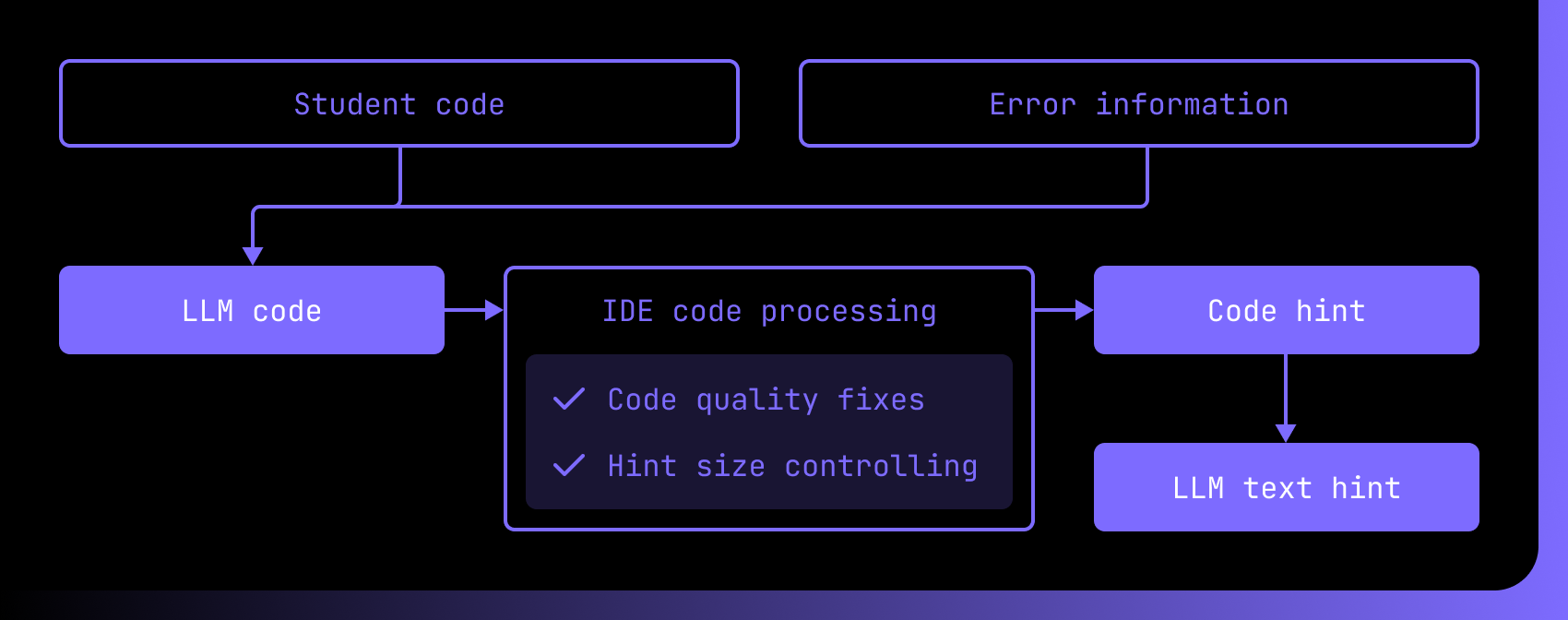

With code as the main entity, we can apply cool IDE features to improve the code instead of just showing an unprocessed hint to the student. For the code processing, we used static analysis and code quality analysis. The figure below depicts the internal system pipeline.

A key feature of our approach is that we process the LLM-generated code using static analysis and code quality analysis, so that the code is examined without executing the program. Automated tools like those found in our JetBrains IDEs can scan a project’s code to check for issues such as:

- Programming errors

- Coding standard violations

- Undefined values

- Syntax violations

Our AI-based hint system uses an LLM to generate code based on the student’s code and any error information. However, LLMs are inherently unreliable, having the well-known potential to produce hallucinations (see this 2024 paper and this other 2024 paper for recent research), and we wanted the hints provided to students to be as accurate as possible. The combination of in-IDE code processing and generating the code hint before the text hint enabled us to create a tool with high hint accuracy.
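The ordering described above (code hint first, then code processing, then text hint) can be sketched in Kotlin as follows. This is only an illustration of the idea: every type and function name here (Hint, CodeHintModel, CodeProcessor, TextHintModel, HintPipeline) is hypothetical and not part of the plugin's API, and the LLM calls are replaced by simple stubs.

```kotlin
// All names below are hypothetical; they only illustrate the order of the steps.
data class Hint(val code: String, val text: String)

fun interface CodeHintModel {
    fun nextStepCode(studentCode: String, errorInfo: String?): String
}

fun interface CodeProcessor {
    fun process(studentCode: String, generatedCode: String): String
}

fun interface TextHintModel {
    fun describe(studentCode: String, hintCode: String): String
}

class HintPipeline(
    private val codeModel: CodeHintModel,
    private val processors: List<CodeProcessor>,
    private val textModel: TextHintModel
) {
    fun generate(studentCode: String, errorInfo: String? = null): Hint {
        // Step 1: generate the code hint first.
        val rawCode = codeModel.nextStepCode(studentCode, errorInfo)
        // Step 2: run every processing step over the generated code, in order.
        val processedCode = processors.fold(rawCode) { code, processor ->
            processor.process(studentCode, code)
        }
        // Step 3: derive the text hint from the processed code.
        val text = textModel.describe(studentCode, processedCode)
        return Hint(processedCode, text)
    }
}

fun main() {
    // Stubs stand in for the LLM and for the IDE-based processing.
    val pipeline = HintPipeline(
        codeModel = CodeHintModel { student, _ -> "$student\nfor (i in secret.indices) { }  " },
        processors = listOf(CodeProcessor { _, code -> code.trimEnd() }),
        textModel = TextHintModel { _, code ->
            if ("for (" in code) "Add a for loop that iterates over the indices of the 'secret' string."
            else "Continue with the next statement."
        }
    )
    val hint = pipeline.generate("var newUserWord = \"\"")
    println(hint.text)
    println(hint.code)
}
```

Because the text hint is derived from the already processed code in this sketch, the text always describes the code that the student would actually see.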

The code processing included ensuring code quality and controlling hint size, among other improvements (more details are available in the paper). The following list describes each in more detail:

- To improve the quality of the generated code, we used the IDE’s code quality inspections for optimizing code and for correcting common security and style issues. We selected 30 Kotlin inspections for which automatic fixes are available. The following code blocks show an example:

month >= 1 && month <= 12

The comparison above gets transformed into a Kotlin range check, as below.

month in 1..12

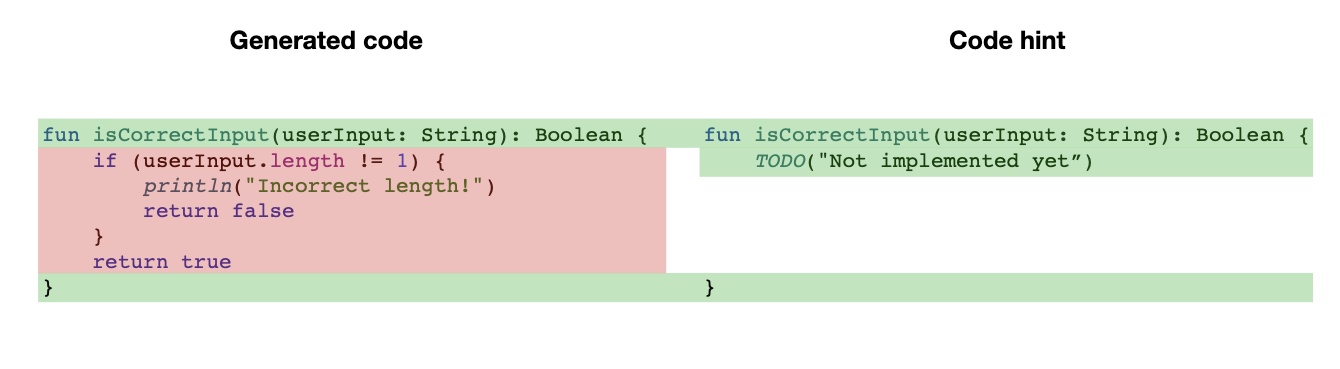

- LLMs do not always comprehend that only one step needs to be generated, even within one function. We believe that a good hint provides one logical action, not multiple ones at a time. To control the hint size, we developed some heuristics (more details can be found in the paper’s supplementary material). The figure below shows an example with additive statement isolation.
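As a small runnable illustration of the month example from the first item in the list above, the following Kotlin sketch checks that two explicit comparisons and a range membership test behave the same. The function names are made up for this example.

```kotlin
// Before the fix: two explicit comparisons.
fun isValidMonthVerbose(month: Int): Boolean = month >= 1 && month <= 12

// After the fix: the same check written as a range membership test.
fun isValidMonth(month: Int): Boolean = month in 1..12

fun main() {
    // Both forms agree on every value in a small test interval.
    val agree = (-5..20).all { isValidMonthVerbose(it) == isValidMonth(it) }
    println(agree) // prints "true"
}
```

The range form states the intent (membership in an interval) more directly, which is the kind of readability improvement such inspections aim for.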

By processing the LLM-generated code before the student sees the hint, we can greatly improve the hint’s quality. This quality control gives our hint system an advantage over previously developed tools.
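To give a rough feeling for what controlling the hint size can mean, here is a deliberately simplified Kotlin sketch. It is not the heuristic from the paper. It assumes that the generated code only adds lines to the student's code and that each statement fits on one line, and the function name keepFirstAddedLine is hypothetical. Out of all added lines, only the first one is kept.

```kotlin
// Simplified, line-based illustration (an assumption, not the paper's heuristic):
// keep the student's lines and only the first line that the generated code adds.
fun keepFirstAddedLine(studentCode: String, generatedCode: String): String {
    val studentLines = studentCode.lines()
    var next = 0            // index of the next student line we expect to see
    var addedKept = false   // whether one added line has already been kept
    val result = mutableListOf<String>()

    for (line in generatedCode.lines()) {
        if (next < studentLines.size && line.trim() == studentLines[next].trim()) {
            // The line is already in the student's code: keep it.
            result += line
            next++
        } else if (!addedKept) {
            // First added line: this becomes the single next step.
            result += line
            addedKept = true
        }
        // Any further added lines are dropped.
    }
    return result.joinToString("\n")
}

fun main() {
    val student = "val a = 1\nreturn a"
    val generated = "val a = 1\nval b = 2\nval c = 3\nreturn a"
    println(keepFirstAddedLine(student, generated))
}
```

In this example the generated code adds two statements, and the sketch keeps only `val b = 2`, so the student gets one logical action instead of two.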

Hint system evaluation by students

In addition to the quality control described in the previous subsection, we validated the quality of the system with experts and got feedback from students who used the tool in a course. In this subsection, we will only talk about the student validation; the details of the expert validation can be found in §5 of our paper.

As described above, hints have been developed for many MOOCs but less so for in-IDE courses, meaning that the MOOC-hint format is well-studied, while the in-IDE-hint format is not. Considering this, plus the fact that the IDE setting is inherently complex, we were interested in finding out how best to show text hints.

To find out, we conducted a user experience study consisting of 15-minute comparative usability interviews with nine students with varying levels of programming experience.

From the interviews, we learned that students prefer having:

- The hint in the same context as the task, in contrast to the hint in the code window

- The relevant position highlighted in the code

Beyond how best to show text hints, we were interested in what students thought about the usefulness and ease of use of the provided hints, which we studied both by looking at usage data and by asking the students directly. The data comes from 14 students who completed five to six projects in the in-IDE Kotlin Onboarding: Introduction course, available in the JetBrains Academy catalog. More specifically, we used the KOALA tool to collect in-IDE activity data from the students who agreed to participate, followed by a qualitative assessment with open-ended questions.

Overall, we collected 64,512 code snippets; if you are interested in the dataset for research purposes, you can contact our team. The data indicated that:

- Students requested text hints 191 times.

- Of those 191 times, students requested to see a code hint about half the time (101).

- Request distribution varied across the course’s projects: the first, basic projects saw fewer hint requests than the later ones.

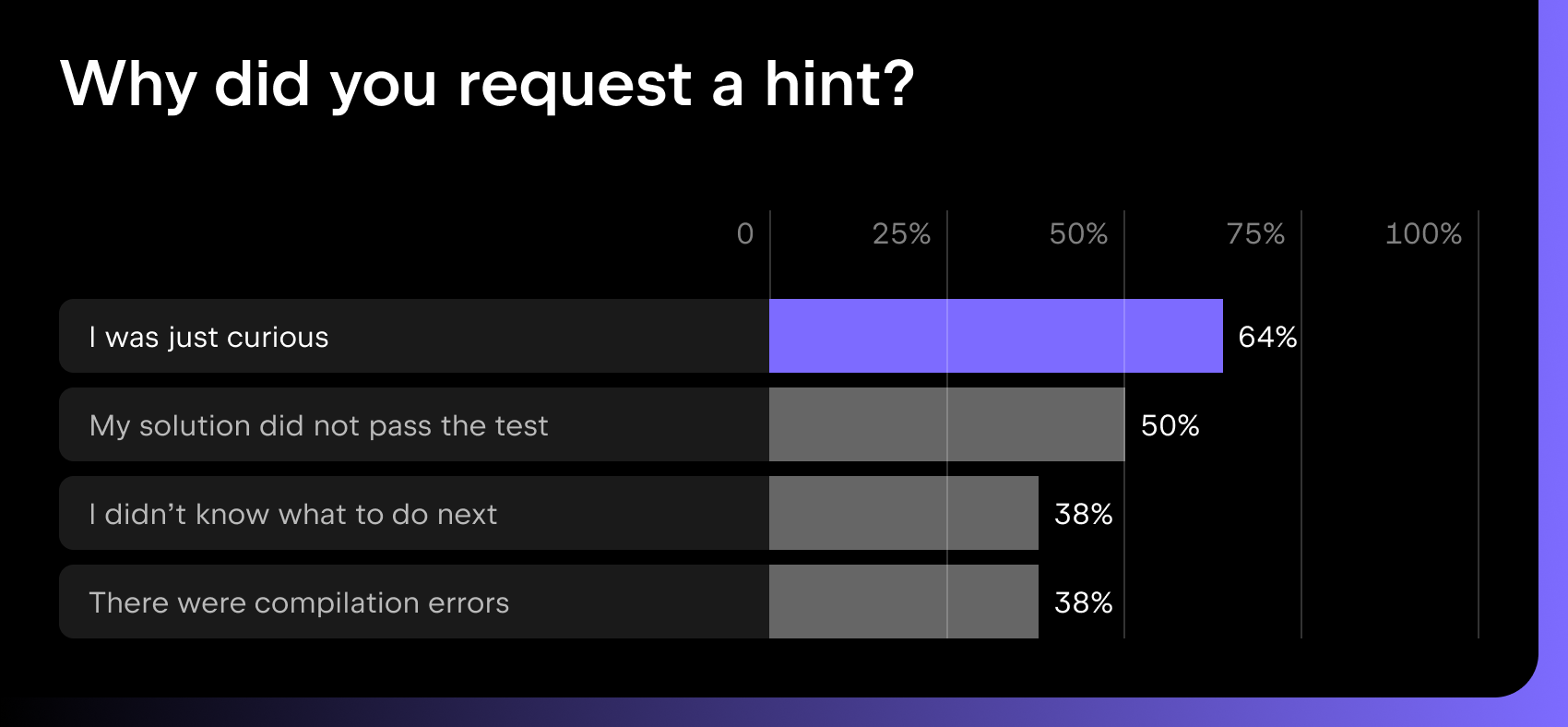

In the qualitative survey, 14 students were asked why they requested a hint, and could give multiple answers. The figure below shows the four most popular reasons for choosing a hint.

As seen in the figure above, the students reported using the hint system for its purpose, as well as for their own curiosity. There is additionally room for improvement with respect to compilation errors.

We also asked them further questions. Insights from their responses are summarized in the following:

- When asked what they did when the hint was unclear, 11 of the 14 students said they then tried to solve the task on their own, without searching online or asking an LLM.

- Half of the students said they preferred to see a combination of both text and code hints, which aligns with what we saw in the usage data, where a code hint was requested after about half of the text hint requests.

A key insight from the interviews was that the hint system is most helpful for beginner students. The hint tool is more helpful than searching online, because students at that level do not know what to search for or what prompt to use to ask an LLM. The flip side of this is that more advanced students prefer a chat-based interface, as in CS50.ai (see above), suggesting that a future version of our system would benefit from combining hints with a chat feature. In general, the students gave positive feedback on our hint system, with many indicating that they would be interested in continuing to use it for future courses.

Watch this space for a follow-up study, where we analyzed how another set of students used the hint tool. This work looks specifically at where any pain points might arise, and what can be done to improve students’ learning experience.

In the meantime, if you are interested in collaborating with our Education Research team, you can find more information on the website.
