Procedural macros under the hood: Part II

In our previous blog post, we discussed the essence of Rust’s procedural macros. Now we invite you to dive deeper into how they are processed by the compiler and the IDE.

Compilation of procedural macros

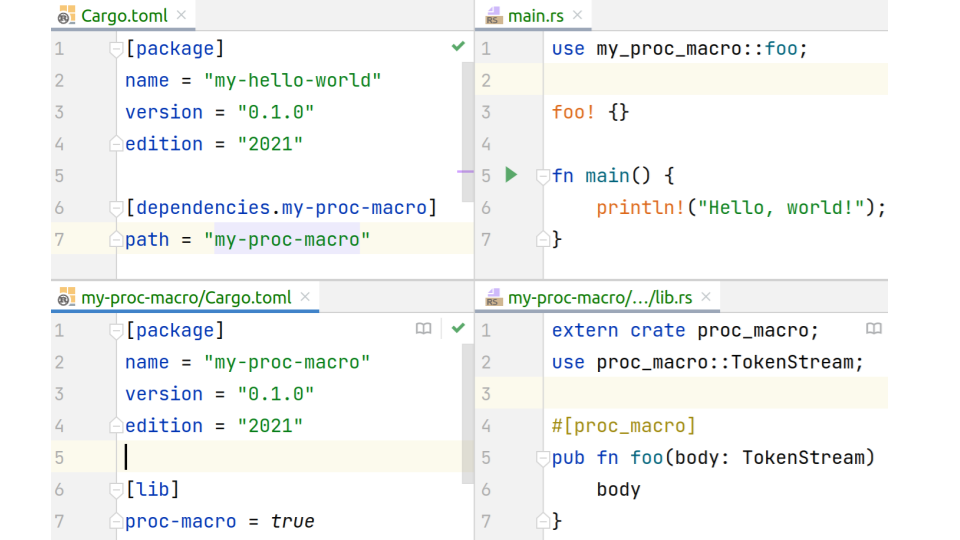

To start, consider a ‘Hello, world’ program that uses a procedural macro defined in a separate crate (here, the my_proc_macro crate used by the my-hello-world binary).

In order to build this project, Cargo performs 2 calls to rustc:

cargo build -vv
rustc --crate-name my_proc_macro --crate-type proc-macro --out-dir ./target/debug/deps my-proc-macro/src/lib.rs
rustc --crate-name my-hello-world --crate-type bin --out-dir ./target/debug/deps --extern my_proc_macro=./target/debug/deps/libmy_proc_macro-25f996c8b2180912.so src/main.rs

During the first call to rustc, Cargo passes the procedural macro source with the --crate-type proc-macro flag. As a result, the compiler creates a dynamic library, which is then passed to the second call along with the ‘Hello, world’ source to build the actual binary. You can see that at the end of the second call:

--extern my_proc_macro=./target/debug/deps/libmy_proc_macro-25f996c8b2180912.so src/main.rs

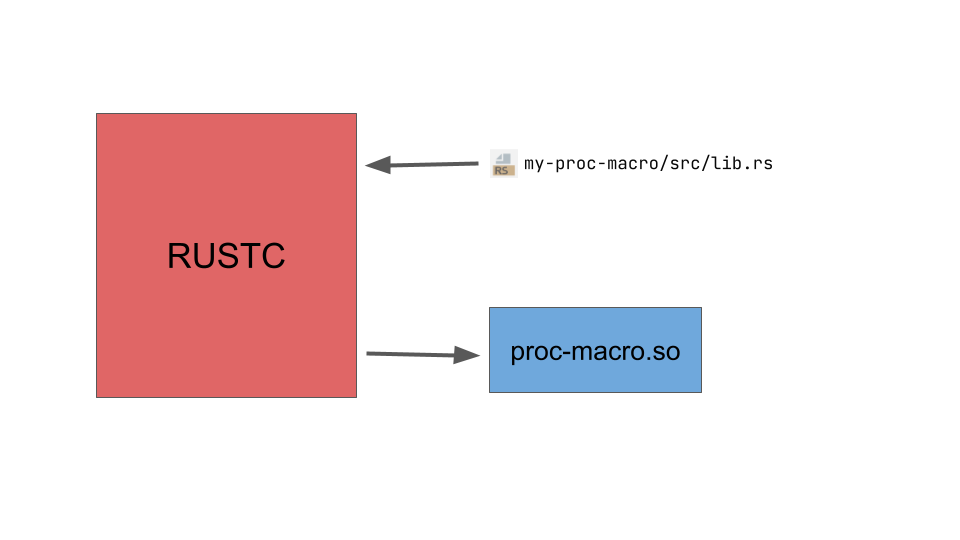

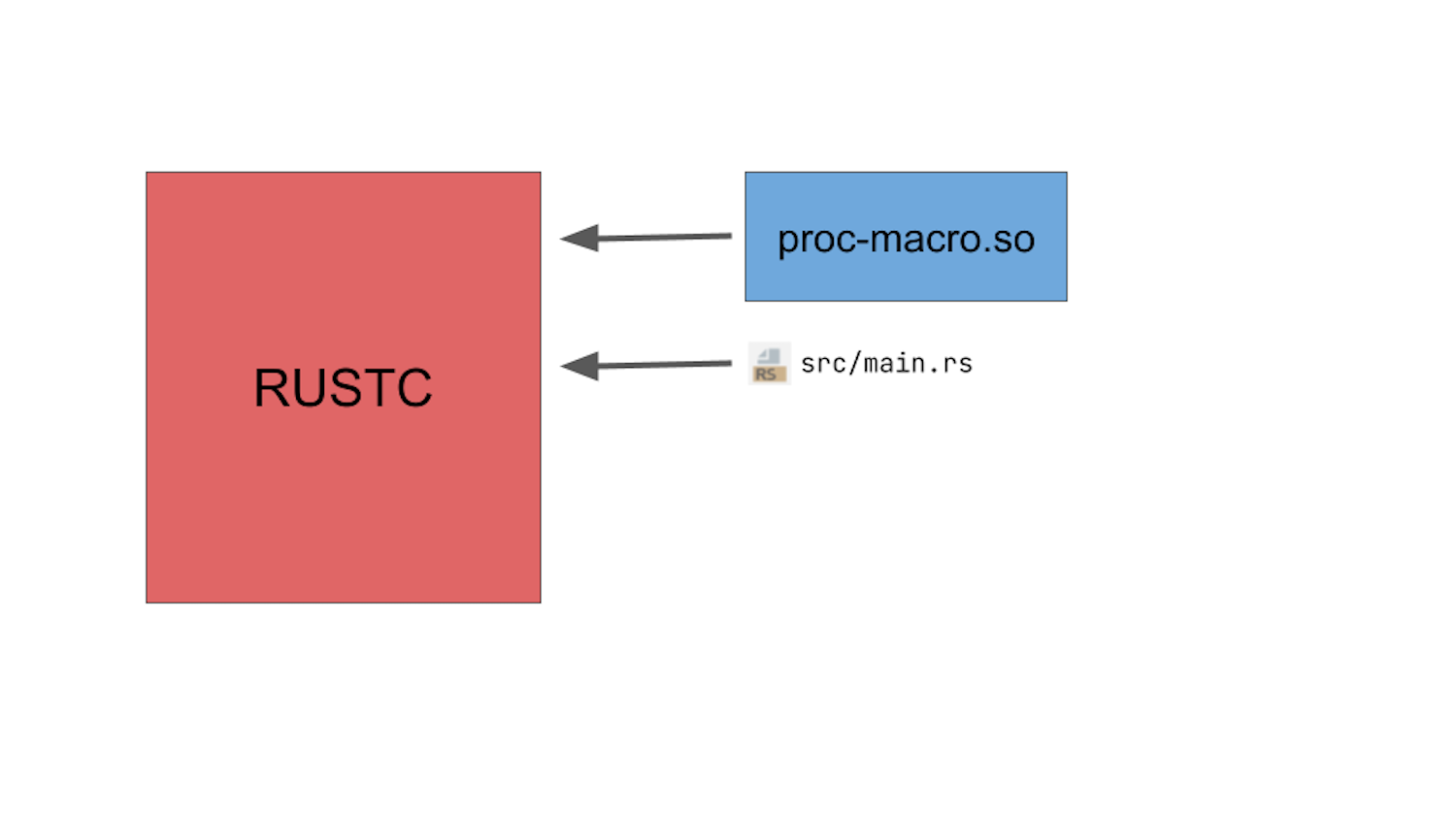

This is how the process can be illustrated.

Here’s the first call to rustc:

And here’s the second call:

First call to rustc: dynamic library

The intermediary dynamic library includes a special symbol, __rustc_proc_macro_decls_***__, which contains an array of macros declared in the project.

This is roughly what a procedural macro’s code could look like after the changes that rustc makes during compilation:

extern crate proc_macro;
use proc_macro::TokenStream;

// #[proc_macro]
pub fn foo(body: TokenStream) -> TokenStream { body }

use proc_macro::bridge::client::ProcMacro;

#[no_mangle]
static __rustc_proc_macro_decls_4bd76f2d7cc55ae0__: &[ProcMacro] = &[
    ProcMacro::bang("foo", crate::foo)
];

The ProcMacro array includes information on macro types and names, as well as references to the procedural macro functions (crate::foo).
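To make the idea of a declarations array more concrete, here is a minimal sketch of a registry that stores a macro kind, a name, and a function reference, plus a lookup by kind and name. Everything in it is an assumption made for illustration: `MacroKind`, `MacroDecl`, `MACRO_DECLS`, and `expand_with` are hypothetical names, token streams are modeled as plain lists of strings, and every macro takes a single input. It is not the compiler’s actual `ProcMacro` type.

```rust
/// Stand-in for a token stream: a flat list of token strings (an assumption).
type Tokens = Vec<String>;

/// The three flavors of procedural macros.
#[derive(Clone, Copy, Debug, PartialEq)]
enum MacroKind {
    Bang,   // foo!(...)
    Attr,   // #[foo]
    Derive, // #[derive(Foo)]
}

/// Hypothetical, simplified model of one entry in the declarations array.
/// For simplicity every macro here takes one input stream.
struct MacroDecl {
    kind: MacroKind,
    name: &'static str,
    expand: fn(Tokens) -> Tokens,
}

/// A function-like macro that returns its input unchanged, like `foo` above.
fn foo(body: Tokens) -> Tokens {
    body
}

/// An attribute-style macro that leaves the annotated item untouched.
fn noop_attr(item: Tokens) -> Tokens {
    item
}

/// A derive-style macro that generates nothing.
fn empty_derive(_item: Tokens) -> Tokens {
    Vec::new()
}

/// The exported table: kinds, names, and references to the macro functions.
static MACRO_DECLS: &[MacroDecl] = &[
    MacroDecl { kind: MacroKind::Bang, name: "foo", expand: foo },
    MacroDecl { kind: MacroKind::Attr, name: "noop_attr", expand: noop_attr },
    MacroDecl { kind: MacroKind::Derive, name: "EmptyDerive", expand: empty_derive },
];

/// What the consumer of the table does: find the entry, then call through it.
fn expand_with(kind: MacroKind, name: &str, input: &[&str]) -> Option<Tokens> {
    let decl = MACRO_DECLS
        .iter()
        .find(|decl| decl.kind == kind && decl.name == name)?;
    let owned: Tokens = input.iter().map(|t| t.to_string()).collect();
    Some((decl.expand)(owned))
}
```

Calling `expand_with(MacroKind::Bang, "foo", &["1", "+", "2"])` finds the `foo` entry and returns the three tokens unchanged, while an unknown name returns `None`.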

The __rustc_proc_macro_decls_***__ symbol is exported from the dynamic library, and rustc finds it during the second call.

readelf --dyn-syms --wide ./target/debug/deps/libmy_proc_macro-25f996c8b2180912.so
...
1020: 00000000001a29a0 16 OBJECT GLOBAL DEFAULT 22 __rustc_proc_macro_decls_4bd76f2d7cc55ae0__
…

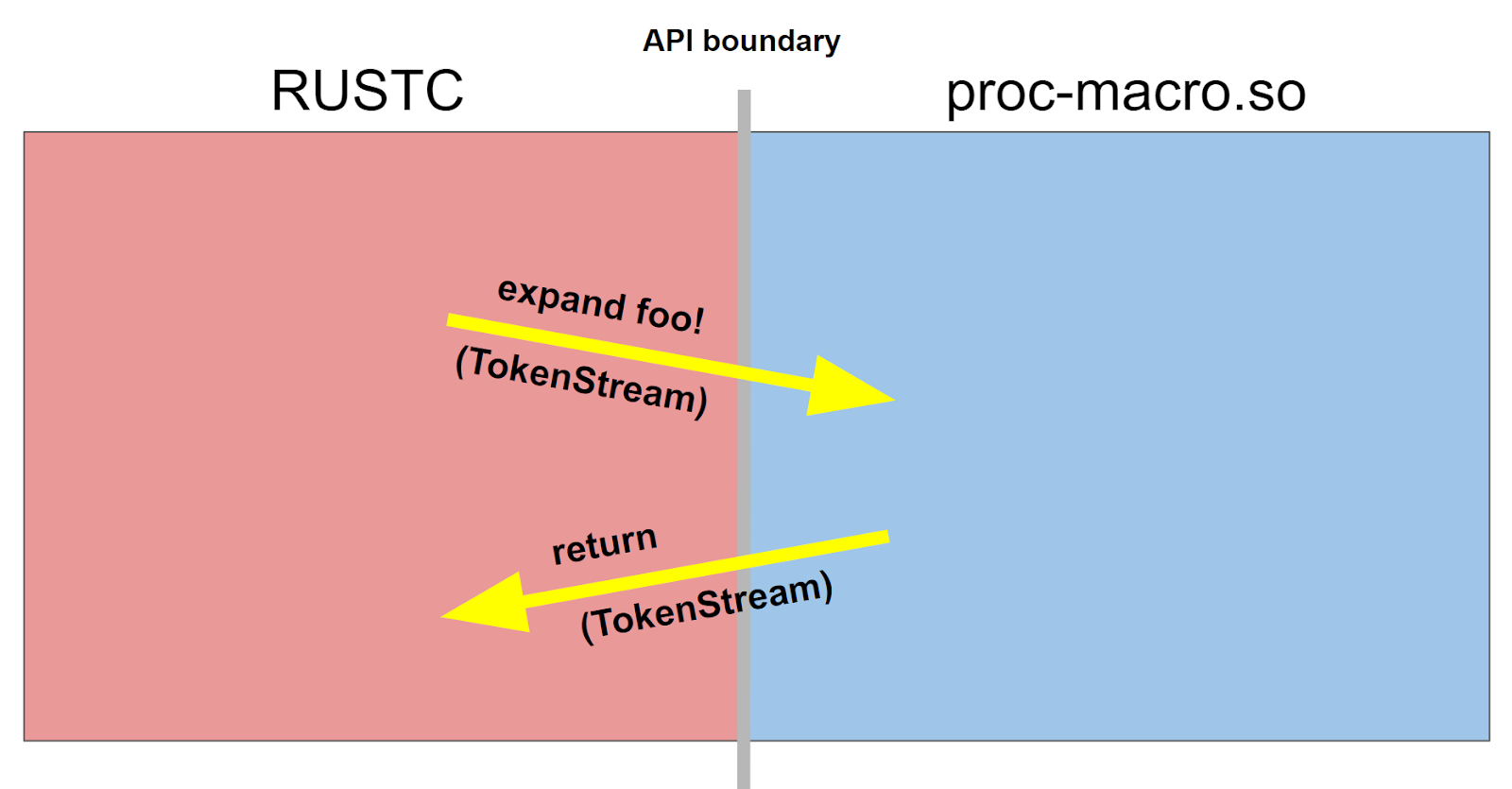

Second call to rustc: ABI

During the second call, rustc finds the __rustc_proc_macro_decls_***__ symbol and recognizes a function reference there.

At this point, you might expect the compiler to command the dynamic library to expand the macro using the given TokenStream:

However, this can’t be done because Rust doesn’t have a stable ABI. An ABI (Application Binary Interface) covers calling conventions and data layout (for example, the order in which structure fields are placed in memory). For a function in a dynamic library to be called, the compiler must know its ABI.
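The layout part of an ABI can be observed directly. The sketch below is an illustration with hypothetical type names (`RustLayout`, `CLayout`): it declares the same three fields twice, once with the default Rust representation and once with a fixed C-compatible representation, and measures the result. The offsets of the C-compatible struct assume a typical platform where `u32` is 4-byte aligned and `u16` is 2-byte aligned.

```rust
use std::mem::size_of;

/// Default Rust representation: the compiler may reorder fields,
/// and the chosen order is not guaranteed to stay the same across versions.
#[allow(dead_code)]
struct RustLayout {
    a: u8,
    b: u32,
    c: u16,
}

/// C representation: fields stay in declaration order with C padding rules.
#[repr(C)]
struct CLayout {
    a: u8,
    b: u32,
    c: u16,
}

/// Byte offsets of `a`, `b`, and `c` inside `CLayout`, measured on a real value.
fn c_layout_offsets() -> (usize, usize, usize) {
    let value = CLayout { a: 0, b: 0, c: 0 };
    let base = &value as *const CLayout as usize;
    (
        &value.a as *const u8 as usize - base,
        &value.b as *const u32 as usize - base,
        &value.c as *const u16 as usize - base,
    )
}

/// Sizes in bytes of the two structs: (default representation, C representation).
fn layout_sizes() -> (usize, usize) {
    (size_of::<RustLayout>(), size_of::<CLayout>())
}
```

On such a platform the C-compatible struct has offsets 0, 4, and 8 and a size of 12 bytes. The size of `RustLayout` is whatever the compiler picks; it is often smaller because the fields get reordered, but nothing guarantees that, which is exactly why two independently compiled binaries cannot rely on it.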

Rust’s ABI is not fixed, meaning that it can change with each new compiler version. So there are two requirements for the dynamic library’s ABI and the program’s ABI to match:

1) Both the library and the program should be compiled using the same compiler version.

2) The codegen backend of the compiler should also be the same.

As you might know, rustc is compiled by itself. When a new compiler version is released, it is first compiled by the previous version, and then compiled by itself. Similarly, our procedural macro library is compiled by the same compiler that later loads it. So it seems that rustc and procedural macros are compiled using the same compiler version, and their ABIs should match. But here comes the second part of the equation: the codegen backend.

Rustc codegen backend and proc macros

This is where it’s helpful to take a look at how things used to work. Prior to 2018, procedural macros used Rust’s ABI, but then it was decided that the compiler’s backend should be replaceable. Today, although the default rustc backend is LLVM-based, there are alternative backends such as Cranelift and GCC.

How is it possible to add more backends to the compiler while simultaneously keeping procedural macros working?

There are two solutions that seem to be obvious, but they have their flaws:

- C ABI

In the case of the C ABI, a function should be declared with extern "C":

extern "C" fn foo() { ... }

This kind of function can take C types or the types declared with the repr(C) attribute:

#[repr(C)] struct Foo { ... }

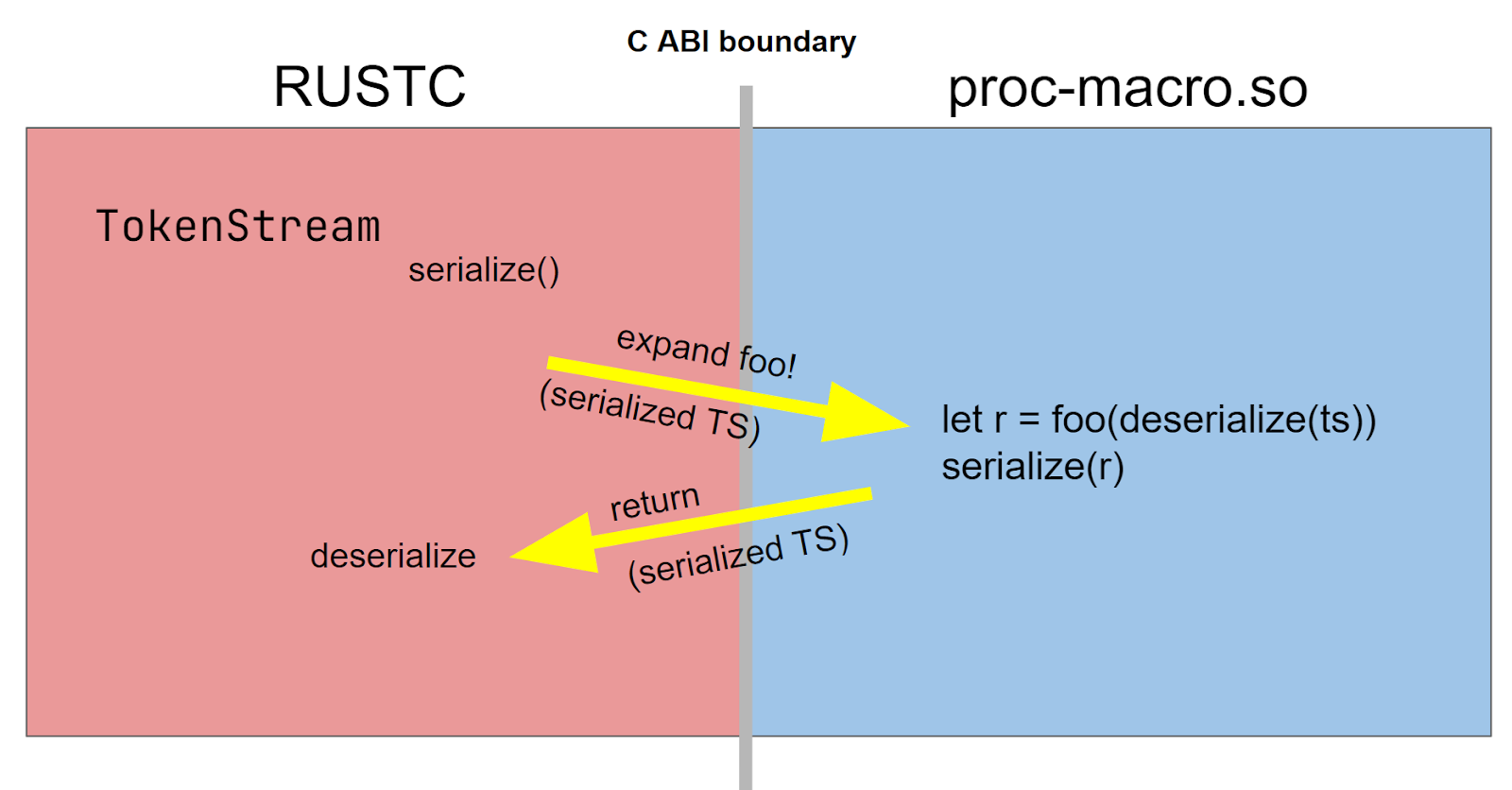

- Full serialization of macro-related types

In this case, macro-related types like TokenStream would be serialized and then passed to the dynamic library. The macro would deserialize them back to their inner types, call the function, and then serialize the results to pass them back to the compiler.
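The full-serialization round trip can be sketched as follows. This is only an illustration under stated assumptions: a token stream is modeled as a list of strings, the wire format (little-endian length prefixes) is made up for the example, and `macro_entry_point` and `expand` are hypothetical names. The point is that only bytes cross the boundary between the two sides.

```rust
/// Stand-in for a token stream: a flat list of token strings (an assumption).
type Tokens = Vec<String>;

/// Encode as: u32 token count, then for each token a u32 length and UTF-8 bytes.
fn serialize(tokens: &[String]) -> Vec<u8> {
    let mut out = Vec::new();
    out.extend_from_slice(&(tokens.len() as u32).to_le_bytes());
    for token in tokens {
        out.extend_from_slice(&(token.len() as u32).to_le_bytes());
        out.extend_from_slice(token.as_bytes());
    }
    out
}

/// Read a little-endian u32 at `pos` and advance `pos`, or return None if truncated.
fn read_u32(bytes: &[u8], pos: &mut usize) -> Option<u32> {
    let end = pos.checked_add(4)?;
    let chunk = bytes.get(*pos..end)?;
    let mut buf = [0u8; 4];
    buf.copy_from_slice(chunk);
    *pos = end;
    Some(u32::from_le_bytes(buf))
}

/// Decode the format written by `serialize`; None on malformed input.
fn deserialize(bytes: &[u8]) -> Option<Tokens> {
    let mut pos = 0;
    let count = read_u32(bytes, &mut pos)? as usize;
    let mut tokens = Vec::new();
    for _ in 0..count {
        let len = read_u32(bytes, &mut pos)? as usize;
        let end = pos.checked_add(len)?;
        let raw = bytes.get(pos..end)?;
        pos = end;
        tokens.push(String::from_utf8(raw.to_vec()).ok()?);
    }
    Some(tokens)
}

/// Library side: bytes in, bytes out. This toy macro wraps its input in parentheses.
fn macro_entry_point(input: &[u8]) -> Vec<u8> {
    let tokens = deserialize(input).expect("malformed input");
    let mut output = vec!["(".to_string()];
    output.extend(tokens);
    output.push(")".to_string());
    serialize(&output)
}

/// Compiler side: serialize the input, call the macro, deserialize the result.
fn expand(input: &[&str]) -> Tokens {
    let owned: Tokens = input.iter().map(|t| t.to_string()).collect();
    let reply = macro_entry_point(&serialize(&owned));
    deserialize(&reply).expect("malformed output")
}
```

With this sketch, `expand(&["1", "+", "2"])` produces the tokens `(`, `1`, `+`, `2`, `)`. Note how much the simplification hides: real tokens also carry spans and other compiler-side data, all of which would need an encoding of their own.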

But it is not that simple. The correct solution came in pull request #49219, called “Decouple proc_macro from the rest of the compiler”. The actual process adopted in rustc is as follows:

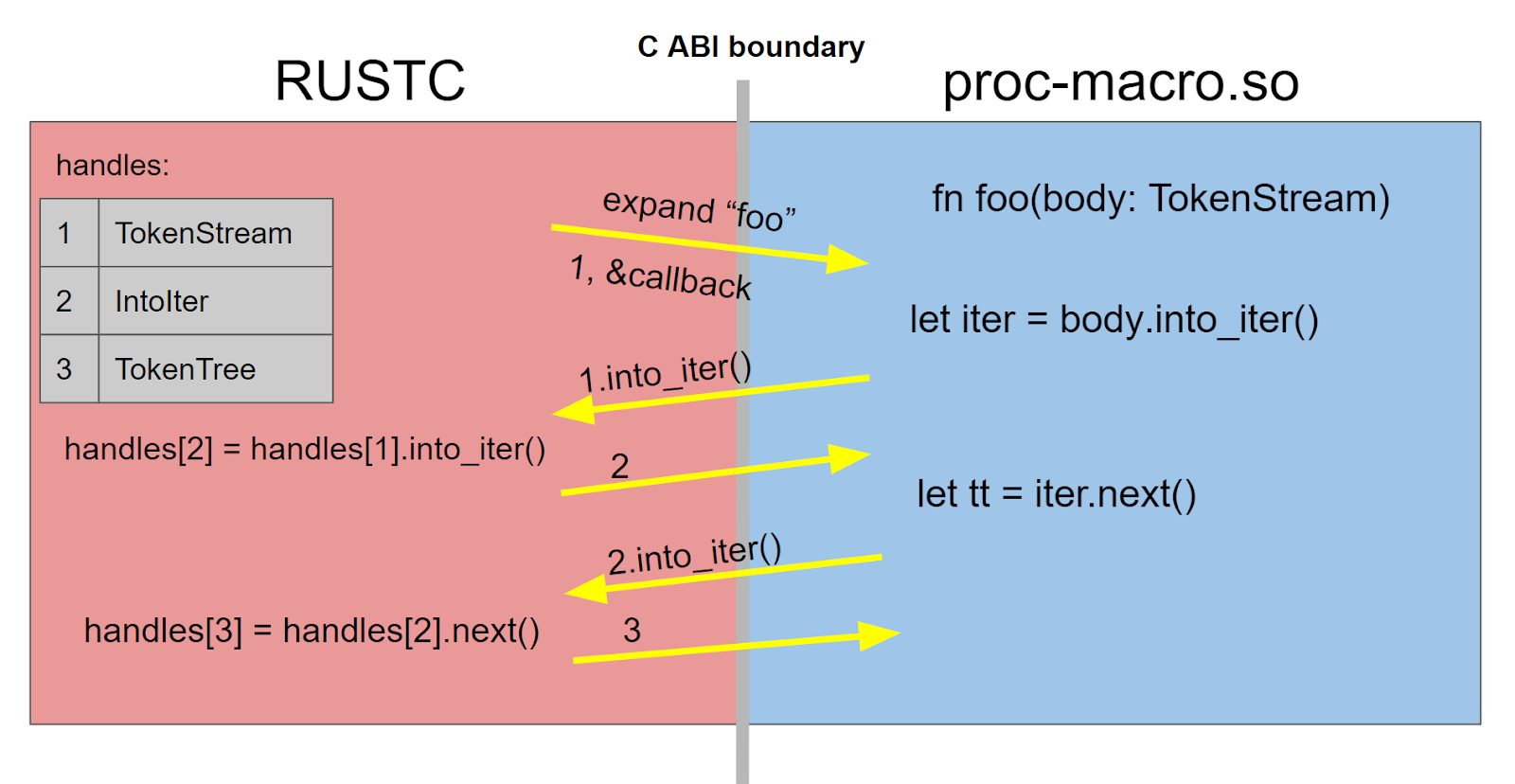

- Before rustc calls a procedural macro, it puts the TokenStream into the table of handles with a specific id. Then the procedural macro is called with that id instead of a whole data structure.

- On the dynamic library’s side, the id is wrapped into the library’s inner TokenStream type. Then, when the method of that TokenStream is called, that call is passed back to rustc with the id and the method name.

- Using the id, rustc takes the original TokenStream from the table of handles and calls the method on it. The result goes to the table of handles and gets the id that is used for further operations on that result, and so on.
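The three steps above can be sketched in a few dozen lines. This is a simplified model, not rustc’s actual bridge: `HandleTable`, `Request`, `Reply`, and `ClientTokenStream` are hypothetical names, token streams are lists of strings, and the macro receives the table as an explicit `bridge` argument instead of reaching the compiler through an encoded call across the library boundary.

```rust
use std::collections::HashMap;

/// Compiler-side token stream. A stand-in for rustc's internal type.
struct ServerTokenStream(Vec<String>);

/// Method calls the macro side forwards to the compiler, identified by handle id.
enum Request {
    /// Render the stream behind the handle as text.
    ToText(u32),
    /// Concatenate two streams and return a handle to the result.
    Concat(u32, u32),
}

/// Replies carry plain data or a new handle id, never the stream itself.
enum Reply {
    Text(String),
    Handle(u32),
}

/// Compiler-side table of handles: id -> real token stream.
struct HandleTable {
    next_id: u32,
    streams: HashMap<u32, ServerTokenStream>,
}

impl HandleTable {
    fn new() -> Self {
        HandleTable { next_id: 1, streams: HashMap::new() }
    }

    /// Store a stream and hand out a fresh id for it.
    fn insert(&mut self, stream: ServerTokenStream) -> u32 {
        let id = self.next_id;
        self.next_id += 1;
        self.streams.insert(id, stream);
        id
    }

    /// Look up the real streams by id and run the requested method on them.
    fn dispatch(&mut self, request: Request) -> Reply {
        match request {
            Request::ToText(id) => Reply::Text(self.streams[&id].0.join(" ")),
            Request::Concat(a, b) => {
                let mut tokens = self.streams[&a].0.clone();
                tokens.extend(self.streams[&b].0.iter().cloned());
                Reply::Handle(self.insert(ServerTokenStream(tokens)))
            }
        }
    }
}

/// Library-side TokenStream: nothing but the id.
#[derive(Clone, Copy)]
struct ClientTokenStream(u32);

impl ClientTokenStream {
    fn concat(self, other: ClientTokenStream, bridge: &mut HandleTable) -> ClientTokenStream {
        match bridge.dispatch(Request::Concat(self.0, other.0)) {
            Reply::Handle(id) => ClientTokenStream(id),
            Reply::Text(_) => unreachable!("Concat always returns a handle"),
        }
    }

    fn to_text(self, bridge: &mut HandleTable) -> String {
        match bridge.dispatch(Request::ToText(self.0)) {
            Reply::Text(text) => text,
            Reply::Handle(_) => unreachable!("ToText always returns text"),
        }
    }
}

/// A toy macro that emits its input twice. It only ever touches handles.
fn duplicate(input: ClientTokenStream, bridge: &mut HandleTable) -> ClientTokenStream {
    input.concat(input, bridge)
}

/// Compiler side: register the input, call the macro with the id, read the result.
fn expand(tokens: &[&str]) -> String {
    let mut table = HandleTable::new();
    let owned = tokens.iter().map(|t| t.to_string()).collect();
    let input = table.insert(ServerTokenStream(owned));
    let output = duplicate(ClientTokenStream(input), &mut table);
    output.to_text(&mut table)
}
```

For example, `expand(&["a", "+", "b"])` returns `"a + b a + b"`. The macro never sees the contents of a stream unless it explicitly asks for them, so the compiler-side type can change freely without affecting the library.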

How is this approach better than the simpler version with full serialization of all structures or C ABI?

- Spans reference many compiler-internal types, which are better left unexposed. Also, implementing serialization for all of those internal types would be expensive.

- This approach allows callbacks into the compiler (the procedural macro might want to ask the compiler for some additional action).

- With this approach, macros can (in theory) be extracted into a separate process or be executed on a virtual machine.

Proc macros and potentially dangerous code

As we saw, a procedural macro is essentially a dynamic library loaded by the compiler. That library can execute arbitrary, and potentially dangerous, code. For example, that code could segfault, causing the compiler to segfault too, or call fork and duplicate the compiler process.

Aside from this obviously dangerous behavior, procedural macros can also make other system calls, such as accessing the file system or the network. These calls are not necessarily unsafe, but may not be good practice in a macro. An illustrative example here is procedural macros that run SQL queries against a database at compile time.

Procedural macros in IDEs

In order for the IDE to analyze procedural macros on the fly, it needs to have the expanded code available at all times.

In the case of declarative macros, the IDE can expand macros by itself. But in the case of procedural macros, it needs to load the dynamic library and perform the actual macro calls.

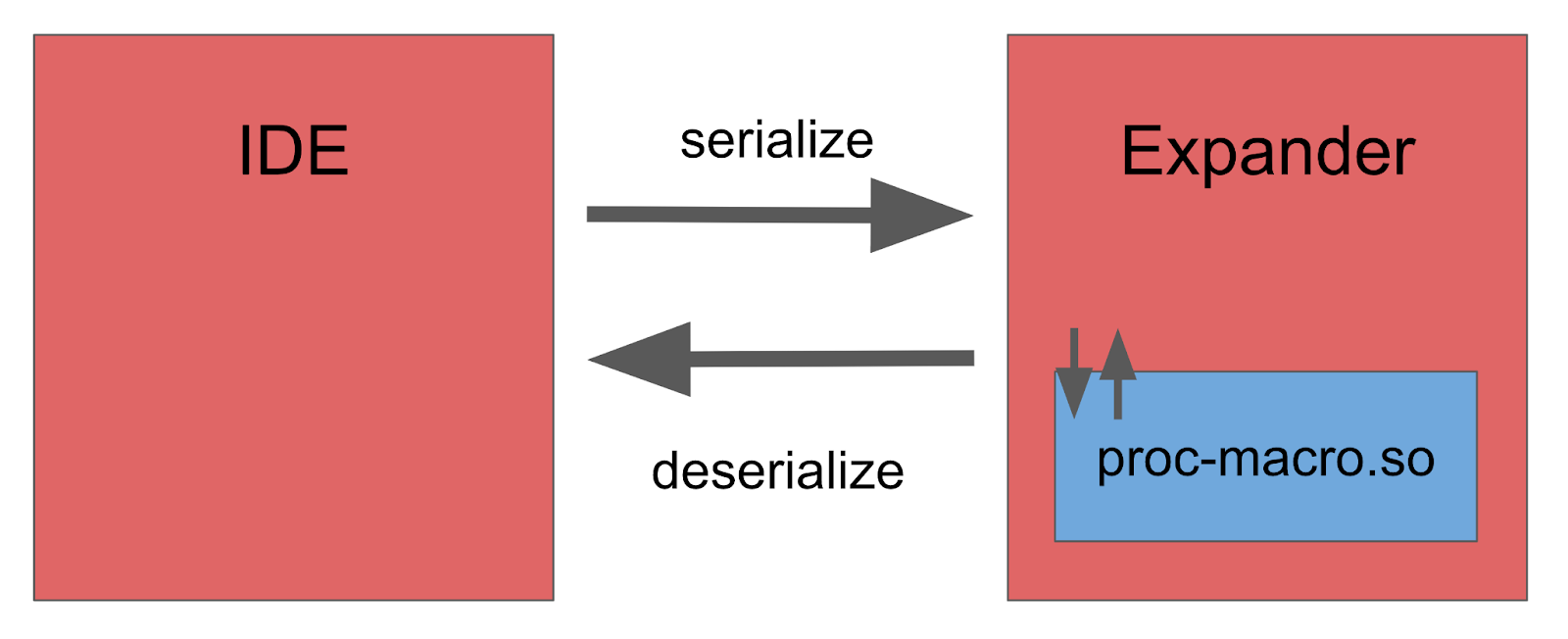

One approach might be to simply replace the compiler with the IDE, keeping the same workflow as described above. However, bear in mind that a procedural macro can segfault (and cause the IDE to segfault) or, for example, consume so much memory that the IDE fails with out-of-memory errors. For these reasons, procedural macro expansion must run in a process separate from the IDE.

That process is called the expander. It loads the dynamic library and uses the same interface as the compiler to interact with procedural macros. Communication between the IDE and the expander is performed using full data serialization.

The expander is implemented similarly in both rust-analyzer and IntelliJ Rust.

________________________________

We hope this article has helped you learn more about procedural macros compilation and the way procedural macros are treated by the IDE. If you have any questions, please ask them in the comments below or ping us on Twitter.

Your Rust team

JetBrains

The Drive to Develop
