JetBrains AI

Supercharge your tools with AI-powered features inside many JetBrains products

The Best AI Models for Coding: Accuracy, Integration, and Developer Fit

AI models and coding assistants have become essential tools for developers. Today, developers rely on large language models (LLMs) to accelerate coding, improve code quality, and reduce repetitive work across the entire development lifecycle. From intelligent code completion to refactoring, debugging, and documentation, AI-powered tools are now embedded directly into daily workflows.

Drawing on insights from the latest JetBrains Developer Ecosystem Report 2025, this guide compares the top large language models (LLMs) used for programming. It focuses on how leading LLMs balance accuracy, speed, security, cost, and IDE integration, helping developers and teams choose the right model for their specific needs.

Throughout the article, we also highlight how tools like JetBrains AI Assistant bring these models directly into professional development environments, backed by real-world usage data from the report.

Table of contents

- What are AI models for coding?

- How developers choose between AI models

- Top AI models in 2025

- Evaluation criteria for AI coding assistants

- Open-source vs. proprietary models

- Enterprise readiness and security

- How to select the right AI coding model for you

- FAQ

- Conclusion

What are AI models for coding?

AI models for coding are large language models (LLMs) trained on vast collections of source code, technical documentation, and natural language text. Their purpose is to understand programming intent and generate relevant, context-aware responses that assist developers during software creation. Unlike traditional static tools, these models can reason about code structure, explain logic, and adapt to different programming languages and frameworks.

The best LLMs for programming support a wide range of everyday development tasks, most commonly code completion, refactoring, debugging, documentation writing, and test creation. By delegating such repetitive or boilerplate tasks to an LLM, developers can turn their attention to more complex problem-solving and system design.

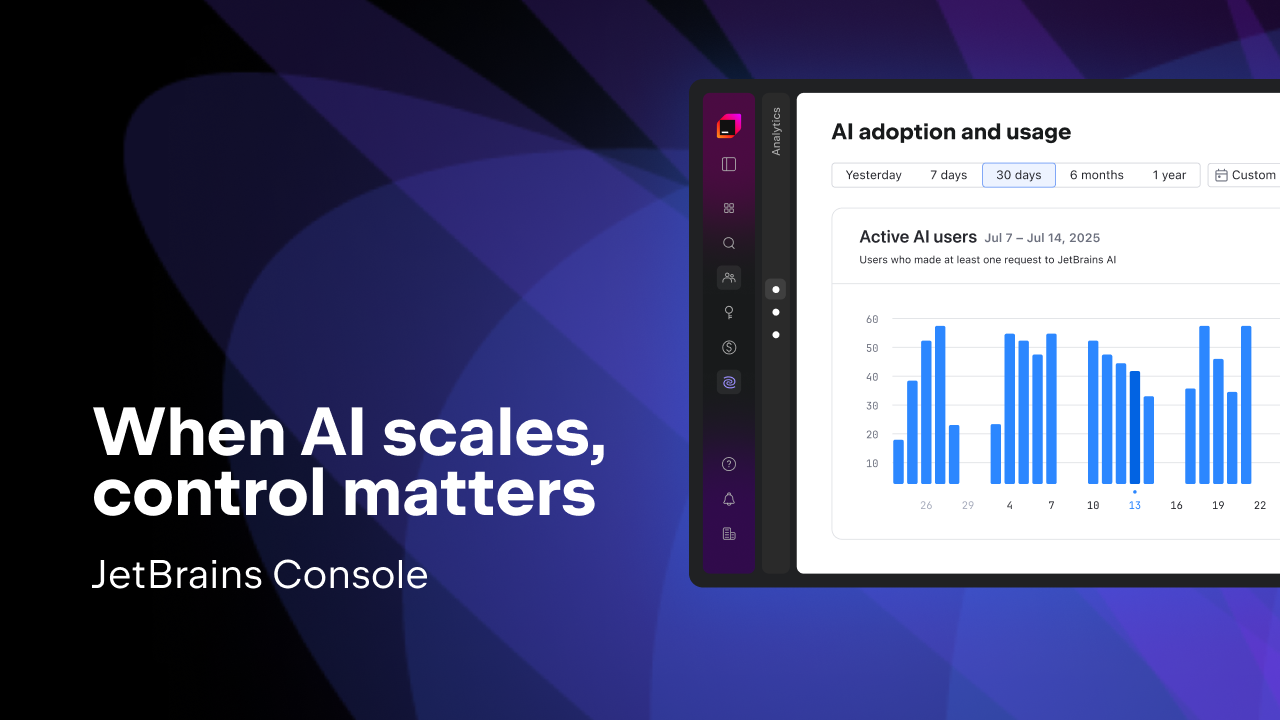

Most developers interact with AI coding tools through IDE integrations, browser tools, or APIs. This is where IDE-based assistants, such as JetBrains AI Assistant, are particularly valuable, as they operate directly within the development context, using project structure, files, and language semantics to improve accuracy and relevance.

Adoption of AI coding tools is shaped by several critical factors, including accuracy, latency, cost efficiency, and data privacy. According to the JetBrains Developer Ecosystem Report 2025, adoption is now widespread, with up to 85% of developers regularly using AI tools for coding and development in 2025.

As AI capabilities expand, developers face an important challenge: selecting those AI models that best fit their workflow. The next section discusses how developers can evaluate the various options and make the best decision for their needs.

How developers choose between AI models

Developers’ adoption of AI coding tools in 2025 is driven by how well an AI model integrates into real-world workflows and delivers consistent output. This often goes beyond technical specs alone and involves various practical and trust-based factors.

The top concern identified in the JetBrains Developer Ecosystem Report 2025 is code quality. IDE integration is another major priority: AI tools that work seamlessly inside familiar environments, such as JetBrains IDEs, are far more likely to be adopted than standalone interfaces. Pricing and licensing also matter, especially for individual developers and small teams who need predictable or affordable access.

For professional teams, data privacy and security increasingly shape decision-making around AI model selection. The ability to control how prompts and code are processed, whether models can be deployed locally, and how data is retained or logged are all critical considerations. Customization options, including fine-tuning and contextual prompts, are also becoming more relevant as teams seek domain-specific optimization.

Overall, insights from the report indicate a clear divide. Individual developers prioritize usability, responsiveness, and cost efficiency, while organizations focus on compliance, governance, and long-term scalability.

Key selection factors for AI coding assistants

This table summarizes the core criteria developers use for quick comparison.

| Criterion | Why it matters | How to assess |
| --- | --- | --- |
| Code quality | Determines whether generated code is correct, maintainable, and consistent with best practices | Evaluate accuracy and reasoning in real coding scenarios |
| IDE integration | Affects workflow continuity and adoption rate | Check for native support in JetBrains IDEs or other editors |
| Price and licensing | Influences accessibility for individuals and teams | Compare pricing tiers, free limits, and scalability costs |
| Data privacy and security | Ensures that code and prompts are handled safely | Verify local execution, encryption, and data policy |
| Local or self-hosted options | Important for teams with compliance or IP control needs | Assess support for private model deployment |
| Fine-tuning and customization | Enables domain-specific improvements and internal optimization | Check whether the model supports custom training or contextual prompts |

With these criteria in mind, the next section explores the top AI models developers use in 2025 and how they compare in practice.

Top AI models in 2025

The JetBrains Developer Ecosystem Report 2025 shows that developers do not rely on a single LLM. Instead, they use a small set of the best AI models for coding, depending on accuracy needs, workflow integration, cost constraints, and data-handling requirements.

Based on developer survey data, the report identifies the following AI models as the most commonly used and trusted for coding tasks in 2025. This list forms the basis of a comparison grounded in real-world adoption rather than theoretical benchmarks:

GPT models (OpenAI): Models like GPT-5 and GPT-5.1 are widely used and recognized as some of the best LLMs for programming in day-to-day development, particularly for code generation, refactoring, and explanation tasks. These models are incorporated in daily workflows due to their consistent output quality and large context windows. Their trade-off is cost, especially for teams with heavy usage.

Claude models (Anthropic): Claude 3.7 Sonnet is commonly chosen by developers working with large files, monorepos, or documentation-heavy projects. It is frequently cited among top AI code assistants for its ability to reason over long inputs and maintain structure in explanations and generated code. However, compared to GPT-based tools, it offers fewer native integrations.

Gemini (Google): Gemini 2.5 Pro appears most often in workflows tied to Google’s ecosystem. Developers report using it for tasks that combine coding with documentation, search, or collaborative environments. While it performs well in speed and accessibility, it is less flexible for teams that require deep customization or private deployments.

DeepSeek: DeepSeek R1 has gained attention among developers seeking lower-cost AI coding assistance or local deployment options. It is increasingly included in AI coding assistant comparisons for teams experimenting with AI at scale while maintaining tighter control over data and infrastructure.

Open-source models: Models such as Qwen and StarCoder serve a smaller but growing segment of developers. They are most popular among teams with strong DevOps capabilities or strict data-governance requirements. While they offer maximum control, they also require significant operational effort.

Overall, differences in reasoning accuracy, speed, context length, and IDE integration significantly influence developer preferences when selecting among the best AI models for coding. For instance, some developers may prioritize performance and reasoning depth with GPT-4o or Claude 3.7. Others may choose more cost-efficient or private alternatives, such as DeepSeek and open-source models, depending on workflow and organizational constraints.

Capabilities of leading AI models for coding

| Model | Deployment model | Config / Interface | Best for | Strength | Trade-off |
| --- | --- | --- | --- | --- | --- |
| GPT-5 / GPT-5.1 | Cloud / API | Text + code input | Broad coding and reasoning tasks | High accuracy and large context | Higher cost per token |
| Claude 3.7 Sonnet | Cloud / API | Natural language focus | Structured code and documentation | Contextual reasoning, long input handling | Limited tool integrations |
| Gemini 2.5 Pro | Cloud | Multimodal, Google ecosystem | Web-based workflows | Fast response, cloud collaboration | Limited fine-tuning |
| DeepSeek R1 | Cloud / Local | API and SDK | Cost-efficient large-scale coding | Competitive performance, local option | Smaller ecosystem |
| Open-source models (Qwen, StarCoder, etc.) | Local / Self-hosted | Various | Privacy-first or custom use | Control, modifiability | Setup complexity, maintenance |

Pricing and total cost of ownership (TCO) comparison

| Model type | Cost profile | Scaling considerations |
| --- | --- | --- |
| GPT family | Usage-based, higher per-token cost | Scales well but requires budget planning |
| Claude family | Usage-based, mid-to-high cost | Efficient for long-context tasks |
| Gemini | Bundled cloud pricing | Optimized for cloud environments |
| DeepSeek | Lower usage costs | Attractive for frequent queries |
| Open-source | Infrastructure-dependent | No license fees, higher ops cost |
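The trade-off between usage-based pricing and infrastructure-dependent pricing can be made concrete with a rough break-even estimate. The sketch below is only an illustration: the class names, the per-token rate, and the monthly infrastructure and operations figures are all hypothetical placeholders, not real vendor prices, and it assumes self-hosted cost stays flat regardless of volume.

```python
from dataclasses import dataclass


@dataclass
class UsagePricing:
    """Usage-based (per-token) pricing. The rate is a hypothetical placeholder."""
    usd_per_million_tokens: float

    def monthly_cost(self, tokens_per_month: float) -> float:
        return tokens_per_month / 1_000_000 * self.usd_per_million_tokens


@dataclass
class SelfHostedPricing:
    """Infrastructure-dependent pricing: no license fee, fixed monthly cost."""
    infra_usd_per_month: float
    ops_usd_per_month: float

    def monthly_cost(self, tokens_per_month: float) -> float:
        # Simplifying assumption: existing hardware absorbs the load,
        # so the cost does not grow with token volume.
        return self.infra_usd_per_month + self.ops_usd_per_month


def break_even_tokens(api: UsagePricing, hosted: SelfHostedPricing) -> float:
    """Monthly token volume above which self-hosting becomes cheaper."""
    fixed = hosted.monthly_cost(0)
    return fixed / api.usd_per_million_tokens * 1_000_000


# Placeholder numbers for illustration only.
api = UsagePricing(usd_per_million_tokens=10.0)
hosted = SelfHostedPricing(infra_usd_per_month=3000.0, ops_usd_per_month=2000.0)

threshold = break_even_tokens(api, hosted)
print(f"Break-even at {threshold:,.0f} tokens per month")
```

Substituting your own measured token volume and actual quotes gives a first-order answer to whether "no license fees, higher ops cost" works out in your favor. A fuller model would also account for hardware scaling and engineering time.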

The next section builds on this by presenting a clear framework for objectively evaluating these models.

Evaluation criteria for AI coding assistants

Selecting an AI coding assistant requires balancing multiple factors rather than optimizing for a single metric. Accuracy, speed, cost, integration, and security all play a role, and their relative importance depends on whether the tool is used for personal productivity, enterprise compliance, or research and experimentation.

Developers surveyed in the JetBrains Developer Ecosystem Report 2025 consistently cite code accuracy and IDE integration as top priorities when evaluating LLMs. However, organizational users also emphasize governance, transparency, and scalability as part of a broader AI model assessment.

Core evaluation criteria for AI coding assistants

| Criterion | Why it matters | How to assess |
| --- | --- | --- |
| Accuracy and reasoning | Determines the reliability of code suggestions, explanations, and test generation | Compare model output on real codebases or benchmark problems |
| Integration and workflow fit | Ensures smooth adoption inside IDEs and CI/CD pipelines | Verify compatibility with JetBrains IDEs, VS Code, or API connectors |
| Cost and scalability | Affects accessibility for individual and organizational users | Review token pricing, API quotas, or enterprise licensing |
| Security and data privacy | Protects proprietary code and complies with organizational standards | Check data retention policies, encryption, and local deployment options |
| Context length and memory | Impacts how well the model understands complex projects or files | Evaluate maximum input size and conversational continuity |
| Customization and fine-tuning | Enables adaptation to specific domains or internal libraries | Determine whether the model allows prompt tuning, embeddings, or private training |
| Transparency and governance | Important for auditability and compliance | Confirm whether logs, audit trails, and explainability tools are available |
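One simple way to balance the criteria above rather than optimize for a single metric is a weighted scoring matrix. The following is a minimal sketch, not a method taken from the report: the weights, the 1 to 5 ratings, and the candidate names `model_a` and `model_b` are hypothetical and should be replaced with your team's own priorities and assessments.

```python
# Hypothetical weights per criterion; chosen so that they sum to 1.0.
CRITERIA_WEIGHTS = {
    "accuracy": 0.30,
    "integration": 0.20,
    "cost": 0.15,
    "security": 0.15,
    "context_length": 0.10,
    "customization": 0.05,
    "governance": 0.05,
}


def weighted_score(ratings, weights=CRITERIA_WEIGHTS):
    """Combine per-criterion ratings (1 = poor, 5 = excellent) into one score."""
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"Missing ratings for: {sorted(missing)}")
    return sum(weights[name] * ratings[name] for name in weights)


# Placeholder ratings for two unnamed candidates (illustration only).
candidates = {
    "model_a": {
        "accuracy": 5, "integration": 4, "cost": 2, "security": 3,
        "context_length": 5, "customization": 2, "governance": 3,
    },
    "model_b": {
        "accuracy": 3, "integration": 3, "cost": 4, "security": 5,
        "context_length": 3, "customization": 5, "governance": 4,
    },
}

# Rank candidates from highest to lowest weighted score.
ranked = sorted(
    candidates,
    key=lambda name: weighted_score(candidates[name]),
    reverse=True,
)
for name in ranked:
    print(f"{name}: {weighted_score(candidates[name]):.2f}")
```

An individual developer might raise the weights for cost and integration, while an enterprise team might shift weight toward security and governance. The same ratings can then produce a different ranking.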

These criteria underscore a fundamental choice developers must make between open-source and proprietary AI models, discussed in the next section.

Open-source vs. proprietary models

AI coding assistants generally fall into two categories: open-source or locally deployed models and commercial, cloud-managed models. A choice between them affects everything from data handling to performance and maintenance.

The JetBrains Developer Ecosystem Report 2025 shows that most developers currently rely on cloud-based proprietary AI coding tools, but a growing segment prefers local or private deployments due to security and compliance requirements. This group is now increasingly turning to local LLMs for coding and leveraging open-source models.

General industry patterns suggest different reasons behind each choice. Teams that choose open-source AI models for coding often seek transparency, customization, and infrastructure control. Proprietary models, on the other hand, offer faster onboarding, reliability, and vendor-managed updates.

While there is no single “best” option, the selection of either an open-source or proprietary model comes down to organizational priorities such as compliance, scalability, and available DevOps resources. The following comparison table summarizes each type’s advantages, limitations, and best-fit scenarios.

Comparison of open-source and proprietary AI coding models

| Type | Advantages | Limitations | Best fit |
| --- | --- | --- | --- |
| Open-source / Local models (e.g., StarCoder, Qwen, DeepSeek Local) | Full control of infrastructure and data, ability to customize and fine-tune, no recurring license fees | Requires setup and maintenance effort; updates and security are handled internally; performance may depend on local hardware | Teams with strong DevOps capabilities or strict data-governance requirements |
| Proprietary / Managed models (e.g., GPT-4o, Claude 3.7, Gemini Pro) | Fast setup, robust integrations, vendor-handled compliance, predictable performance, and enterprise support | Costs scale with usage; potential vendor lock-in; less transparency in training data | Individual developers and growing teams focused on speed and reduced operational overhead |

Now that we have explored the various models available to developers, we will examine enterprise readiness and security and consider how organizations evaluate governance, compliance, and reliability when adopting AI coding solutions.

Enterprise readiness and security

Enterprise AI coding tools must meet requirements far beyond accuracy or productivity gains. Security, compliance, and governance also play a decisive role.

According to the JetBrains Developer Ecosystem Report 2025, many companies hesitate to adopt AI coding tools due to concerns about data privacy, IP protection, and model transparency. These concerns need to be addressed before developers can use AI securely.

To achieve this, enterprise-ready AI models typically offer flexible deployment, role-based access control, encryption, audit logs, policy enforcement, and governance and compliance controls.

Some tools, such as JetBrains AI Assistant, support both cloud and on-premises integration, which suits teams that need a balance between agility and compliance. The table below summarizes the capabilities that enterprise-ready AI coding tools need, along with example tools.

Enterprise evaluation matrix for AI coding tools

| Capability | Why it matters | Example tools |
| --- | --- | --- |
| Deployment flexibility | Enterprises need to control where data and models run to meet compliance and integration requirements | TeamCity, JetBrains AI Assistant (self-hosted), GitLab, DeepSeek Local |
| Role-based access control (RBAC) and SSO | Centralizes identity management and reduces risk of unauthorized access | JetBrains AI Assistant, Harness, GitLab |
| Audit and traceability | Supports compliance with ISO, SOC, and internal governance audits | TeamCity, Jenkins (plugins), JetBrains AI Assistant |
| Policy as code / Approvals | Enables automated enforcement of deployment and review policies | Harness, GitLab, TeamCity |
| Data privacy and encryption | Protects source code and proprietary data during inference or storage | JetBrains AI Assistant, Claude 3.7 (enterprise), DeepSeek Local |
| Disaster recovery and backups | Minimizes downtime and preserves continuity in case of system failures | JetBrains Cloud Services, GitLab Self-Managed |
| Compliance standards | Ensures alignment with SOC 2, ISO 27001, GDPR, or regional equivalents | JetBrains AI Assistant, GitLab, Harness |

Now that we understand how to create an enterprise evaluation matrix, the next section will explain how teams can choose the right AI coding model based on their specific needs, balancing control, speed, and compliance.

How to select the right AI coding model for you

The best AI for developers depends on context. Teams must balance control, cost, integration, and compliance to find the best LLM for their workflows, rather than look for a single winner.

As you have seen, each model is suited to specific needs, be they speed, governance, or flexibility. This 8-step selection framework will guide you toward the right AI coding model for your requirements.

Step-by-step selection framework

| Step | Question | If “yes” → | If “no” → |
| --- | --- | --- | --- |
| 1 | Need full data control or on-premises security? | Use local or self-hosted models (DeepSeek Local, Qwen, open-source) | Continue |
| 2 | Primarily using JetBrains IDEs? | Use JetBrains AI Assistant (supports multiple LLMs) | Continue |
| 3 | Need a model optimized for GitHub workflows? | Choose GPT-4o or GitHub Copilot | Continue |
| 4 | Require large context handling for complex codebases? | Claude 3.7 Sonnet or Gemini 2.5 Pro | Continue |
| 5 | Need cost efficiency for frequent queries? | DeepSeek R1 or open-source alternatives | Continue |
| 6 | Require enterprise compliance (RBAC, SSO, audit logs)? | JetBrains AI Assistant, Harness, or GitLab | Continue |
| 7 | Prefer minimal setup and fast onboarding? | Managed cloud models (GPT-4o, Claude, Gemini) | Continue |
| 8 | Working with multi-language or monorepo projects? | JetBrains AI Assistant or GPT-4o | Continue |
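Read top to bottom, the framework above behaves like a first-match decision list: the first question answered "yes" determines the recommendation. The sketch below encodes that reading. The flag names and the `recommend` function are hypothetical, the recommendation strings are copied from the table, and returning `None` when every answer is "no" is an assumption, since the table does not define a fallback.

```python
from typing import Optional

# (requirement flag, recommendation) pairs, in the table's step order.
# Flag names are hypothetical; recommendation text comes from the table.
SELECTION_STEPS = [
    ("needs_full_data_control",
     "Local or self-hosted models (DeepSeek Local, Qwen, open-source)"),
    ("uses_jetbrains_ides",
     "JetBrains AI Assistant (supports multiple LLMs)"),
    ("needs_github_workflows",
     "GPT-4o or GitHub Copilot"),
    ("needs_large_context",
     "Claude 3.7 Sonnet or Gemini 2.5 Pro"),
    ("needs_cost_efficiency",
     "DeepSeek R1 or open-source alternatives"),
    ("needs_enterprise_compliance",
     "JetBrains AI Assistant, Harness, or GitLab"),
    ("prefers_minimal_setup",
     "Managed cloud models (GPT-4o, Claude, Gemini)"),
    ("has_multilanguage_or_monorepo",
     "JetBrains AI Assistant or GPT-4o"),
]


def recommend(answers: dict[str, bool]) -> Optional[str]:
    """Return the recommendation for the first step answered 'yes'."""
    for flag, recommendation in SELECTION_STEPS:
        if answers.get(flag, False):
            return recommendation
    # Assumption: no fallback is defined when every answer is 'no'.
    return None


# Example: a team with large codebases that also wants fast onboarding.
print(recommend({"needs_large_context": True, "prefers_minimal_setup": True}))
```

Because the first match wins, the order of the steps acts as a priority ranking: a team that needs full data control is routed to local models before any later question is considered.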

Summary takeaways

How to choose an AI coding model:

- Need control → local or open-source models

- Need speed → GPT-4o or Claude

- Need compliance → JetBrains AI Assistant

- Focus on collaboration → IDE-integrated tools

Align your tool choice with your team’s priorities.

Now that you know how to choose the right AI coding model, the next section answers the most common developer questions about AI coding tools.

FAQ

Q: Which AI model is most popular among developers in 2025?

A: GPT-4o, Claude 3.7 Sonnet, and Gemini 2.5 Pro are the most frequently used AI models for coding tasks, according to the JetBrains Developer Ecosystem Report 2025.

Q: Are there free or affordable AI models for coding?

A: Yes. DeepSeek R1 and open-source models like Qwen or StarCoder provide cost-efficient options for developers exploring AI assistance.

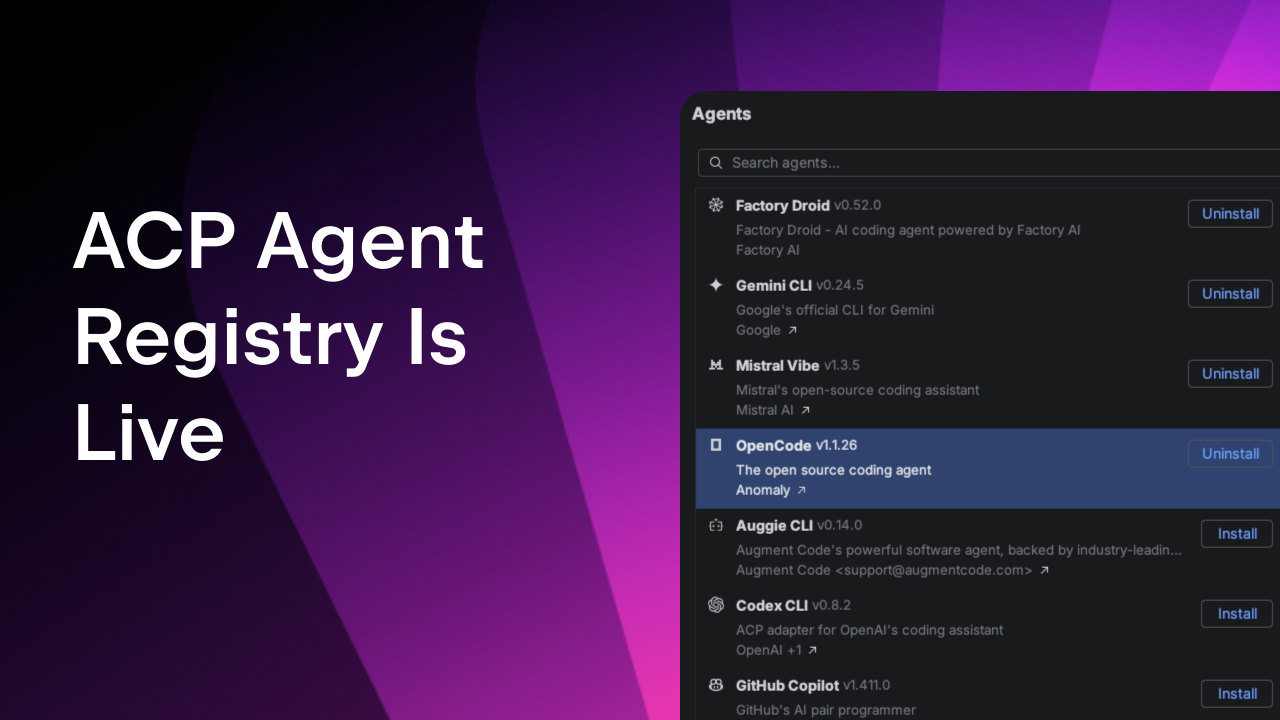

Q: Which AI coding tools integrate best with JetBrains IDEs?

A: JetBrains AI Assistant integrates multiple LLMs, including GPT and Claude models, directly into IDE workflows for real-time suggestions and contextual understanding.

Q: Is it safe to use AI coding tools for proprietary projects?

A: Yes, provided you use tools with strong data privacy policies or local execution options. Many teams adopt private or on-premises models to retain full control of source code.

Q: What’s the difference between cloud and local AI models?

A: Cloud models offer convenience and scalability, while local or self-hosted models provide greater data control and compliance for enterprise use.

Q: Which AI model is best for enterprise environments?

A: Enterprise-ready tools like JetBrains AI Assistant, Claude for Teams, and Harness provide features such as RBAC, audit logs, and SSO for secure governance.

Q: How widely are AI tools adopted among developers?

A: According to data from the JetBrains Developer Ecosystem Report 2025, more than two-thirds of professional developers use some form of AI coding assistance, reflecting strong industry-wide adoption.

The next section will summarize the key insights and encourage readers to explore JetBrains AI tools for their own development workflows.

Conclusion

AI coding models have moved from experimentation to everyday development practice. Developers now rely on AI assistants to write, review, and understand code at scale. Models like GPT-4o, Claude, Gemini, and DeepSeek lead the field, while open-source and local options continue to gain traction for privacy and customization.

Insights from the JetBrains Developer Ecosystem Report 2025 show that there is no single best AI model for coding. The right choice depends on workflow, team size, and governance requirements. As AI-assisted development evolves, improvements in reasoning, context length, and IDE integration will further shape how developers build software with AI’s help.

To experience these capabilities firsthand, start exploring AI-powered development today. Learn more about JetBrains AI Assistant, and see how it can enhance your development workflow.
