CI/CD and Serverless Computing: Best Practices for Microservices

This article was brought to you by Mdu Sibisi, freelance writer, draft.dev.

Cloud technology has reshaped how developers manage and deliver software. For example, “serverless computing” allows a provider to dynamically manage the allocation and provisioning of servers for you, which makes it ideal for running microservices.

When paired with CI/CD practices, serverless computing can help shorten development cycles, reduce the incidence of errors, and increase the scalability of pipelines.

However, it does present some unique challenges, such as achieving comprehensive visibility, establishing secure and compliant interservice communication, and managing deployment and versioning. Many of these obstacles can be overcome using a tool like JetBrains TeamCity to integrate CI/CD with serverless computing.

This guide explores the best practices for microservice management through CI/CD integration on serverless computing and how TeamCity can simplify the process.

Also on the topic: Building and Deploying Microservices With Spring Boot and TeamCity

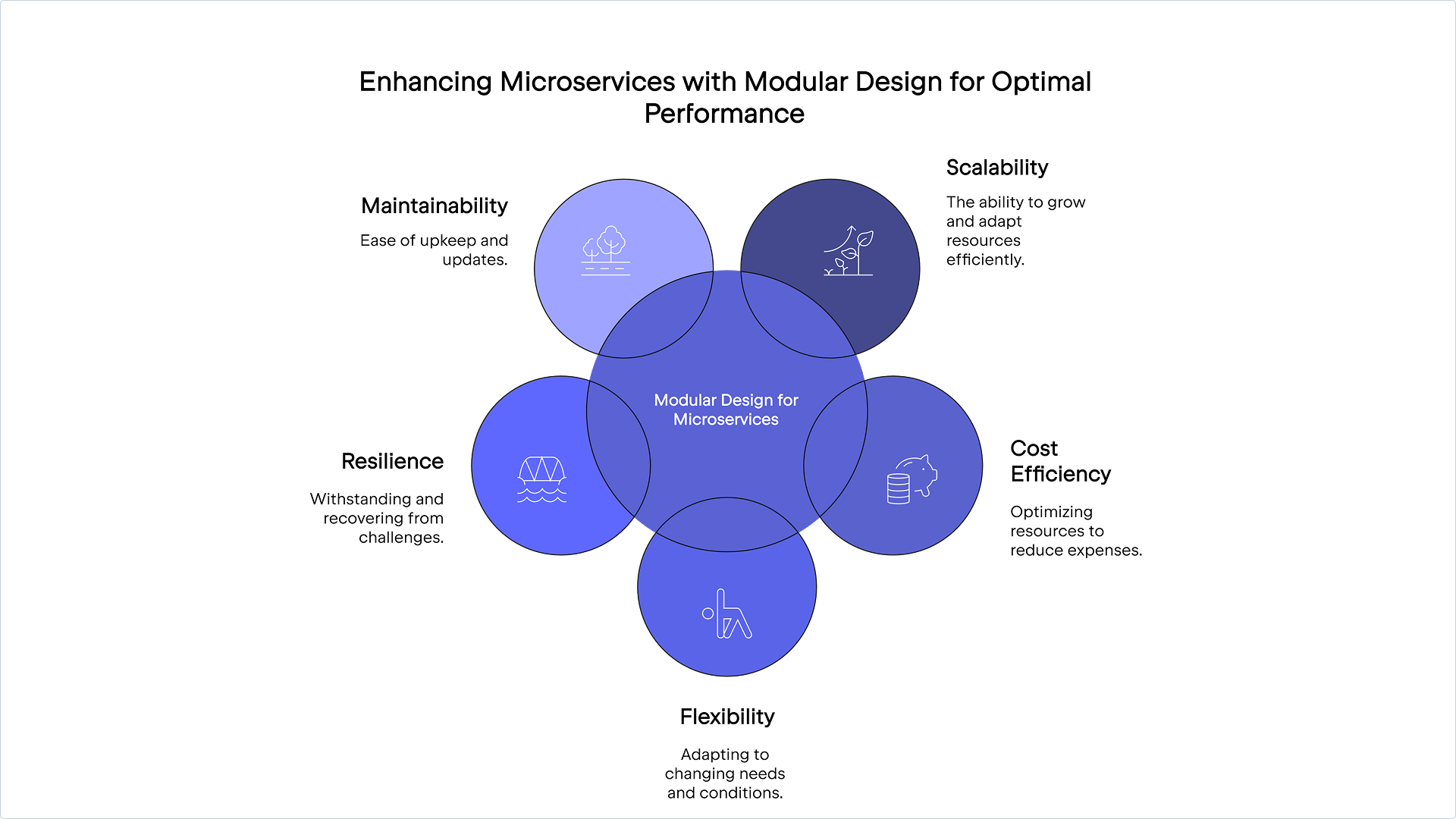

Modular design for microservices

When building microservices for serverless architecture, you should adopt a modular design to optimize compatibility with CI/CD pipelines. While alternatives like monolithic architecture, service-oriented architecture (SOA), and micro-frontend architecture each have their merits, they often introduce complexity and overhead. Modular design, on the other hand, allows you to create flexible, efficient microservices that align with serverless computing.

Modular design allows you to break an application down into smaller, independent components or microservices. A good example is how streaming services use dedicated modules or microservices for each major component, including user authentication, content management, recommendation systems, and billing.

This approach improves each component’s scalability, cost efficiency, flexibility, resilience, and maintainability.

Single responsibility principle (SRP)

Regardless of the use case, it’s crucial that your microservices align with the single responsibility principle (SRP), which states that each microservice should have a clearly defined purpose or responsibility that focuses on a specific business or usage function. This makes them easier to manage, debug, and troubleshoot.

High cohesion

To effectively implement SRP, microservices should be highly cohesive, with components closely related and working together. This improves maintainability, reduces complexity, and allows for focused testing, as each module can be tested in isolation.

Loose coupling

Loose coupling, or decoupling, means that changes in one microservice should not significantly affect another. It allows each service to be developed, deployed, and scaled independently, which is otherwise a common challenge when running microservices on serverless architecture. Updates to one module can be deployed without taking down the entire application, reducing downtime and improving availability.

Decoupling also simplifies dependency mocking and stubbing, so you can thoroughly test each module’s functionality without relying on other services.

API-first design

To enhance cohesion and reduce coupling, adopt an API-first approach to microservice design. This involves creating a well-defined API before developing other components, which should provide consistent communication, smooth interoperability, and simplified integration. It also streamlines documentation and monitoring.
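As a rough illustration of the API-first idea, the sketch below writes the contract down before any real service exists. Everything in it is hypothetical: the `BillingApi` contract, the `ChargeRequest` and `ChargeResponse` types, and the in-memory stand-in are invented for this example and are not part of any TeamCity or cloud provider API.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class ChargeRequest:
    user_id: str
    amount_cents: int


@dataclass(frozen=True)
class ChargeResponse:
    charge_id: str
    status: str  # "succeeded" or "declined"


class BillingApi(Protocol):
    """The contract, agreed on before any implementation is written."""

    def charge(self, request: ChargeRequest) -> ChargeResponse: ...


class InMemoryBilling:
    """Hypothetical stand-in that satisfies the contract during development."""

    def __init__(self) -> None:
        self._count = 0

    def charge(self, request: ChargeRequest) -> ChargeResponse:
        self._count += 1
        status = "succeeded" if request.amount_cents > 0 else "declined"
        return ChargeResponse(charge_id=f"ch_{self._count}", status=status)


def renew_subscription(billing: BillingApi, user_id: str) -> bool:
    """A consumer that depends only on the contract, not on a concrete service."""
    response = billing.charge(ChargeRequest(user_id=user_id, amount_cents=999))
    return response.status == "succeeded"
```

Because `renew_subscription` only knows about `BillingApi`, the real billing service can be built, replaced, or stubbed without touching its consumers, which is the coupling benefit the API-first approach is after.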

Automating builds and deployments

Automated pipelines make it easier to handle multiple microservices. You can use them to manage the build and deployment of multiple microservices simultaneously. These pipelines can also scale in response to increased demand, helping build and deployment processes remain efficient even as the number of microservices grows.

While you can write scripts and develop your own background services to manually build your pipelines, it would be far easier and more efficient to employ a tool like TeamCity, which provides a flexible, all-in-one solution to build, test, and automate deployment.

It offers multiple configuration options (most notably configuration as code) and templating. Alternatively, you can use one of TeamCity’s SaaS implementations for a web-based wizard that allows you to initialize and edit your pipelines visually.

Version control and management

You also need a way to manage versions of serverless functions and microservices to maintain stability, backward compatibility, and smooth deployments. There are two main versioning strategies to consider:

- Semantic versioning is used to indicate major, minor, and patch changes. It makes it easier to identify the impact of changes and manage dependencies.

- API versioning allows you to manage changes in the API contract. You can use URL versioning (such as /v1/resource), header versioning, or query parameter versioning.
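To make the two strategies concrete, here is a minimal sketch that classifies the impact of a version bump and builds a URL-versioned path. The function names are hypothetical, and the parser deliberately handles only plain `MAJOR.MINOR.PATCH` strings, not pre-release or build metadata.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, int, int]:
    """Parse a plain 'MAJOR.MINOR.PATCH' string into a tuple of integers."""
    parts = version.split(".")
    if len(parts) != 3:
        raise ValueError(f"expected MAJOR.MINOR.PATCH, got {version!r}")
    major, minor, patch = (int(part) for part in parts)
    return major, minor, patch


def change_impact(old: str, new: str) -> str:
    """Return 'major', 'minor', or 'patch' for an upgrade from old to new."""
    old_v, new_v = parse_version(old), parse_version(new)
    if new_v <= old_v:
        raise ValueError("new version must be greater than old version")
    if new_v[0] != old_v[0]:
        return "major"  # breaking change: clients may need to migrate
    if new_v[1] != old_v[1]:
        return "minor"  # backward-compatible feature
    return "patch"      # backward-compatible fix


def versioned_path(major: int, resource: str) -> str:
    """Build a URL-versioned path such as /v1/resource."""
    return f"/v{major}/{resource.strip('/')}"
```

A pipeline could use a check like `change_impact` to require extra review or a new `/vN/` route whenever a release is classified as major.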

Each version of your serverless functions and microservices should be accompanied by clear and comprehensive documentation.

This must include API endpoints, request-response formats, and any changes introduced in each version. In addition, it’s important to keep a detailed changelog to track changes, bug fixes, and new features for each version. This helps developers understand the evolution of the service.

It’s good practice to ensure that your microservices are backward compatible. This helps prevent changes from breaking existing clients.

Despite your best efforts, things may still go wrong. So, establishing rollback mechanisms is important. They enable quick recovery from deployment failures by swiftly reverting to a stable version. Additionally, they give teams the confidence to experiment with new features or changes to their microservices while knowing they can easily revert if something goes wrong.
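A rollback mechanism can be sketched as "switch to the new version, check its health, and revert if the check fails". The `ReleaseHistory` class and the health-check callables below are hypothetical and only model the decision logic; a real rollback would call your provider's deployment tooling.

```python
from typing import Callable, List, Optional


class ReleaseHistory:
    """Tracks the live version and the versions that passed their health check."""

    def __init__(self) -> None:
        self.live: Optional[str] = None
        self.stable_versions: List[str] = []

    def deploy(self, version: str, health_check: Callable[[str], bool]) -> bool:
        previous = self.live
        self.live = version  # switch traffic to the new version
        if health_check(version):
            self.stable_versions.append(version)
            return True
        self.live = previous  # roll back to the last known stable version
        return False


history = ReleaseHistory()
history.deploy("1.0.0", lambda version: True)
rolled_out = history.deploy("1.1.0", lambda version: False)
# rolled_out is False and history.live is still "1.0.0"
```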

Testing strategies for serverless microservices

Testing serverless microservices can be extremely challenging because they are ephemeral, event-driven, and distributed. These factors make it difficult to reproduce and debug errors, simulate events accurately, and test interactions between services.

Additionally, maintaining consistent performance, security, and compliance across multiple third-party services adds complexity. However, there are tailored strategies and tools you can adopt to help improve the quality and reliability of serverless microservices.

Unit testing

This type of granular testing focuses on assessing whether individual functions or components perform as expected in isolation. Available frameworks include Jest (JavaScript), pytest (Python), and JUnit (Java). Mocking and stubbing frameworks allow you to simulate external services and dependencies.

For instance, you can stub out external API calls and dependencies to control their behavior during testing. This helps in creating predictable and repeatable test scenarios. In addition, it’s important to write tests that cover the full range of expected inputs, including edge cases and invalid input.
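As a small illustration of stubbing, the tests below replace an external catalog service with a `unittest.mock.Mock`. The handler, its event shape, and the `fetch_titles` method are hypothetical names invented for this sketch; the test functions follow pytest's naming convention but also run as plain function calls.

```python
from unittest.mock import Mock


def recommend_handler(event: dict, catalog_client) -> dict:
    """Hypothetical function handler: returns up to three titles for a user."""
    user_id = event.get("user_id")
    if not user_id:
        return {"statusCode": 400, "body": []}
    titles = catalog_client.fetch_titles(user_id)
    return {"statusCode": 200, "body": titles[:3]}


def test_returns_top_three_titles():
    catalog = Mock()  # stub for the external catalog service
    catalog.fetch_titles.return_value = ["a", "b", "c", "d"]
    result = recommend_handler({"user_id": "u1"}, catalog)
    assert result == {"statusCode": 200, "body": ["a", "b", "c"]}
    catalog.fetch_titles.assert_called_once_with("u1")


def test_rejects_missing_user_id():
    catalog = Mock()
    result = recommend_handler({}, catalog)
    assert result["statusCode"] == 400
    catalog.fetch_titles.assert_not_called()  # invalid input never reaches the dependency
```

Passing the client in as a parameter is a design choice that keeps the handler testable without network access or cloud credentials.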

Integration testing

Integration testing examines the interactions between different microservices and components to check that they work together correctly. Examples of available tools include Postman for API testing or integration testing frameworks like TestNG (Java) and pytest (Python).

Use integration testing to assess the communication between services, including API calls, message queues, and data stores. You can also use it to ensure data consistency and correct handling of edge cases.

End-to-end testing

End-to-end (E2E) testing involves validating the entire application workflow from start to finish to confirm that it meets business requirements. Available tools include Selenium, Cypress, and TestCafe.

You can use these tools to simulate real user scenarios and interactions, which can be crucial in making sure your serverless microservices function as they should. Fundamentally, E2E testing should be used to test the complete workflow, including authentication, data processing, and the user interface.

Simulate serverless environments

In addition to using the above approaches, it’s important to create staging environments that closely mirror your production environments. Once you establish your staging environment, deploy your serverless functions to it. You can further optimize and speed up testing by automating your staging environment integration tests.

Infrastructure as code (IaC)

IaC allows developers to define infrastructure configurations in code, which can be version-controlled and integrated into CI/CD workflows. This includes resources like serverless functions, databases, and networking components.

Notable examples of tools that allow you to define and implement IaC include AWS CloudFormation, Azure Resource Manager (ARM) templates, and Terraform.

The typical workflow for using IaC for your infrastructure is as follows:

- Code commit: Developers commit changes to the IaC configuration files in the version control system.

- CI pipeline: The CI pipeline is triggered, running automated tests to validate the IaC code.

- Approval: Once the tests pass, the changes are reviewed and approved.

- CD pipeline: The CD pipeline is triggered, deploying the serverless infrastructure changes to the staging environment.

- Testing: Automated tests are run in the staging environment to check that the changes work as expected.

- Promotion: If the tests pass, the changes are promoted to the production environment.

- Monitoring: The deployed infrastructure is monitored for performance and health, with automated alerts set up for any issues.
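The gating behavior in the workflow above, where each stage runs only if the previous one succeeded, can be sketched as follows. The stage names and the lambda placeholders are hypothetical; in practice each action would invoke your IaC tool or test suite.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_workflow(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed: List[str] = []
    for name, action in stages:
        if not action():
            break
        completed.append(name)
    return completed


stages: List[Stage] = [
    ("validate", lambda: True),
    ("approve", lambda: True),
    ("deploy-staging", lambda: True),
    ("test-staging", lambda: False),  # simulated failing staging test
    ("promote", lambda: True),        # never reached in this run
]
print(run_workflow(stages))  # ['validate', 'approve', 'deploy-staging']
```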

Manually integrating IaC with CI/CD pipelines can require significant effort and be time-consuming, especially for serverless infrastructure. This is another area where a tailored solution like TeamCity can help.

You can use it to automate builds and deployments to ensure consistent validation and packaging of IaC configurations. With support for AWS CloudFormation and Terraform, TeamCity automates resource and application deployments, enabling efficient and reliable serverless infrastructure management.

Read also: Configuration as Code for TeamCity Using Terraform.

Key challenges in CI/CD for serverless apps

Implementing CI/CD for serverless applications comes with its own set of challenges. The following sections cover some key challenges and how they can be addressed.

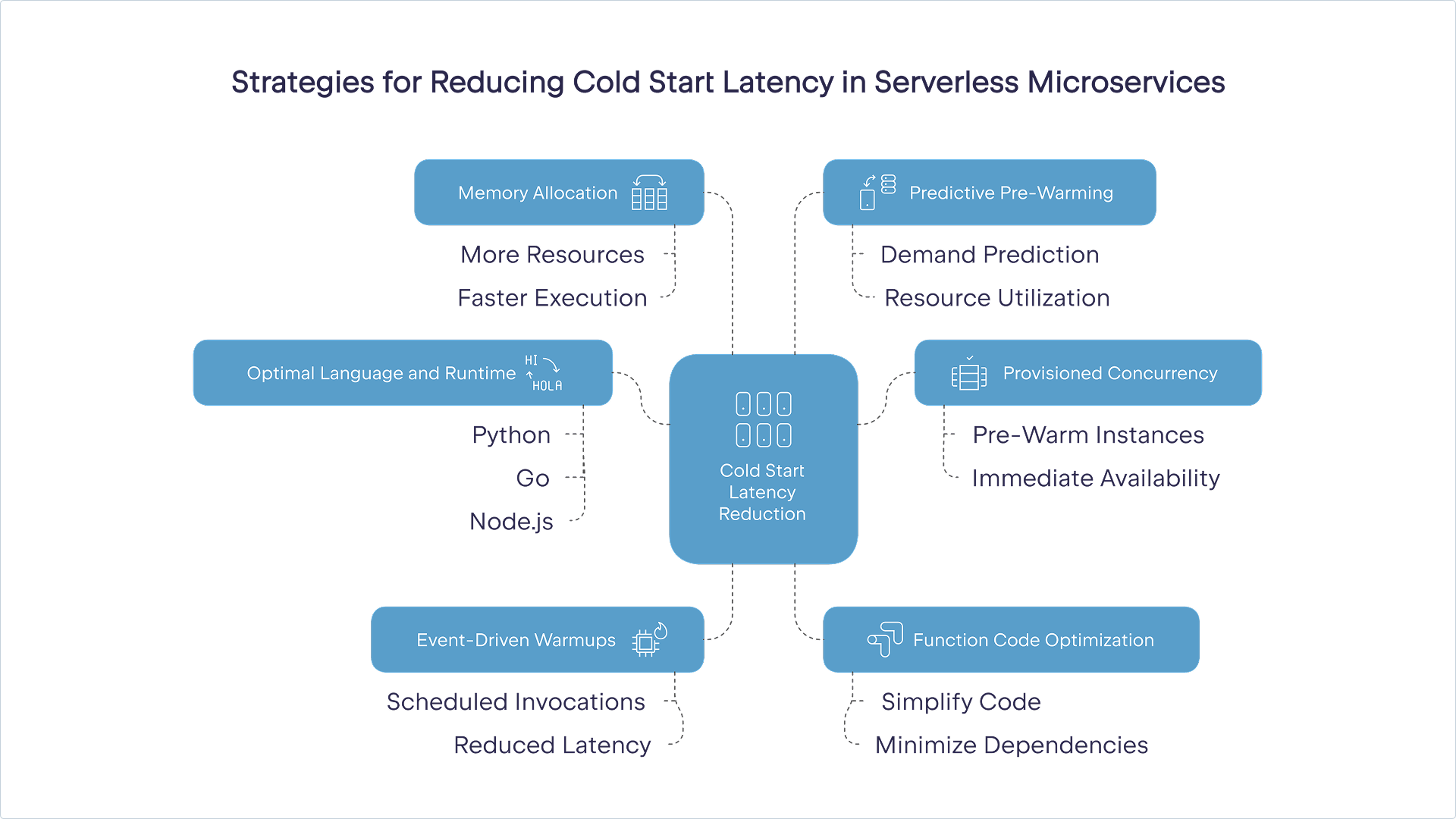

Latency related to cold starts

Serverless functions can experience latency during cold starts, which happen when a function is invoked after a period of idleness. This increased latency, caused by the cloud provider provisioning the necessary resources, can affect the performance and responsiveness of serverless applications, particularly in CI/CD pipelines with rapid and frequent deployments.

Some strategies you can use to address these issues include:

- Using provisioned concurrency: Pre-warm a set number of function instances so they are always ready to handle requests without delay.

- Preparing event-driven warmups: Use scheduled events to periodically invoke functions, keeping them warm and reducing cold start latency.

- Optimizing function code and dependencies: Simplify function code, minimize dependencies, and use lightweight frameworks to reduce initialization time. For instance, remove unnecessary libraries and optimize code for faster startup times.

- Choosing an optimal language and runtime: Select programming languages and runtimes with faster cold start times. Languages like Python, Go, and Node.js typically have shorter cold start times compared to Java or .NET.

- Increasing memory allocation: Allocating more memory to functions can reduce initialization time, as more resources are available for execution.

- Implementing predictive pre-warming: You could implement schedulers that determine the optimal number of instances to pre-warm based on predicted demand. This helps maintain a balance between resource utilization and latency reduction.

- Using pre-warmed containers: Containers can be pre-warmed and kept running, reducing the cold start latency compared to traditional serverless functions. You can use AWS Fargate, Azure Container Instances (ACI), and Kubernetes with serverless frameworks to integrate containers with serverless architecture.

These strategies can minimize the impact of cold starts in serverless applications, leading to better performance and responsiveness in your CI/CD pipelines.
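Two of the strategies above, event-driven warm-ups and keeping initialization cheap, can be sketched in a single handler. The `{"warmup": true}` event shape is an assumed convention for this example, not a provider-defined format, and `_CONFIG` stands in for expensive setup such as creating clients.

```python
import time

# Module-level work runs once per cold start and is reused by warm invocations.
_STARTED_AT = time.monotonic()
_CONFIG = {"table": "orders"}  # hypothetical stand-in for expensive setup


def handler(event: dict, context: object = None) -> dict:
    # Assumed convention: the scheduled warm-up event carries {"warmup": true}.
    if event.get("warmup"):
        return {"warmed": True}  # return early and skip the real work
    return {
        "warmed": False,
        "table": _CONFIG["table"],
        "instance_age_s": round(time.monotonic() - _STARTED_AT, 3),
    }
```

A scheduled rule that sends the warm-up event every few minutes keeps an instance alive, while real requests reuse the state that was initialized at module load.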

Dependency management

Managing dependencies for each microservice can be complex, especially when different services require different versions of the same library. Dependency management tools like npm (Node.js), pip (Python), and Maven (Java) can be used to give each microservice its own isolated environment to avoid conflicts.

Serverless functions often have deployment package size limits, which can be exceeded by large dependencies, causing deployment failures. To avoid this, optimize dependencies by including only essential libraries. Tools like webpack and Rollup can bundle and minify code, effectively reducing package size.
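A pipeline can catch oversized packages before deployment with a simple size check. This is a minimal sketch: the 50 MB figure is an assumed limit for illustration only, so check your provider's current quota, and the function names are hypothetical.

```python
import os

# Assumed limit for illustration; real limits vary by provider and upload method.
MAX_PACKAGE_BYTES = 50 * 1024 * 1024


def directory_size(path: str) -> int:
    """Return the total size in bytes of all files under path."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            total += os.path.getsize(os.path.join(root, name))
    return total


def check_package(path: str, limit: int = MAX_PACKAGE_BYTES) -> bool:
    """Return True if the package directory fits within the size limit."""
    return directory_size(path) <= limit
```

Running a check like this as an early build step fails the build with a clear cause instead of surfacing the problem as a deployment error later.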

Dependencies can also introduce security vulnerabilities if not properly managed and updated. It’s important to regularly scan dependencies for vulnerabilities using tools like Snyk or OWASP Dependency-Check. Keep dependencies updated and apply security patches promptly to mitigate potential threats.

Environmental parity is another challenge you’re likely to run into. Ensuring that dependencies are consistent across development, staging, and production environments can be difficult.

You can use IaC to define and manage environments consistently. You can also use containerization to create a consistent runtime environment.

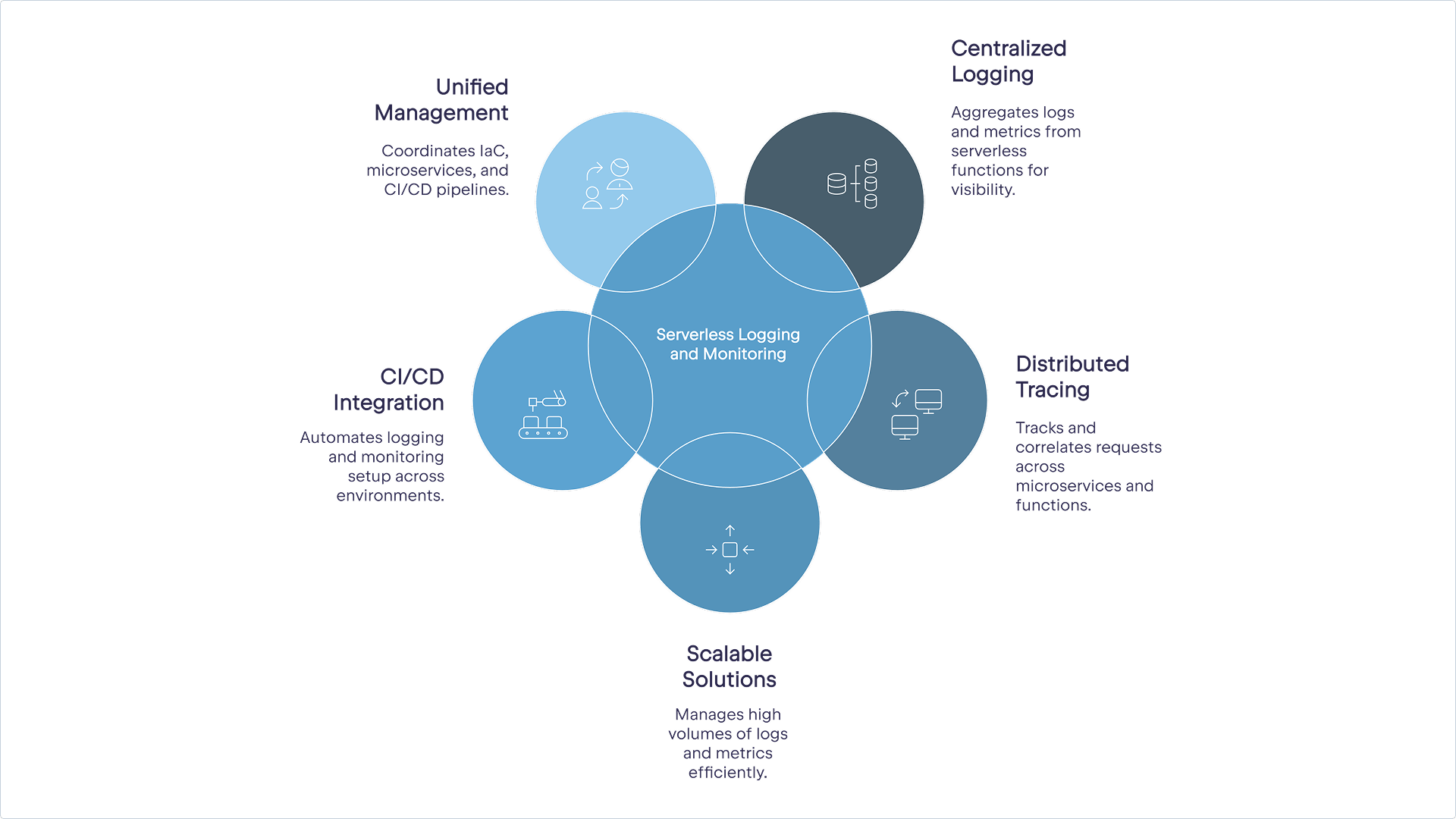

Observability and monitoring

Logging and monitoring are critical components of serverless architectures. They provide visibility into the performance, health, and behavior of serverless applications, enabling developers to maintain reliability, security, and efficiency. However, there are some challenges associated with logging and monitoring in serverless architecture.

For instance, because serverless function instances are short-lived and are created and destroyed on demand, it can be difficult for observability and logging tools to capture and retain logs and metrics. Centralized logging solutions like Amazon CloudWatch, Azure Monitor, and Google Cloud’s operations suite can aggregate logs and metrics from all functions.

Serverless applications often consist of numerous microservices and functions, making it challenging to track and correlate logs across different components. You can address these shortcomings by implementing distributed tracing tools like AWS X-Ray, Azure Application Insights, or Google Cloud Trace to trace requests across multiple services and functions.
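Independently of any particular tracing product, the underlying idea of correlating logs across services can be sketched with structured log lines that share an identifier. The field names and the `X-Correlation-Id` header below are assumed conventions for this example, not the API of AWS X-Ray, Application Insights, or Cloud Trace.

```python
import json
import uuid


def new_correlation_id() -> str:
    """Create an identifier at the edge of the system, once per request."""
    return uuid.uuid4().hex


def log_line(service: str, message: str, correlation_id: str, **fields) -> str:
    """Format one structured (JSON) log line that carries the correlation ID."""
    record = {
        "service": service,
        "message": message,
        "correlation_id": correlation_id,
        **fields,
    }
    return json.dumps(record, sort_keys=True)


def outgoing_headers(correlation_id: str) -> dict:
    """Headers to attach when calling the next service (assumed header name)."""
    return {"X-Correlation-Id": correlation_id}


request_id = new_correlation_id()
print(log_line("auth", "token verified", request_id))
print(log_line("billing", "charge created", request_id, amount_cents=999))
```

Because both lines carry the same `correlation_id`, a centralized logging tool can filter on that field to reconstruct the path of a single request.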

As serverless applications can scale rapidly, they generate a large volume of logs and metrics that can be difficult to manage and analyze. As such, administrators must use scalable logging and monitoring solutions that can handle high volumes of data. Implement log retention policies and use log aggregation tools to manage and analyze logs efficiently.

You can use CI/CD to feed data to monitoring systems. However, this can be challenging, especially when dealing with multiple environments and stages. It’s best to automate the setup and configuration of logging and monitoring as part of the CI/CD pipeline. IaC supports consistent configuration across environments.

It can be daunting to get all these moving parts and configurations to work together smoothly. In such instances, it’s always a good idea to use a single unifying tool to handle your IaC, microservice, and CI/CD pipeline management.

How TeamCity supports CI/CD for serverless microservices

As we already mentioned, TeamCity is a powerful CI/CD tool that can significantly streamline the process of managing serverless applications. Let’s look at a few ways it can help.

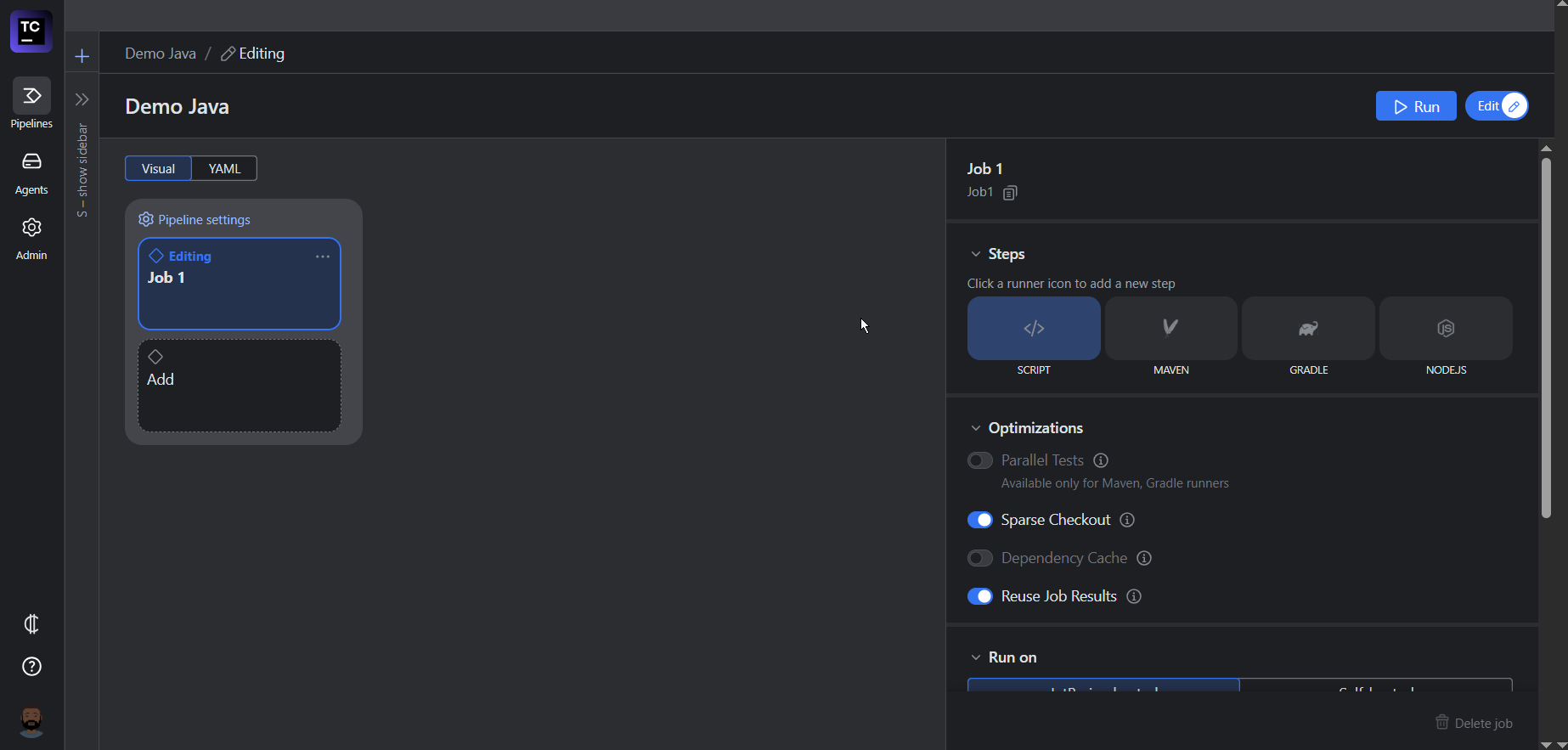

Pipeline configuration made simple

TeamCity’s visual editor provides an intuitive, drag-and-drop interface for configuring CI/CD pipelines. Changes made in the visual editor are instantly reflected in the YAML editor and vice versa.

Along with the visual editor’s smart suggestions, the open terminal allows for easier troubleshooting and debugging. You can also import existing YAML files from your repositories to make creating your pipeline easier.

TeamCity also offers robust support for IaC tools and deployment triggers. It integrates with AWS CloudFormation, Terraform, and the Serverless Framework. In addition to this, TeamCity offers a large variety of build triggers, including version control system (VCS), schedule, and dependency triggers.

The basic YAML configuration for a VCS trigger in TeamCity typically follows this structure:

    version: 2021.2
    projects:
      - name: MyProject
        id: MyProject
        buildTypes:
          - name: BuildAndDeploy
            id: BuildAndDeploy
            vcs:
              - id: MyVcsRoot
                name: MyVcsRoot
                url: https://github.com/my-repo.git
                branch: refs/heads/main
            steps:
              - name: Build
                type: gradle-runner
                script: build
              - name: Deploy
                type: gradle-runner
                script: deploy
            triggers:
              - vcsTrigger:
                  id: VcsTrigger
                  branchFilter: +:refs/heads/main
                  quietPeriodMode: USE_DEFAULT

The vcs section defines the version control settings, including the repository URL and the branch to monitor. The steps section defines the build and deployment steps using Gradle. The triggers section defines a VCS trigger that initiates the build and deployment process whenever there is a commit to the main branch.

Testing and feedback integration

TeamCity offers support for a variety of testing frameworks. This includes common unit testing, integration testing, and E2E testing frameworks. TeamCity can run these tests in cloud-based environments, ensuring your serverless functions are tested in conditions that closely resemble production.

Additionally, TeamCity allows you to run tests in parallel, which can be especially useful for large projects with extensive test coverage. The platform’s real-time notifications inform you of your build or test status through channels like email, Slack, and webhooks.

Flexibility and scalability

TeamCity’s distributed build agents allow it to facilitate flexible and scalable infrastructure and workflows. For instance, you can configure elastic build agents that can be dynamically provisioned and de-provisioned based on workload. This allows the system to scale up to handle peak loads and scale down during off-peak times, optimizing resource usage and cost.

By using multiple build agents, the platform can make sure that the failure of a single agent does not disrupt the entire CI/CD pipeline. Other agents can take over the tasks, maintaining the continuity of the build process. TeamCity can automatically detect and recover from agent failures, restarting builds on available agents and minimizing downtime.

But what does a typical deployment look like in TeamCity? What makes it different from setting up or creating your own system?

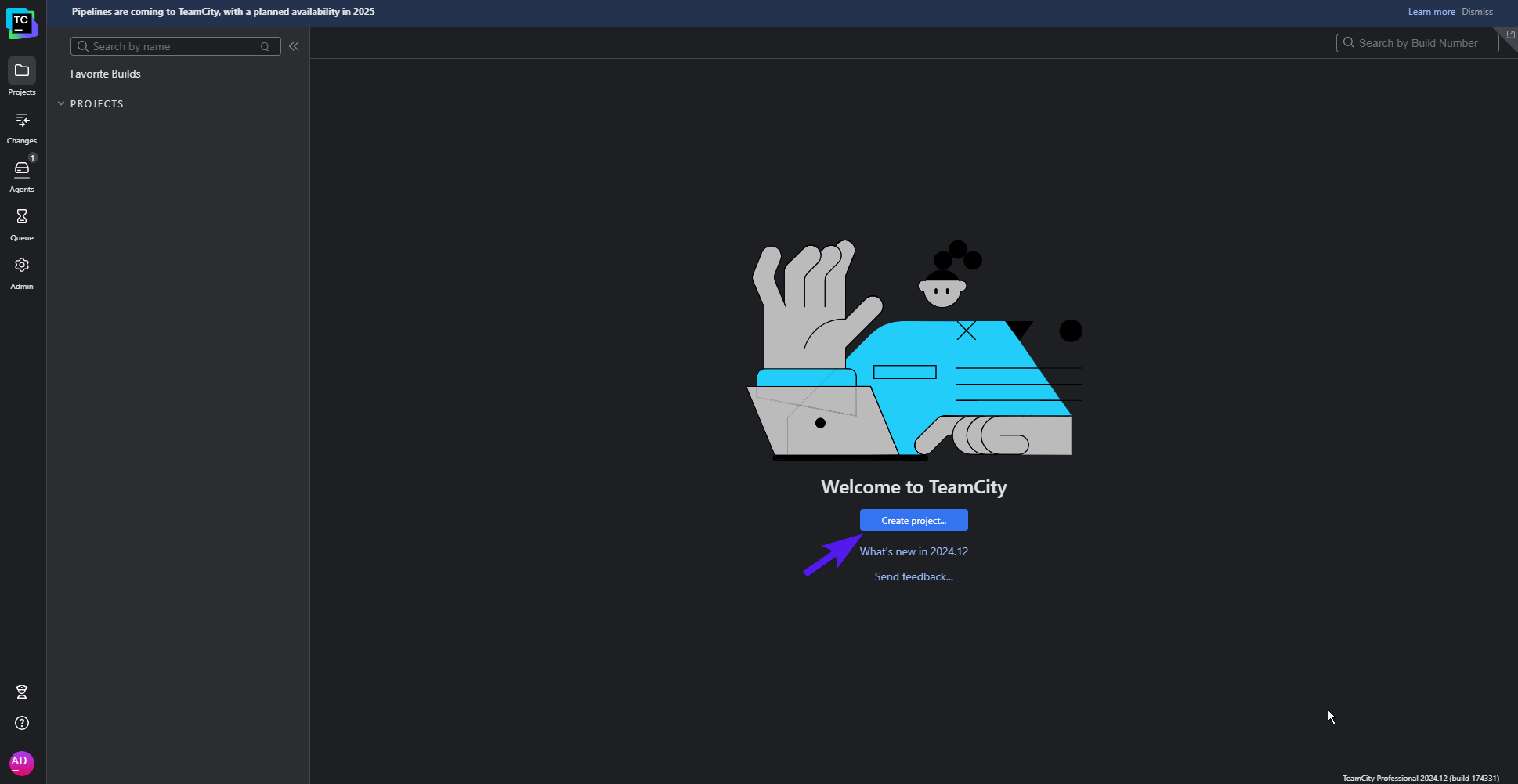

Deploying a serverless microservice

Developing your application using microservices already requires a lot of cognitive overhead. Setting up a system to deploy it on serverless architecture shouldn’t be as energy-consuming.

TeamCity is relatively easy to set up. You can build, test, and deploy a serverless application using the following steps:

- In TeamCity, create a new project for your serverless application.

- Connect your project to a VCS to track your code changes.

- Add build steps to compile your serverless application. For example, if you’re using the Serverless Framework, you might add a step to run serverless package.

- Ensure that all dependencies are installed. For Node.js applications, you might add a step to run npm install.

- Add build steps to run unit tests using your preferred testing framework (such as Jest, Mocha, or pytest).

- Add steps to run integration tests to check that different components of your application work together correctly.

- Add steps to run end-to-end tests to validate the entire application workflow.

- Add build steps to deploy your serverless application. For example, if you’re using the Serverless Framework, you might add a step to run serverless deploy.

- Configure environment variables required for deployment, such as AWS credentials or API keys.

- Configure VCS triggers to automatically start the build and deployment process whenever changes are committed to the repository.

- Monitor the build and deployment process in real time through the TeamCity interface.

- Review detailed test reports to identify and fix any issues.

- Check deployment logs to confirm the application was deployed successfully.
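The command-line portion of the steps above can be sketched as an ordered list of commands that stops at the first failure. This assumes a Node.js project that uses the Serverless Framework and defines a test script for `npm test`; the function names are hypothetical, and in TeamCity each command would normally be its own build step rather than a single script.

```python
import subprocess
from typing import Callable, List, Sequence

Command = List[str]


def pipeline_commands(stage: str) -> List[Command]:
    """Commands for install, test, package, and deploy, in execution order."""
    return [
        ["npm", "install"],
        ["npm", "test"],
        ["serverless", "package", "--stage", stage],
        ["serverless", "deploy", "--stage", stage],
    ]


def default_runner(command: Command) -> int:
    """Run a command and return its exit code."""
    return subprocess.run(command, check=False).returncode


def run_pipeline(
    commands: Sequence[Command],
    runner: Callable[[Command], int] = default_runner,
) -> bool:
    """Run commands in order; return False as soon as one exits non-zero."""
    for command in commands:
        if runner(command) != 0:
            return False
    return True
```

Injecting the runner makes the ordering logic testable without npm or the Serverless Framework installed, for example with `run_pipeline(pipeline_commands("staging"), lambda command: 0)`.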

Emerging trends in CI/CD for serverless microservices

Advancements in CI/CD for serverless microservices are shaping the future of software development. Two key emerging trends are event-driven pipelines and AI-assisted optimization.

Event-driven CI/CD pipelines enhance the efficiency and responsiveness of the software development lifecycle. These pipelines react to specific events, such as code changes, feature requests, or system alerts.

For instance, triggers can come in the form of HTTP requests made to specific endpoints. In cases where an external system or service needs to initiate a build or deployment, it can send a request to the CI/CD pipeline’s API endpoint. TeamCity is well equipped to manage event-driven workflows, enhancing the automation and responsiveness of CI/CD pipelines.
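As a hedged sketch of such an HTTP trigger, the snippet below builds a request that asks a TeamCity server to queue a build. It assumes the TeamCity REST API's build queue endpoint (`/app/rest/buildQueue`) with token-based authentication; verify the path and payload against your server's documentation. The server URL, build configuration ID, and token are placeholders, and the request is only constructed here, not sent.

```python
import json
import urllib.request


def build_trigger_request(
    server_url: str, build_type_id: str, token: str
) -> urllib.request.Request:
    """Build (but do not send) a POST request that queues a build."""
    payload = json.dumps({"buildType": {"id": build_type_id}}).encode("utf-8")
    return urllib.request.Request(
        url=f"{server_url.rstrip('/')}/app/rest/buildQueue",
        data=payload,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
    )


# Placeholder values; an external system would send this with urllib.request.urlopen.
request = build_trigger_request(
    "https://teamcity.example.com/", "MyProject_BuildAndDeploy", "<access-token>"
)
```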

AI is also revolutionizing CI/CD pipelines by introducing advanced optimization techniques that enhance efficiency, reliability, and speed. In predictive build optimization, for example, AI algorithms analyze historical build data to predict the likelihood of build failures. Used appropriately, these predictions can improve overall resource utilization.

In addition to the above, AI can make software testing more robust and reliable. TeamCity can be integrated with AI-powered tools that can analyze code quality and suggest improvements. One example of such a tool is SonarQube, which can perform static code analysis and provide code improvement suggestions through its AI Code Fix tool.

Conclusion

Aligning CI/CD practices with serverless computing can help you optimize microservice deployment. However, it does present some unique challenges, which can be overcome by following the best practices highlighted in this guide. Tools like TeamCity make it far easier and more manageable to implement these strategies and practices.

The platform offers 14-day trials for its SaaS implementations and a 30-day trial for its On-Premises Enterprise edition. Once you’ve decided on an implementation, learn how to configure your serverless CI/CD pipeline using configuration as code through Terraform or learn how to integrate it with Kubernetes.

TeamCity is a flexible solution that makes configuring and managing CI/CD in serverless environments easier.
