Upsource

Code Review and Project Analytics

Code Review for Design Evolution

In the last post we covered the Gateway review, a process where the code review is performed after all the code is written, with the goal of approving the suggested changes.

If getting the design right is important to your team, a collaborative and iterative approach may be more appropriate. The code is checked frequently while it’s being built, so design feedback arrives while there is still time to act on it.

In this example, I’m going to assume the team works using feature branches. As with anything, this approach has its supporters and detractors, but it’s a reasonable model to showcase iterative code reviews.

The Process

In our example, I’m the developer working on a bug fix or feature. I create a new branch for this fix/feature and sketch out a rough start to the solution.

This could be:

- Implementing the first task of the story

- Prototyping an end-to-end implementation

- Writing automated acceptance tests to document my understanding of the problem and the possible edge cases.

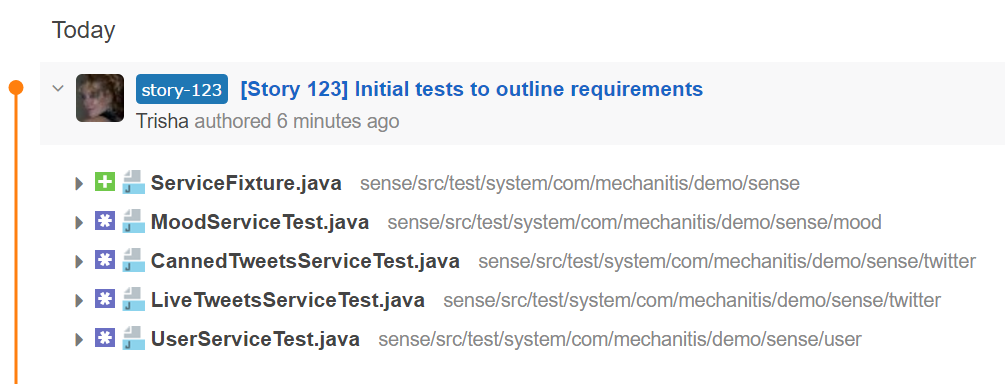

Ideally, this is contained in a single commit. Commits should be small and frequent, so this first step should not be a large amount of work. The commit should still compile and pass the automated checks, which may mean ignoring tests or inserting feature toggles. I commit this initial sketch to my new branch.
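As a rough illustration of keeping an early sketch green, here is a minimal Python sketch. It assumes a simple in-process dictionary of toggles; the names `FEATURE_TOGGLES`, `new_checkout_flow`, `checkout_total` and the discount rule are all hypothetical. The unfinished code path sits behind a toggle that is off by default, and the acceptance test describing the intended behaviour is marked with `unittest.skip`, so the automated checks still pass while the design is under review.

```python
import unittest

# Hypothetical toggle store; real projects often read toggles from config or a toggle service.
FEATURE_TOGGLES = {"new_checkout_flow": False}


def is_enabled(name):
    """Return True if the named feature toggle is switched on."""
    return FEATURE_TOGGLES.get(name, False)


def legacy_total(prices):
    # Existing behaviour that must keep working while the new flow is sketched.
    return sum(prices)


def new_total(prices):
    # Unfinished sketch: the discount rules are still being discussed in the review.
    raise NotImplementedError("discount rules still being designed")


def checkout_total(prices):
    """Route to the new code path only when its toggle is on."""
    if is_enabled("new_checkout_flow"):
        return new_total(prices)
    return legacy_total(prices)


class CheckoutTest(unittest.TestCase):
    def test_legacy_total(self):
        # Passes today, so the commit keeps the build green.
        self.assertEqual(checkout_total([1, 2, 3]), 6)

    @unittest.skip("acceptance test for unfinished discount rules")
    def test_discount_applied(self):
        # Hypothetical expectation (10% off) documenting the author's current understanding.
        self.assertEqual(new_total([10, 10]), 18)
```

A test runner would report the legacy test as passing and the acceptance test as skipped. Removing the skip, or switching the toggle on, is a natural point to push the next commit for review.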

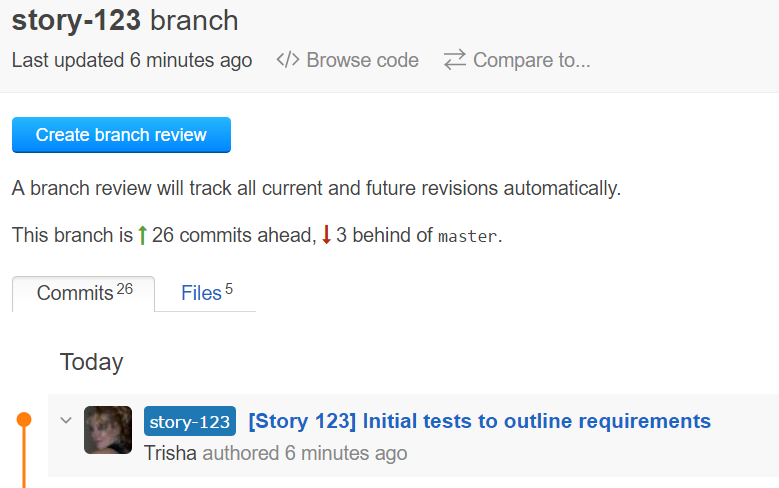

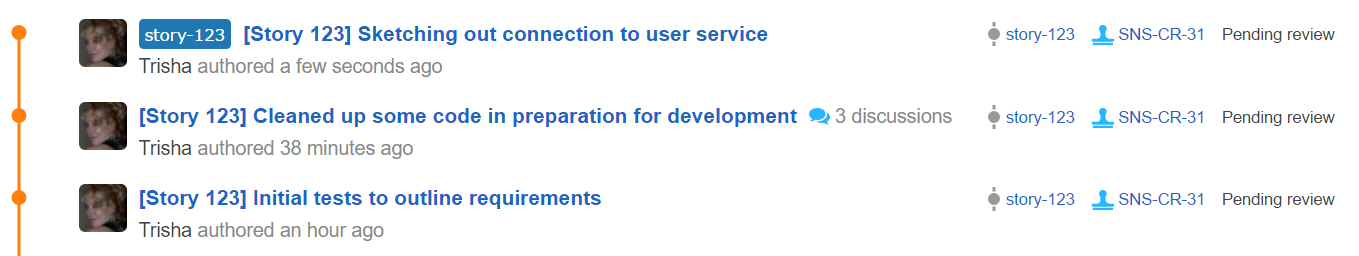

Using Upsource, I create a branch review for the feature branch.

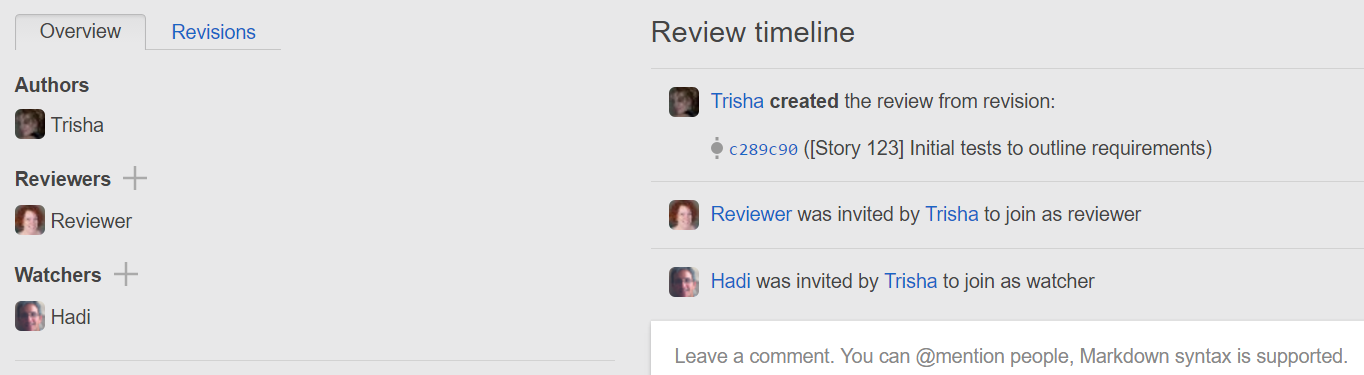

I then invite reviewers. With this type of review, I may want feedback from a number of developers; I’m not simply looking for sign-off. With Upsource, I can add watchers as well as reviewers, and watchers can contribute their comments if they like.

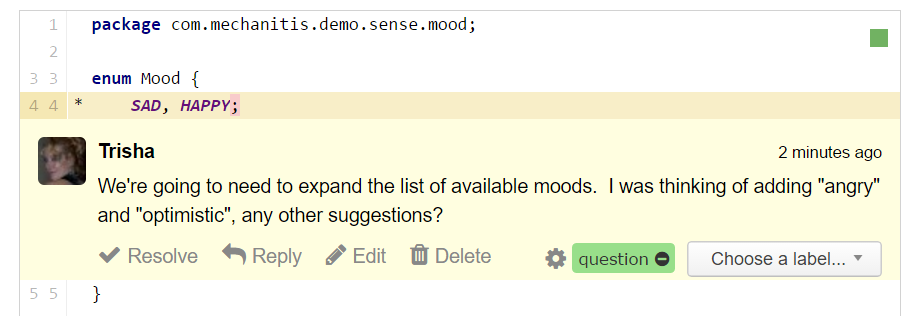

I can use Upsource to make notes on known assumptions, to ask questions, and to explain my choice of approach.

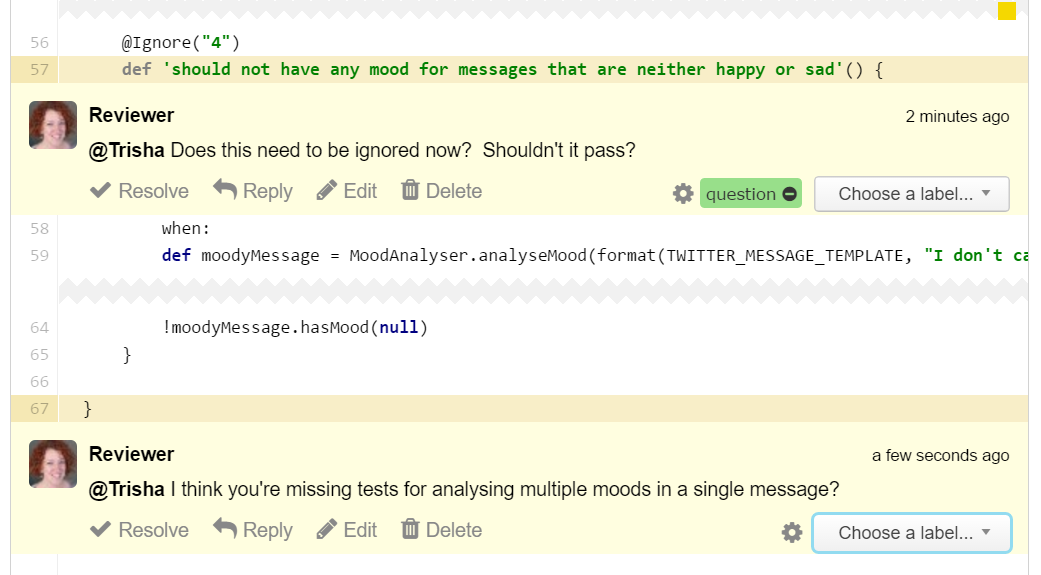

At this stage, reviewers aim to check whether my initial assumptions are correct and complete, and whether I’m heading in the right direction. The first comments are likely to be questions, suggestions, or notes on potential gotchas, rather than critiques of code details that are likely to change.

While this commit is being reviewed, I almost certainly have more ideas to implement and more tests to write, so I’ll probably continue working on this branch even while the initial code is waiting for review.

I should be quick to respond to any questions, as these not only help reviewers understand the problem or code better, but may also help me think about the problem differently. Suggestions from the review are incorporated as I continue implementing.

When I’ve completed the next set of changes, I commit them. The commit doesn’t have to address everything raised in the initial review. This is an iterative approach, and some points may remain open until an answer is found or the relevant task is worked on.

Because I created a branch review, all commits to that branch are automatically added to the review. Reviewers and watchers are notified of the update, and Upsource highlights any changes in bold and lets you select which commits to view.

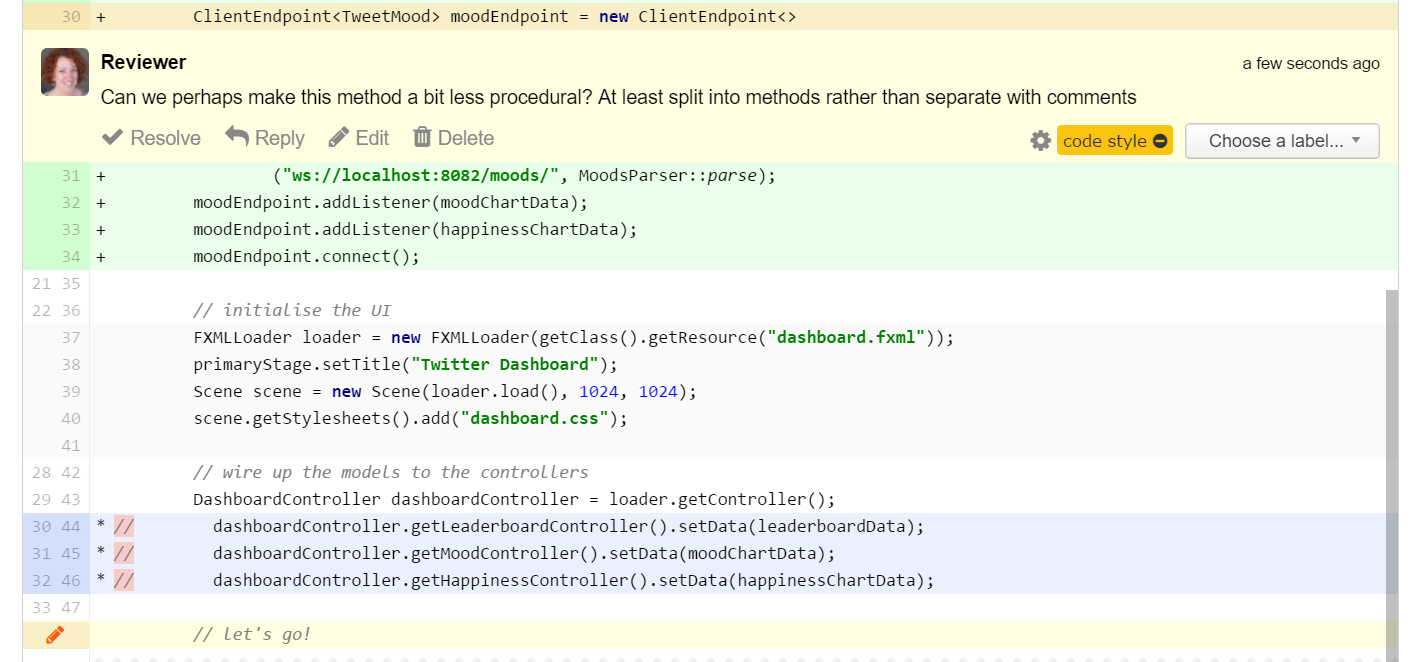

As the code evolves from a sketch into a working implementation, reviewers should be checking: is the new code going into the correct services, modules, or classes? Is the design aligned with the code base and the team’s current practices? Have earlier questions or concerns been answered?

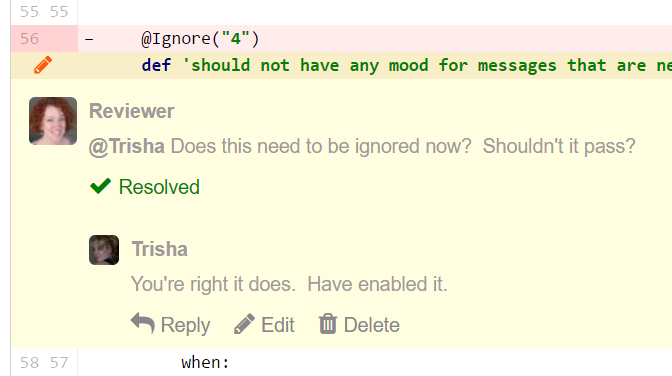

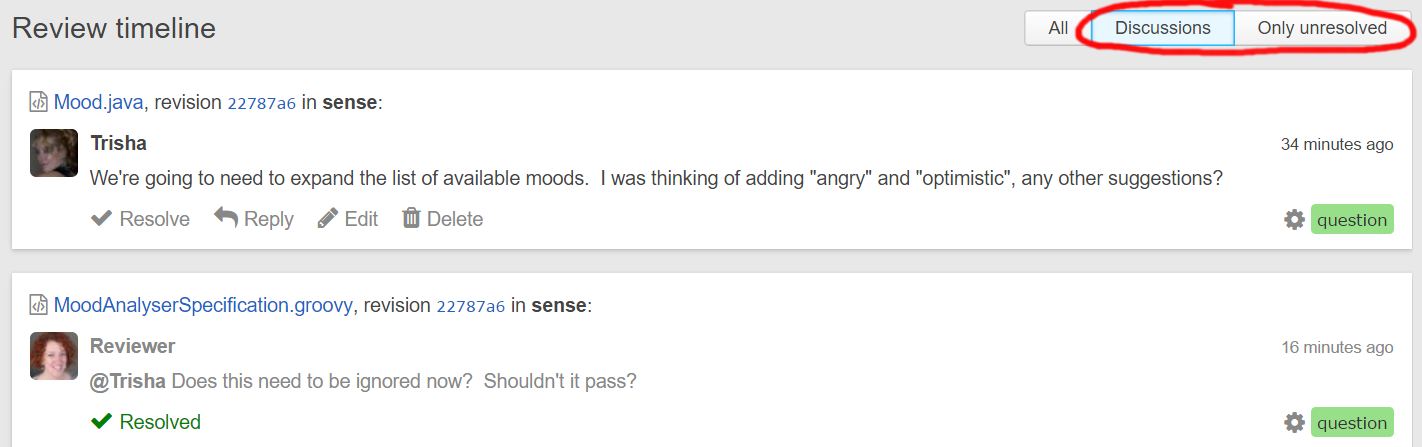

In the last post we showed how labels on comments make it easier for an author to understand the context. Upsource also makes it easy to see which discussions are still active and which have been settled, as authors or reviewers can “Resolve” a discussion.

A resolved discussion is still available, so you can see the history of decisions, but it’s easier to focus on the things that haven’t yet been decided or addressed.

The cycle of soliciting feedback through code review and acting on it repeats until the code is “done”¹. At that point, one or more of the reviewers will accept the code.

Discussion

This process addresses the main problem with the Gateway process: in a Design Evolution review, feedback on the design is given early, so there’s plenty of opportunity to change direction.

In a review like this, the reviewers don’t have to be more senior than the author, as the aim is not simply to say “yes” or “no” to merging the code. You can invite the whole team if you want; everyone is in a good position to ask questions, think of edge cases and make suggestions. The team does need a policy on who has the final say in design decisions, to prevent deadlock, and at least one person who’s responsible for declaring that the fix/feature is “done”¹. Their job is potentially easier than that of a reviewer in a Gateway review, as they can rely on several pairs of eyes to identify potential problems.

These reviews can generate a lot of noise and opinions, and developers on teams that practise them may be taking part in many reviews at once, so there’s a context-switching cost. To reduce it, the team may batch together work in the same area of the code. This can also improve the overall design, since the whole team is collaborating and may identify a design that works for a range of cases. However, if the team uses feature branches, several branches touching the same area at once increases the risk of merge conflicts.

In these iterative reviews, feedback needs to arrive quickly, so that it doesn’t block the developer and so that an approach is critiqued before it’s too late to change it.

Advantages

- Design issues can be caught early and addressed before the code is fully implemented

- Each review iteration contains a small number of changes, so each one should be quick to review

- No pressure to find defects or showstoppers in the code; the goal is to check the overall approach

- Varied opinions from across the team can surface more information and lead to a better design

- If reviews include many developers in the team, everyone learns the whole code base and sees how it’s evolving

- It’s a good approach for learning: you see other people’s thought processes, how developers with different experiences approach problems, and how others spot opportunities for design patterns or architectural approaches

Disadvantages

- The review lasts as long as it takes to implement the feature; it’s not a single pass through a completed piece of work, and reviewers are invited to check every commit added to it

- Developers are invited to many reviews that need their attention, on top of working on their own code

- Too many differing opinions without clear leadership can prevent progress

Anti-patterns

If review feedback is not provided quickly, the developer will either be a) blocked, and may start another piece of work, leading to more work in progress, or b) continuing to code and submit commits, so that all the feedback may arrive at or near the end of the implementation. If feedback is not received and acted upon quickly, the review has the same disadvantages as the Gateway review.

Another failure case is the opposite problem: too many opinions, possibly contradictory feedback, and unproductive arguments all block progress on the fix/feature.

Alternatively, you may find that one of the reviewers has a clear idea of the direction the code should take but cannot communicate it to the author. The review can then turn into ping-pong between the author trying to implement the feedback and the reviewer suggesting further changes.

To overcome these problems:

- Reviewers should give feedback quickly and code authors should act upon the feedback as soon as they can

- Someone needs to have the power to break deadlock. Either the code author can be responsible for deciding what to implement (implying a level of trust in all developers), or the team can allocate a sponsor who can break deadlocks in design discussions.

- Reviewers with clear design ideas can be encouraged to submit code directly to the branch.

- “Done” needs to be defined. In the same way that a Gateway review needs to define what counts as a showstopper and deserves a “no”, a Design Evolution review relies on knowing when the code is ready and deserves a “yes”.

Suitable for

- Projects where getting the design right is key. It may be better to accept the cost of longer, noisier reviews than to add technical debt to the code base

- Teams where shared code ownership is important

- Developers comfortable working with iterative design

- Developers who are comfortable exposing unfinished ideas to their peers

- Teams with a level of trust where all participants feel comfortable leaving feedback

- Teams who have one or more people who can break deadlock on design decisions

- Teams who prefer to coach new/junior team members by collaborating with them on their design and encouraging them to view and critique other people’s code

¹ Someone needs to decide what “done” means. Maybe it’s all tests passing and all “potential bug” comments addressed. Or maybe it’s the testers being happy with the feature and the team being satisfied with the readability of the code. Your definition depends on what the team values: speed to production, correctness, maintainability, and so on. As with the CAP theorem, you can’t have all of them at once, so you have to understand the implications of your preferences.