Upsource

Code Review and Project Analytics

What to look for in a Code Review: Tests

This is part 2 of 6 posts on what to look for in a code review. See other posts from the series.

In the last post we talked about a wide variety of things you could look for in a code review. Now we’ll focus on one area: what to look for in the test code.

This article assumes:

- Your team already writes automated tests for code.

- The tests are regularly run in a Continuous Integration (CI) environment.

- The code under review has been through an automated compile/test process and passed.

In this post we’ll cover some of the things a reviewer could be thinking about when looking at the tests in a code review.

Are there tests for this new/amended code?

It would be rare that new code, whether a bug fix or new feature, wouldn’t need a new or updated test to cover it. Even changes for “non-functional” reasons like performance can frequently be proved via a test. If there are no tests included in the code review, as a reviewer the first question you should ask is “why not?”.

Do the tests at least cover confusing or complicated sections of code?

One step beyond simply “is there a test?”, is to answer the question “is the important code actually covered by at least one test?“.

Checking test coverage is certainly something we should be automating. But we can do more than just check for specific percentages in our coverage, we can use coverage tools to ensure the correct areas of code are covered.

You can check the coverage report for the new lines of code (which should be easy to identify, especially if you’re using a tool like Upsource) to make sure it’s adequately covered.

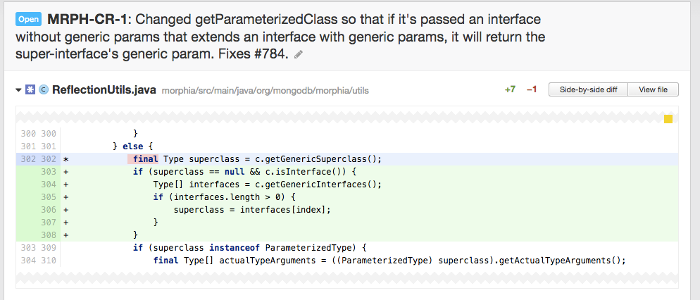

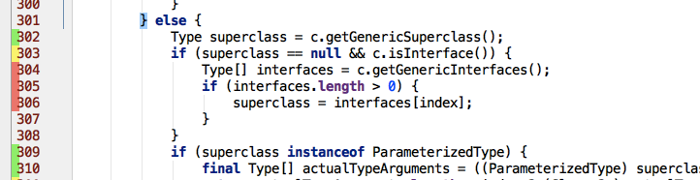

In this example above, the reviewer may ask the author to add a test to cover the case where the if on line 303 evaluates to true, as the coverage tool has marked lines 304-306 with red to show they aren’t tested.

100% test coverage is an unrealistic goal for pretty much any team, so the numbers coming out of your coverage tool might not be as valuable as insights into which specific areas are covered.

In particular, you want to check that all logic branches are covered, and that complex areas of code are covered.

Can I understand the tests?

Having tests that provide adequate coverage is one thing, but if I, as a human, can’t understand the tests, they have limited use – what happens when they break? It’ll be hard to know how to fix them.

Consider the following:

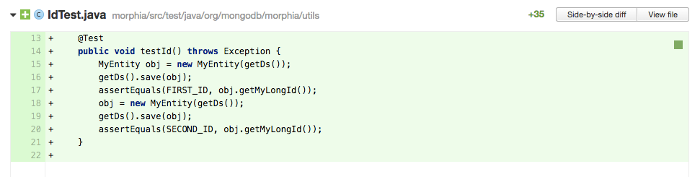

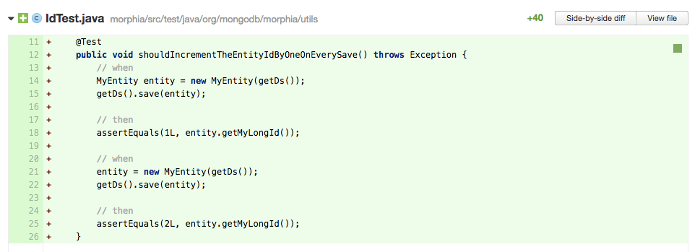

It’s a fairly simple test, but I’m not entirely sure what it’s testing. Is it testing the save method? Or getMyLongId? And why does it need to do the same thing twice?

The intent behind the test might be clearer as:

The specific steps you take in order to clarify a test’s purpose will depend upon your language, libraries, team and personal preferences. This example demonstrates that by choosing clearer names, inlining constants and even adding comments, an author can make a test more readable by developers other than him- or herself.

Do the tests match the requirements?

Here’s an area that really requires human expertise. Whether the requirements being met by the code under review are encoded in some formal document, on a piece of card in a user story, or contained in a bug raised by a user, the code being reviewed should relate to some initial requirement.

The reviewer should locate the original requirements and see if:

- The tests, whether they’re unit, end-to-end, or something else, match the requirements. For example, if the requirements are “should allow the special characters ‘#’, ‘!’ and ‘&’ in the password field”, there should be a test using these values in the password field. If the test uses different special characters then it’s not proving the code meets the criteria.

- The tests cover all the criteria mentioned. In our example of special characters, the requirements might go on to say “…and give the user an error message if other special characters are used”. Here, the reviewer should be checking there’s a test for what happens when an invalid character is used.

Can I think of cases that are not covered by the existing tests?

Often our requirements aren’t clearly specified. Under these circumstances, the reviewer should think of edge cases that weren’t covered in the original bug/issue/story.

If our new features is, for example, “Give the user the ability to log on to the system”, the reviewer could be thinking “What happens if the user enters null for the username?”, or “What sort of error occurs if the user doesn’t exist in the system?”. If these tests exist in the code being reviewed, then the reviewer has increased confidence that the code itself handles these circumstances. If the tests for these exceptional cases don’t exist, then the reviewer has to go through the code to see if they have been handled.

If the code exists but the tests don’t, it’s up to your team to decide what your policies are – do you make the author add those tests? Or are you satisfied that the code review proved the edge cases were covered?

Are there tests to document the limitations of the code?

As a reviewer, it’s often possible to see limitations in the code being reviewed. These limitations are sometimes intentional – for example, a batch process that can only handle a maximum of 1000 items per batch.

One approach to documenting these intentional limitations would be to explicitly test them. In our example above, we might have a test that proves that some sort of exception is thrown if your batch size is bigger than 1000.

It’s not mandatory to express these limitations in an automated test, but if an author has written a test that shows the limits of what they’ve implemented, having a test implies these limits are intentional (and documented) and not merely an oversight.

Are the tests in the code review the right type/level?

For example, is the author doing expensive integration tests where a unit test might suffice? Have they written performance micro-benchmarks that will not run effectively or in a consistent fashion in the CI environment?

Ideally your automated tests will run as quickly as possible, which means that expensive end-to-end tests may not be required to check all types of features. A method that performs some mathematical function, or boolean logic check, seems like a good candidate for a method-level unit test.

Are there tests for security aspects?

Security is one area that code reviews can really benefit. We’ll do a whole post on security later, but on the testing topic, we can write tests for a number of common problems. For example, if we were writing the log-in code above, we might want to also write a test that shows that we cannot enter the protected area of the site (or call protected API methods) without first authenticating.

Performance Tests

In the previous post I talked about performance as being an area a reviewer might be examining. Automated performance tests are obviously another type of test that I could have explored in this article, but I will leave discussion of these types of tests for a later article about looking specifically at performance aspects in a code review.

Reviewers can write tests too.

Different organisations have different approaches to code reviews – sometimes it’s very clear that the author is responsible for making all the code changes required, sometimes it’s more collaborative with the reviewer committing suggestions to the code themselves.

Whichever approach you take, as a reviewer you may find that writing some additional tests to poke at the code in the review can be very valuable for understanding that code, in the same way that firing up the UI and playing with a new feature is valuable. Some methods and code review tools make it easier to experiment with the code than others. It’s in the team’s interest to make it as easy as possible to view and play with the code in a code review.

It may be valuable to submit the additional tests as part of the review, but equally it may not be necessary, for example if experimentation has given me, the reviewer, satisfactory answers to my questions.

In Summary

There are many advantages to performing a code review, no matter how you approach the process in your organisation. It’s possible to use code reviews to find potential problems with the code before it is integrated into the main code base, while it’s still inexpensive to fix and the context is still in the developer’s head.

As a code reviewer, you should be checking that the original developer has put some thought into the ways his or her code could be used, under which conditions it might break, and dealt with edge cases, possibly “documenting” the expected behaviour (both under normal use and exceptional circumstances) with automated tests.

If the reviewer looks for the existence of tests and checks the correctness of the tests, as a team you can have pretty high confidence that the code works. Moreover, if these tests are run regularly in a CI environment, you can see that the code continues to work – they provide automated regression checking. If code reviewers place a high value on having good quality tests for the code they are reviewing, the value of this code review continues long after the reviewer presses the “Accept” button.