Big Data Tools

A data engineering plugin

dbt® deeper concepts: materialization

In the first part of this blog series, I described basic dbt® concepts such as installation, creation of views, and describing models. I could have stopped there, but indeed, there are some drawbacks to only using views to build the whole transformation layer in our database. Sometimes we don’t really need to use a view and a view may run slowly even in databases oriented toward analytical workflows.

I’ll start by giving an overview of ephemeral views.

Ephemeral views

In some cases, we don’t really want to have an entity for a dbt® model, rather we want this model to be inlined in other entities. As an example, take a look at the first model from the previous post. It’s very basic. I reckon we’ll be ok with inlining it into the second model. To make the model ephemeral, we need to perform one single action:

Add a line {{ config(materialized='ephemeral') }} to the top of the model.

If we do it with our user model, the code will become the following:

{{ config(materialized='ephemeral') }}

SELECT * FROM customer

After running the dbt build command (and deleting all the existing views just in case), I see that there is now only one view in the DB: user_post_count. It is exactly as it was defined in the user_post_count model, but the compiled code is different, it’s not:

WITH users AS (

SELECT u.id user_id, u.username username

FROM "demo"."public"."user" u

)

SELECT user_id,

username,

count(post.id) post_number

FROM users,

post

WHERE post.author = user_id

GROUP BY user_id, username

but rather:

WITH __dbt__cte__user as (

select * from customer

),users AS (

SELECT u.id user_id, u.username username

FROM __dbt__cte__user u

)

SELECT user_id,

username,

count(post.id) post_number

FROM users,

post

WHERE post.author = user_id

GROUP BY user_id, username

Do you see how this trick works? dbt added a new, “synthetic” CTE named __dbt__cte__user, and now uses this instead of the call to view, named user. But all the tests still run!

If you want to check what will be executed, you can call dbt compile and then look at everything that is generated, including tests in the target directory.

Of course, ephemeral views do not solve execution speed problems– it is mainly just syntactic sugar.

What if we need to materialize the data because the queries are taking too long?

Table views

To make the model materialize into a table, we just need to add the appropriate config to it:

{{ config(materialized='table') }}

WITH users AS (

SELECT u.id user_id, u.username username

FROM {{ ref('user') }} u

)

SELECT user_id,

username,

count(post.id) post_number

FROM users,

post

WHERE post.author = user_id

GROUP BY user_id, username

After execution, we will find a table named user_post_count in our database. There are cons with such a solution that are obvious. For instance, we will need to rebuild the whole thing every time and, as we know, when we’re working with a large amount of data, queries can take hours to complete. More data means that more time is required to build a view. And what if we need to have lots of materialized views of this sort? It could take forever!

The solution to this is to use incremental models!

Incremental models

Incremental views allow you to store only the changed/new parts of underlying entities. Of course, there is no magic solution, so making incremental models is a bit more complex and involved.

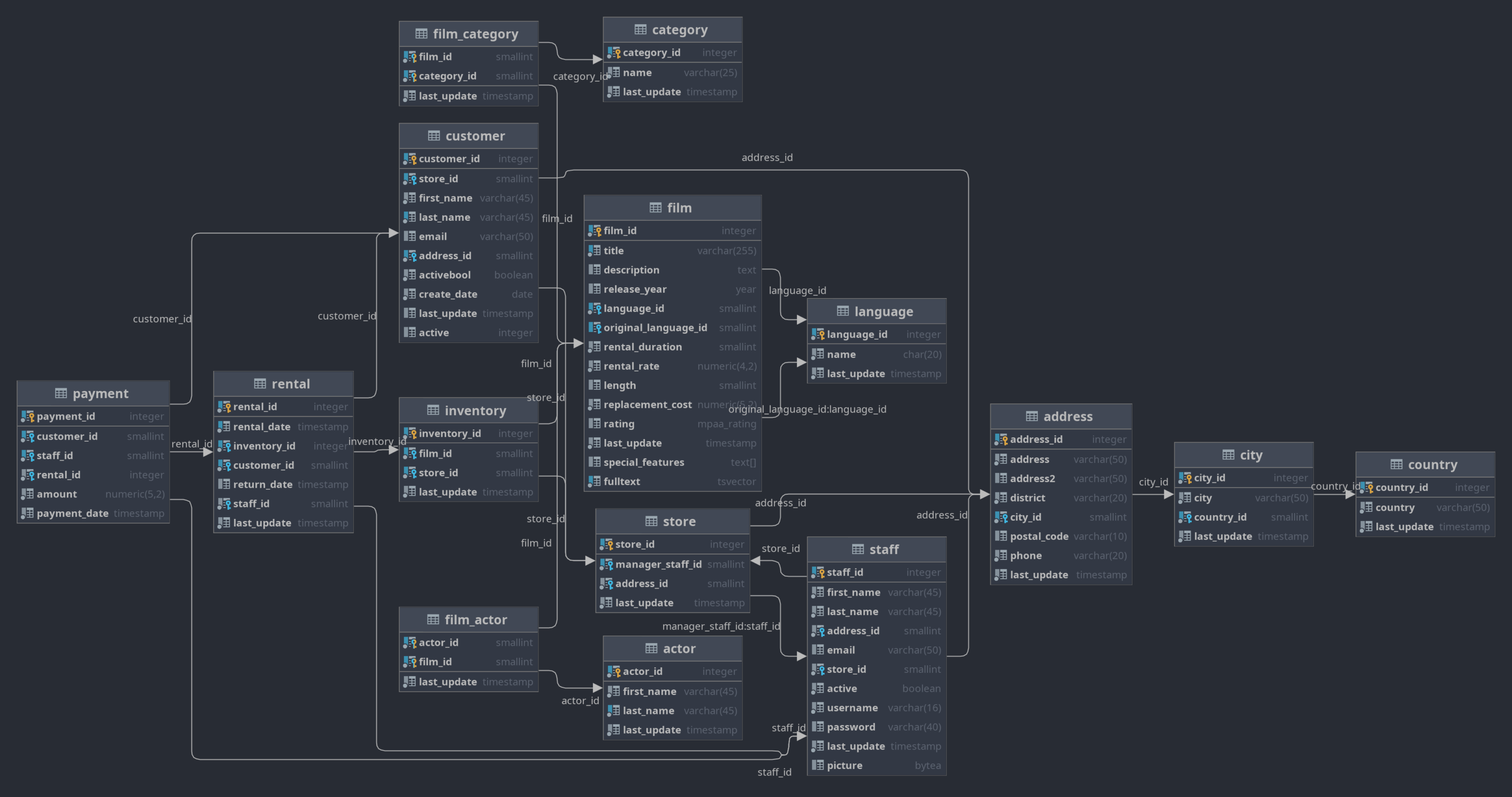

In this case, it won’t be enough to use any of the examples from the previous blog post, so I will use something else. I have chosen the Sakila database.

Sakila database

The Sakila database is a normalized model of the DVD rental business. It was originally developed by Mike Hillyer for MySQL. It has since been ported to other databases by DB Software Laboratory (http://www.etl-tools.com).

You can find everything you need to deploy the Sakila database to PostgreSQL on GitHub at jOOQ repository, courtesy of Lukas Eder.

What is very cool about Sakila is that it already contains everything needed to build incremental views! What makes Sakila so different from our previous test database? Well, first off, every table contains a column called last_updated. We can use these columns to determine if the model needs to be updated and what data should be added there.

First incremental model

Let’s make an example of what can be counted incrementally in our DVD rental store. The first idea that comes to my mind is the total amount of daily payments.

So, let’s start with a simple model: daily_payment.sql

SELECT sum(amount) daily_amount, date(payment_date) date FROM payment GROUP BY date(payment_date)

and describe it in an YAML file

models:

- name: daily_payment

description: "Total amount of payments, daily"

columns:

- name: daily_amount

type: decimal

tests:

- not_null

- name: date

type: date

tests:

- not_null

- unique

Of course, a view named daily_payment will appear in the DB, and it will take longer to open it each time. In our example, there is not a huge amount of data in the payments table, but in real life, it would be different. It looks like an awesome candidate to become an incremental model.

The first thing we need to do is obvious – we should define a different materialization type in the config:

{{ config(materialized='incremental') }}

SELECT sum(amount) daily_amount, date(payment_date) date

FROM payment

GROUP BY date(payment_date)

But this time around, this is not enough. We need to define a way for dbt to find an increment. Doing so will require one more instance of Jinja magic:

{{ config(materialized='incremental') }}

SELECT sum(amount) daily_amount, date(payment_date) date

FROM payment

{% if is_incremental() %}

WHERE date(payment_date) > (SELECT max(date) from {{this}})

{% endif %}

GROUP BY date(payment_date)

In this code, you can see an is is_incremental() block, which adds an additional condition to our SQL query. Why do we need a separate block? Why can’t we just include the WHERE condition in the main body of our SQL query? The reason is that we need to be able to change our model to a different type by replacing only one literal.

There is one more thing we need to look at in this model: the {{this}} term inside the sub-query. This term refers to the current view (daily_payment in our case). It’s the part of the Jinja magic that allows us to change the name of the model without rewriting the whole query: dbt is made with refactoring in mind!

We have the option of defining a unique key constraint for our model. This will ensure that the rows are being updated, not added, when data changes. I won’t demonstrate it here because payments are in order, we hope.

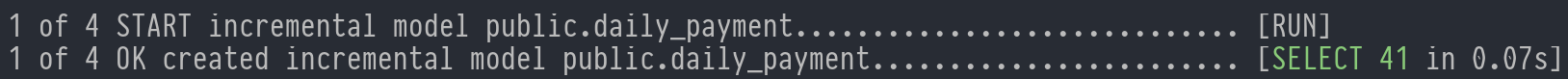

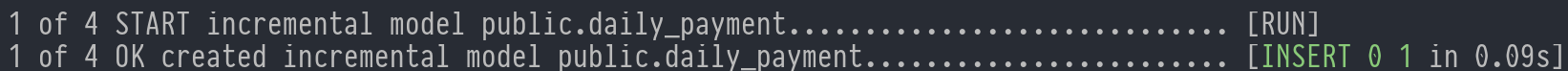

Let’s build our model…

And look at the generated SQL:

create table "sakila"."public"."daily_payment" as ( SELECT sum(amount) daily_amount, date(payment_date) date FROM payment GROUP BY date(payment_date) );

Hmm, that is interesting! There is no sign of our WHERE clause here! Apparently, when there is no materialization of a current model, dbt is smart and throws out everything incremental-related. That is another reason to have an is is_incremental() block! But how will it work when we put a bit more data into the payments table? Let’s insert the data in now:

INSERT INTO payment(customer_id, staff_id, rental_id, amount, payment_date) VALUES (19, 2, 234, 3.99, now()); INSERT INTO payment(customer_id, staff_id, rental_id, amount, payment_date) VALUES (19, 2, 235, 2.99, now()); INSERT INTO payment(customer_id, staff_id, rental_id, amount, payment_date) VALUES (19, 2, 236, 5.99, now());

Now let’s call dbt build again. At first glance, it seems like nothing has changed:

But if we look at the compiled SQL, we can see that something is different!

insert into "sakila"."public"."daily_payment" ("daily_amount", "date")

(

select "daily_amount", "date"

from "daily_payment__dbt_tmp103757815292"

)

dbt, I see what you did there! dbt creates a temporary view with the necessary data only. After that, the data is inserted into our incremental table from this temporary view and the temporary view is dropped.

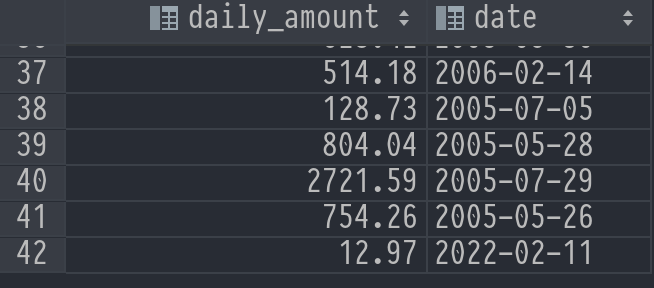

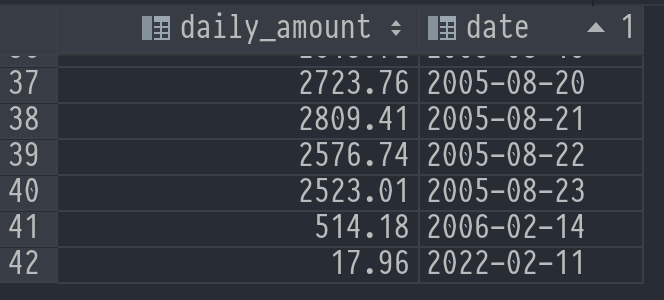

Of course, after execution we can see a new line in our table:

The issue here is that the day is not over yet, so if I rebuild the model in the middle of the day (to obtain my very important report ASAP), the data will be incomplete!

This is another chance to apply our knowledge about unique constraints!

Incremental with unique constraint

Let’s update our model a bit:

{{

config(

materialized='incremental',

unique_key='date'

)

}}

SELECT sum(amount) daily_amount, date(payment_date) date

FROM payment

{% if is_incremental() %}

WHERE date(payment_date) >= (SELECT max(date) from {{this}})

{% endif %}

GROUP BY date(payment_date)

A couple things to notice here are:

- I’ve added a

unique_keyconfiguration. - I’ve replaced

>with>=in the incremental predicate.

Now let’s rebuild our model. This command will refresh all the incremental views. This step is necessary because we have made several changes that dbt doesn’t know about (yet).

dbt build --full-refresh

Of course, in the table, we will find the same $12.97 for today, so let’s add one more payment:

INSERT INTO payment(customer_id, staff_id, rental_id, amount, payment_date) VALUES (19, 2, 237, 4.99, now());

…and then execute dbt build again. The table is updated and the manager will be happy!

Summary

In this post, we learned about three more types of model materialization (table, ephemeral and incremental) that are available in addition to the default ones. incremental models require more effort to make them work, but this effort pays off! If you want to know more about view materialization, please refer to dbt docs to find more details on incremental views, for example, how to update only a portion of the models, not all of them.

dbt continues to show itself to be an extremely easy-to-use tool for transformation layer creation.

Follow this blog for the next part: the holy grail of data engineering – SQL testing!

Subscribe to Big Data Tools Blog updates