Upsource

Code Review and Project Analytics

What to look for in a Code Review: Security

This is part 6 of 6 posts on what to look for in a code review. See previous posts from the series.

How much work you do building a secure, robust system is like anything else on your project – it depends upon the project itself, where it’s running, who’s using it, what data it has access to, etc. Often, if our team doesn’t have access to security experts, we go too far in one direction or the other: either we don’t pay enough attention to security issues, or we go through some compliance checklist and try to address everything in some 20-page document filled with potential issues.

As usual, this blog post aims to highlight some areas you might like to look at when reviewing code, but mostly it aims to get you having discussions within your team or organisation to figure out what it is you do need to care about in a code review.

Automation is your friend

A surprising number of security checks can be automated, and therefore should not need a human. Security tests don’t necessarily have to be full-blown penetration testing with the whole system up and running; some problems can be found at the code level.

Common problems like SQL Injection or Cross-site Scripting can be found via tools running in your Continuous Integration environment. You can also automate checking for known vulnerabilities in your dependencies via the OWASP Dependency Check tool.

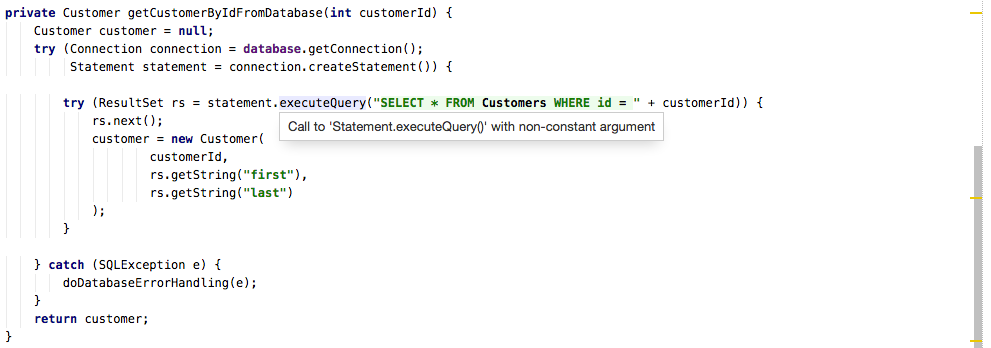

Of course, Upsource also provides numerous security inspections. These can inform a reviewer of potential security problems in the code. For example, this code executes a dynamically generated SQL string, which might be susceptible to SQL Injection:
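To make the SQL Injection concern concrete, here is a minimal sketch of the pattern such an inspection typically flags, next to the usual parameterised alternative. The class name, method names and the `users` table are hypothetical and only there for illustration.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;

public class UserQueries {

    // Risky: the caller's input becomes part of the SQL text itself,
    // so a crafted value can change the meaning of the query.
    static String unsafeSql(String name) {
        return "SELECT id, name FROM users WHERE name = '" + name + "'";
    }

    // Safer: the SQL text is fixed and the value is bound as a parameter,
    // so the driver treats it as data rather than as SQL.
    static PreparedStatement safeStatement(Connection connection, String name)
            throws SQLException {
        PreparedStatement statement = connection.prepareStatement(
                "SELECT id, name FROM users WHERE name = ?");
        statement.setString(1, name);
        return statement;
    }
}
```

Passing a value such as `x' OR '1'='1` to `unsafeSql` produces a query whose WHERE clause matches every row, which is exactly the kind of thing a reviewer wants to catch.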

Sometimes “It Depends”

While there are checks where you can feel comfortable with a “yes” or “no” answer, sometimes you want a tool to point out potential problems and then have a human decide whether they need to be addressed. This is an area where Upsource can really shine. Upsource displays code inspections that a reviewer can use to decide if the code needs to be changed or is acceptable under the current situation.

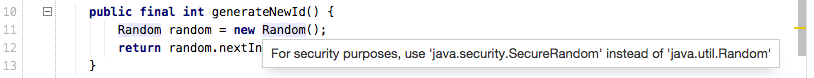

For example, suppose you’re generating a random number. If all your security checks are enabled, you’ll see the following warning in Upsource:

The JavaDoc for java.util.Random specifically states “Instances of java.util.Random are not cryptographically secure”. This may be fine for many of the occasions when you need an arbitrary random number. But if you’re using it for something like session IDs, password reset links, nonces or salts, as a reviewer you might suggest replacing Random with java.security.SecureRandom.

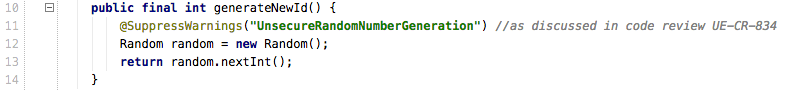
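As a rough illustration of the kind of replacement a reviewer might suggest, here is a minimal sketch that generates a token (for a password reset link, say) with SecureRandom. The class name, method name and the 32-byte token size are assumptions made for this example, not a recommendation for your system.

```java
import java.security.SecureRandom;
import java.util.Base64;

public class TokenSketch {

    // SecureRandom instances are thread-safe, so one can be shared.
    private static final SecureRandom RANDOM = new SecureRandom();

    // Returns 32 random bytes encoded as URL-safe Base64 without padding.
    static String newToken() {
        byte[] bytes = new byte[32];
        RANDOM.nextBytes(bytes);
        return Base64.getUrlEncoder().withoutPadding().encodeToString(bytes);
    }
}
```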

If you and the code author decide that Random is appropriate for this situation, then it’s a good idea to suppress this inspection for this line of code, and document why it’s OK or point to any discussion on the subject – this way future developers looking at the code can understand this is a deliberate decision.
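A suppression with an explanation might look something like the sketch below. The inspection ID used here, `UnsecureRandomNumberGeneration`, is our assumption of the ID IntelliJ IDEA uses for this warning; check the ID your IDE generates when you suppress it. The class and its purpose are hypothetical.

```java
import java.util.List;
import java.util.Random;

public class LoadingTips {

    // Deliberately java.util.Random: this only picks which tip to show on a
    // loading screen, so predictability is not a security concern.
    // Agreed in code review; link to the discussion here.
    @SuppressWarnings("UnsecureRandomNumberGeneration") // assumed inspection ID
    private static final Random RANDOM = new Random();

    // Picks one tip at random from a non-empty list.
    static String pick(List<String> tips) {
        return tips.get(RANDOM.nextInt(tips.size()));
    }
}
```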

So while tools can definitely point you at potential issues, part of your job as a code reviewer is to investigate the results of any automated checks and decide which action to take.

If you are using Upsource to review your code, you can customise your inspection settings, including selecting security settings. Do this by opening your project in IntelliJ IDEA and navigating to the Inspections settings. Select the settings you want and save them to the Project Default profile. Make sure Project_Default.xml is checked in with your project code, and Upsource will use this to determine which inspections to run.

At the time of writing, these are the available security inspections:

Understand your Dependencies

Let’s move on to other areas that need a human reviewer. One of the areas where security vulnerabilities can creep into your system or code base is via third party libraries. When reviewing code, at the very least you want to check if any new dependencies (e.g. third party libraries) have been introduced. If you aren’t already automating the check for vulnerabilities, you should check for known issues in newly-introduced libraries.

You should also try to minimise the number of versions of each library – not always possible if other dependencies are pulling in additional transitive dependencies. But one of the simplest ways to minimise your exposure to security problems in other people’s code (via libraries or services) is to:

- Use as few sources as possible and understand how trustworthy they are

- Use the highest quality library you can

- Track what you use and where, so if new vulnerabilities do become apparent, you can check your exposure.

This means:

- Understanding your sources (e.g. Maven Central or your own repo vs arbitrarily downloaded jar files)

- Trying not to use 5 different versions of 3 different logging frameworks (for example)

- Being able to view your dependency tree, even if it’s simply through Gradle/Maven
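If you can export your dependencies as a flat list, even a small script can flag libraries that appear in more than one version. The sketch below assumes coordinates in a `group:artifact:version` form; the class name and the input list are made up for illustration, and in practice your build tool’s own dependency report is the better source of truth.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

public class DependencyVersions {

    // Groups versions by "group:artifact" and keeps only the libraries
    // that appear with two or more distinct versions.
    static Map<String, Set<String>> multipleVersions(List<String> coordinates) {
        Map<String, Set<String>> versions = new TreeMap<>();
        for (String coordinate : coordinates) {
            String[] parts = coordinate.split(":");
            if (parts.length != 3) {
                continue; // skip anything not in group:artifact:version form
            }
            versions.computeIfAbsent(parts[0] + ":" + parts[1], key -> new TreeSet<>())
                    .add(parts[2]);
        }
        versions.values().removeIf(found -> found.size() < 2);
        return versions;
    }
}
```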

Check if new paths & services need to be authenticated

Whether you’re working on a web application, or providing web services or some other API which requires authentication, when you add a new URI or service, you should ensure that this cannot be accessed without authentication (assuming authentication is a requirement of your system). You may simply need to check that the developer of the code wrote an appropriate test to show that authentication has been applied.
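What might such a test check? As a sketch only, the code below uses the JDK’s built-in HTTP server and client (Java 11+) to show the two assertions a reviewer would hope to see: a request without credentials is rejected, and one with valid credentials succeeds. The `/reports` path, the class name and the hard-coded test credentials are all hypothetical; your framework will have its own way of expressing this.

```java
import com.sun.net.httpserver.BasicAuthenticator;
import com.sun.net.httpserver.HttpContext;
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

public class AuthCheckSketch {

    // Starts a throwaway server on a free local port with one protected path.
    static HttpServer startServer() throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        HttpContext context = server.createContext("/reports", exchange -> {
            byte[] body = "ok".getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            exchange.getResponseBody().write(body);
            exchange.close();
        });
        // Test-only credentials, hard-coded purely for this sketch.
        context.setAuthenticator(new BasicAuthenticator("reports") {
            @Override
            public boolean checkCredentials(String user, String password) {
                return "reviewer".equals(user) && "test-only".equals(password);
            }
        });
        server.start();
        return server;
    }

    // Sends a GET to the protected path, optionally with an Authorization
    // header, and returns the HTTP status code.
    static int statusFor(HttpServer server, String authorizationHeader)
            throws IOException, InterruptedException {
        URI uri = URI.create("http://127.0.0.1:" + server.getAddress().getPort() + "/reports");
        HttpRequest.Builder request = HttpRequest.newBuilder(uri).GET();
        if (authorizationHeader != null) {
            request.header("Authorization", authorizationHeader);
        }
        return HttpClient.newHttpClient()
                .send(request.build(), HttpResponse.BodyHandlers.discarding())
                .statusCode();
    }
}
```

A test built on this would expect `statusFor(server, null)` to return 401, and a request carrying a valid `Basic` header to return 200.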

You should also consider that authentication isn’t just for human users with a username and password. Identity might need to be defined for other systems or automated processes accessing your application or services. This may impact your concept of “user” in your system.

Does your data need to be encrypted?

When you’re storing something on disk or sending things over the wire, you need to know whether that data should be encrypted. Obviously passwords should never be in plain text, but there are plenty of other times when data needs to be encrypted. If the code under review sends data over the wire, saves it somewhere, or otherwise lets it leave your system, and you don’t know whether it should be encrypted, try to locate someone in your organisation who can answer that question.
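If the answer is “yes, encrypt it”, it helps to know roughly what reasonable code looks like. The following is a minimal sketch using AES-GCM from the JDK’s javax.crypto package; the class name, the 256-bit key size and the layout (IV stored in front of the ciphertext) are choices made for this example. Key storage is deliberately left out, since that is its own topic.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;

public class EncryptionSketch {

    private static final int IV_BYTES = 12;   // usual IV size for GCM
    private static final int TAG_BITS = 128;  // authentication tag length

    static SecretKey newKey() throws GeneralSecurityException {
        KeyGenerator generator = KeyGenerator.getInstance("AES");
        generator.init(256);
        return generator.generateKey();
    }

    // Returns a fresh random IV followed by the ciphertext (which includes the tag).
    static byte[] encrypt(SecretKey key, String plainText) throws GeneralSecurityException {
        byte[] iv = new byte[IV_BYTES];
        new SecureRandom().nextBytes(iv);
        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(TAG_BITS, iv));
        byte[] cipherText = cipher.doFinal(plainText.getBytes(StandardCharsets.UTF_8));
        return ByteBuffer.allocate(iv.length + cipherText.length)
                .put(iv).put(cipherText).array();
    }

    // Reads the IV from the front of the message, then decrypts and verifies the rest.
    static String decrypt(SecretKey key, byte[] message) throws GeneralSecurityException {
        Cipher cipher = Cipher.getInstance("AES/GCM/NoPadding");
        cipher.init(Cipher.DECRYPT_MODE, key,
                new GCMParameterSpec(TAG_BITS, message, 0, IV_BYTES));
        byte[] plain = cipher.doFinal(message, IV_BYTES, message.length - IV_BYTES);
        return new String(plain, StandardCharsets.UTF_8);
    }
}
```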

Are secrets being managed correctly?

Secrets are things like passwords (user passwords, or passwords to databases or other systems), encryption keys, tokens and so forth. These should never be stored in code, or in configuration files that get checked into the source control system. There are other ways of managing these secrets, for example via a secrets server. When reviewing code, make sure these things don’t accidentally sneak into your VCS.
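One simple alternative to hard-coding, sketched below, is to read the secret from the process environment and fail fast if it is missing. The class name and the `DB_PASSWORD` variable name are hypothetical; a secrets server would replace the environment lookup with a call to that service.

```java
import java.util.Map;

public class SecretsSketch {

    // Looks up a secret by name and refuses to continue without it,
    // rather than silently falling back to a default baked into the code.
    static String require(Map<String, String> environment, String name) {
        String value = environment.get(name);
        if (value == null || value.isEmpty()) {
            throw new IllegalStateException("Missing required secret: " + name);
        }
        return value;
    }
}
```

In application code this would be called as `SecretsSketch.require(System.getenv(), "DB_PASSWORD")`; passing the map in makes the lookup easy to test without touching the real environment.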

Should the code be logging/auditing behaviour? Is it doing so correctly?

Logging and auditing requirements vary by project, with some systems requiring compliance with stricter rules for logging actions and events than others. If you do have guidelines on what needs logging, when and how, then as a code reviewer you should be checking the submitted code meets these requirements. If you do not have a firm set of rules, consider:

- Is the code making any data changes (e.g. add/update/remove)? Should it make a note of the change that was made, by whom, and when?

- Is this code on some performance-critical path? Should it be making a note of start-time and end-time in some sort of performance monitoring system?

- Is the logging level of any logged messages appropriate? A good rule of thumb is that “ERROR” is likely to cause an alert to go off somewhere, possibly on some poor on-call person’s pager – if you do not need this message to wake someone up at 3am, consider downgrading to “INFO” or “DEBUG”. Messages inside loops, or other places that are likely to be output more than once in a row, probably don’t need to be spamming your production log files, therefore are likely to be “DEBUG” level.
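The questions above can be sketched in code. The example below uses java.util.logging, where FINE plays roughly the role of “DEBUG”; the class, the logger name and the account-removal scenario are hypothetical. It records one summary entry for the data change (who, what, when) and keeps the per-item messages inside the loop at the lower level.

```java
import java.time.Instant;
import java.util.List;
import java.util.logging.Level;
import java.util.logging.Logger;

public class AuditLogSketch {

    static final Logger LOG = Logger.getLogger("audit.sketch");

    // Pretends to remove the given accounts and returns how many were removed.
    static int removeInactive(List<String> accountIds, String requestedBy) {
        int removed = 0;
        for (String id : accountIds) {
            // Per-item detail inside a loop: low level, off by default.
            LOG.log(Level.FINE, "Removing account {0}", id);
            removed++;
        }
        // One audit-style entry for the change: who did what, and when.
        LOG.log(Level.INFO, "{0} removed {1} accounts at {2}",
                new Object[] {requestedBy, removed, Instant.now()});
        return removed;
    }
}
```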

Conclusion

This is just a tiny subset of the sorts of security issues you can be checking in a code review. Security is a very big topic, big enough that your company may hire technical security experts, or at least devote some time or resources to this area. However, like other non-coding activities such as getting to know the business and having a decent grasp of how to test the system, understanding the security requirements of our application, or at least of the feature or defect we’re working on right now, is another facet of our job as developers.

We can enlist the help of security experts if we have them, for example inviting them to the code review, or inviting them to pair with us while we review. Or if this isn’t an option, we can learn enough about the environment of our system to understand what sort of security requirements we have (internal-facing enterprise apps will have a different profile to customer-facing web applications, for example), so we can get a better understanding of what we should be looking for in a code review.

And like many other things we’re tempted to look for in code reviews, many security checks can also be automated, and should be run in our continuous integration environment. As a team, you need to discuss which things are important to you, whether checking these can be automated, and which things you should be looking for in a code review.

This post has barely scratched the surface of potential issues. We’d love to hear from you in the comments – let us know of other security gotchas we should be looking for in our code.