Comparing database/sql, GORM, sqlx, and sqlc

This article was written by an external contributor.

Go is known for its simplicity, performance, and complete standard library. Out of the box, Go’s library supports many common operations that many other languages need third-party libraries to perform or may not even support at all. These operations are contained in various packages that handle features such as concurrency, networking, I/O, and text processing.

database/sql is a standard library package responsible for connectivity and interaction with databases (mostly SQL relational ones). It provides generic interfaces, types, and methods for SQL-like interactions. Though it supports many essential modern database features, such as transactions and prepared statements, it also has some limitations. For example, it suffers from a type limitation in which large uint64 values cannot be passed to statements as parameters.

This article compares the database/sql package with 3 other Go packages, namely sqlx, sqlc, and GORM. The comparison of these 4 tools focuses on 3 main areas:

- Features – Core features are explored, including what makes the tools unique and what they lack.

- Ease of use – Comparative ease of use is demonstrated using code samples performing the same operations for each tool.

- Performance/speed – Performance is measured by benchmarking a few database operations using the tools from the standard library’s testing package.

Features

This section looks at the features offered by each package. This comparison uses the base features of the database/sql package as the minimum requirement in terms of features, as all the packages were created to expand the functionality provided by the default package.

database/sql

database/sql was created with simplicity in mind, configured to support the most basic but necessary functionality for interacting with SQL-like databases.

To interact with database management systems, database packages need the appropriate drivers. Currently, database/sql supports a list of over 50 database drivers, covering the most popular DBMSs such as SQLite, MySQL/MariaDB, PostgreSQL, Oracle, and MS SQL Server.

This package also supports features such as basic CRUD operations, database transactions, named parameters, returning multiple result sets, cancellable queries, SQL type support, connection pool management, parameterized queries, and prepared statements.

sqlx

sqlx was created to extend the features of the standard library database package. Since it relies on the database/sql package, all of the features provided by that are also available, including support for the same set of database technologies and drivers.

Outside of these core features, sqlx shines in the following ways:

- Prepared statements with named parameters – These enable you to use the names of struct fields and map keys to bind variables in a prepared statement or query.

- Struct scanning – This allows you to scan query results directly into structs for a single row without having to individually scan each field or column, as is the case with database/sql. It also supports scanning into embedded structs.

SelectandGet– These are convenient methods for handling queries expected to return multiple records or a single record into a slice of a struct or a single struct, respectively. No need to loop on result sets!- Support for IN queries – This allows you to bind a slice of values as a single parameter to an IN query. The slice is spread out with the same number of bindvars in the expected places, compared to database/sql treating the slice as a single value.

- Named queries – This binds the names of struct fields to column names, avoiding the need for positional references to column names when assigning values to bindvars.

- Error-free result sets: Result sets do not return with errors, which allows for chainable operations on returned results, such as scanning results directly into a struct. This can be seen in the following snippet:

var p Place

err := db.QueryRowx("SELECT city, telcode FROM place LIMIT 1").StructScan(&p)

These are only a few examples of the many features of the sqlx package that ensure a better working experience than database/sql.

sqlc

sqlc is an SQL compiler bundled as an executable binary file and can generate type-safe code for your raw SQL schema and queries. So, you don’t have to write any boilerplate code other than your actual SQL statements.

According to the documentation, it can generate code for PostgreSQL, MySQL/MariaDB, and SQLite. However, the generated code also works with the standard library’s SQL package; therefore, it can use all supported drivers but not necessarily supported databases. Aside from supported databases and drivers, the following are some of its other features:

- Query annotations – These are various annotations that allow you to define the name of the function for each query and the type of result expected from the query. These are used during code generation to determine the name and signature of the function.

- JSON tags – sqlc supports the generation of JSON tags for structs or types that will be marshaled and sent to clients as JSON.

- Schema modifications – sqlc has support for reading various formats of migration files to modify schema and generate code to reflect those changes.

- Struct naming – sqlc provides options for naming schemes used to generate struct names from table names.

If you’re good with SQL statements and prefer not to use much code to perform database operations and handle the data, this is definitely your package.

GORM

GORM is a full-featured object-relational mapper (ORM) library for the Go language. It lets you write queries as Go code by calling various methods on objects, which GORM translates into SQL queries when interacting with the database. It provides a rich set of features for database interactions, some of which are listed below:

- Databases and drivers – According to the official documentation, GORM supports MySQL/MariaDB, PostgreSQL, SQLite, SQL Server, and ClickHouse. It also provides the drivers for these database systems. All the same, you may pass on an existing database/sql connection to it, opening it up to possible compatibility with all other databases and drivers supported by the database/sql package.

- Migrations – GORM supports database migration management for modifying schemas or reverting changes. It makes various provisions for that, such as being able to create tables from structs.

- Relationships – You can define database relationships using models or structs with annotations in tags and nested structs. One-to-one, many-to-many, and one-to-many relationships can be achieved.

- Transactions – GORM provides support for transactions, nested transactions, committing and rolling back transactions, etc.

- Database resolver – This allows you to access multiple database and schema sources in one application. This also allows connection switching (automatic and manual) depending on the struct you’re accessing or database availability and load balancing.

- SQL builder – The provided SQL builder supports various forms of SQL and SQL clauses. These include but are not limited to CRUD operations, subqueries, prepared statements, named arguments, upserts, SQL expressions, joins, etc.

- Hooks – These are interfaces that can be implemented to undertake certain activities before or after a DML statement is executed.

This is a non-exhaustive list of this package’s features; it has a wide range of other features that ensure it performs well in almost any use case.

Ease of Use

To maximize productivity, it’s important that a library is easy to use for both experienced developers and newbies. This section looks at how easy it is to get started with each library, considering the available documentation, tutorials (textual and video), and community support. This section also looks at code samples for a few common operations and the learning curve involved.

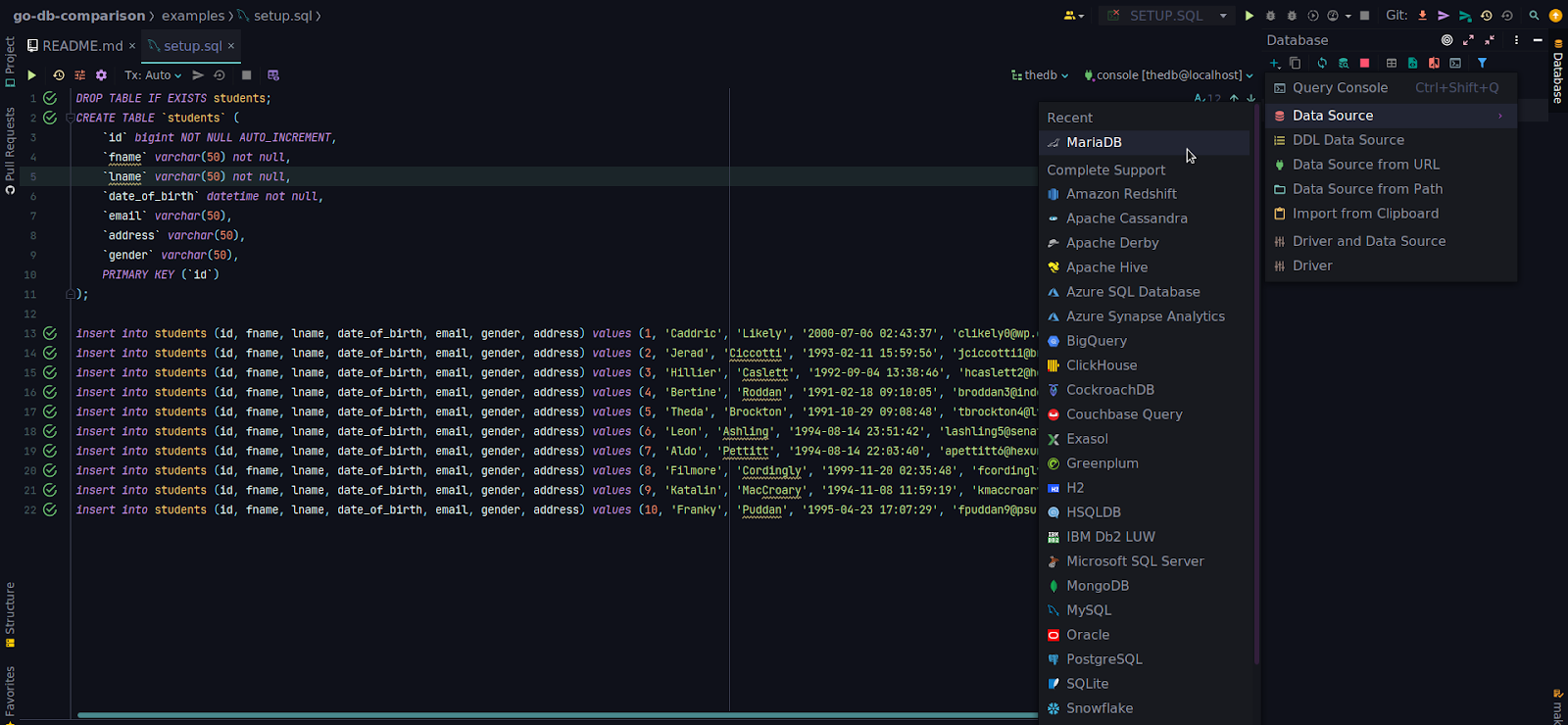

The code samples for this section are provided in examples directory in this repository on GitHub. To run the code samples, you can connect to a database in GoLand. You can use MySQL or MariaDB for this. Make sure that you have either of those installed.

To connect to a database, open the Database tool window, click on the plus icon, and choose Add data source.

Provide a name for this database connection you’re setting up and all the necessary credentials for your setup. This example uses localhost as the host, default MySQL/MariaDB port 3306, a user called theuser, a hidden password of value thepass, and a database named thedb. GoLand will suggest downloading the necessary drivers if they haven’t been downloaded before. Finally, click on the Test Connection link at the bottom-left of the screen. This will validate the credentials by trying to establish a connection to the specified database using the user and password credentials you provided.

Hopefully, you have connected to your database. Now you can run the setup.sql script – just open it and press on the green arrow at the top left corner or press ⌘⏎ /Ctrl+Enter to execute statements.

If everything went smoothly, you will see your table in the tables list. You can click on the students table twice to see the rows.

database/sql

For experienced developers, database/sql has detailed, technically advanced documentation. Additionally, there’s a great tutorial for beginners. The syntax is quite simple, but you’ll need to check for errors often, which means more code. As the go-to package in the standard library, it also has a huge following and strong support from the entire Go dev community.

If you’re comfortable writing your own SQL queries, this package is a good option, especially for basic queries and basic types or structs with a small set of fields.

The following snippet demonstrates how to use the database/sql package with the MySQL driver to insert a record:

// Function to add a record and return the record ID if successful, if not then an error

func addStudent(s Student) (int64, error){

// Insert statement listing all the fields and providing their respective values

query := "insert into students (fname, lname, date_of_birth, email, gender, address) values (?, ?, ?, ?, ?, ?);"

result, err := db.Exec(query, s.Fname,s.Lname, s.DateOfBirth, s.Email, s.Gender, s.Address)

if err != nil {

return 0, fmt.Errorf("addStudent Error: %v", err)

}

// Check to get the ID of the inserted record

id, err := result.LastInsertId()

if err != nil {

return 0, fmt.Errorf("addStudent Error: %v", err)

}

return id, nil

}

As you can see, the insert operation is very similar to writing direct SQL statements. You’ll also see that you need to type each field and its associated value separately. Unfortunately, maintaining the code in large structs or complex types becomes cumbersome over time and increases the chance of introducing errors, which may only be caught during runtime.

The snippet below demonstrates how to retrieve multiple records using the database/sql package:

// Function to fetch multiple records

func fetchStudents() ([]Student, error) {

// A slice of Students to hold data from returned rows

var students []Student

rows, err := db.Query("SELECT * FROM students")

if err != nil {

return nil, fmt.Errorf("fetchStudents %v", err)

}

defer rows.Close()

// Loop through rows, using Scan to assign column data to struct fields

for rows.Next() {

var s Student

if err := rows.Scan(&s.ID, &s.Fname, &s.Lname, &s.DateOfBirth, &s.Email, &s.Address, &s.Gender ); err != nil {

return nil, fmt.Errorf("fetchStudents %v", err)

}

students = append(students, s)

}

if err := rows.Err(); err != nil {

return nil, fmt.Errorf("fetchStudents %v", err)

}

return students, nil

}

In the snippet above, after fetching the records, you loop through them, use row.Scan() to individually scan each field of each record into a struct, then append the struct to a slice. You need to be careful, as the number of fields in the struct provided must equal the number of fields in the records returned. You should also note that it’s difficult to work with queries using the IN clause, as it treats IN bindvar values as a single value and not multiple values.

sqlx

sqlx also provides detailed technical documentation. As it’s mainly an extension of the Go database/sql package, there’s also a comprehensive guide on using this package with database/sql.

On GitHub, sqlx boasts over 12,800 stars, indicating a strong following and a very active issue list.

If you’re coming from a database/sql background, you’ll have a smooth transition, as queries are similar in syntax and compatible. From the snippets below, you’ll notice quite a lot of similarities with the database/sql code seen earlier, especially the insert statement:

// Add inserting student record using the sqlx package

func addStudent(s Student) (int64, error){

query := "insert into students (fname, lname, date_of_birth, email, gender, address) values (?, ?, ?, ?, ?, ?);"

result := db.MustExec(query, s.Fname,s.Lname, s.DateOfBirth, s.Email, s.Gender, s.Address)

id, err := result.LastInsertId()

if err != nil {

return 0, fmt.Errorf("addStudent Error: %v", err)

}

return id, nil

}

There are fewer lines of code than with database/sql, due to the reduced need for error checking using the db.MustExec() method.

The code below shows how to retrieve multiple records using the sqlx convenience method:

// Function to fetch multiple records

func fetchStudents() ([]Student, error) {

// A slice of Students to hold data from returned rows

var students []Student

err := db.Select(&students,"SELECT * FROM students LIMIT 10")

if err != nil {

return nil, fmt.Errorf("fetchStudents %v", err)

}

return students, nil

}

As you can see, compared to using db.Query() to write syntax compatible with database/sql and looping through the results, sqlx provides much simpler and cleaner code to achieve the same goal using db.Select(). It even provides a better way of handling queries with the IN clause, as explained in the Features section above.

sqlc

sqlc’s previously mentioned ability to generate type-safe code is another benefit for ease of use, as it reduces the amount of Go code you need to write for your database operations, saving you time and effort.

With an active and growing community of over 6,800 stars, sqlc also boasts strong engagement and community support.

The documentation provides a step-by-step walkthrough from installation to the generation of code, and there are also concise video tutorials if you’d like a more interactive approach. So, getting started with sqlc couldn’t be easier, as you’ll see for yourself below.

After installing the binary, write your config file in sqlc.yaml, which should look like the snippet below:

version: 1

packages:

- path: "./"

name: "main"

engine: "mysql"

schema: "schema.sql"

queries: "query.sql"

Now all you need to do is write your plain old SQL in 2 files, just as in the image below, and run the command sqlc generate in your working directory:

The following list explains the elements of the above image:

- The red box shows 3 new generated files:

models.gofor structs,query.sql.go, anddb.gofor other database-related code. - The yellow box highlights the

schema.sqlfile containing your schema definition. - The blue box highlights the

query.sqlfile, which contains all the SQL statements for your application’s database operations along with some metadata for generating the functions. - The green box highlights the run that sqlc generated in the working directory in the terminal.

Now, you only need to provide a database connection using the database/sql package, then call your required methods without modifying the generated code, as you can see in the following snippet:

func main() {

// Create a new database connection

conn, err := sql.Open("mysql", "theuser:thepass@tcp(localhost:3306)/thedb?parseTime=true")

if err != nil {

log.Fatal(err)

}

fmt.Println("Connected!")

db := New(conn)

// Initialize record to be inserted

newSt := addStudentParams{

Fname: "Leon",

Lname: "Ashling",

DateOfBirth: time.Date(1994, time.August, 14, 23, 51, 42, 0, time.UTC),

Email: "lashling5@senate.gov",

Gender: "Male",

Address: "39 Kipling Pass",

}

// Insert the record

sID, err := db.addStudent(context.Background(), newSt)

if err != nil {

log.Fatal(err)

}

fmt.Printf("addStudent id: %v \n", sID)

// Fetch the records

students, err := db.fetchStudents(context.Background())

if err != nil {

log.Println(err)

}

fmt.Printf("fetchStudents count: %v \n", len(students))

}

Provided you are good at writing your SQL statements, this package’s generation of type-safe code makes it a better option, especially if you have to interact with many schemas of varying types.

GORM

GORM has very comprehensive yet simple documentation and guides on getting started. Unfortunately, GORM takes more of a code-based approach to interacting with the database, meaning there’s a steep learning curve at the start.

Using the GORM syntax, you might spend a lot of time initially constructing code similar to your raw SQL queries. However, once you’re familiar with the syntax, you’ll have a clean codebase with rare interaction with raw SQL.

GORM is the second biggest Go database package (after database/sql) on GitHub, with over 30,400 stars, which means there should be no shortage of community support.

The following example demonstrates how GORM would be used to implement the same insert statement, and multiple records query as before:

func main() {

// Open a database connection

db, err := gorm.Open(mysql.Open("theuser:thepass@tcp(127.0.0.1:3306)/thedb?charset=utf8mb4&parseTime=True&loc=Local"))

if err != nil {

log.Fatal(err)

}

fmt.Println("Connected!")

// Initialize record to be inserted

s := Student{

Fname: "Leon",

Lname: "Ashling",

DateOfBirth: time.Date(1994, time.August, 14, 23, 51, 42, 0, time.UTC),

Email: "lashling5@senate.gov",

Address: "39 Kipling Pass",

Gender: "Male",

}

// Add student record and return the ID into the ID field

db.Create(&s)

fmt.Printf("addStudent id: %v \n", s.ID)

// Select multiple records

var students []Student

db.Limit(10).Find(&students)

fmt.Printf("fetchStudents count: %v \n", len(students))

}

As you can see in the above snippet, the code is relatively simple, with very few lines. Once you get past the steep initial learning curve, GORM is actually more efficient for writing queries than the other 3 options.

Performance and Speed

The performance and speed of database operations are crucial to the overall performance of data-centric applications. The most suitable database package is highly dependent on performance, especially if you’re developing a low-latency application.

This section compares the performance of all 4 database packages. To undertake this benchmark, a MySQL/MariaDB database was set up with 15,000 student records. Since most use cases for these packages are queries for fetching records, the benchmark captured the performance of all 4 packages fetching 1, 10, 100, 1000, 10,000, and 15,000 records and scanning them into structs:

================================== BENCHMARKING 1 RECORDS ====================================== goos: linux goarch: amd64 pkg: github.com/rexfordnyrk/go-db-comparison/benchmarks cpu: Intel(R) Core(TM) i7-8550U CPU @ 1.80GHz Benchmark/Database/sql_limit:1_-8 9054 124134 ns/op Benchmark/Sqlx_limit:1_-8 8914 138792 ns/op Benchmark/Sqlc_limit:1_-8 7954 147056 ns/op Benchmark/GORM_limit:1_-8 13388 89251 ns/op ================================================================================================= ================================== BENCHMARKING 10 RECORDS ====================================== Benchmark/Database/sql_limit:10_-8 7576 157780 ns/op Benchmark/Sqlx_limit:10_-8 4384 260402 ns/op Benchmark/Sqlc_limit:10_-8 4183 256384 ns/op Benchmark/GORM_limit:10_-8 9466 136556 ns/op ================================================================================================= ================================== BENCHMARKING 100 RECORDS ====================================== Benchmark/Database/sql_limit:100_-8 2521 427603 ns/op Benchmark/Sqlx_limit:100_-8 2139 497755 ns/op Benchmark/Sqlc_limit:100_-8 2838 456938 ns/op Benchmark/GORM_limit:100_-8 1896 563539 ns/op ================================================================================================= ================================== BENCHMARKING 1000 RECORDS ====================================== Benchmark/Database/sql_limit:1000_-8 516 2201303 ns/op Benchmark/Sqlx_limit:1000_-8 445 2786983 ns/op Benchmark/Sqlc_limit:1000_-8 535 2313674 ns/op Benchmark/GORM_limit:1000_-8 315 4186201 ns/op ================================================================================================= ================================== BENCHMARKING 10000 RECORDS ====================================== Benchmark/Database/sql_limit:10000_-8 51 21690323 ns/op Benchmark/Sqlx_limit:10000_-8 38 28458473 ns/op Benchmark/Sqlc_limit:10000_-8 55 21558300 ns/op Benchmark/GORM_limit:10000_-8 28 40463924 ns/op ================================================================================================= ================================== BENCHMARKING 15000 RECORDS ====================================== Benchmark/Database/sql_limit:15000_-8 36 32048808 ns/op Benchmark/Sqlx_limit:15000_-8 28 41484578 ns/op Benchmark/Sqlc_limit:15000_-8 34 31680017 ns/op Benchmark/GORM_limit:15000_-8 20 59348697 ns/op ================================================================================================= PASS ok github.com/rexfordnyrk/go-db-comparison/benchmarks 77.835s

For the purposes of uniformity and fairness, the benchmark was run on the same hardware. The test also ensured a similar code structure by placing each action in a separate function and measuring their performance individually.

There are many factors that influence the performance on a production server, so we typically use simple benchmarks to eliminate external factors as much as possible. While this benchmark uses a single select statement, we encourage you to modify the source code and try out more complex tests, such as using join queries and nested structs and fetching higher record sets to better simulate your own production environment. You can replicate this benchmark using the benchmarks code directory of this repo on GitHub.

Each result set consists of three columns:

- The name of the benchmark method that was run.

- The number of times the benchmark was run until a reliable time was generated.

- The time (in nanoseconds) it took each time the benchmark was executed.

For the first two tests of 1 and 10 records, GORM outperforms the rest of the libraries. However, it trails significantly as the record count increases. sqlx performs quite consistently in third place in terms of performance, better than GORM, but it usually lags shortly behind sqlc and database/sql when data size increases.

The database/sql and sqlc packages perform very well across all six cases in benchmarks. As the number of records fetched increases (to 10,000 and 15,000 records), sqlc proves to be slightly faster than database/sql.

Conclusion

While database/sql is the default Golang package, whether you should use it or not depends on your needs as a developer. In this article, you saw the advantages of each package.

GORM is the best package for you if you need advanced querying, full support functions from underlying database technologies, and a clean codebase—as long as you’re willing to trade off some performance. If you only need basic queries and are comfortable writing your own SQL, then the database/sql or sqlx packages will do just fine.

Finally, sqlc is best suited for backend developers who work extensively with databases and need to write lots of queries under tight deadlines. You can write your raw SQL queries and generate code without having to worry about types, scanning, or other obstacles to productivity. sqlc also provides great performance gains compared to the rest, especially when dealing with greater volumes of data or record sets.

Note that this article has been updated due to an error in benchmarking. Many thanks to Lukáš Zapletal, who commented on the original article with a fix for the bug. Thank you as well to the JetBrains community for providing spaces like this that are designed for learning and contributing together.

Subscribe to GoLang Blog updates