.NET Tools

Essential productivity kit for .NET and game developers

Critical Thinking in an AI-Powered World

Last year, we launched JetBrains AI Assistant. From JetBrains’ perspective, our mission of improving your drive to develop hasn’t changed over the past two decades. As we head into 2024, Artificial Intelligence (AI) will likely be the most-talked-about topic of the year. Wherever you fall on the spectrum of the AI conversation, one thing is clear: we must all equip ourselves with new critical thinking skills.

In this post, we’ll talk about the technology that powers JetBrains AI Assistant, its strengths and weaknesses, examples of edge cases, and strategies to get better results from the JetBrains AI Assistant.

What are LLMs? Strengths and Weaknesses

Large Language Models (LLMs) are the technology powering many AI services that require natural language processing. These models are typically trained to perform general-purpose language tasks, and many have described them as “autocorrect on steroids”. Under the hood, an LLM is a neural network: a complex web of decisions. Most LLM products have settled on a chat interface, with a feedback loop that lets the user progressively refine a task.

Models typically have three distinguishing factors: the number of tokens they accept, the number of parameters, and the training dataset cutoff date.

The token limit determines how much context a user can supply in a session. What counts as a token depends on the LLM, because a token is a representation of input: it could be a word, part of a word, a character, or another meaningful unit of data. With access to more tokens, an LLM has more context to complete a user’s task, although more isn’t necessarily better.
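To make the idea concrete, here is a toy sketch that counts the same prompt under two naive tokenization schemes. It is not how any real LLM tokenizes (production tokenizers typically use learned subword vocabularies); the prompt string and both schemes are assumptions chosen purely for illustration.

```csharp
using System;
using System.Linq;

// Toy illustration only: real LLM tokenizers are more sophisticated.
string prompt = "Refactor the variable now";

// Scheme 1: one token per whitespace-separated word.
string[] wordTokens = prompt.Split(' ', StringSplitOptions.RemoveEmptyEntries);

// Scheme 2: one token per character (spaces included).
string[] charTokens = prompt.Select(c => c.ToString()).ToArray();

Console.WriteLine($"word tokens: {wordTokens.Length}");
Console.WriteLine($"character tokens: {charTokens.Length}");
```

The same text uses up a very different share of the token budget depending on the scheme, which is why token limits are hard to compare across models.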

Parameters are the second distinguishing feature of LLMs, and the count is not always public. You can think of parameters as decisions along the neural network. The more parameters, the greater the number of decisions an LLM may exercise to produce an answer. Current models have parameters in the billions, with future models predicted to be in the trillions. Again, more isn’t necessarily better, as a smaller model trained for a specific use case may outperform a larger model on both task results and response time.

Finally, and most important to the user experience, is the training cutoff date. Many of these models are trained on public datasets. Cutoff dates become more critical in tasks relating to time-sensitive knowledge, such as current events and cutting-edge programming techniques. Cutoff dates are not a clear indication of quality, as some model providers still keep much of their datasets secret due to the potential use of copyrighted material.

JetBrains developer advocate Jodie Burchell is an expert in data science and has broken down the strengths and weaknesses of LLMs.

| Strengths | Weaknesses |
|---|---|
| Summarization | Maths |
| Text & Code Generation | Randomness |
| Classification | Timeliness |
| | Non-deterministic |

In the following sections, we’ll explore some of these weaknesses, along with strategies to counteract them as you search for solutions.

The Temporal Disconnect

As a technologist, you likely understand the breakneck speed at which all ecosystems are currently moving. Whether you stay current with the latest trends is a personal choice, but if you’re an early adopter, you may have noticed that AI-generated code samples can sometimes feel “dated”.

For example, at the time of writing, ChatGPT’s training dataset extends to September 2021. Because of that cutoff date, the ChatGPT model has no knowledge of any Java version after 16 and is certainly unaware of the latest C# 12 features in .NET.

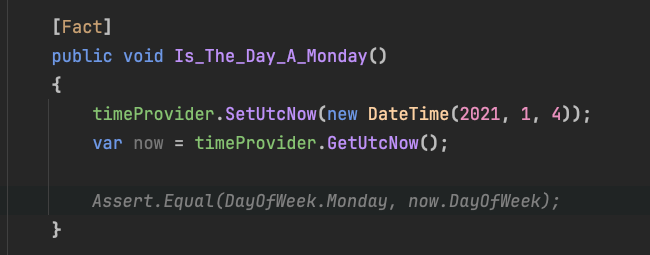

Let’s take a look at a .NET example specifically regarding testing dates.

[Fact]

public void Ladies_An_Gentlemen_The_Weekend()

{

var now = DateTime.UtcNow.DayOfWeek;

Assert.Contains(now,

[DayOfWeek.Friday, DayOfWeek.Saturday, DayOfWeek.Sunday]

);

}

Regardless of your technology stack, you will likely notice an immediate issue: the test passes only when it runs on a Friday, Saturday, or Sunday (in UTC) and fails on every other day. There are multiple ways to solve this issue, but let’s ask the JetBrains AI Assistant first.

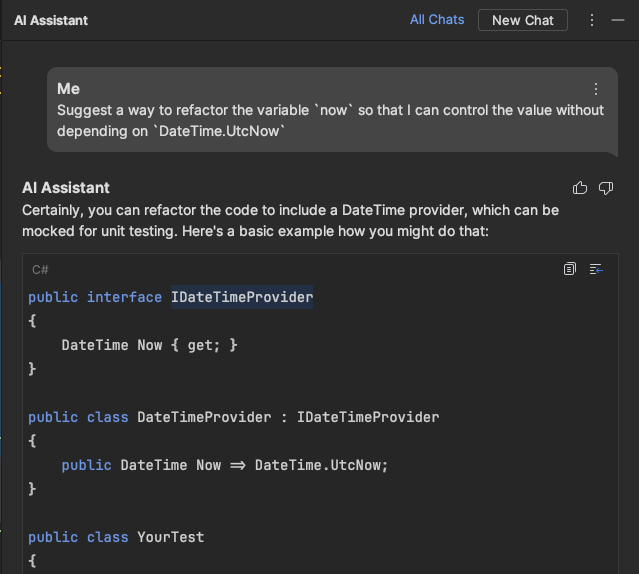

Suggest a way to refactor the variable `now` so that I can control the value without depending on `DateTime.UtcNow`

The response provides a custom IDateProvider implementation. The proposed functionality is a correct solution, but a developer with expertise in the ecosystem might question whether it’s the only solution.

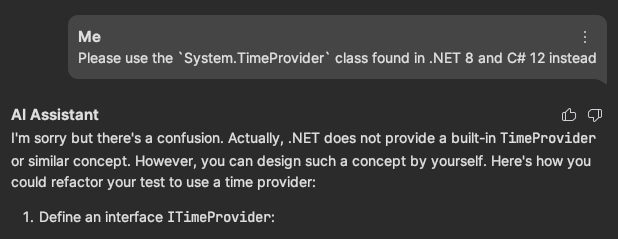

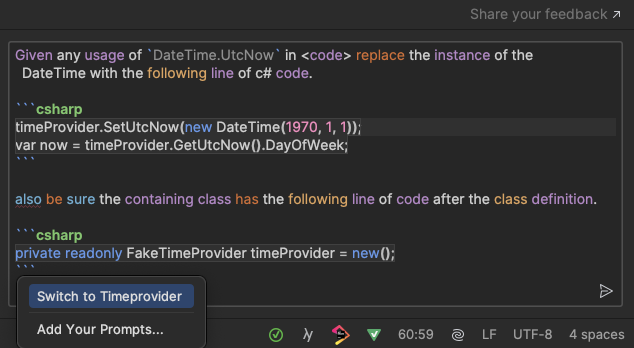

.NET 8 recently added a TimeProvider class, so let’s ask JetBrains AI Assistant to refactor to use the mechanisms built into the SDK.

When the response still doesn’t use TimeProvider, the result can be frustrating: you know the feature exists, but you can’t seem to get the JetBrains AI Assistant to generate the expected code. Let’s write the TimeProvider code ourselves.

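// FakeTimeProvider comes from the Microsoft.Extensions.TimeProvider.Testing NuGet package (namespace Microsoft.Extensions.Time.Testing)

private readonly FakeTimeProvider timeProvider = new();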

[Fact]

public void Ladies_An_Gentlemen_The_Weekend()

{

timeProvider.SetUtcNow(new DateTimeOffset(2021, 1, 8, 0, 0, 0, TimeSpan.Zero)); // Friday, pinned to UTC so the local time zone cannot shift the day

var now = timeProvider.GetUtcNow().DayOfWeek;

Assert.Contains(now,

[DayOfWeek.Friday, DayOfWeek.Saturday, DayOfWeek.Sunday]

);

}
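In application code, the same idea usually means passing a `TimeProvider` into the class that needs the time. The sketch below assumes .NET 8 and C# 12. `WeekendChecker` and `FixedTimeProvider` are hypothetical names introduced for illustration, and the hand-rolled `FixedTimeProvider` stands in for `FakeTimeProvider` only so the example needs no extra package.

```csharp
using System;

// Production code receives the real system clock...
var checker = new WeekendChecker(TimeProvider.System);
Console.WriteLine($"Weekend right now? {checker.IsWeekend()}");

// ...while a test pins the clock to a known instant (2021-01-08 was a Friday).
var friday = new DateTimeOffset(2021, 1, 8, 12, 0, 0, TimeSpan.Zero);
var pinned = new WeekendChecker(new FixedTimeProvider(friday));
Console.WriteLine($"Weekend on {friday:yyyy-MM-dd}? {pinned.IsWeekend()}");

// Hypothetical class under test; it never touches DateTime.UtcNow directly.
public sealed class WeekendChecker(TimeProvider timeProvider)
{
    // Mirrors the days checked by the test in this post.
    public bool IsWeekend() =>
        timeProvider.GetUtcNow().DayOfWeek
            is DayOfWeek.Friday or DayOfWeek.Saturday or DayOfWeek.Sunday;
}

// Minimal stand-in for FakeTimeProvider: always reports the same instant.
public sealed class FixedTimeProvider(DateTimeOffset utcNow) : TimeProvider
{
    public override DateTimeOffset GetUtcNow() => utcNow;
}
```

Because the clock is an ordinary constructor argument, the test result no longer depends on the day the test happens to run.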

Although the chat may not yield the expected result at first, once we begin to write another test, the JetBrains AI Assistant picks up on the usages and patterns in our code and applies them to how we may want to write subsequent tests.

Remember, LLMs are excellent at predicting text, and while newer knowledge of language features may not exist within the model, most newer language features behave similarly to older ones. The patterns in languages can aid JetBrains AI Assistant, and your code can help your sessions be productive by providing additional context.

If you find that the same time-sensitive information is regularly missing from your sessions, you can create a new prompt that describes the behavior you expect. Because LLMs predict text from the examples they are given, a prompt that spells out the newer feature helps steer the response.

Custom prompts can be a great way to overcome the limitations of any model until providers update the training data to include newer information.

Strategies for Better JetBrains AI Assistant Responses

As with most things, the more effort you put into JetBrains AI Assistant sessions, the better your results. Earlier, we mentioned that LLMs aren’t particularly strong with math.

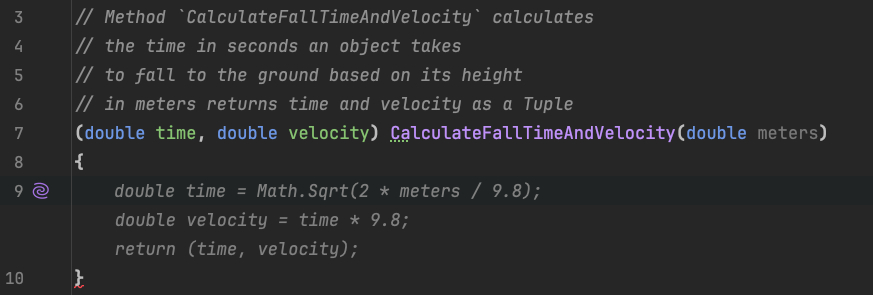

Let’s work with JetBrains AI Assistant to generate a method that calculates the time it takes for an object to fall from a certain height in meters, using the acceleration due to Earth’s gravity. We’ll use the following code and let code completion fill out the rest.

using System;

// Method `CalculateFallTimeAndVelocity` calculates

// the time in seconds an object takes

// to fall to the ground based on its height

// in meters returns time and velocity as a Tuple

(double time, double velocity) CalculateFallTimeAndVelocity(double meters)

{

}

var (time, velocity) = CalculateFallTimeAndVelocity(100);

Console.WriteLine($"time: {time}, velocity: {velocity} m/s");

In the method’s body, JetBrains AI Assistant recommends implementing known formulas.

This solution is good, but you should immediately become skeptical whenever you see numbers in a response.

Ask yourself the following questions:

- Do I understand what the mathematics are doing?

- Are the values correct or precise enough for my use case?

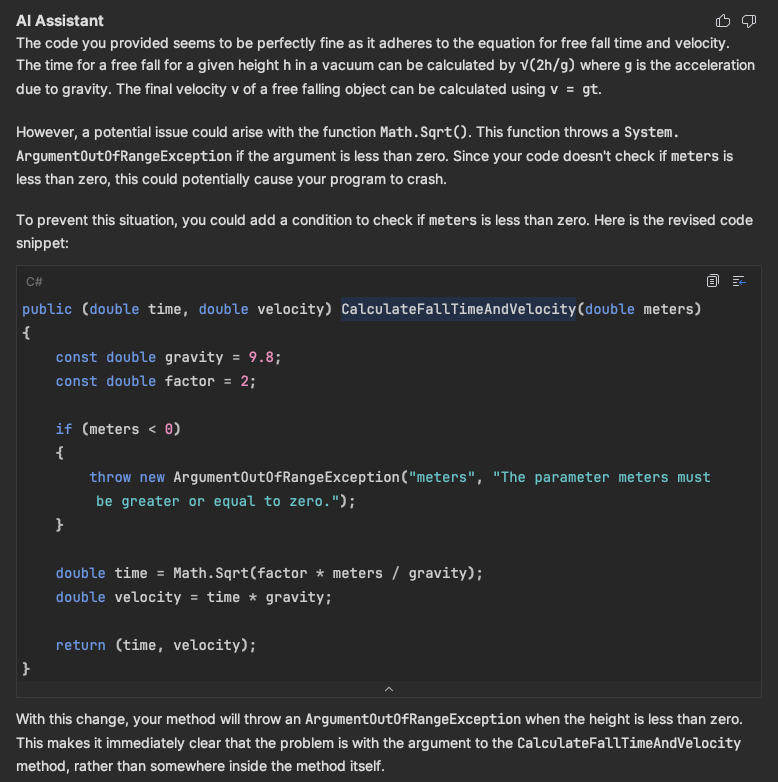

A sensible way to answer these questions is to ask JetBrains AI Assistant to clarify the code completion suggestion. Yes, you can use JetBrains AI Assistant to check its own responses. Start a New AI Chat session with the prompt “move all constants to descriptive variables.” The response contains the following refactored code.

(double time, double velocity) CalculateFallTimeAndVelocity(double meters)

{

const double Gravity = 9.8;

const double Factor = 2;

double time = Math.Sqrt(Factor * meters / Gravity);

double velocity = time * Gravity;

return (time, velocity);

}

Next, you’ll notice that the value of 9.8 is not that precise. Let’s continue our chat session with the prompt: “Set the value of Gravity to Earth’s gravity up to four decimal places of precision”.

(double time, double velocity) CalculateFallTimeAndVelocity(double meters)

{

const double Gravity = 9.8067;

const double Factor = 2.0000;

double time = Math.Sqrt(Factor * meters / Gravity);

double velocity = time * Gravity;

return (time, velocity);

}

And I confirm with other sources that Earth’s standard gravitational acceleration is 9.80665 m/s², which rounds to 9.8067 at four decimal places.
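The formulas themselves can be checked against other sources in the same way. As a sketch, assuming the object starts at rest and air resistance is ignored (assumptions the generated code makes implicitly), constant-acceleration kinematics gives the following, where $h$ is the height in meters and $g$ is the gravitational acceleration:

```latex
\begin{aligned}
h &= \tfrac{1}{2} g t^{2} \;\Longrightarrow\; t = \sqrt{\frac{2h}{g}} \\
v &= g\,t \\[6pt]
t &= \sqrt{\frac{2 \cdot 100}{9.8067}} \approx 4.516\ \text{s}
  \qquad (h = 100\ \text{m}) \\
v &= 9.8067 \cdot 4.516 \approx 44.29\ \text{m/s}
\end{aligned}
```

The constant `Factor` in the code is the 2 that appears when rearranging $h = \tfrac{1}{2} g t^{2}$, so the call with 100 meters should report roughly 4.5 seconds and 44 m/s.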

I’m not a physicist and would like additional comments on each line describing the steps occurring throughout the implementation. I use the prompt: “Comment each line with valuable information that explains what’s happening”. The detailed description makes the code easier for a layperson like me to follow.

// Function to calculate the fall time and velocity of an object falling from a certain height

(double time, double velocity) CalculateFallTimeAndVelocity(double meters)

{

// Earth's gravity constant in meters per second squared, up to four decimal places

const double Gravity = 9.8067;

// Factor used in the time calculation formula

const double Factor = 2.0000;

// Calculate the time of fall using the formula sqrt(2 * meters / Gravity)

double time = Math.Sqrt(Factor * meters / Gravity);

// Calculate the velocity at the end of the fall using the formula Gravity * time

double velocity = time * Gravity;

// Return the calculated time and velocity

return (time, velocity);

}

I may omit some or all of the comments from my final codebase, but I can make a better decision when seeing all of them in place.

As a final step, I may ask JetBrains AI Assistant to review the code block for potential issues using the Find Potential Issues prompt.

Oh, right, there’s a potential issue with negative heights. Let’s be sure to handle that with an appropriate conditional block. We can now end our chat session and declare success.
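One possible shape for that conditional block is a guard clause at the top of the method. This is a minimal sketch, not the assistant's actual suggestion: the choice of `ArgumentOutOfRangeException`, the message text, and the extra NaN check are assumptions made for illustration.

```csharp
using System;

// Same formulas as before, with a guard clause in front of them.
(double time, double velocity) CalculateFallTimeAndVelocity(double meters)
{
    // Math.Sqrt returns NaN for negative input instead of throwing,
    // so a negative height would otherwise silently yield NaN results.
    if (double.IsNaN(meters) || meters < 0)
    {
        throw new ArgumentOutOfRangeException(
            nameof(meters), meters, "Height must be zero or greater.");
    }

    const double Gravity = 9.8067; // m/s^2, the value used earlier in this post
    const double Factor = 2;

    double time = Math.Sqrt(Factor * meters / Gravity);
    double velocity = time * Gravity;
    return (time, velocity);
}

var (fallTime, impactVelocity) = CalculateFallTimeAndVelocity(100);
Console.WriteLine($"time: {fallTime:F2} s, velocity: {impactVelocity:F2} m/s");

try
{
    CalculateFallTimeAndVelocity(-5);
}
catch (ArgumentOutOfRangeException ex)
{
    Console.WriteLine($"Rejected invalid argument: {ex.ParamName}");
}
```

Throwing early keeps invalid input from leaking NaN values into later calculations. Returning a default value or a nullable result would be equally valid designs, depending on the codebase.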

I hope you take a few things from this example:

- Writing clear and concise instructions on the first attempt is challenging. You’ll likely have to iterate in a session to find an acceptable solution.

- You should always be skeptical about numbers. Values could need more precision or be wrong.

- Refactor any or all constants into variables with meaningful names for a clearer understanding of the code.

- Make sure mathematical equations are accurate. You can check them against other sources and ask the JetBrains AI Assistant to look for problems.

- Asking the AI Assistant to comment on lines within complex methods can help you better understand the steps in a method.

Conclusion

AI Assistant can help you solve a fascinating new set of problems, but it does not claim to be infallible. Since AI Assistant uses models trained on human data, it can sometimes be wrong. That’s why you should think critically about responses and always take steps to understand and verify the results of any LLM-based product.

JetBrains IDE users benefit from products that combine new and evolving Artificial Intelligence with the tried-and-tested “Real Intelligence” of industry experts and leading tool builders. We don’t see these two modes in conflict with each other. Instead, they are a combination designed to improve the most essential part of software development: you, the developer.

We hope you enjoyed this blog post. If you have any questions or feedback, please feel free to leave a comment.

image credit: Milad Fakurian
