Looking at Python through the eyes of a neural net

The JetBrains full line code completion plugin for Python is now available as a public beta. We would like to talk about some of the technologies and algorithms used to create the plugin and share statistics about Python programming that we’ve collected in the process.

What is “full line code completion”?

You are probably already familiar with code completion, the kind that suggests the next word the user is typing. If you are not, we have covered it in a series of articles (one, two, three, four).

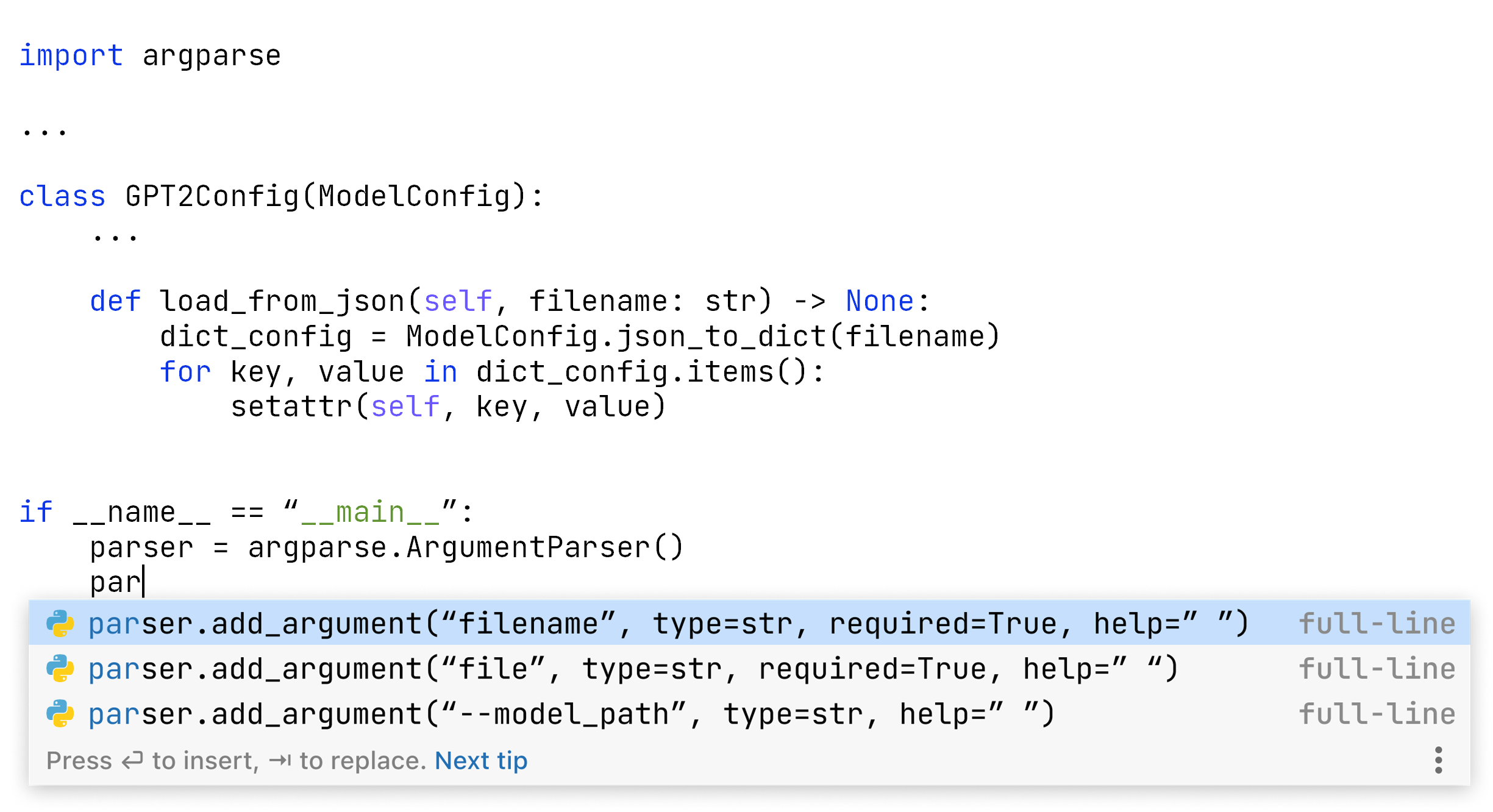

Full line code completion extends the service by suggesting larger fragments of code. Among other things, it can fill in method call parameters for you or write the text of an error message. UX-wise, it is similar to normal code completion and uses the same pop-up to present the results.

However, the technology behind this extended completion form is fundamentally different.

Standard code completion uses static analysis to determine the classes, methods, field variables, and keywords visible from the current location and then applies machine learning to choose the top recommendations. Selecting the best item from a predetermined set is an example of discriminative modeling. Full line completion, on the other hand, produces a new chunk of code consisting of several “words”. Writing code this way resembles a creative process and is more computationally intensive. This is an example of generative modeling. Neural nets are a typical generative modeling tool, and we use them too.

We would like to take you on a guided tour under the hood of full line code completion and show you several curious aspects of how it works. Today’s aspect is: how does a neural net see your program?

Algorithms working with programming languages use a variety of tricks from natural language processing. Instead of operating on the words of the original language, the neural net works with a sequence of tokens from a special vocabulary. The algorithm builds the vocabulary using the byte pair encoding process. We’ll first look at an example in simple English and then take a look at how this works in Python.

Byte pair encoding in natural languages

The algorithm builds the vocabulary at the beginning of the training phase, gathering statistics from the texts used for training. The set of tokens doesn’t change after that and stays fixed for all processing once the model is in use.

Let’s imagine that the only English that is available for training is the following saying:

If you always do what you always did, you will always get what you always got.

This ancient wisdom may or may not still hold true. In either case, it contains multiple repeating words, which will help us understand the algorithm. What kind of tokens will we be able to obtain from it for further usage? We initialize the token list with all the single letters (symbols) from our training sentence:

[i, f, y, o, u, a, l, w, s, d, h, t, g, e]

Incidentally, UTF-8 encodes each of them as a single byte, so symbols and bytes are the same here.

We don’t include the space. Instead, we use it as a natural token break. This detail will become important later.

After the initialization, we iteratively expand the vocabulary. We find the most common concatenation of two existing entries and call it a new token. In our example, “you” and “always” are repeated four times, but we can’t add them yet because neither of them is a concatenation of two entries. We need to go step by step, and the first candidate is “yo”. Once the new token is defined, it becomes unbreakable, and we can’t use its parts separately. Therefore, “ou” will never become a token as it would require splitting “yo”.

We repeat this step, finding other frequent token concatenations and adding them to the vocabulary. The algorithm will get to “you” and “always” before processing other symbol pairs like “wh” and “at” occurring only twice. The vocabulary of size 32 (a convenient power of two) may look like this:

[i, f, y, o, u, a, l, w, s, d, h, t, g, e, yo, ys, al, ays, ways, you, always, at, wh, what, if, il, id, ot, wil, do, did, ge]

Every token we add is a concatenation of two other tokens, which is why it is called a pair encoding.
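The merge loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the plugin's implementation. It assumes the training saying reads “If you always do what you always did, you will always get what you always got” (a wording consistent with the letter list and the word counts given here), and the names `SAYING` and `train_bpe` are made up for the example. Ties between equally frequent pairs are broken by first appearance, so the order and some of the entries differ from the vocabulary listed above.

```python
import re
from collections import Counter

# Assumed training text; the exact wording is an assumption of this sketch.
SAYING = "If you always do what you always did, you will always get what you always got."

def train_bpe(text, vocab_size):
    """Build a vocabulary by repeatedly merging the most frequent adjacent pair."""
    # Spaces and punctuation act as hard breaks: every word is a separate unit.
    words = Counter(tuple(w) for w in re.findall(r"[a-z]+", text.lower()))
    # Start with the single symbols, in order of first appearance.
    vocab = []
    for word in words:
        for symbol in word:
            if symbol not in vocab:
                vocab.append(symbol)
    while len(vocab) < vocab_size:
        # Count adjacent pairs, weighted by how often each word occurs.
        pairs = Counter()
        for word, freq in words.items():
            for left, right in zip(word, word[1:]):
                pairs[(left, right)] += freq
        if not pairs:
            break  # every word is already a single token
        best = max(pairs, key=pairs.get)  # ties: the first pair seen wins
        vocab.append(best[0] + best[1])
        # Rewrite every word so the merged pair becomes one unbreakable token.
        merged_words = Counter()
        for word, freq in words.items():
            out, i = [], 0
            while i < len(word):
                if i + 1 < len(word) and (word[i], word[i + 1]) == best:
                    out.append(word[i] + word[i + 1])
                    i += 2
                else:
                    out.append(word[i])
                    i += 1
            merged_words[tuple(out)] += freq
        words = merged_words
    return vocab

vocab = train_bpe(SAYING, 32)
print(vocab)
```

With this tie-breaking rule, “yo” is still the first merge, and “ou” never appears because every “o” followed by “u” has already been absorbed into “yo”.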

Now is a good time to clarify any potential confusion between the different understandings of what a token is. We will call a lexical element of the language a “word”. We will refer to an entry of the vocabulary obtained through byte pair encoding as a “token”. There may be tokens that are not words (for example, ays). There may also be words that are not tokens (for instance, will and get, because we stopped the vocabulary creation before including them).
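To make the word/token distinction concrete, here is a small sketch that splits words into tokens from the 32-entry vocabulary listed above. It uses greedy longest-match segmentation, which is a simplification chosen for brevity: a real byte pair encoder replays the learned merges in order. The names `VOCAB` and `segment` are hypothetical.

```python
# The 32-entry example vocabulary from the text.
VOCAB = set(
    "i f y o u a l w s d h t g e "
    "yo ys al ays ways you always at wh what if il id ot wil do did ge".split()
)

def segment(word, vocab):
    """Split a word into vocabulary tokens, always taking the longest match."""
    tokens, i = [], 0
    while i < len(word):
        # Try the longest remaining substring first, then shorter ones.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                tokens.append(word[i:j])
                i = j
                break
        else:
            raise ValueError(f"no token covers {word[i]!r}")
    return tokens

for word in ["always", "will", "get"]:
    print(word, segment(word, VOCAB))
```

A word that made it into the vocabulary, such as always, costs one token, while will and get each cost two.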

The neural net takes its input and generates its output in terms of tokens, not words. The required computational resources depend on how large the vocabulary is. Byte pair encoding allows us to cap the vocabulary size at any desired value instead of using every word in the language.

The number of tokens in the input is also essential for quality and performance. The algorithm can consider only a fragment (called “context”) of the text for which it needs to generate the continuation. If we use longer tokens, we can take larger chunks of text as input. Fortunately, working with programming languages provides additional options to achieve that.

From natural to programming languages

The authors of natural language processing systems commonly use word boundaries as a hard border between tokens. The sequence “John Smith” is more frequent in English than “Trantor,” especially if you consider non-fiction. However, “Trantor” has a theoretical chance of becoming a token in a sufficiently large vocabulary because it is a single word. Being two words, “John Smith” has no such option. Tokens of a natural language usually don’t cross word borders.

When it comes to programming languages, we obtain the natural separation of tokens from the lexer: a word ends where you can insert a space. Variables, constants, and language keywords are kept apart.

We thought that for programming languages, we could do better. Look at the following typical Python phrase:

for i in range(

It includes many different lexical elements, including two language keywords, a variable, and a function. Still, the (very unskilled) Python programmer in me thinks that for i in range( should be a single entry in the vocabulary. The neural net should spend one inference iteration instead of four or five to predict it, saving your laptop’s computational capacity for what’s inside the parentheses.

The idea is to limit the tokens by line breaks instead of lexical elements. Since our target is full line code completion, using complete lines as maximal tokens is natural.
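To see how many lexical elements hide in that phrase, we can ask Python's own lexer. The sketch below uses the standard `tokenize` module and is only an illustration of the difference between lexer-bounded and line-bounded units, not the plugin's preprocessing. The helper name `lexemes` is made up, and the line is completed with `10): pass` because the lexer rejects an unclosed parenthesis.

```python
import io
import tokenize

def lexemes(source):
    """Return the text of every name, operator, and number the Python lexer finds."""
    keep = {tokenize.NAME, tokenize.OP, tokenize.NUMBER}
    readline = io.StringIO(source).readline
    return [tok.string for tok in tokenize.generate_tokens(readline) if tok.type in keep]

line = "for i in range(10): pass\n"
parts = lexemes(line)

# With lexer boundaries, the phrase is spread over five separate units.
print(parts[:5])

# With line boundaries, the whole line is one unit, so byte pair encoding
# is free to merge across keywords, names, and brackets.
print(line.splitlines())
```

With lexer boundaries, no token could ever span more than one of those five elements. With line boundaries, the merge process decides on its own how far a token should stretch.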

Our vocabulary size limit is 16,384, which is again conveniently a power of 2. We initialized the process with the Unicode characters covering 99.99% of the text and started processing Python repositories with permissive licenses.
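One plausible way to pick an initial alphabet by coverage is to take characters in order of decreasing frequency until the chosen share of the text is covered. The text does not say how the selection was done, so treat this as an assumption. The function name `base_alphabet` is hypothetical.

```python
from collections import Counter

def base_alphabet(text, coverage=0.9999):
    """Return the most frequent characters that together cover `coverage` of the text."""
    counts = Counter(text)
    total = sum(counts.values())
    alphabet, covered = [], 0
    for char, count in counts.most_common():
        if covered / total >= coverage:
            break  # the characters collected so far already cover enough text
        alphabet.append(char)
        covered += count
    return alphabet

# Toy corpus: 'a' is 90% of the text, 'b' is 9%, 'c' is 1%.
toy_text = "a" * 90 + "b" * 9 + "c"
print(base_alphabet(toy_text, coverage=0.95))
```

Rare characters that fall outside the chosen coverage never enter the vocabulary, which keeps the initial list short.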

In the following sections, you will learn about the 16,384 most popular Python constructions.

Glitches in the vocabulary

We found a few systemic issues by visually inspecting the vocabulary. According to the statistics, some entries should be there, but including them has some drawbacks.

The first surprise: Chinese

The 600 or so tokens that appeared after the bytes were mostly the Unicode symbols for popular non-Latin-script languages, like Chinese characters. The Chinese itself was a surprise; otherwise, the meaning of the characters looks reasonable.

For example, the top occurrence is 的. This symbol is most commonly used as the analog of the English “‘s” and expresses the possessive case. Statistically, this is the most frequent Chinese character. Some were very popular in our dataset but less frequent in the language itself. For example, 数 and 码 relate to numbers, while 网 means “network.” Given the programming context, nothing is out of the ordinary here. We just need to figure out why these symbols appeared in our vocabulary.

The non-Latin characters, including Chinese, Russian, Japanese, Korean, and sometimes Arabic, come from Python docstrings. It turns out (surprise!), people often write documentation in their native languages.

Including non-Latin characters in the vocabulary probably has more drawbacks than benefits, as they occupy space better used by more popular constructs of the programming language. Having these characters does let us better support the Unicode variable names allowed in Python. However, people seldom use such names, and when they do, it is primarily for code obfuscation.
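A quick way to spot such entries during inspection is to group the single-character tokens by script. The sketch below uses the first word of each character's Unicode name as a rough script label, which is a heuristic rather than a proper script lookup. The names `script_of` and `scripts_in_vocab` and the toy vocabulary are made up for the example.

```python
import unicodedata
from collections import Counter

def script_of(char):
    """Rough script label: 'ASCII', or the first word of the Unicode character name."""
    if char.isascii():
        return "ASCII"
    return unicodedata.name(char, "UNKNOWN").split()[0]

def scripts_in_vocab(vocab):
    """Count the single-character vocabulary entries per script label."""
    return Counter(script_of(token) for token in vocab if len(token) == 1)

# Toy vocabulary, not the real one.
toy_vocab = ["a", "的", "数", "я", "se", "self."]
print(scripts_in_vocab(toy_vocab))
```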

The second surprise: indentation

Another unpleasant thing appears if we grep the vocabulary for a keyword popular at the beginning of a line, such as return:

return return return return return

(and many other occurrences)

The valuable return statement fragments also appear with all varieties of leading indentations, so we get multiple copies of the following tokens in the vocabulary:

return self.
return True
return False
return 0
return None

Keeping these copies may seem impractical. When we invoke completion, the caret is rarely at the beginning of a line. The IDE tracks indentation, and the user will likely already be at the correct location when they start typing return. Therefore, the return statements with leading spaces have a negligible chance of being used.

On the other hand, keeping them saves context length: every indented return is just one token instead of several.
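The grep-style inspection described in this section can be automated by grouping vocabulary entries that differ only in their leading whitespace. This is a minimal sketch over a toy vocabulary. The function name `indentation_variants` is hypothetical.

```python
from collections import defaultdict

def indentation_variants(vocab):
    """Group tokens that are identical except for leading spaces or tabs."""
    groups = defaultdict(list)
    for token in vocab:
        core = token.lstrip(" \t")
        if core:  # skip tokens that consist of whitespace only
            groups[core].append(token)
    # Keep only the cores that occur with more than one indentation.
    return {core: variants for core, variants in groups.items() if len(variants) > 1}

# Toy vocabulary, not the real one.
toy_vocab = ["return", "    return", "        return", "self.", "    ", "    return None"]
print(indentation_variants(toy_vocab))
```

The size of each group shows how many vocabulary slots a single construct occupies, which helps when weighing those slots against the context length saved.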

Imports

The other issue that had us pondering what to do was the import statements involving popular frameworks, such as TensorFlow, PyTorch, and Django, for example:

from tensorflow.python.framework import ops
from django.conf import settings

Such imports usually appear once per file, typically close to its beginning. Also, regular code completion and auto-import mechanisms handle them well enough. On the other hand, again, keeping them as tokens allows the model to take larger chunks of code into account when generating completion suggestions. It’s an open question whether we need these long imports as tokens.

Look into the data

Perhaps the key takeaway from this section is the importance of visual inspection of the data. The intermediate results of statistical data processing and ML-powered algorithms may contain systemic issues not covered by the tests but immediately apparent to a human expert.

Statistics + heuristics are often much better than just statistics.

Popular symbol pairs

Scrolling down, we finally get to the two-symbol combinations. It’s a lot of fun to investigate why they get to the top of the list. Some come from the most common language keywords, while others are products of naming conventions and programming habits.

Can you guess the most frequent two-symbol combination in Python (after double whitespace, of course)? It’s comma+whitespace, like this: , . Makes sense! Let’s see what’s next.

se comes primarily from self. In fact, self. is the most frequent five-symbol combination and is a token very close to the top. It is above most two-symbol and any three-symbol sequences except those it contains.

in Yay! for i in range(

re comes mostly from return.

on is an interesting case. Many of our variable, field, and even class names end with “tion,” “cion,” or “sion.” “None” and “json” are two other significant contributors.

te looks mysterious at first, but further study of the vocabulary reveals the abundance of the word “test” in various combinations. Words like “date,” “text,” “state,” “write,” and the ubiquitous “items” also contribute to this token’s frequency.

= followed by a space must be a popular one indeed. If we change the order and put the space first, the pair is not as frequent. The difference can’t be less than the number of != operators.

or mostly comes from popular language keywords, such as or, for, and import.
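Counting two-symbol combinations yourself takes only a few lines. The sketch below counts adjacent character pairs within each line, since tokens here never cross line breaks. The function name `symbol_pairs` and the sample code are made up, and the result on a real corpus will of course differ.

```python
from collections import Counter

def symbol_pairs(source):
    """Count adjacent two-character combinations, never crossing a line break."""
    pairs = Counter()
    for line in source.splitlines():
        pairs.update(a + b for a, b in zip(line, line[1:]))
    return pairs

sample = (
    "def area(self, width, height):\n"
    "    return self.width * self.height\n"
)
# Show the five most frequent pairs with their whitespace made visible.
for pair, count in symbol_pairs(sample).most_common(5):
    print(repr(pair), count)
```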

Complex symbol combinations

Cross-border tokens

Two pretty popular tokens, s[ and s.append(, perfectly describe working with lists in Python. We usually name the lists by nouns in plural form: days, goods, items, lines. These tokens demonstrate the power and beauty of statistics: they cross the borders of Python lexical elements to express our patterns of speaking English in the code.

Range

Let’s return to the range statement with which we introduced the full line tokens. Did it get into the vocabulary? Indeed, we can see it:

for i in range(

Moreover, there is an even longer statement that is popular:

for i in range(len(

By the way, i is the only counter name popular enough. Others like j and k don’t make it to the top.

Return statements

What do programmers use as the results of their functions? There is no surprise in the frequencies of:

return False
return True
return None

They are very close to each other, but all are behind return self. One more typical token is return 0. People don’t use 1, 2, or any other number often enough to be visible in statistics. Other curious things:

return []
return super(
return data
return value
return not
return len(self.

The last token is obtained by combining return and len(self., as return len( is not in the vocabulary.

Classes

Are any combinations with the word class popular enough to make the top 16,384? The prominent leader here is class Test, which ranks pretty high. Two other visible items of the kind are way behind:

class Meta:
class Base

Other meaningful tokens that include class don’t deal with the class names:

classifier
classification
issubclass(
@classmethod
Base class for

The two last ones come from the documentation.

Putting it all together

The neural net uses the vocabulary to represent the input program and construct the suggestions. The code generation algorithms are a topic for future articles, while the program representation is available right now.

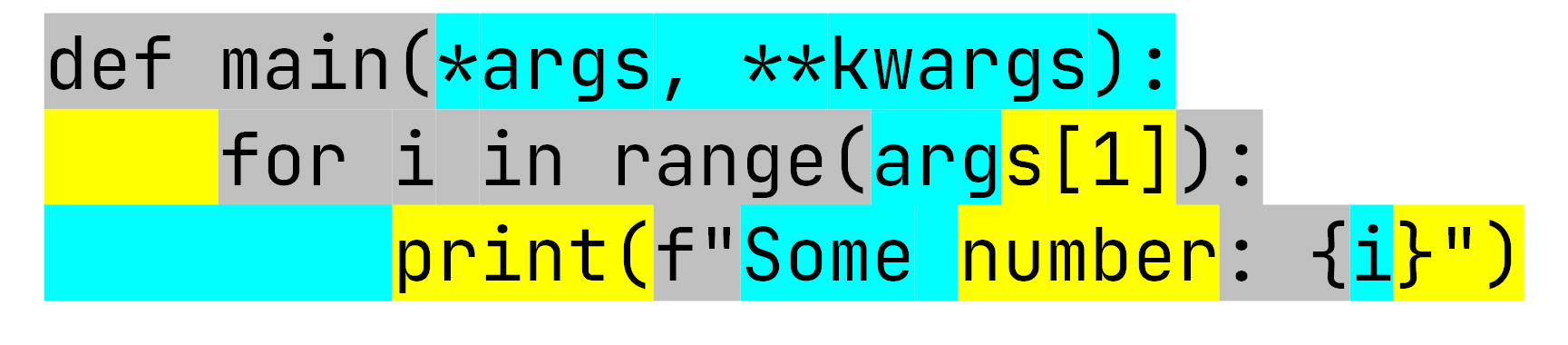

If we take a simple fragment of Python code and run the tokenizer, we can see it the way the neural net does:

The tokenizer transforms these 3 lines into 15 tokens. Models based on GPT-2 can take up to 1,024 items; ours uses a limit of 384. The median length of a single line is 10 tokens in our training set, which is a bit longer than in the example above. We can estimate that the model uses slightly under 40 lines of context to generate the predictions.

This example also demonstrates how different a neural net’s “thinking” is from a human’s. We understand that args in the first and the second lines represent the same thing. However, the algorithm sees them differently and even splits the second one. This is by design!
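The context estimate above is simple arithmetic, spelled out here with the two numbers from the text. The constant and function names are made up for the example.

```python
CONTEXT_TOKENS = 384          # context limit of the model, from the text
MEDIAN_TOKENS_PER_LINE = 10   # median line length in the training set, from the text

def lines_in_context(context_tokens, tokens_per_line):
    """Estimate how many whole lines of code fit into the context window."""
    return context_tokens // tokens_per_line

print(lines_in_context(CONTEXT_TOKENS, MEDIAN_TOKENS_PER_LINE))
```

Since 384 / 10 = 38.4, the window holds 38 whole lines of median length, which is the “slightly under 40” mentioned above.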

Try the full line code completion plugin for Python

Browsing the token vocabulary is a lot of fun, but developing it was just a step towards a higher-level product goal. We plan to tell you more tech stories about how the neural network generates the code and how we tune its performance to fit users’ laptops’ capabilities.

In the meantime, we encourage you to try out the result of our efforts, the full line code completion plugin for Python:

https://plugins.jetbrains.com/plugin/14823-full-line-code-completion
