Using PyCharm to Read Data From a MySQL Database Into pandas

Sooner or later in your data science journey, you’ll hit a point where you need to get data from a database. However, making the leap from reading a locally stored CSV file into pandas to connecting to and querying databases can be a daunting task. In the first of a series of blog posts, we’ll explore how to read data stored in a MySQL database into pandas, and look at some nice PyCharm features that make this task easier.

Viewing the database contents

In this tutorial, we’re going to read some data about airline delays and cancellations from a MySQL database into a pandas DataFrame. This data is a version of the “Airline Delays from 2003-2016” dataset by Priank Ravichandar licensed under CC0 1.0.

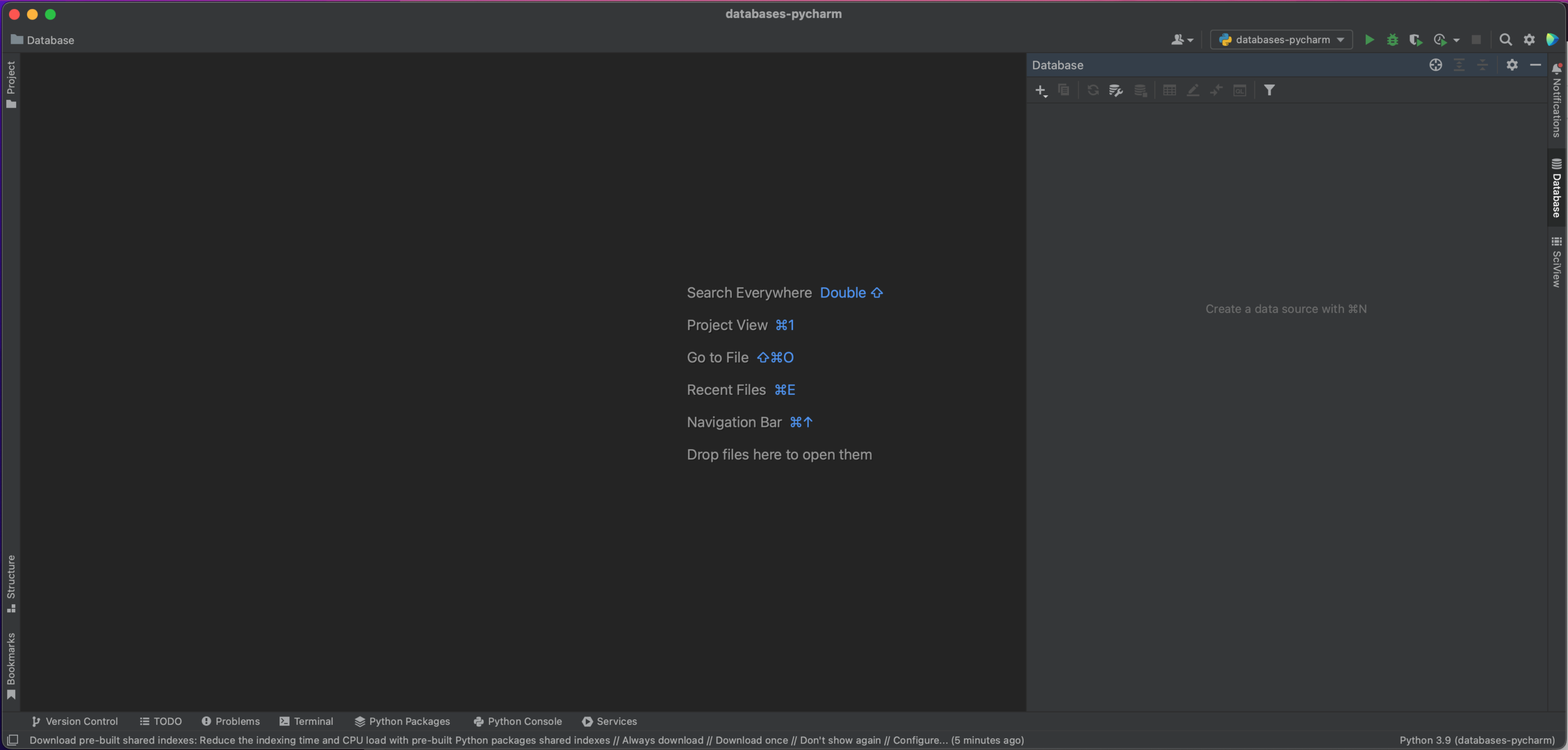

One of the first things that can be frustrating about working with databases is not having an overview of the available data, as all of the tables are stored on a remote server. Therefore, the first PyCharm feature we’re going to use is the Database tool window, which allows you to connect to and fully introspect a database before doing any queries.

To connect to our MySQL database, we’re first going to navigate over to the right-hand side of PyCharm and click the Database tool window.

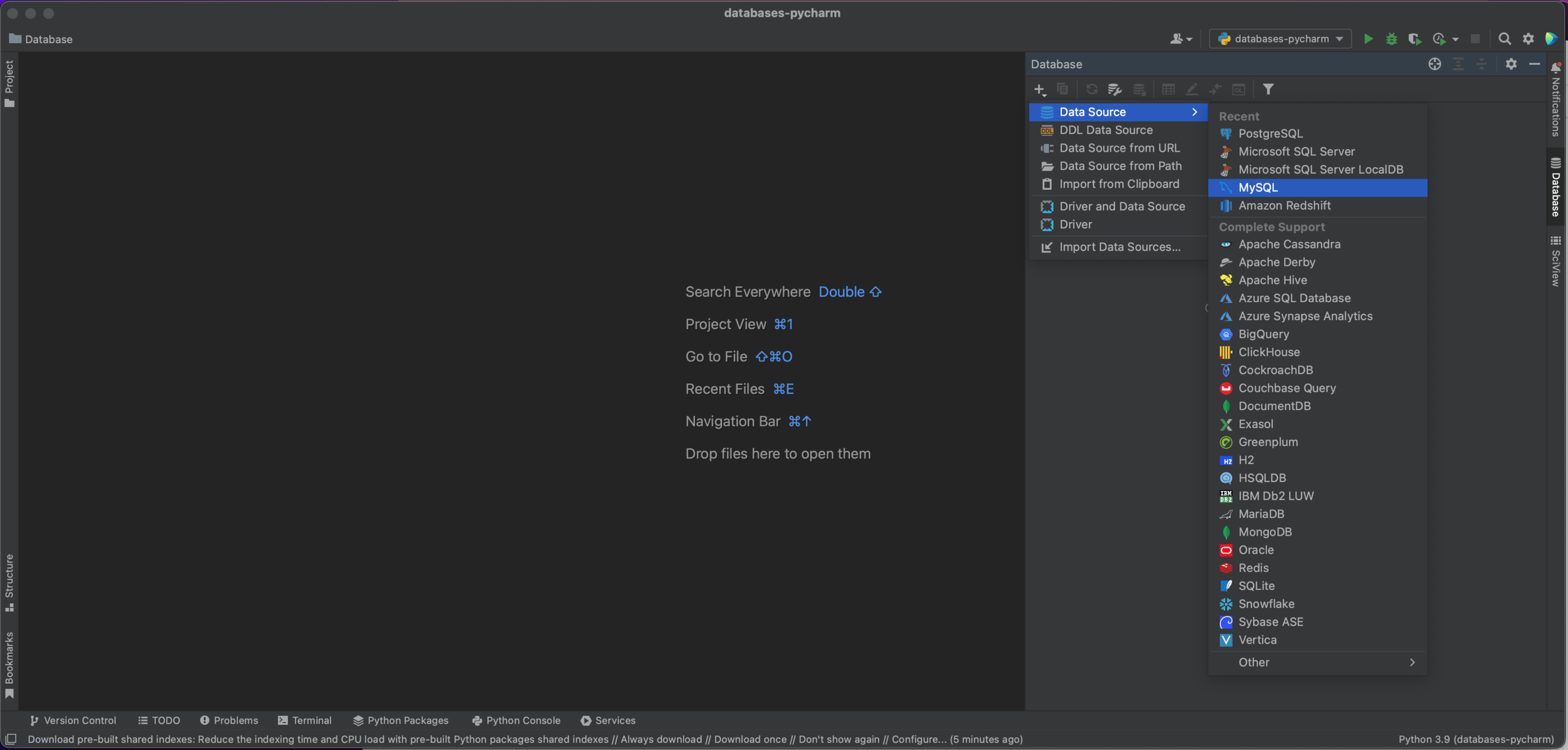

On the top left of this window, you’ll see a plus button. Clicking it opens the following dropdown menu, from which we’ll select Data Source | MySQL.

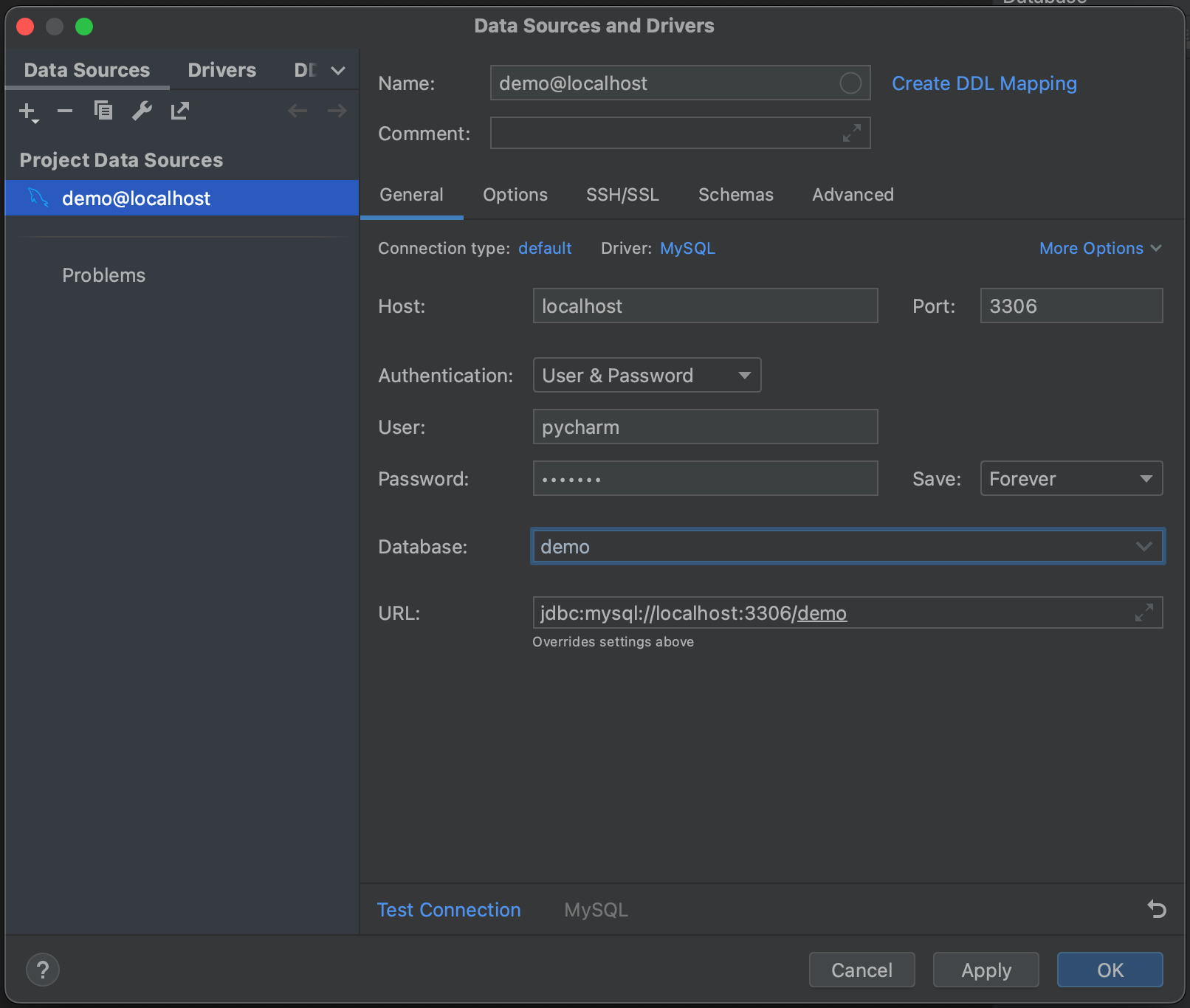

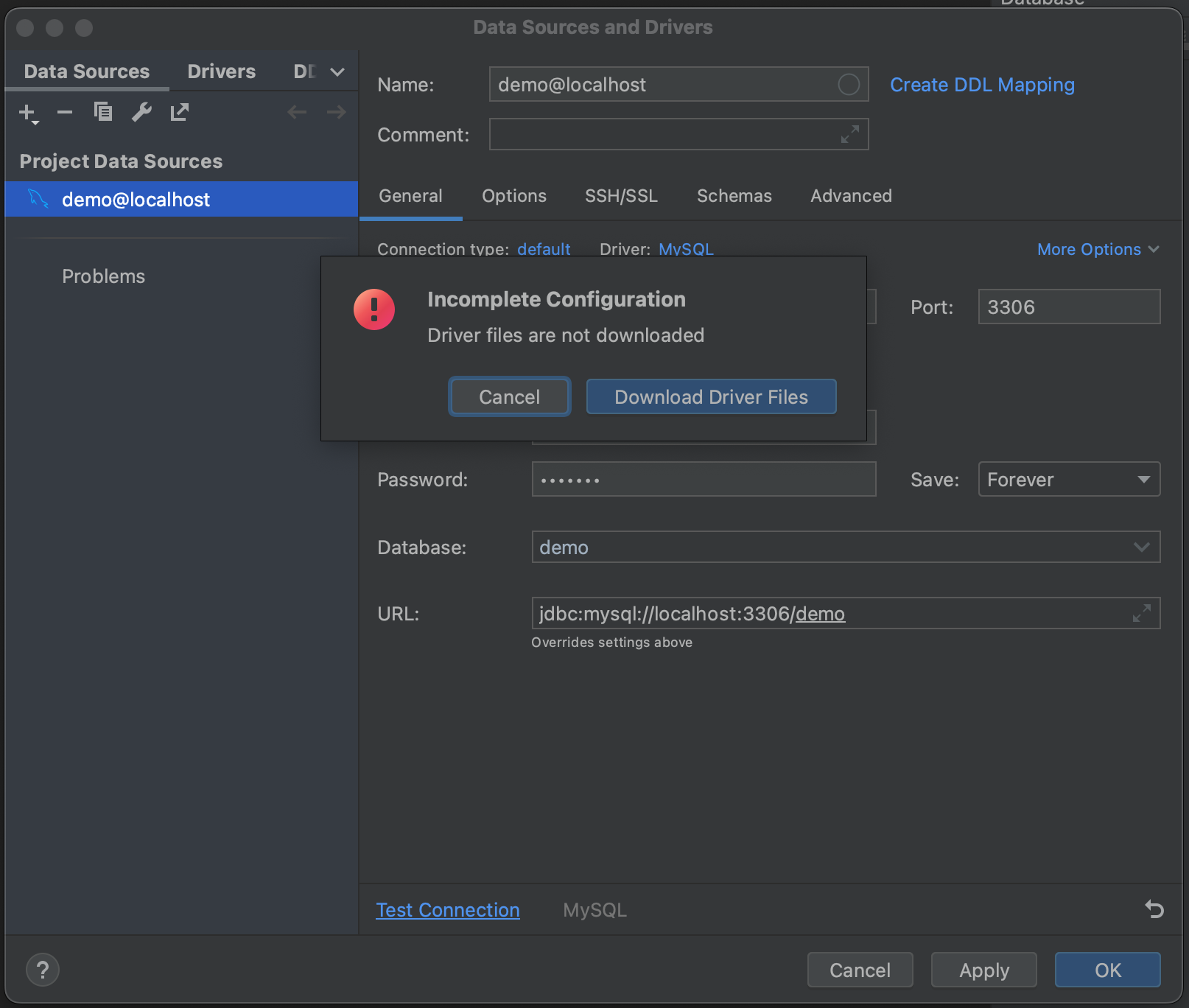

We now have a popup window which will allow us to connect to our MySQL database. In this case, we’re using a locally hosted database, so we leave Host as “localhost” and Port as the default MySQL port of “3306”. We’ll use the “User & Password” Authentication option, and enter “pycharm” for both the User and Password. Finally, we enter our Database name of “demo”. Of course, in order to connect to your own MySQL database you’ll need the specific host, database name, and your username and password. See the documentation for the full set of options.

Next, click Test Connection. PyCharm lets us know that we don’t have the driver files installed. Go ahead and click Download Driver Files. One of the very nice features of the Database tool window is that it automatically finds and installs the correct drivers for us.

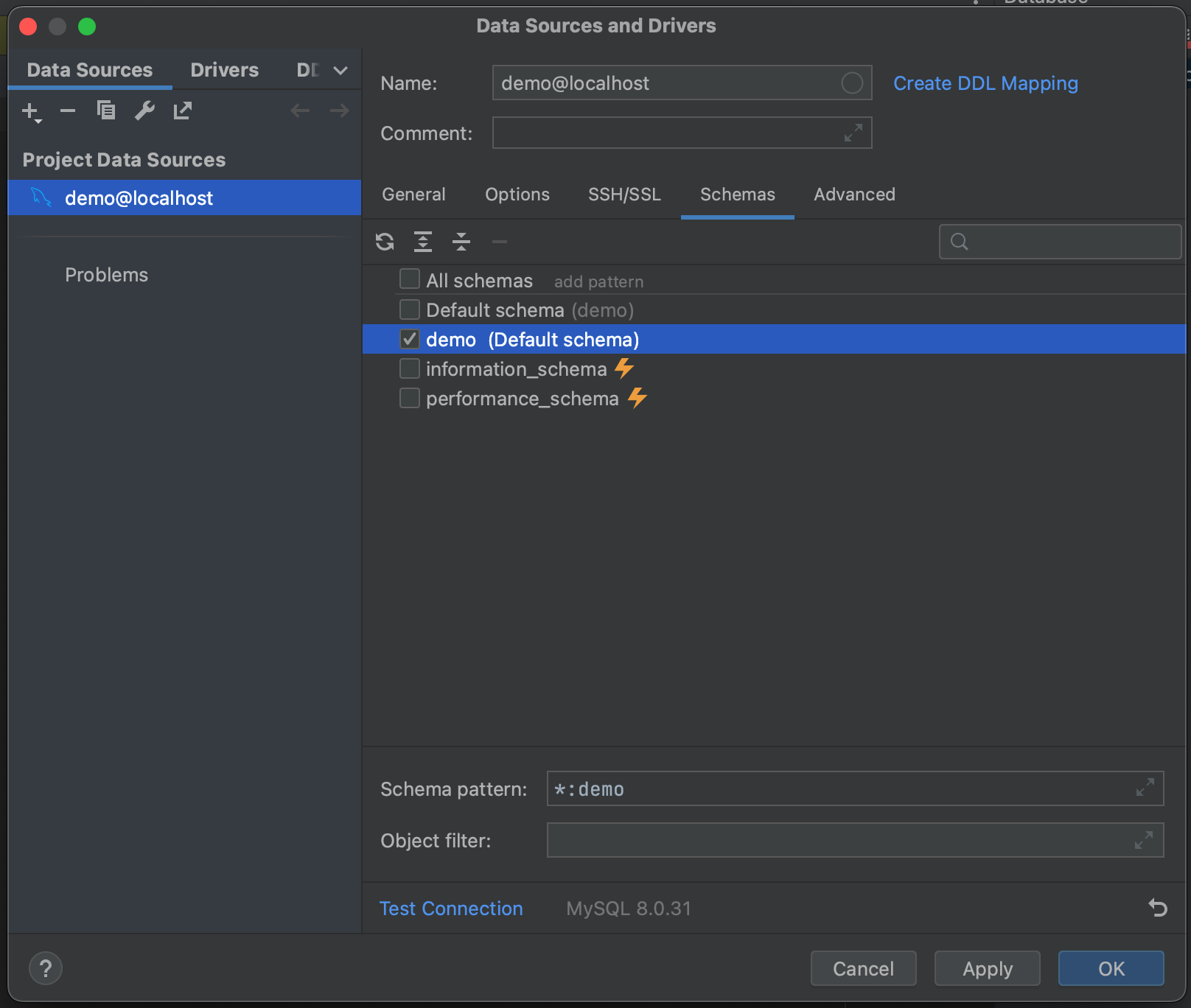

Success! We’ve connected to our database. We can now navigate to the Schemas tab and select which schemas we want to introspect. In our example database we only have one (“demo”), but in cases where you have very large databases, you can save yourself time by only introspecting relevant ones.

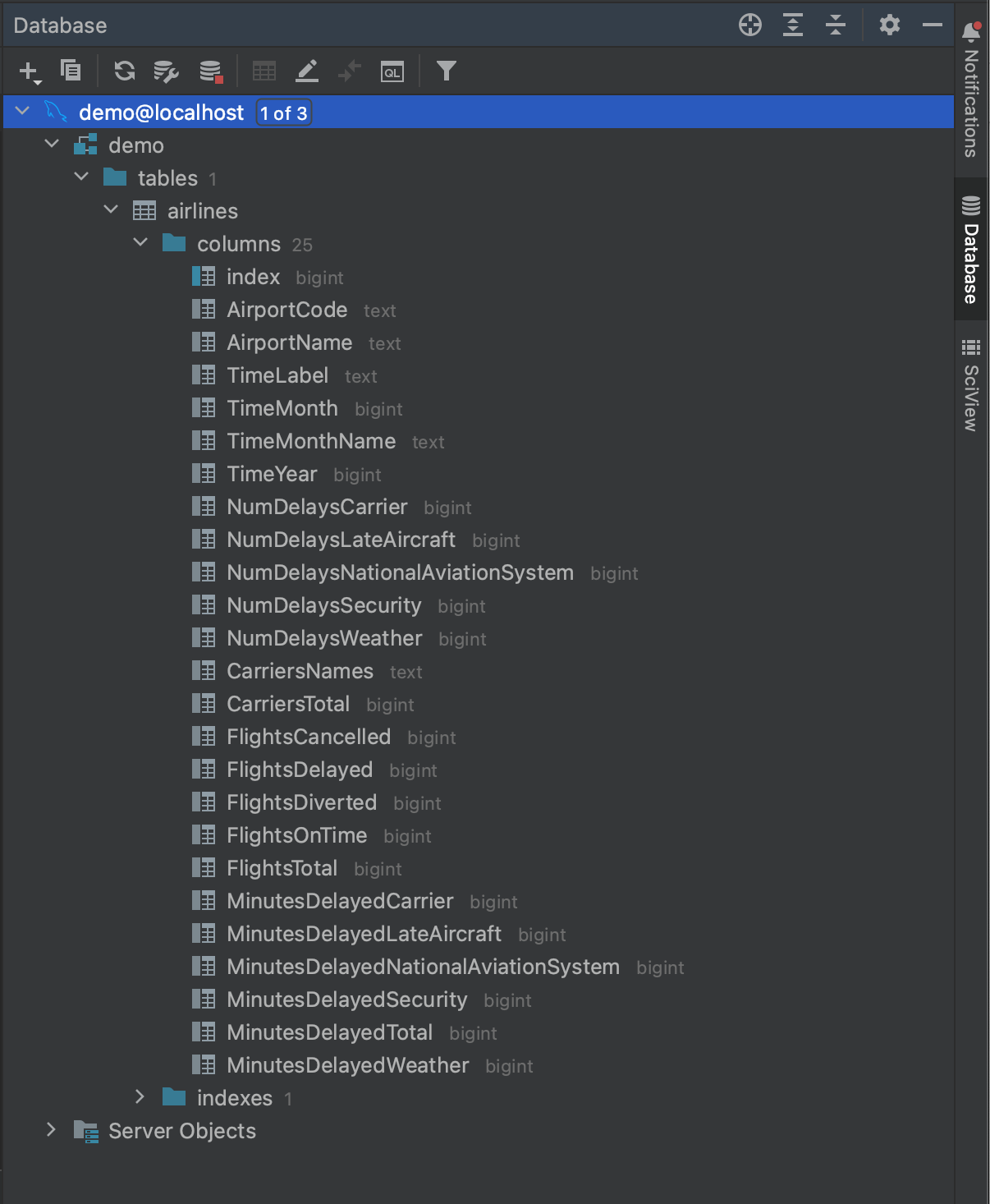

With all of that done, we’re ready to introspect our database. Click OK, and wait a few seconds. You can now see that our entire database has been introspected, down to the level of table fields and their types. This gives us a great overview of what is in the database before running a single query.

Reading in the data using MySQL Connector

Now that we know what is in our database, we are ready to put together a query. Let’s say we want to see the airports that had more than 500 delays in 2016. From looking at the fields in the introspected airlines table, we see that we can get that data with the following query:

SELECT AirportCode,
       SUM(FlightsDelayed) AS TotalDelayed
FROM airlines
WHERE TimeYear = 2016
GROUP BY AirportCode
HAVING SUM(FlightsDelayed) > 500;

The first way we can run this query using Python is using a package called MySQL Connector, which can be installed from either PyPI or Anaconda. See the linked documentation if you need guidance on setting up pip or conda environments or installing dependencies. Once installation is finished, we’ll open a new Jupyter notebook and import both MySQL Connector and pandas.

import mysql.connector
import pandas as pd

In order to read data from our database, we need to create a connection. This is done using the connect function, to which we pass the credentials needed to access the database: the host, the database name, the user, and the password. These are the same credentials we used to access the database using the Database tool window in the previous section.

mysql_db_connector = mysql.connector.connect(
    host="localhost",
    database="demo",
    user="pycharm",
    password="pycharm"
)
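Hard-coding credentials is fine for a local demo, but in a shared notebook you may prefer to read them from the environment. The following is a minimal sketch under that assumption: the helper name `mysql_config_from_env` and the `MYSQL_*` variable names are hypothetical conventions, not something MySQL Connector defines, and the defaults simply mirror the demo values used above.

```python
import os


def mysql_config_from_env(env=None):
    """Build keyword arguments for mysql.connector.connect().

    The MYSQL_* variable names are an illustrative convention only;
    MySQL Connector does not read them by itself.
    """
    env = os.environ if env is None else env
    return {
        "host": env.get("MYSQL_HOST", "localhost"),
        "port": int(env.get("MYSQL_PORT", "3306")),
        "database": env.get("MYSQL_DATABASE", "demo"),
        "user": env.get("MYSQL_USER", "pycharm"),
        "password": env.get("MYSQL_PASSWORD", "pycharm"),
    }


# Pass an explicit mapping here so the example does not depend on your shell.
config = mysql_config_from_env({"MYSQL_HOST": "db.example.com"})
print(config["host"], config["port"], config["database"])
```

The resulting dictionary can then be unpacked into the call, for example `mysql.connector.connect(**mysql_config_from_env())`.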

We now need to create a cursor. This will be used to execute our SQL queries against the database, and it uses the credentials stored in our connector to get access.

mysql_db_cursor = mysql_db_connector.cursor()

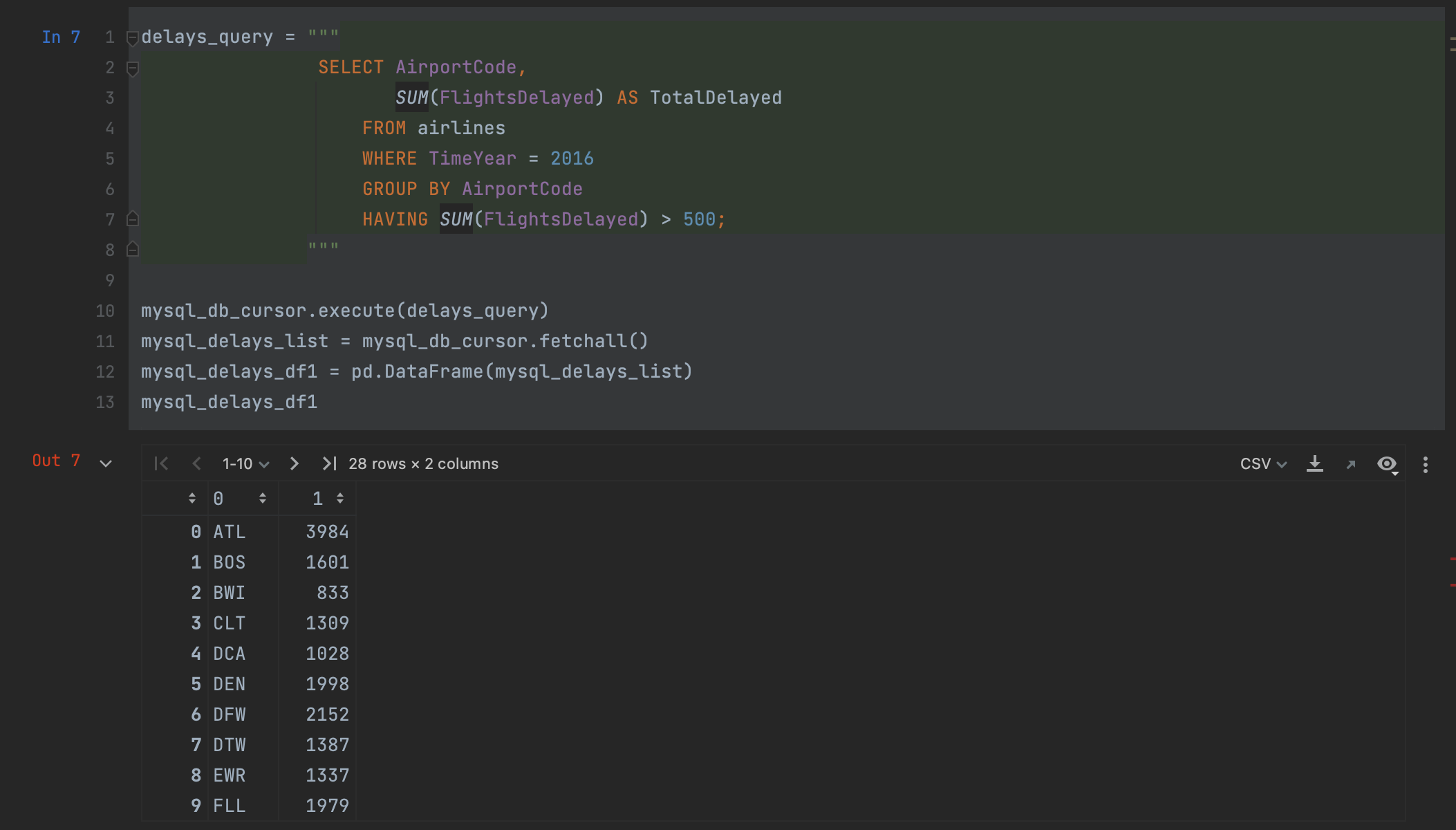

We’re now ready to execute our query. We do this using the execute method from our cursor and passing the query as the argument.

delays_query = """
    SELECT AirportCode,
           SUM(FlightsDelayed) AS TotalDelayed
    FROM airlines
    WHERE TimeYear = 2016
    GROUP BY AirportCode
    HAVING SUM(FlightsDelayed) > 500;
"""

mysql_db_cursor.execute(delays_query)
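If the year or the delay threshold needs to vary, it is usually safer to pass them as query parameters than to format them into the string. The sketch below is an illustration rather than part of the walkthrough: it uses Python’s built-in `sqlite3` module and a handful of invented rows as a stand-in for the MySQL airlines table, so that it runs without a server. SQLite uses `?` placeholders, whereas MySQL Connector uses `%s`; the `execute(query, params)` pattern is otherwise the same.

```python
import sqlite3

# In-memory SQLite database standing in for the MySQL "demo" database.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE airlines (AirportCode TEXT, TimeYear INTEGER, FlightsDelayed INTEGER)"
)
# Invented rows for illustration only; these are not values from the real dataset.
conn.executemany(
    "INSERT INTO airlines VALUES (?, ?, ?)",
    [
        ("AAA", 2016, 400),
        ("AAA", 2016, 300),
        ("BBB", 2016, 200),
        ("BBB", 2015, 900),
    ],
)

# Same query as above, with the year and threshold as placeholders.
# With MySQL Connector, write %s instead of ? for each placeholder.
delays_query_params = """
    SELECT AirportCode,
           SUM(FlightsDelayed) AS TotalDelayed
    FROM airlines
    WHERE TimeYear = ?
    GROUP BY AirportCode
    HAVING SUM(FlightsDelayed) > ?
"""

cursor = conn.cursor()
cursor.execute(delays_query_params, (2016, 500))
rows = cursor.fetchall()
print(rows)
conn.close()
```

Only the airport whose 2016 total exceeds the threshold is returned; the 2015 row is filtered out by the WHERE clause before the grouping happens.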

We then retrieve the result using the cursor’s fetchall method.

mysql_delays_list = mysql_db_cursor.fetchall()

However, we have a problem at this point: fetchall returns the data as a list of tuples, one per row. To get it into pandas, we can pass it into a DataFrame, but we’ll lose our column names and will need to manually specify them when we create the DataFrame.

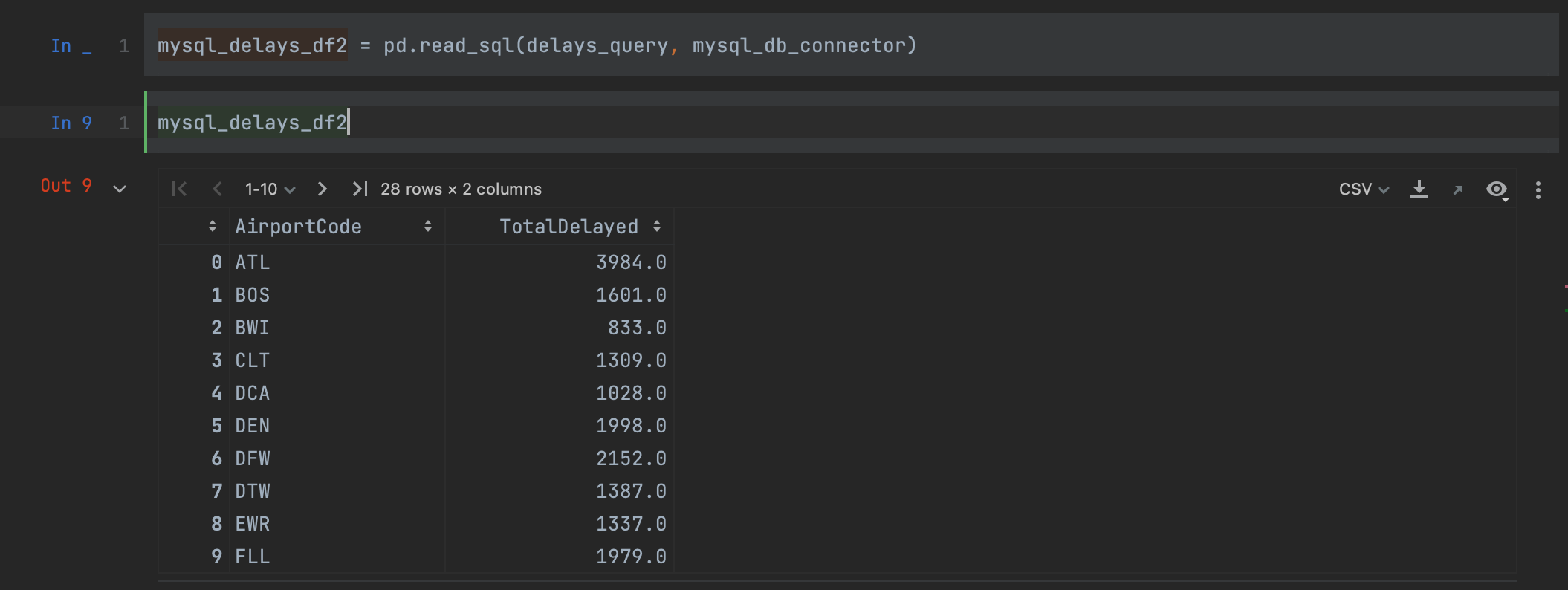
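One way to avoid typing the column names by hand is to read them from the cursor’s `description` attribute, which DB-API cursors (including MySQL Connector’s) populate after a query. The sketch below again uses `sqlite3` and invented rows as a stand-in so that it runs anywhere; the table and variable names are illustrative.

```python
import sqlite3

# Stand-in for the query result: an in-memory table with invented totals.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE delays (AirportCode TEXT, TotalDelayed INTEGER)")
conn.executemany("INSERT INTO delays VALUES (?, ?)", [("AAA", 700), ("BBB", 650)])

cursor = conn.cursor()
cursor.execute("SELECT AirportCode, TotalDelayed FROM delays ORDER BY AirportCode")
rows = cursor.fetchall()  # list of tuples, no column names attached

# Each entry of cursor.description is a sequence whose first item is the column name.
columns = [col[0] for col in cursor.description]

# Pair each name with its value to get one dictionary per row.
records = [dict(zip(columns, row)) for row in rows]
print(columns)
print(records)
conn.close()
```

With pandas, the same `columns` list can be passed along with the rows, as in `pd.DataFrame(rows, columns=columns)`.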

Luckily, pandas offers a better way. Rather than creating a cursor, we can read our query into a DataFrame in one step, using the read_sql function.

mysql_delays_df2 = pd.read_sql(delays_query, con=mysql_db_connector)

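We simply need to pass our query and connector as arguments in order to read the data from the MySQL database. Looking at our DataFrame, we can see that we have the exact same results as above, but this time our column names have been preserved. Note that recent versions of pandas emit a UserWarning when con is a raw DB-API connection other than sqlite3, because pandas only officially supports SQLAlchemy connectables there; the query typically still runs.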

A nice feature you might have noticed is that PyCharm applies syntax highlighting to the SQL query, even when it’s contained inside a Python string. We’ll cover another way that PyCharm allows you to work with SQL later in this blog post.

Reading in the data using SQLAlchemy

An alternative to using MySQL Connector is using a package called SQLAlchemy. This package offers a one-stop method for connecting to a range of different databases, including MySQL. One of the nice things about using SQLAlchemy is that the syntax for connecting and querying stays consistent across database types, saving you from remembering a bunch of different commands if you’re working with a lot of different databases.

To get started, we need to install SQLAlchemy either from PyPI or Anaconda. We then import the create_engine function, and of course, pandas.

import pandas as pd
from sqlalchemy import create_engine

We now need to create our engine. The engine allows us to tell pandas which SQL dialect we’re using (in our case, MySQL) and provide it with the credentials it needs to access our database. This is all passed as one string, in the form of [dialect]+[driver]://[user]:[password]@[host]/[database], where the driver part names the Python package used to talk to the database (here, MySQL Connector). Let’s see what this looks like for our MySQL database:

mysql_engine = create_engine("mysql+mysqlconnector://pycharm:pycharm@localhost/demo")

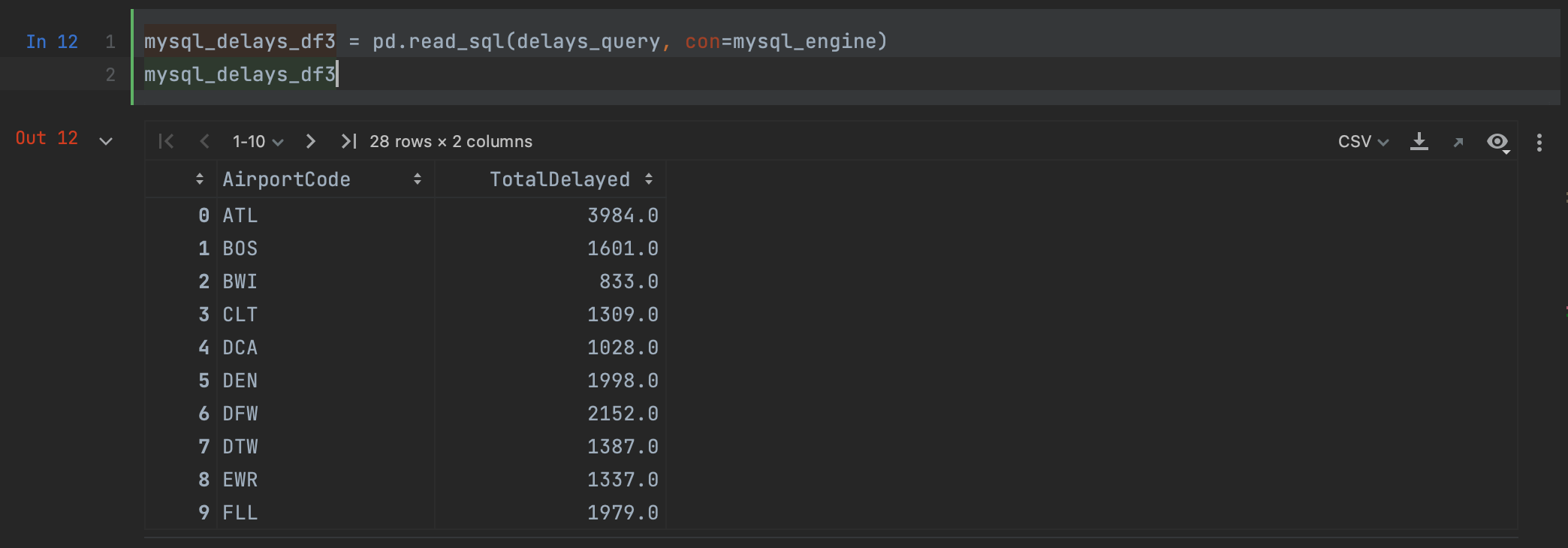
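Writing the URL by hand works for simple credentials, but a password containing characters such as “@” or “/” has to be percent-encoded before it goes into the string. The following is a minimal sketch of one way to assemble it with the standard library; `build_mysql_url` is a hypothetical helper and the second password is invented for illustration.

```python
from urllib.parse import quote_plus


def build_mysql_url(user, password, host, database, driver="mysqlconnector", port=None):
    """Assemble a URL of the form mysql+driver://user:password@host[:port]/database."""
    # Percent-encode the credentials so characters such as "@" or "/" do not break the URL.
    credentials = f"{quote_plus(user)}:{quote_plus(password)}"
    location = host if port is None else f"{host}:{port}"
    return f"mysql+{driver}://{credentials}@{location}/{database}"


# The demo credentials reproduce the string used in this tutorial.
print(build_mysql_url("pycharm", "pycharm", "localhost", "demo"))
# An invented password with special characters, plus an explicit port.
print(build_mysql_url("pycharm", "p@ss/word", "localhost", "demo", port=3306))
```

The returned string can be passed straight to create_engine. SQLAlchemy 1.4 and later also provide `sqlalchemy.engine.URL.create`, which handles the escaping itself and may be preferable if you already depend on that version.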

With the engine created, we simply need to use read_sql again, this time passing the engine to the con argument:

mysql_delays_df3 = pd.read_sql(delays_query, con=mysql_engine)

As you can see, we get the same result as when using read_sql with MySQL Connector.

Advanced options for working with databases

These connector methods are very nice for extracting the results of a query that we already know we want, but what if we want to get a preview of what our data will look like before running the full query, or an idea of how long the whole query will take? PyCharm is here again with some advanced features for working with databases.

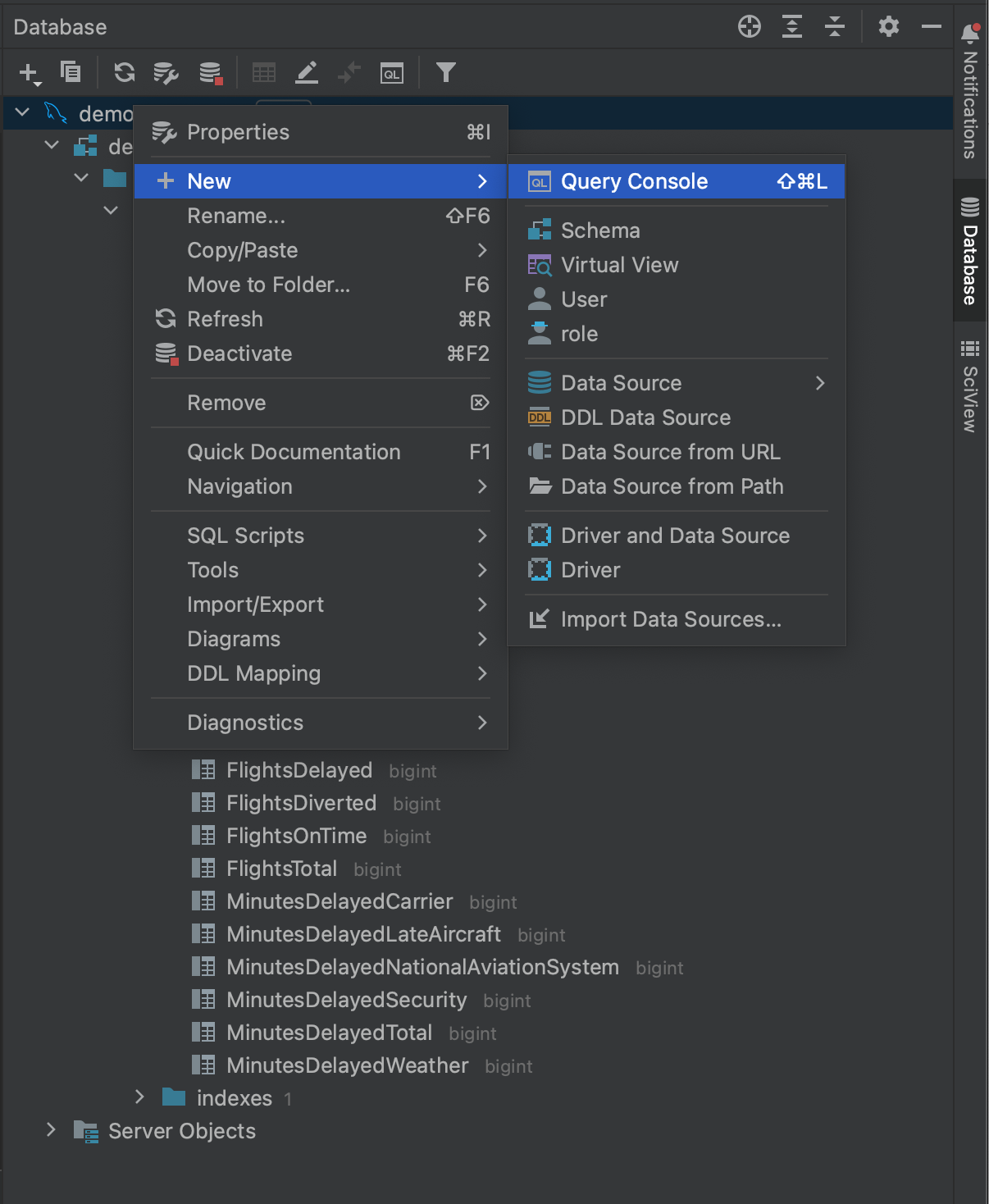

If we navigate back over to the Database tool window and right-click on our database, we can see that under New we have the option to create a Query Console.

This allows us to open a console which we can use to query against the database in native SQL. The console window includes SQL code completion and introspection, giving you an easier way to create your queries prior to passing them to the connector packages in Python.

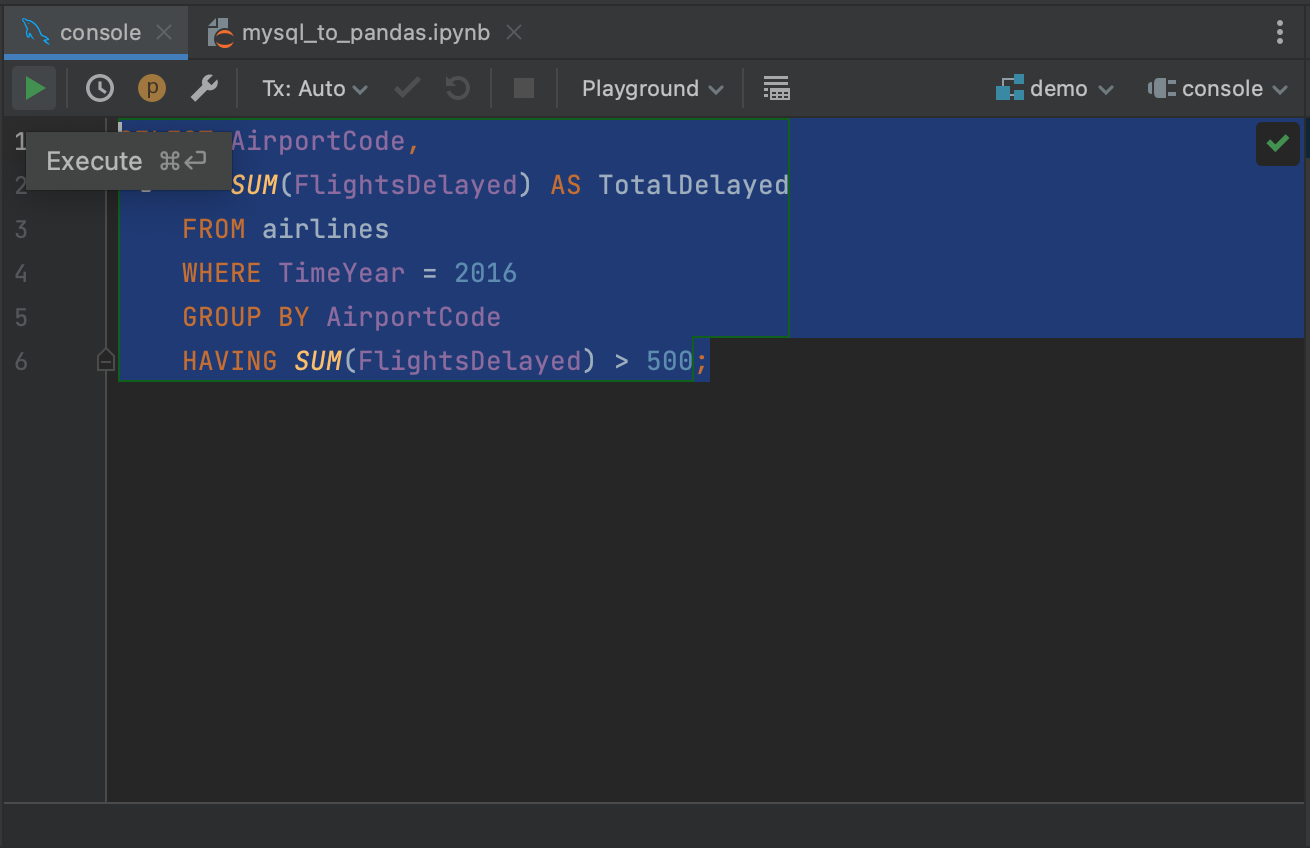

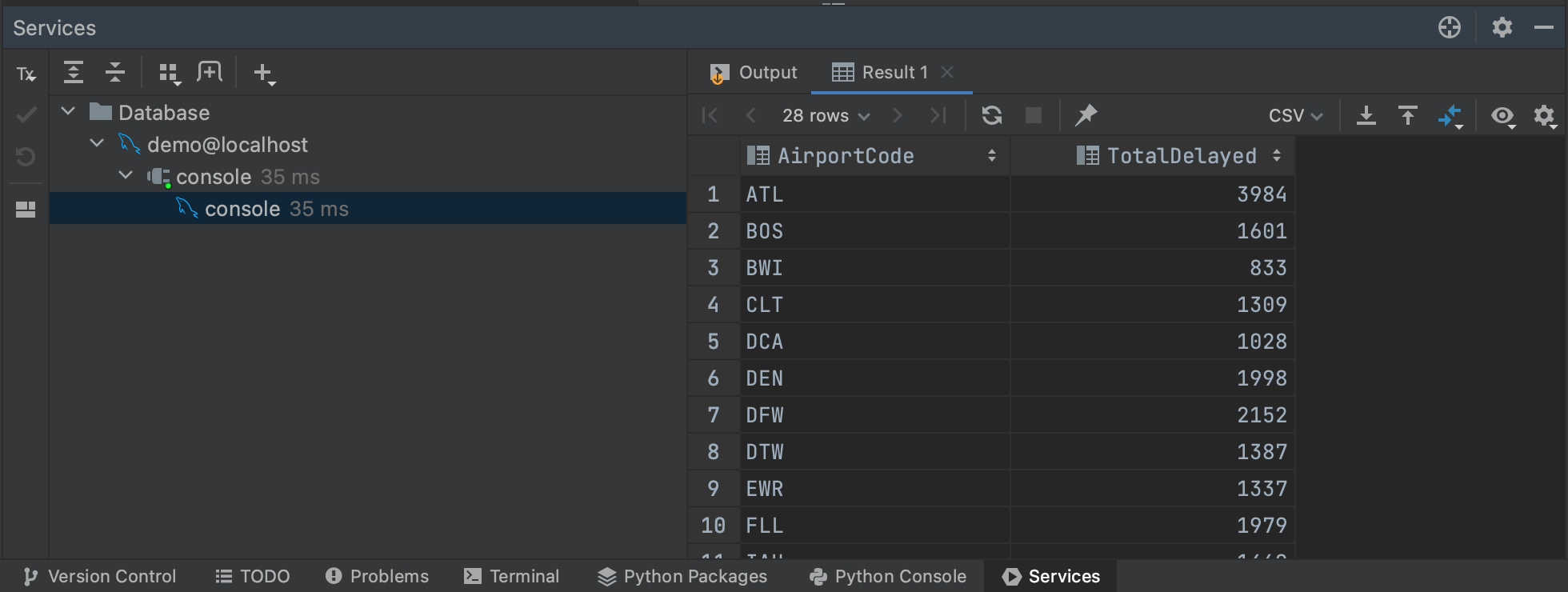

Highlight your query and click the Execute button in the top left corner.

This will retrieve the results of our query in the Services tab, where they can be inspected or exported. One nice thing about running queries against the console is that only the first 500 rows are initially retrieved from the database, meaning you can get a sense of the results of larger queries without committing to pulling all of the data. You can adjust the number of rows retrieved by going to Settings/Preferences | Tools | Database | Data Editor and Viewer and changing the value under “Limit page size to”.

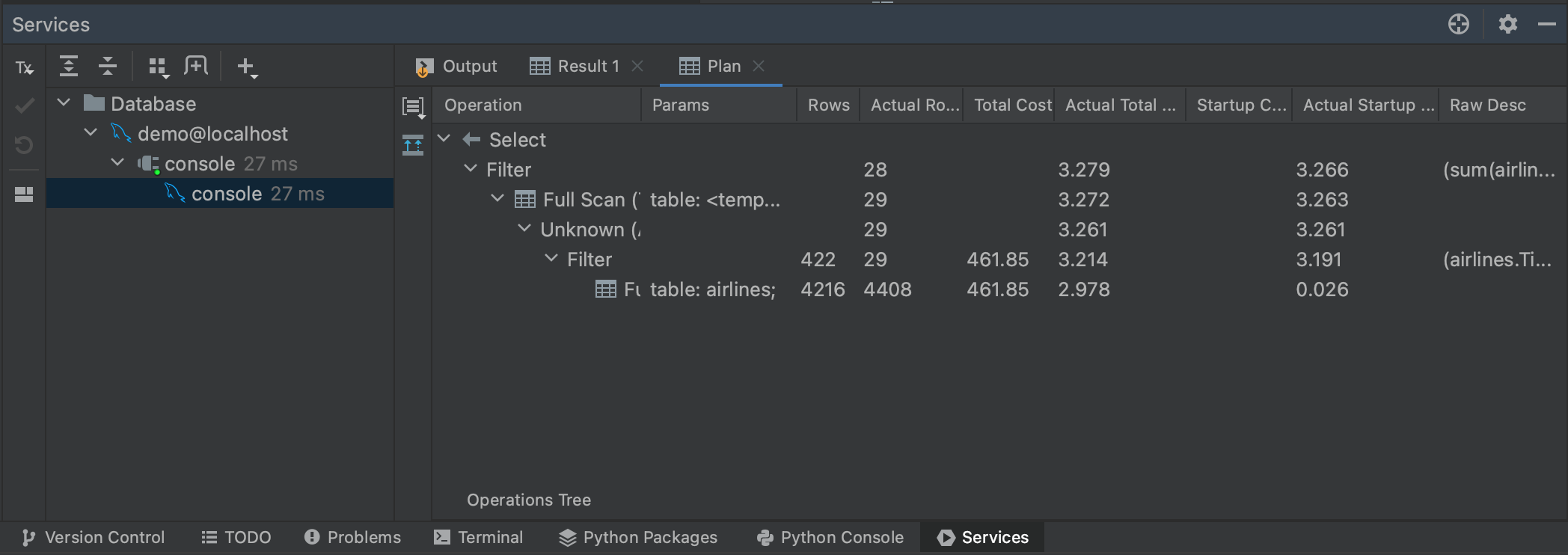
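A similar preview is possible from Python. As a rough sketch, DB-API cursors offer `fetchmany`, which pulls only a limited number of rows instead of the whole result. The example uses `sqlite3` with generated rows as a stand-in for a large MySQL table, and `PREVIEW_ROWS` is a hypothetical name chosen to mirror the console’s default.

```python
import sqlite3

PREVIEW_ROWS = 500  # mirrors the query console's default page size

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE airlines (AirportCode TEXT, FlightsDelayed INTEGER)")
# 2,000 generated rows, purely to have something larger than the preview size.
conn.executemany(
    "INSERT INTO airlines VALUES (?, ?)",
    [(f"A{i:04d}", i) for i in range(2000)],
)

cursor = conn.cursor()
cursor.execute(
    "SELECT AirportCode, FlightsDelayed FROM airlines ORDER BY FlightsDelayed"
)
preview = cursor.fetchmany(PREVIEW_ROWS)  # returns at most PREVIEW_ROWS rows
print(len(preview))
conn.close()
```

Another option is to add a LIMIT clause to the query itself, which keeps the restriction on the database side.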

Speaking of large queries, we can also get a sense of how long our query will take by generating an execution plan. If we highlight our query again and then right-click, we can select Explain Plan | Explain Analyse from the menu. This will generate an execution plan for our query, showing each step that the query planner is taking to retrieve our results. Execution plans are their own topic, and we don’t really need to understand everything our plan is telling us. Most relevant for our purposes is the Actual Total Time column, where we can see how long each step takes to return all of its rows. This gives us a good estimate of the overall query time, as well as whether any parts of our query are likely to be particularly time consuming.

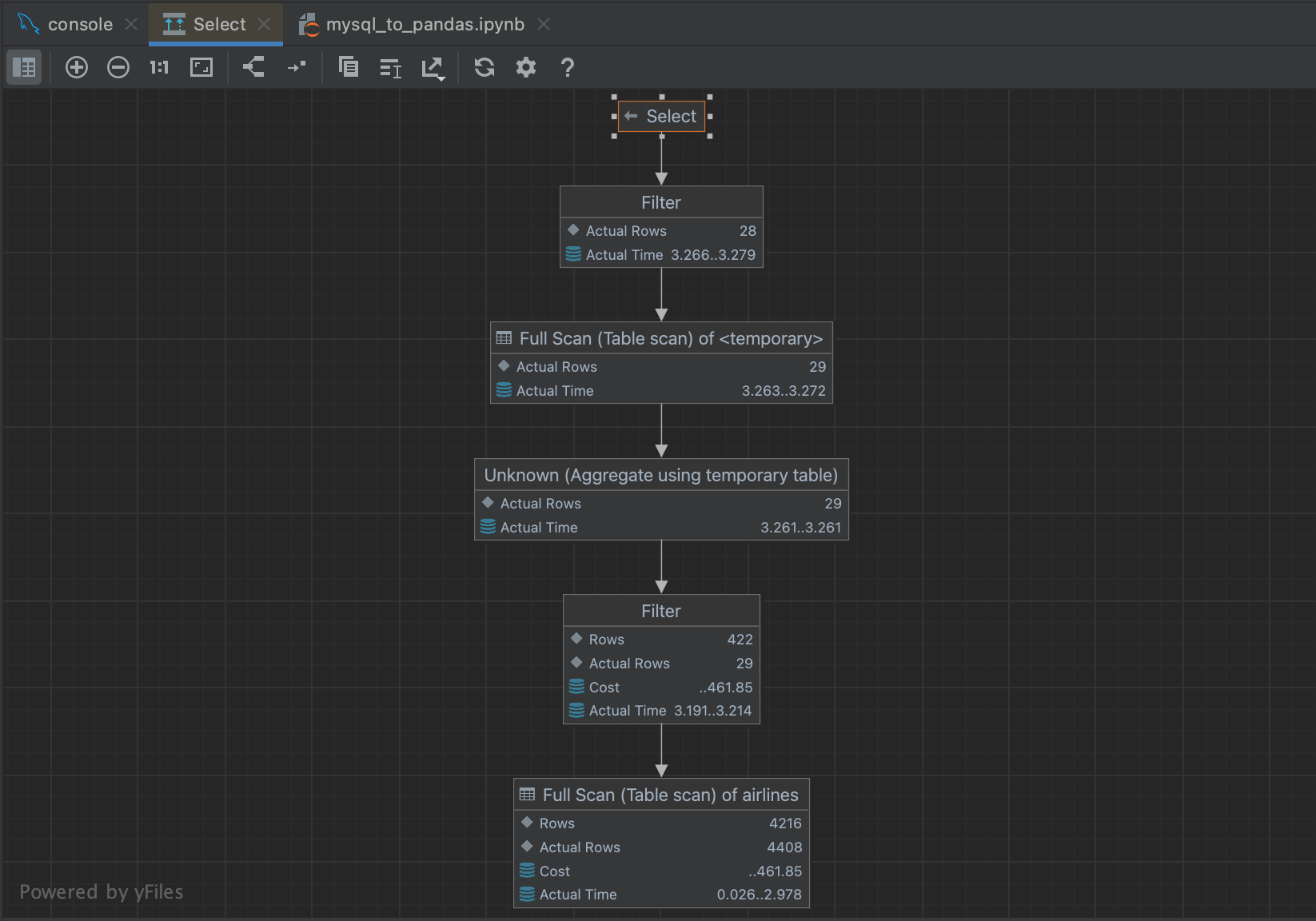

You can also visualize the execution by clicking on the Show Visualization button to the left of the Plan panel.

This will bring up a flowchart that makes it a bit easier to navigate through the steps that the query planner is taking.
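To complement the execution plan, the wall-clock time of a query can also be measured from the Python side. The helper below is a small sketch, not a PyCharm or MySQL Connector feature: `timed_fetchall` is a hypothetical name, and `sqlite3` with invented rows stands in for the MySQL database so that the example runs on its own.

```python
import sqlite3
import time


def timed_fetchall(cursor, query, params=()):
    """Run a query, fetch every row, and return (rows, elapsed_seconds)."""
    start = time.perf_counter()
    cursor.execute(query, params)
    rows = cursor.fetchall()
    return rows, time.perf_counter() - start


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE airlines (AirportCode TEXT, FlightsDelayed INTEGER)")
# Invented rows for illustration only.
conn.executemany("INSERT INTO airlines VALUES (?, ?)", [("AAA", 700), ("BBB", 200)])

rows, elapsed = timed_fetchall(
    conn.cursor(),
    "SELECT AirportCode FROM airlines WHERE FlightsDelayed > ?",
    (500,),
)
print(rows, f"{elapsed:.6f}s")
conn.close()
```

This measures the whole round trip, including transferring the rows, so it will not match the per-step timings in the plan exactly.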

Getting data from MySQL databases into pandas DataFrames is straightforward, and PyCharm has a number of powerful tools to make working with MySQL databases easier. In the next blog post, we’ll look at how to use PyCharm to read data into pandas from another popular database type, PostgreSQL databases.
